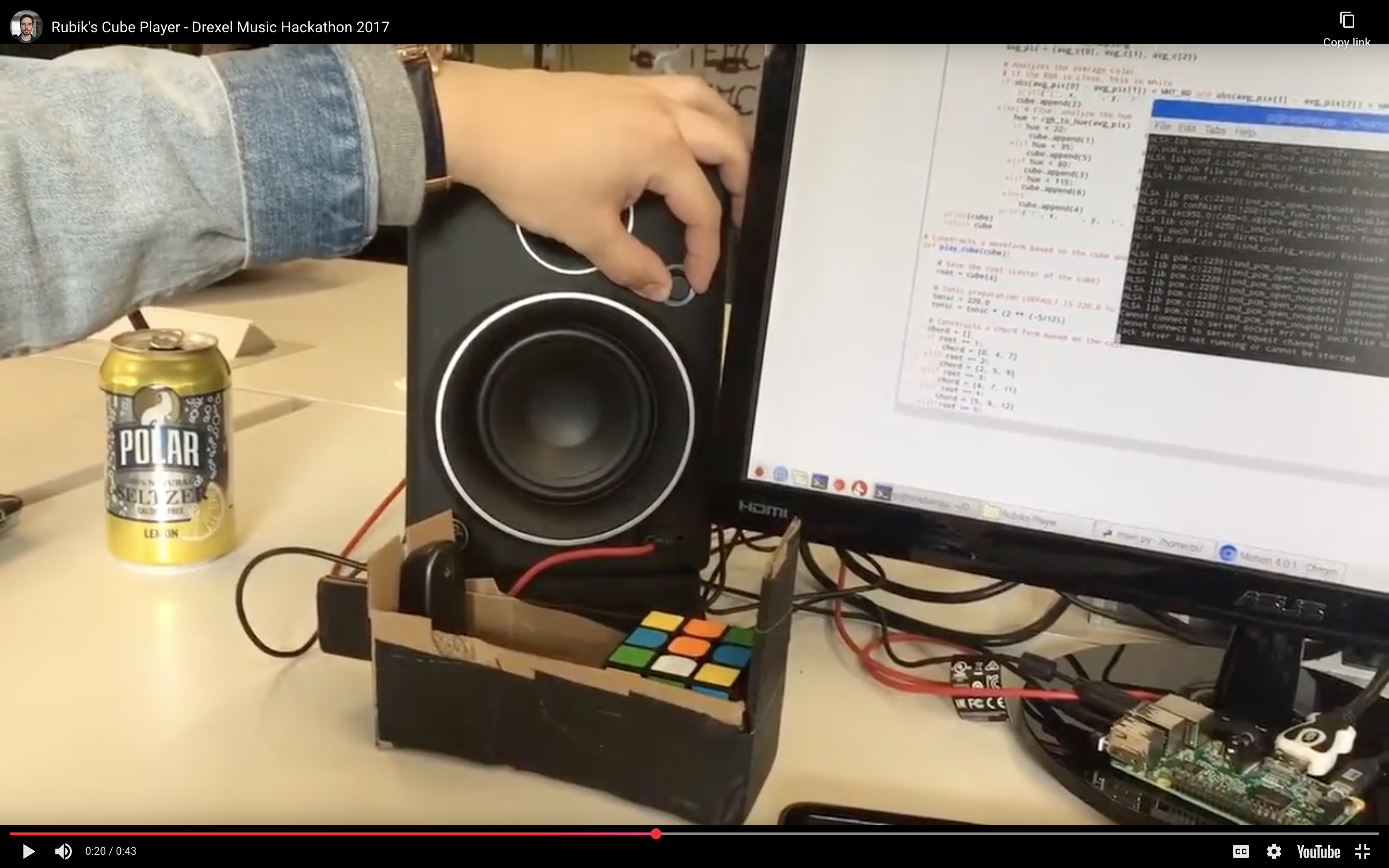

This is a project I worked on with Emmanuel Espino and Jason Zogheb for the Drexel 2017 Music Hackathon at the ExCITe Center. The Rubik’s Cube Player uses a webcam to capture images of a Rubik’s cube face and converts the color patterns into musical pitches.

The interesting part is that the more solved a cube face is, the more harmonious the generated melody sounds. We built this in less than 24 hours using Python, computer vision libraries, and audio synthesis techniques.
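The post doesn't spell out the exact mapping, so here is a minimal sketch of one way the "more solved, more harmonious" property can follow from a simple design. It assumes each of the six sticker colors maps to a fixed pitch. A solved face then has nine stickers of one color and plays a single repeated note (unison), while a scrambled face mixes up to six pitches and produces harsher intervals. The color-to-semitone table, the dissonance ranking, and the `solvedness`/`dissonance` helpers are all hypothetical illustrations, not the project's actual code or values.

```python
from itertools import combinations

# Hypothetical mapping: each cube color -> semitone offset above a root note.
# Illustrative values only; not taken from the project.
COLOR_TO_SEMITONE = {
    "white": 0,   # root
    "yellow": 7,  # perfect fifth
    "red": 4,     # major third
    "orange": 5,  # perfect fourth
    "blue": 2,    # major second
    "green": 1,   # minor second
}

# Rough, simplified dissonance rank per interval in semitones (mod 12).
# 0 = unison (most consonant); higher numbers = harsher intervals.
INTERVAL_DISSONANCE = {0: 0, 7: 1, 5: 2, 4: 3, 3: 3, 9: 3,
                       8: 4, 2: 5, 10: 5, 1: 6, 11: 6, 6: 6}


def solvedness(face):
    """Fraction of the nine stickers that match the center sticker (index 4)."""
    center = face[4]
    return sum(1 for color in face if color == center) / len(face)


def dissonance(face):
    """Mean dissonance over all pairs of sticker pitches (0.0 means all unison)."""
    pitches = [COLOR_TO_SEMITONE[color] for color in face]
    pairs = list(combinations(pitches, 2))
    return sum(INTERVAL_DISSONANCE[abs(a - b) % 12] for a, b in pairs) / len(pairs)


# Faces are listed row by row, so index 4 is the center sticker.
solved = ["white"] * 9
partial = ["white"] * 8 + ["green"]
scrambled = ["white", "green", "red", "blue", "white",
             "orange", "yellow", "green", "red"]

print(solvedness(solved), dissonance(solved))  # 1.0 0.0
print(solvedness(partial), dissonance(partial))
print(solvedness(scrambled), dissonance(scrambled))
```

With this scheme, the nearly solved face scores lower dissonance than the scrambled one, because most of its note pairs are unisons. Note that the trend is only a tendency: which colors remain out of place also matters, since some color pairs map to consonant intervals and others to dissonant ones.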

Technical Details

- Computer Vision: Uses webcam capture and image processing to identify cube colors

- Audio Generation: Converts color patterns to musical pitches and waveforms

- Real-time Processing: Live analysis and audio playback

- Harmony Algorithm: More solved faces produce more consonant musical intervals
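The color-identification and audio-generation steps above can be sketched with the Python standard library alone. This sketch leaves out the webcam capture and assumes the vision step has already reduced each sticker to one averaged RGB sample. Each sample is classified by nearest reference color (squared Euclidean distance in RGB). The color is then mapped to a frequency, rendered as a sine tone, and the nine tones are written to an in-memory 16-bit WAV. `REFERENCE_RGB`, `COLOR_TO_HZ`, `classify`, `tone`, and `face_to_wav` are hypothetical names and values, not the repository's actual implementation.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 22050  # samples per second

# Hypothetical reference colors; real values depend on the cube, the
# lighting, and the webcam, and would need calibration.
REFERENCE_RGB = {
    "white": (255, 255, 255),
    "yellow": (255, 213, 0),
    "red": (196, 30, 58),
    "orange": (255, 88, 0),
    "blue": (0, 81, 186),
    "green": (0, 158, 96),
}

# Hypothetical pitch per color, in Hz (notes around middle C).
COLOR_TO_HZ = {
    "white": 261.63,   # C4
    "yellow": 392.00,  # G4
    "red": 329.63,     # E4
    "orange": 349.23,  # F4
    "blue": 293.66,    # D4
    "green": 277.18,   # C#4
}


def classify(rgb):
    """Return the reference color closest to an RGB sample (squared Euclidean distance)."""
    return min(REFERENCE_RGB,
               key=lambda name: sum((a - b) ** 2 for a, b in zip(rgb, REFERENCE_RGB[name])))


def tone(freq, seconds=0.25, amplitude=0.5):
    """Generate a sine wave as floats in [-amplitude, amplitude]."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]


def face_to_wav(samples_rgb):
    """Classify nine sticker samples, play their pitches in order; return (colors, WAV bytes)."""
    colors = [classify(rgb) for rgb in samples_rgb]
    audio = []
    for color in colors:
        audio.extend(tone(COLOR_TO_HZ[color]))
    # Convert floats to little-endian signed 16-bit PCM.
    frames = struct.pack(f"<{len(audio)}h", *(int(s * 32767) for s in audio))
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)
    return colors, buf.getvalue()


# Example: nine averaged sticker samples (made-up values), row by row.
samples = [(250, 250, 250)] * 4 + [(10, 80, 180)] + [(250, 250, 250)] * 4
colors, wav_bytes = face_to_wav(samples)
print(colors)
```

The returned bytes can be written to a `.wav` file or handed to an audio library for playback. Nearest-neighbor matching in RGB is the simplest choice, but it is sensitive to lighting; converting samples to HSV and matching mainly on hue is a common way to make color detection more robust.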

Links

- GitHub Repository: Rubik’s Player

- Contributors: Emmanuel Espino, Jason Zogheb, and me