GutenOCR: A Grounded Vision-Language Front-End for Documents

GutenOCR is a family of vision-language models designed to serve as a ‘grounded OCR front-end’, providing high-quality text transcription and explicit geometric grounding.

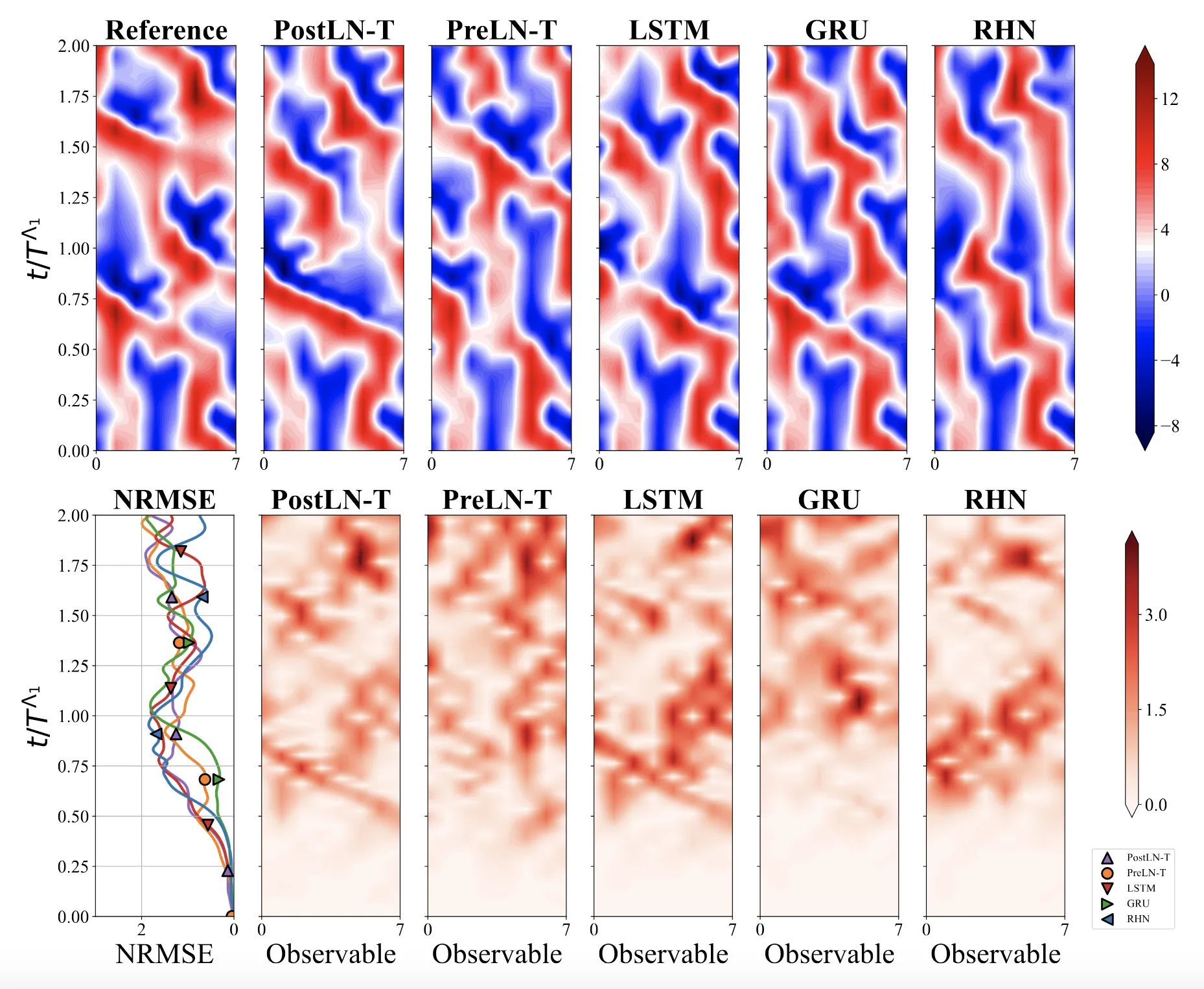

We systematically ablate core mechanisms of Transformers and RNNs, finding that attention-augmented Recurrent Highway Networks outperform standard Transformers on forecasting high-dimensional chaotic systems.

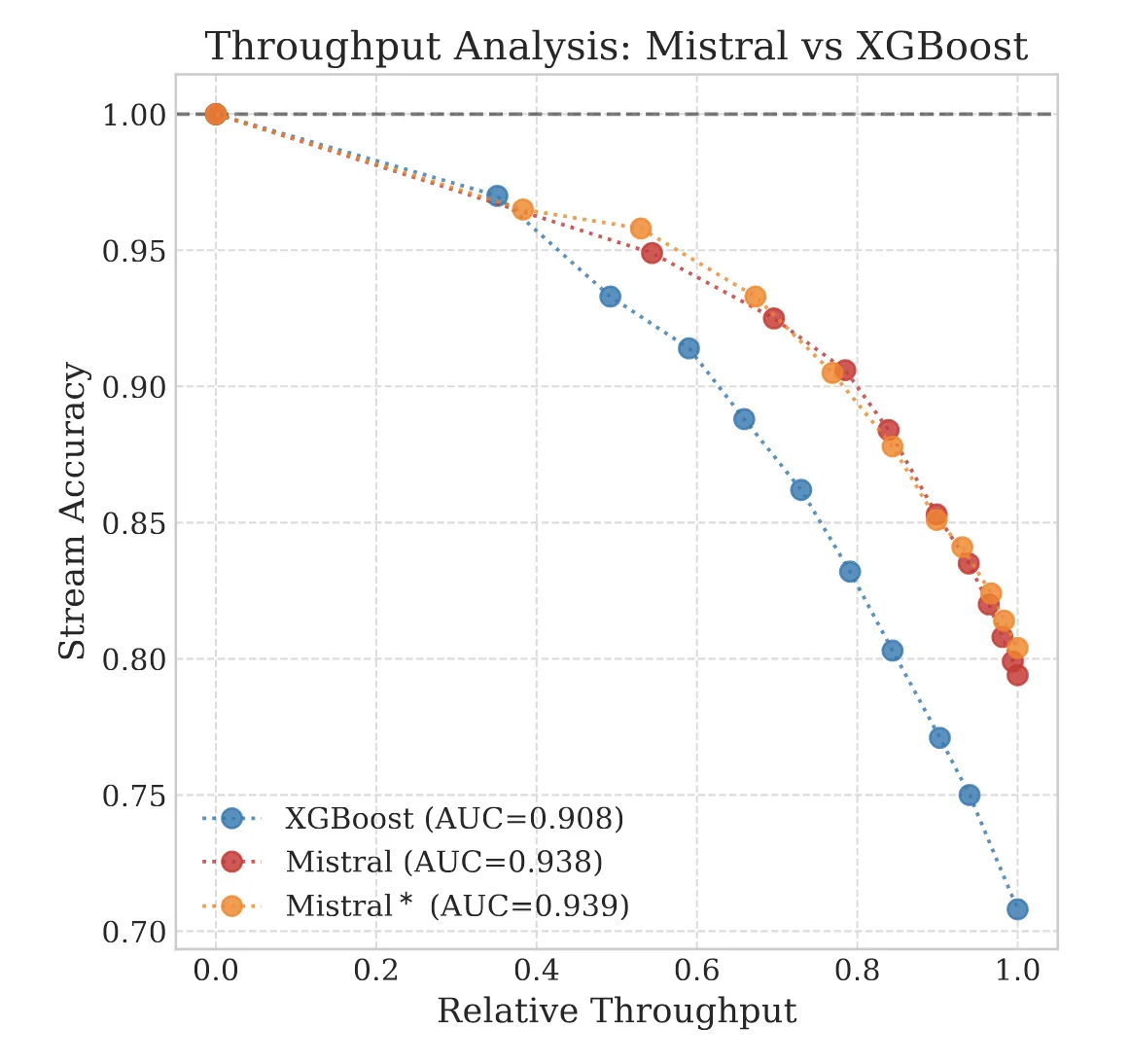

We explore the ‘Silent Failure’ mode of LLMs in production: why 99% accuracy falls short of reliability, how confidence decays over long documents, and why standard calibration techniques struggle to fix it.

We trace the history of Page Stream Segmentation (PSS) through three eras (Heuristic, Encoder, and Decoder) and explain how privacy-preserving, localized LLMs enable true semantic processing.

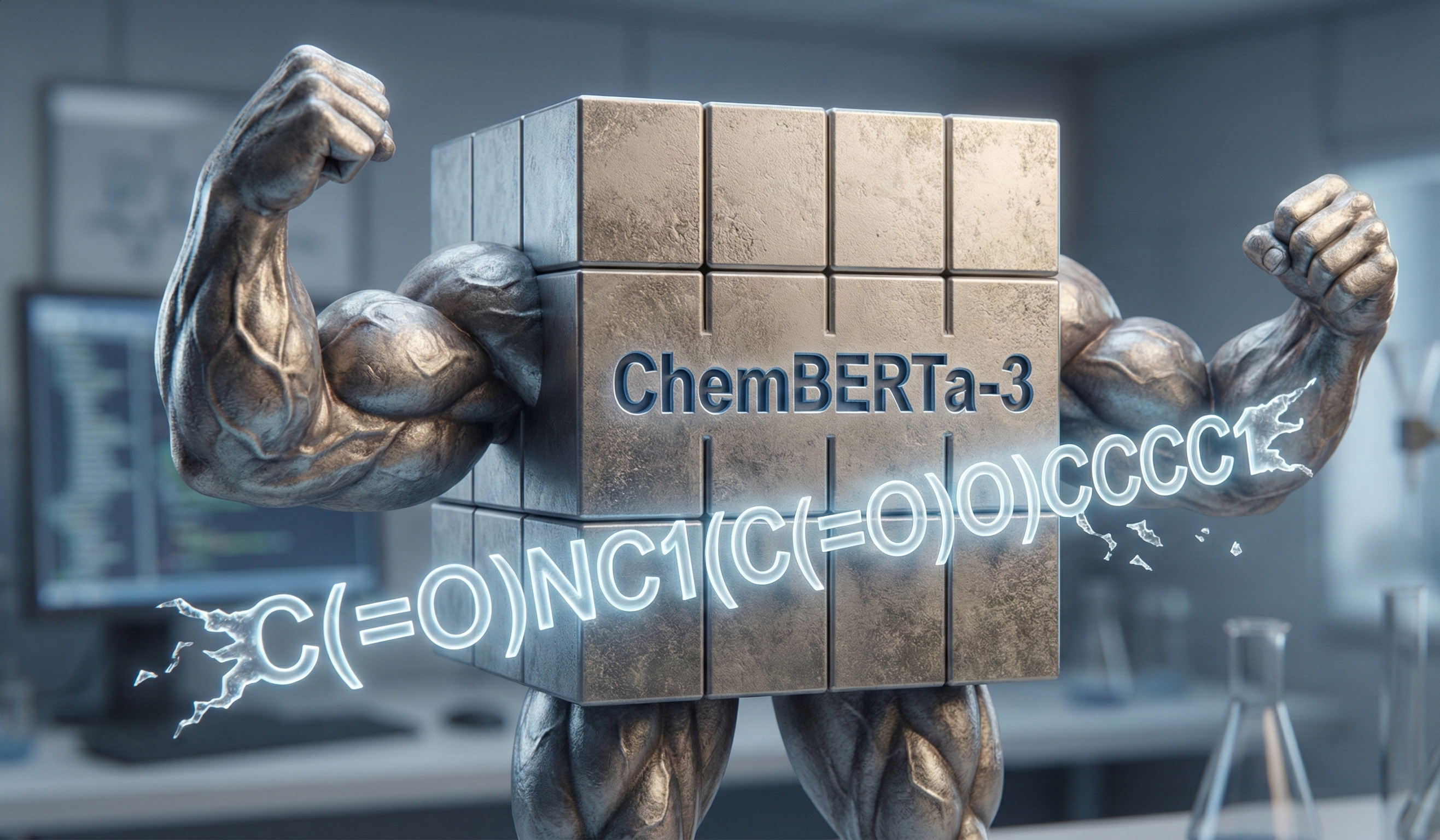

ChemBERTa-3 provides a unified, scalable infrastructure for pretraining and benchmarking chemical foundation models. It addresses reproducibility gaps in previous studies like MoLFormer through standardized scaffold splitting and open-source tooling.

ChemDFM-R is a 14B-parameter chemical reasoning model that integrates a 101B-token dataset of atomized chemical knowledge. Using a novel mix-sourced distillation strategy and domain-specific reinforcement learning, it achieves state-of-the-art performance on chemical benchmarks.

This work investigates the scaling hypothesis for molecular transformers, training RoBERTa models on 77M SMILES from PubChem. It compares Masked Language Modeling (MLM) against Multi-Task Regression (MTR) pretraining, finding that MTR yields better downstream performance but is computationally heavier.

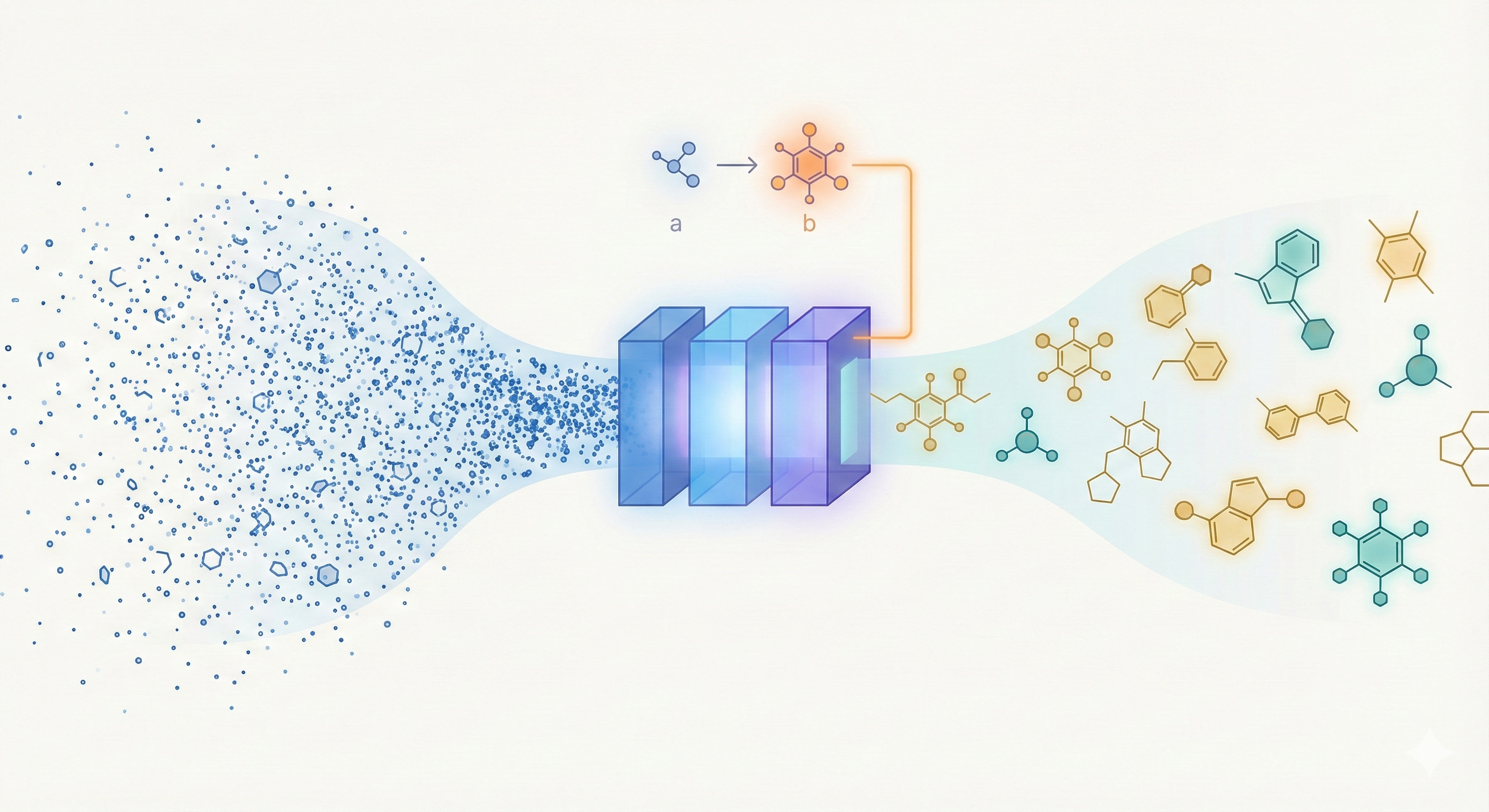

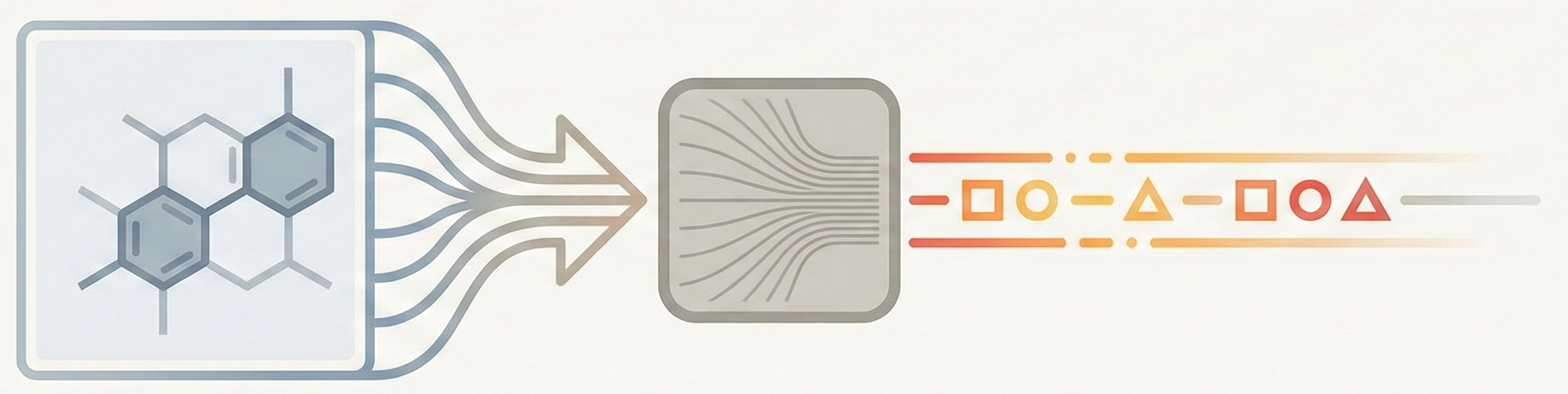

This methodological paper proposes a linear-attention transformer decoder trained on 1.1 billion molecules. It introduces pair-tuning for efficient property optimization and establishes empirical scaling laws relating inference compute to generation novelty.

This paper introduces ChemBERTa, a RoBERTa-based model pretrained on 77M SMILES strings. It systematically evaluates the impact of pretraining dataset size, tokenization strategies, and input representations (SMILES vs. SELFIES) on downstream MoleculeNet tasks, finding that performance scales positively with data size.

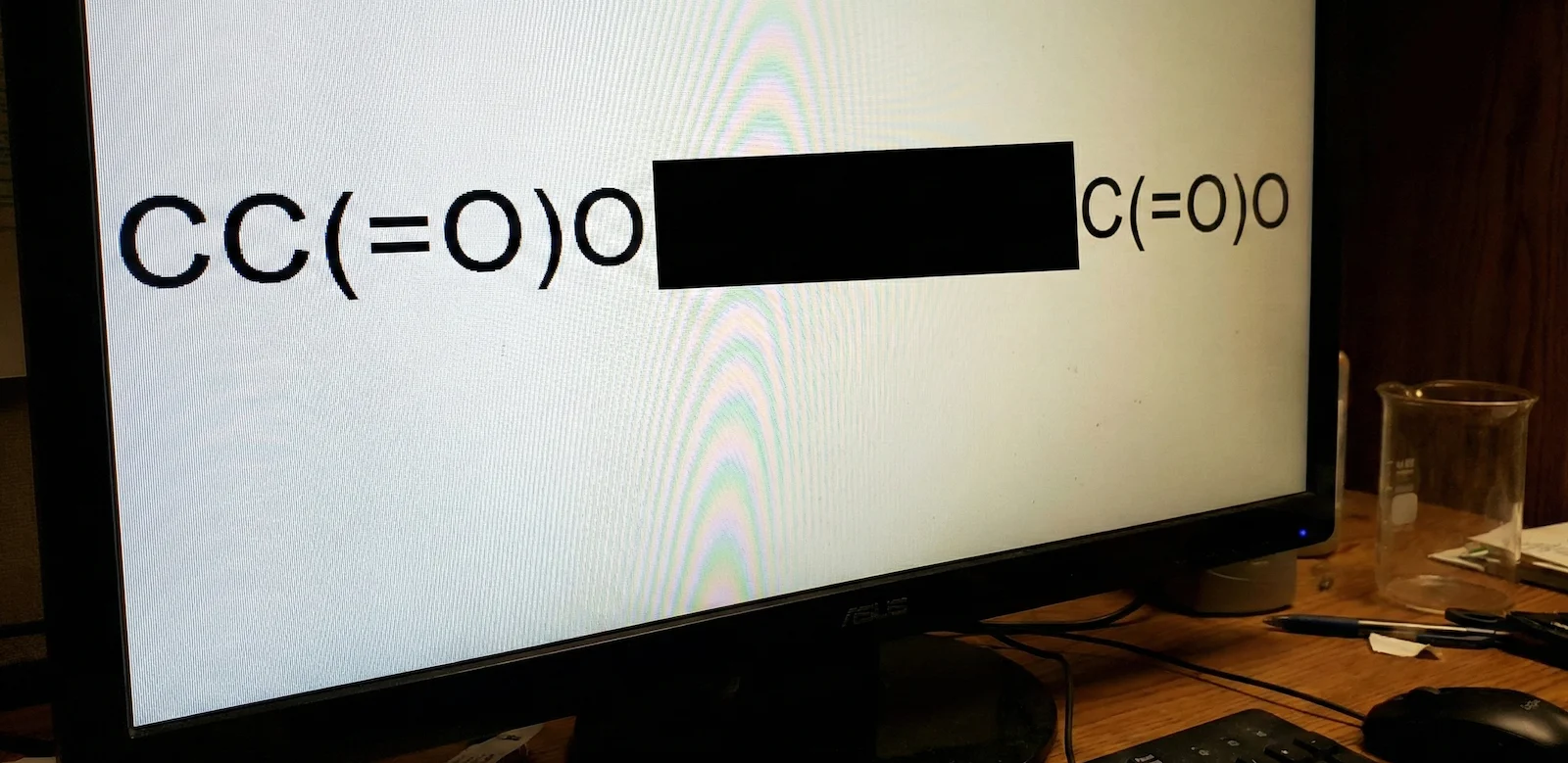

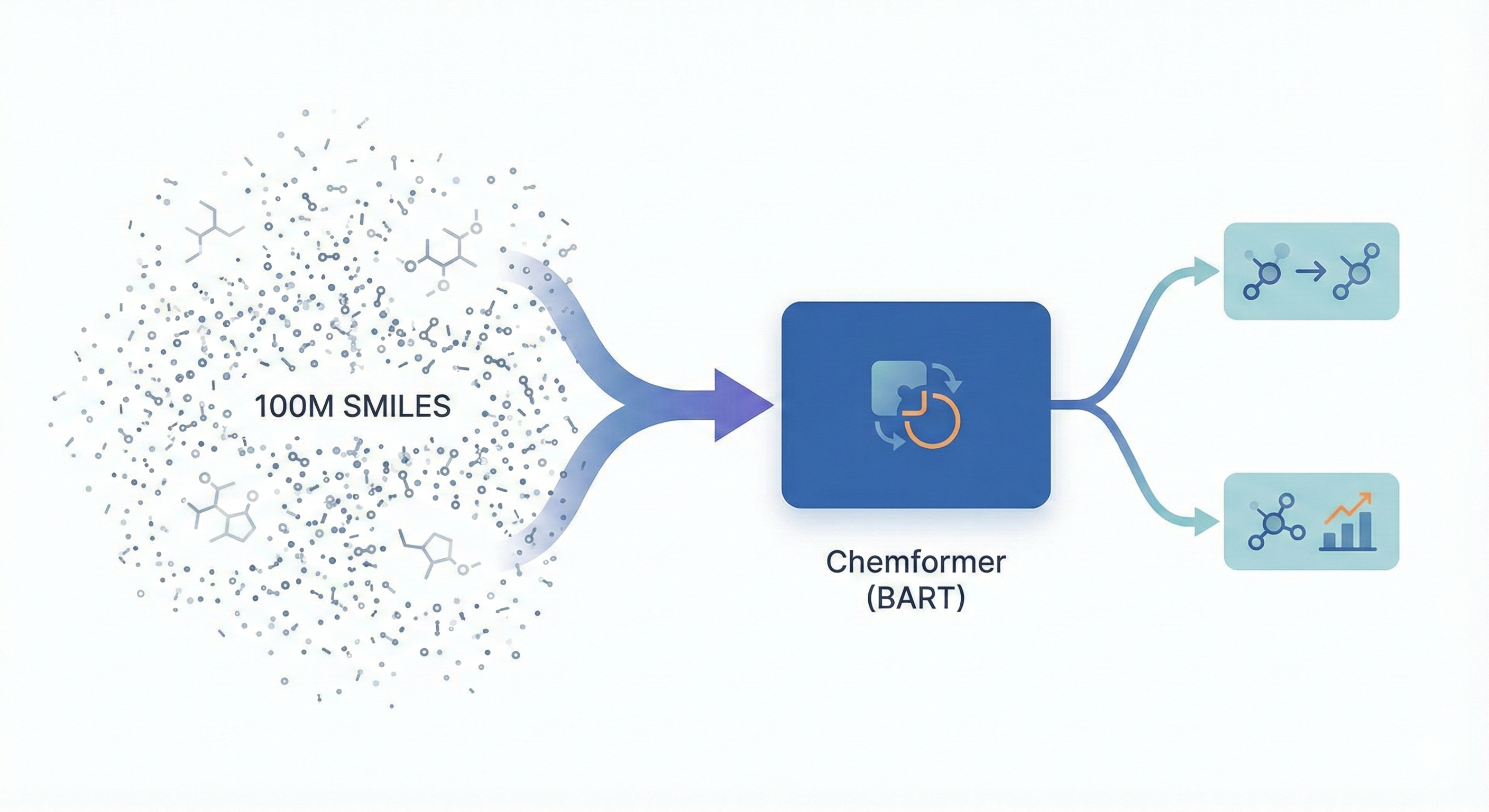

This paper introduces Chemformer, a BART-based sequence-to-sequence model pretrained on 100M molecules using a ‘combined’ masking and augmentation task. It achieves strong top-1 accuracy on reaction prediction benchmarks while significantly reducing training time through transfer learning.

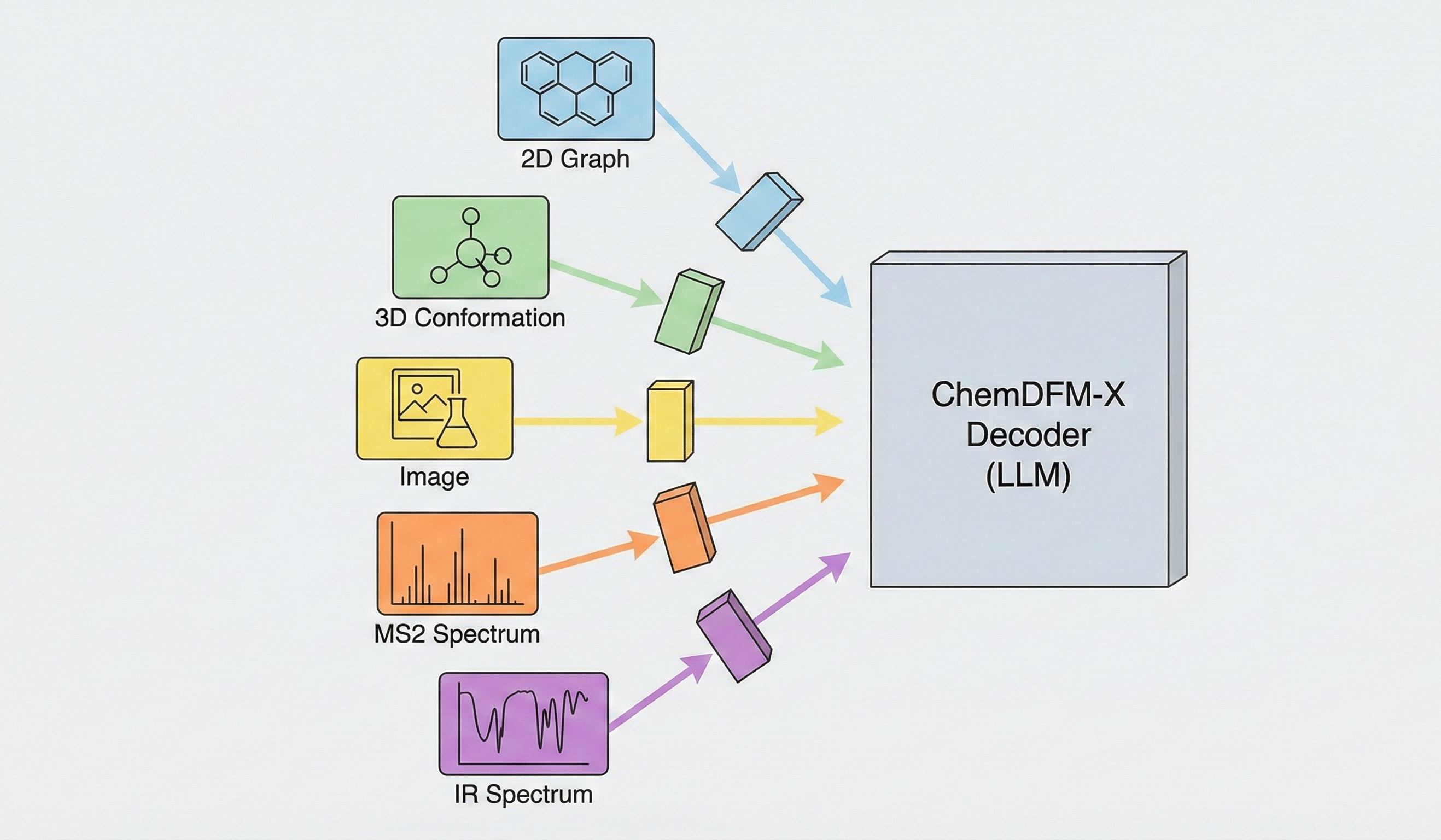

ChemDFM-X is a multimodal chemical foundation model that integrates five non-text modalities (2D graphs, 3D conformations, images, MS2 spectra, IR spectra) into a single LLM decoder. It overcomes data scarcity by generating a 7.6M instruction-tuning dataset through approximate calculations and model predictions, establishing strong baseline performance across multiple modalities.

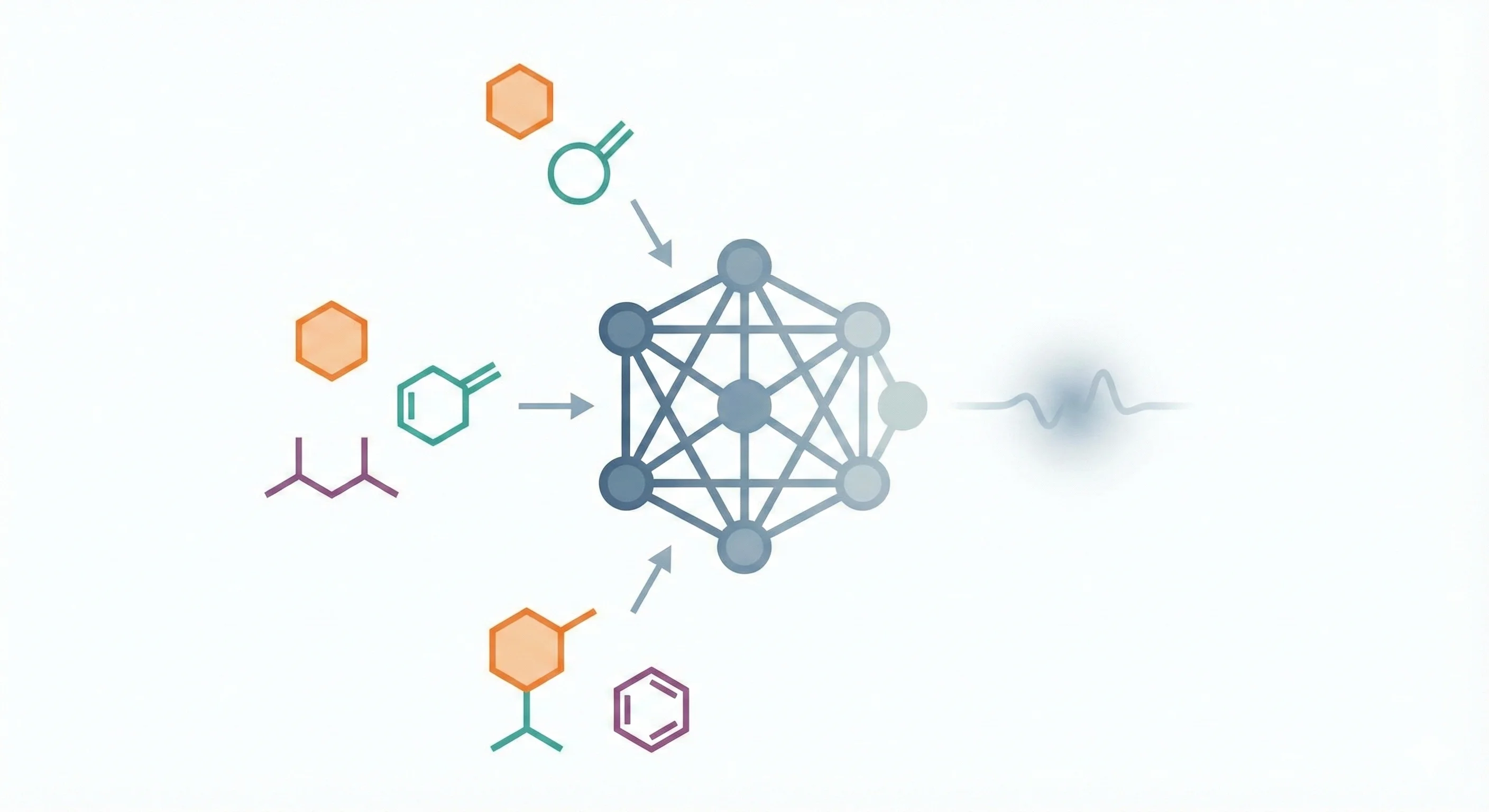

A deep dive into 24 image-to-sequence optical chemical structure recognition (OCSR) methods (2019-2025), comparing encoder-decoder architectures, molecular string representations, training scale, and hardware requirements.