Importance Weighted Autoencoders: Beyond the Standard VAE

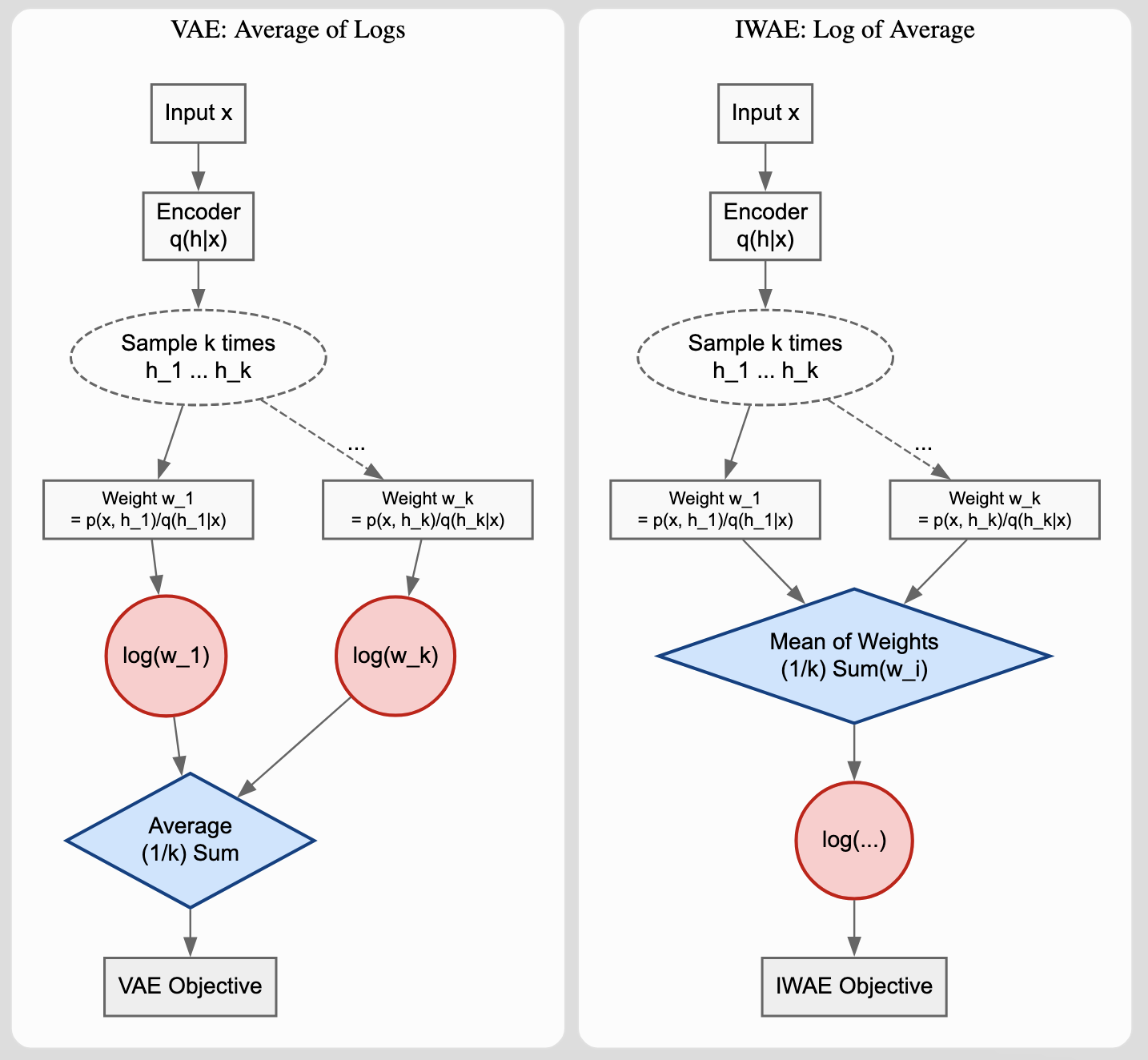

The key difference between multi-sample VAEs and IWAEs: how log-of-averages creates a tighter bound on log-likelihood.
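To make the log-of-averages idea concrete, here is a minimal sketch in NumPy. It assumes a toy model that is not taken from the paper: a prior p(z) = N(0, 1), a likelihood p(x|z) = N(z, 1), and a deliberately mismatched proposal q(z|x) = N(0, 1). The function names (`log_weights`, `multi_sample_elbo`, `iwae_bound`) are hypothetical and chosen only for illustration. Both estimators use the same k importance weights w_i = p(x, z_i) / q(z_i|x); the only difference is whether the logarithm is taken before or after averaging.

```python
import numpy as np

def log_normal(x, mean, std):
    """Log-density of a univariate Gaussian N(mean, std^2) evaluated at x."""
    return -0.5 * np.log(2 * np.pi) - np.log(std) - 0.5 * ((x - mean) / std) ** 2

def log_weights(x, k, rng, q_mean=0.0, q_std=1.0):
    """Draw k samples z_i ~ q(z|x) and return log w_i = log p(x, z_i) - log q(z_i|x)."""
    z = rng.normal(q_mean, q_std, size=k)
    log_joint = log_normal(z, 0.0, 1.0) + log_normal(x, z, 1.0)  # toy prior and likelihood
    log_q = log_normal(z, q_mean, q_std)
    return log_joint - log_q

def multi_sample_elbo(log_w):
    """Average of logs: (1/k) * sum_i log w_i."""
    return np.mean(log_w)

def iwae_bound(log_w):
    """Log of average: log((1/k) * sum_i w_i), computed with a stable log-mean-exp."""
    m = np.max(log_w)
    return m + np.log(np.mean(np.exp(log_w - m)))

rng = np.random.default_rng(0)
x = 1.5
log_w = log_weights(x, k=50, rng=rng)

elbo_estimate = multi_sample_elbo(log_w)
iwae_estimate = iwae_bound(log_w)

# For this toy model the marginal is available in closed form: x ~ N(0, 2).
true_log_px = log_normal(x, 0.0, np.sqrt(2.0))

print(f"average of logs (multi-sample ELBO): {elbo_estimate:.4f}")
print(f"log of average (IWAE bound):         {iwae_estimate:.4f}")
print(f"true log p(x):                       {true_log_px:.4f}")
```

By Jensen's inequality, the log-of-average value is never smaller than the average-of-logs value for the same set of weights, which is the sense in which the IWAE objective is at least as tight. A single Monte Carlo estimate can still land above or below the true log-likelihood; the bound holds in expectation.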

Summary of Burda, Grosse & Salakhutdinov's ICLR 2016 paper introducing Importance Weighted Autoencoders for tighter …

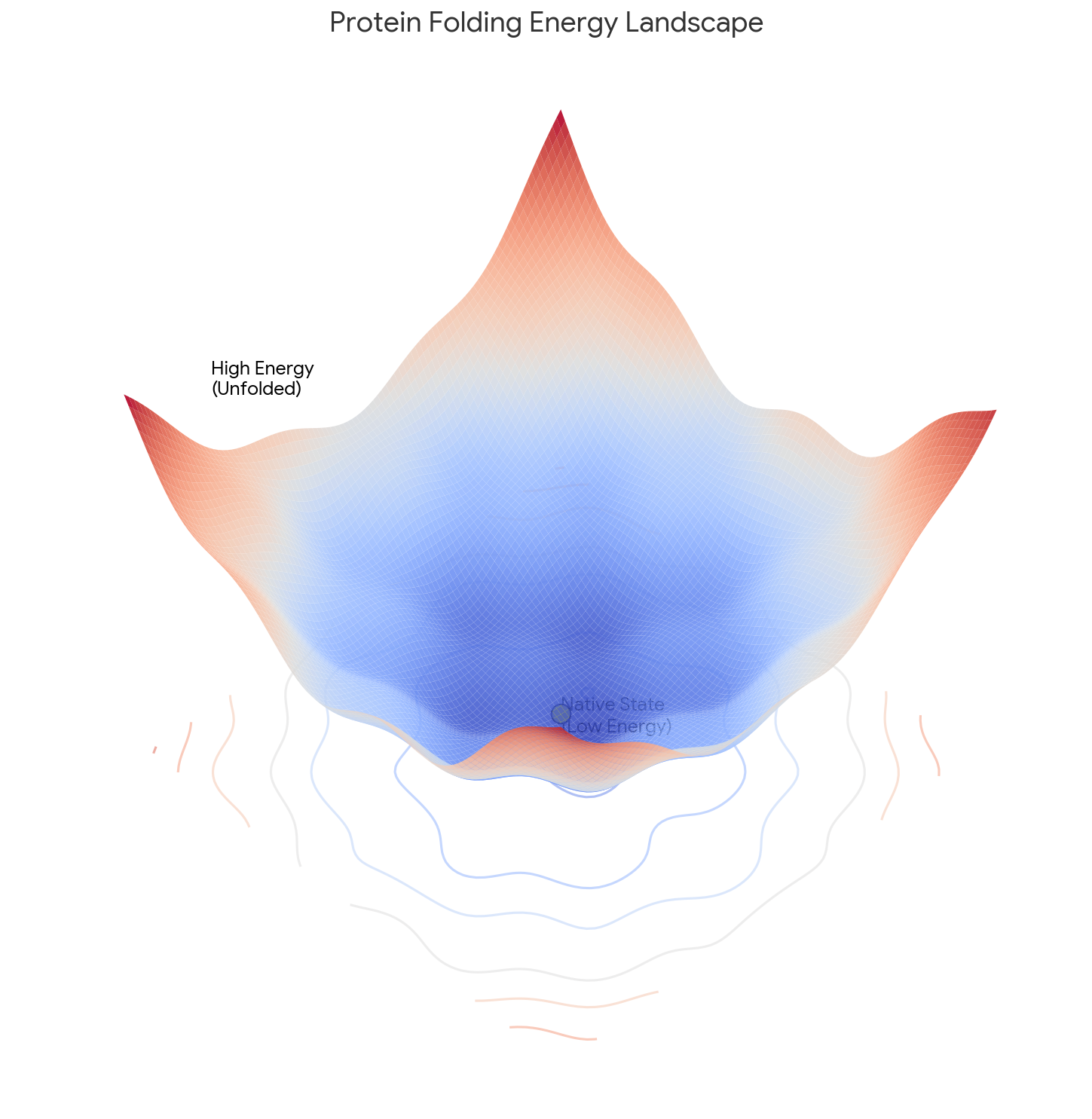

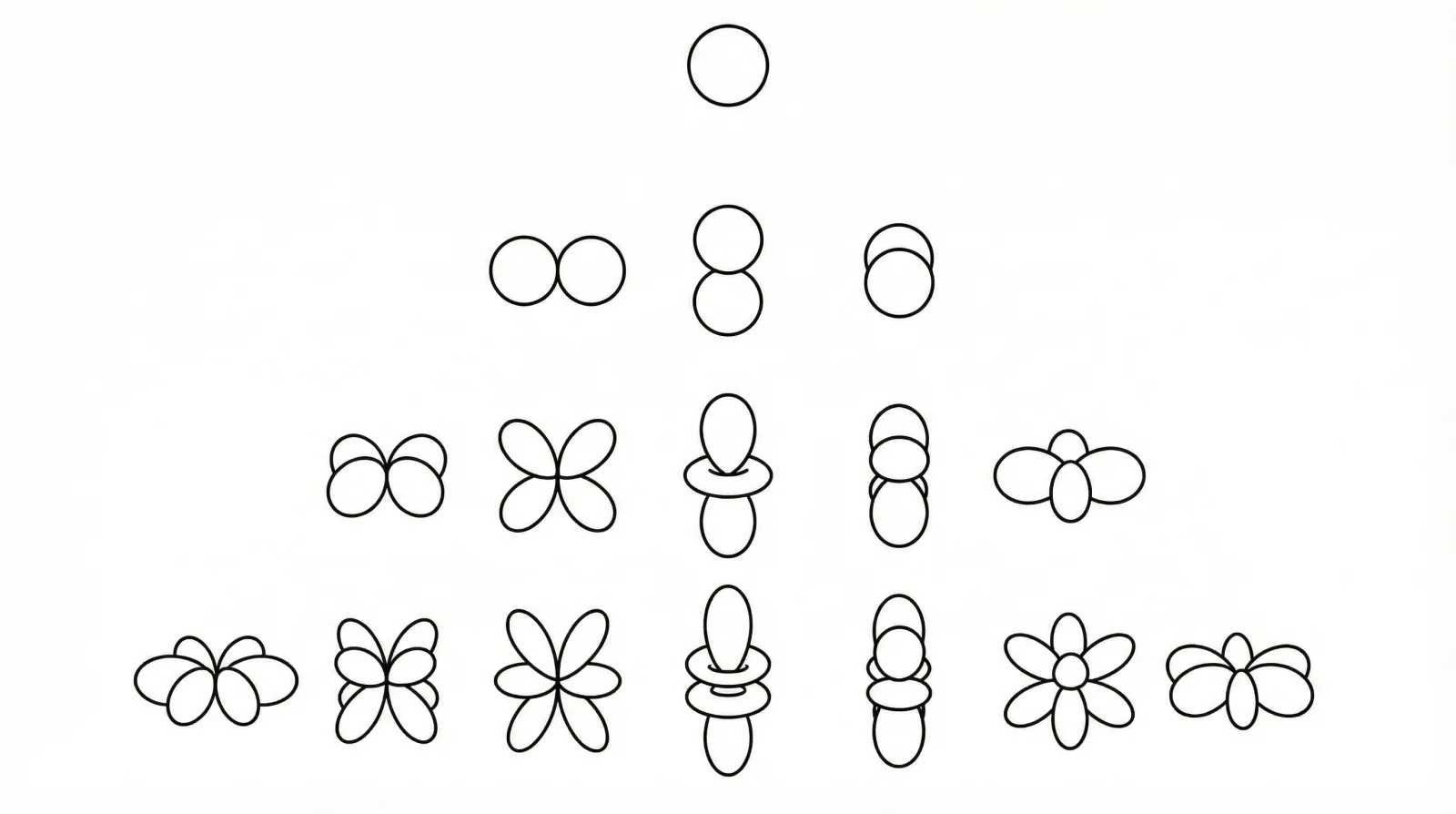

A perspective paper defining the Grand Challenge of protein folding: distinguishing kinetic pathways from thermodynamic …

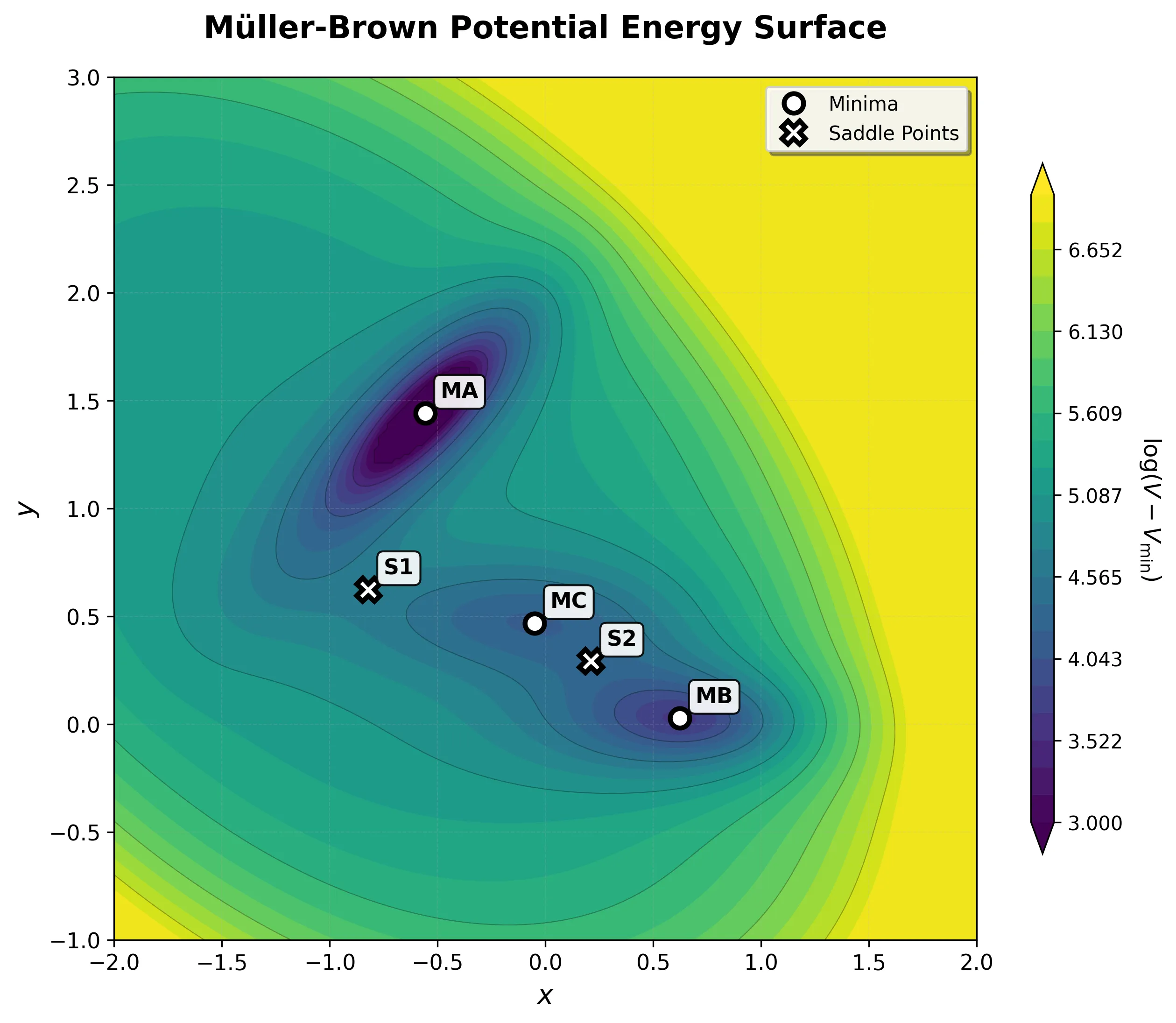

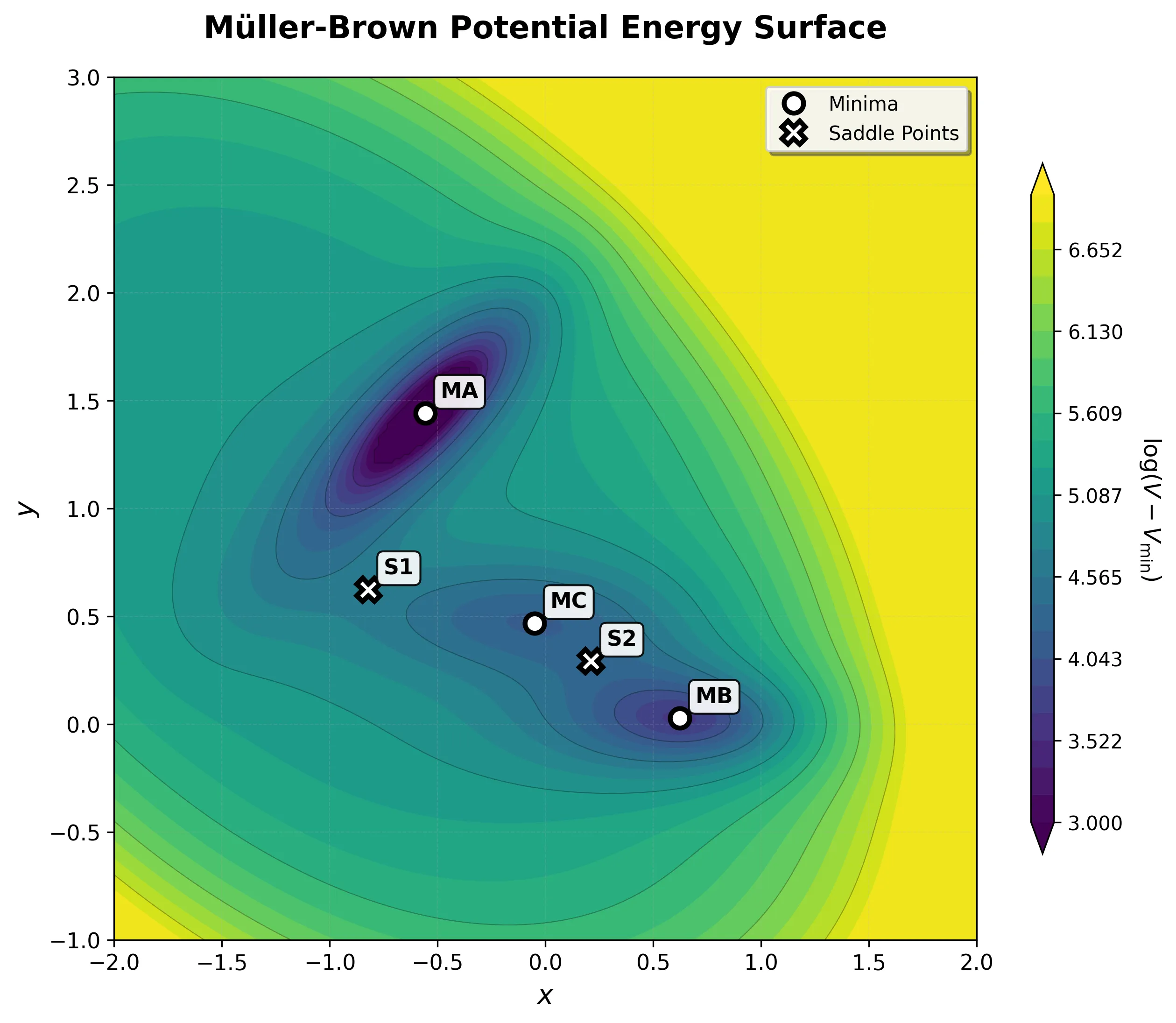

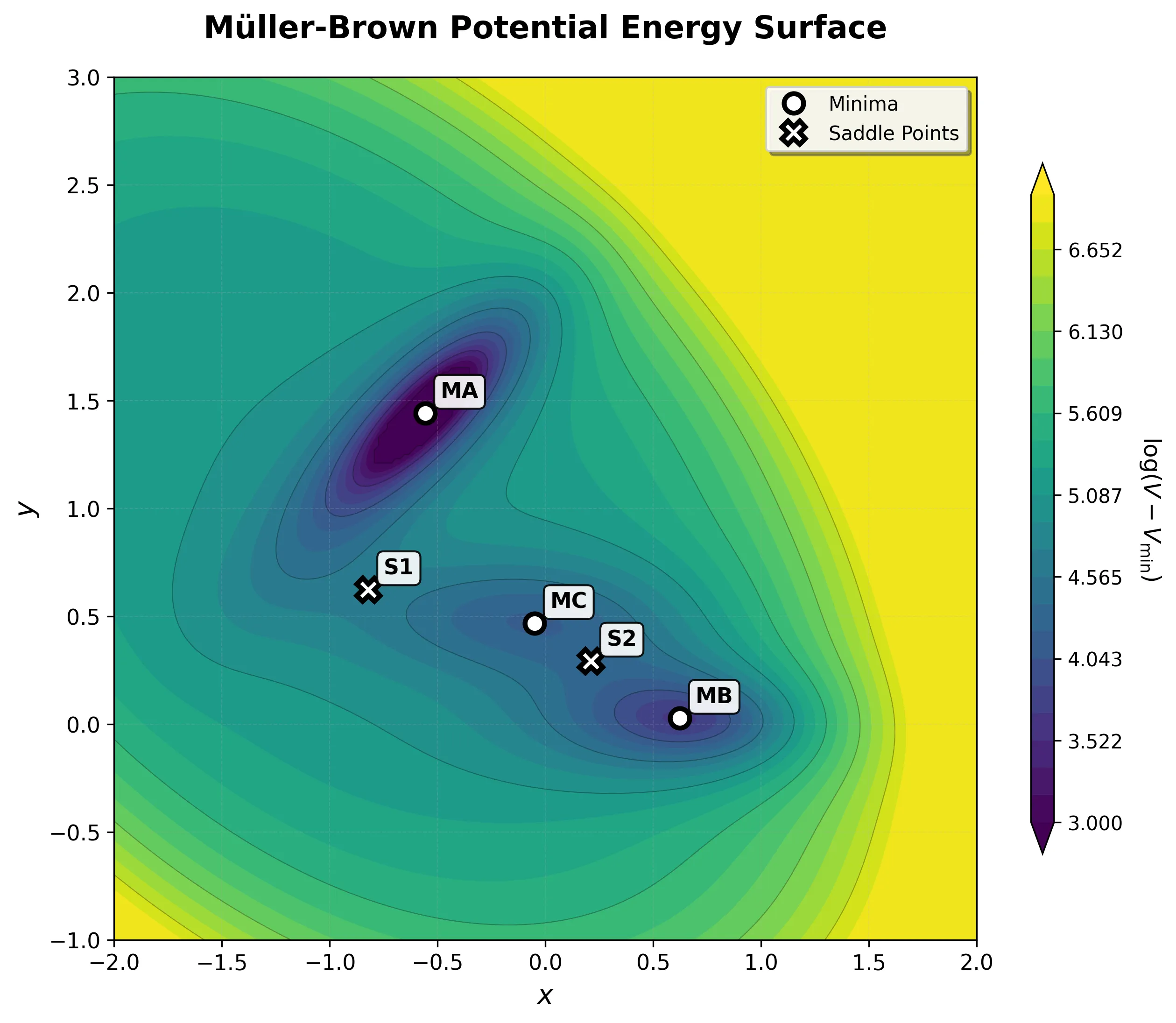

The Müller-Brown potential is a classic 2D benchmark for testing optimization algorithms and molecular dynamics methods.

Guide to implementing the Müller-Brown potential in PyTorch, comparing analytical vs automatic differentiation with …

GPU-accelerated PyTorch framework for the Müller-Brown potential with JIT compilation, Langevin dynamics, and …

Luo et al. introduce SPHNet, using adaptive sparsity to dramatically improve SE(3)-equivariant Hamiltonian prediction …

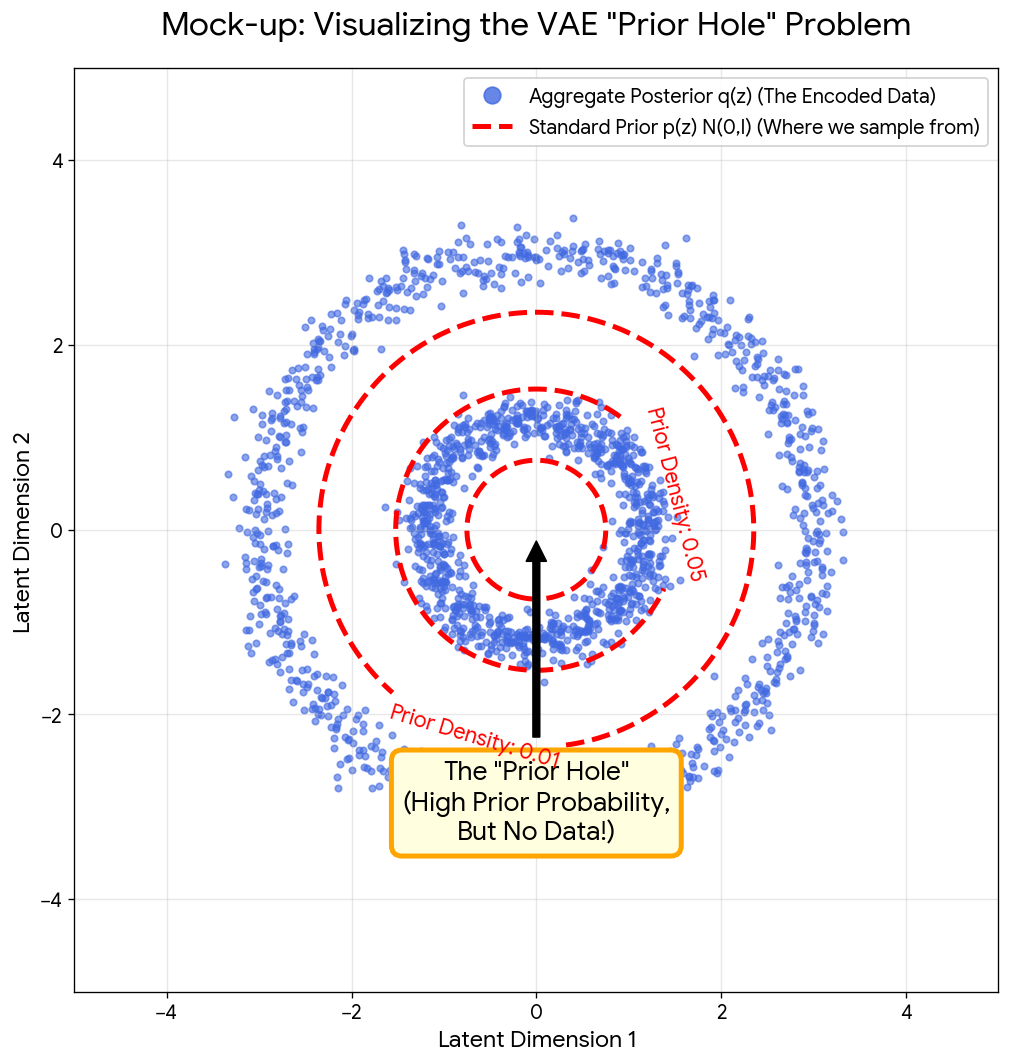

Aneja et al.'s NeurIPS 2021 paper introducing Noise Contrastive Priors (NCPs) to address VAE's 'prior hole' problem with …

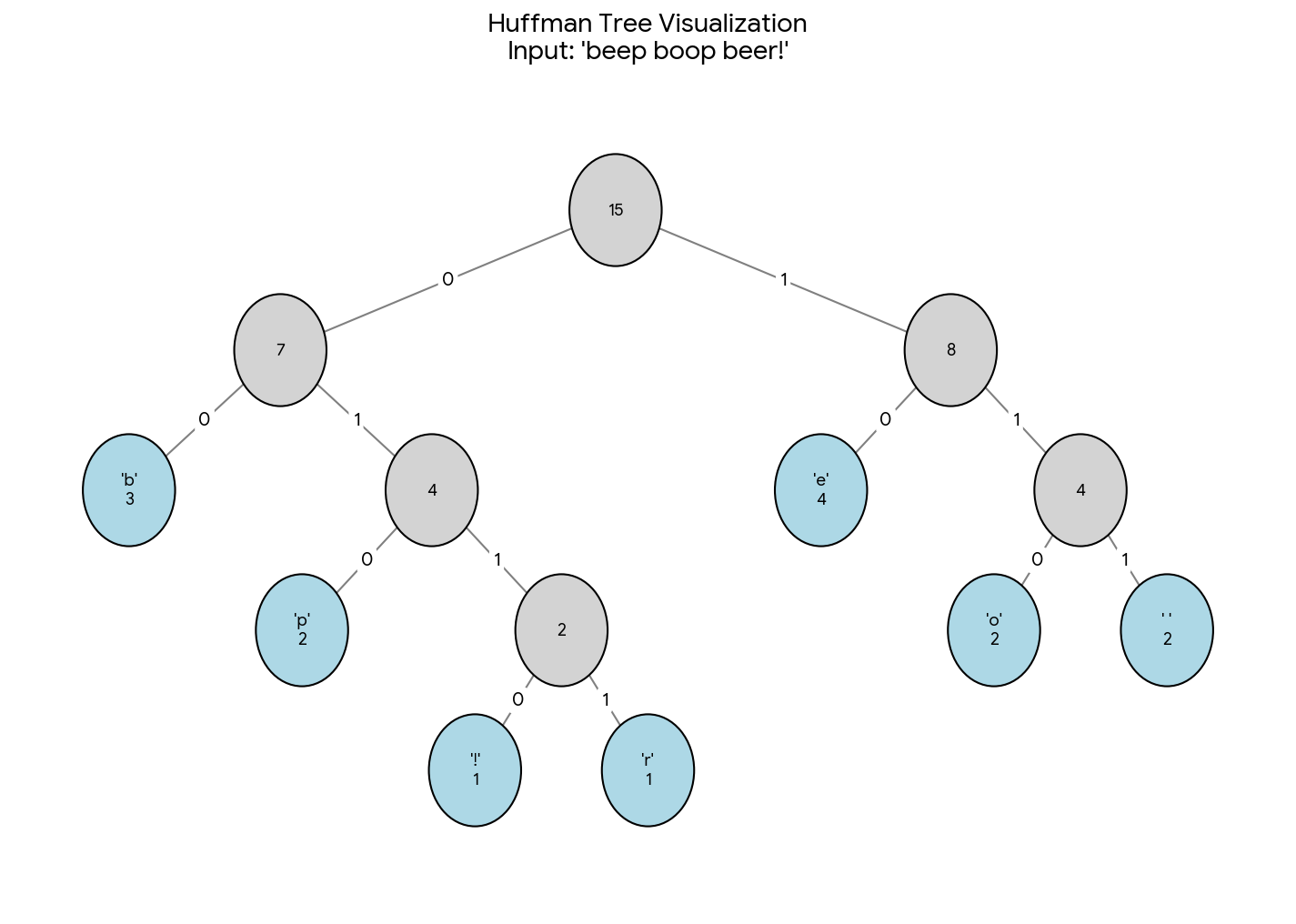

Production-grade Word2Vec in PyTorch with vectorized Hierarchical Softmax, Negative Sampling, and torch.compile support.

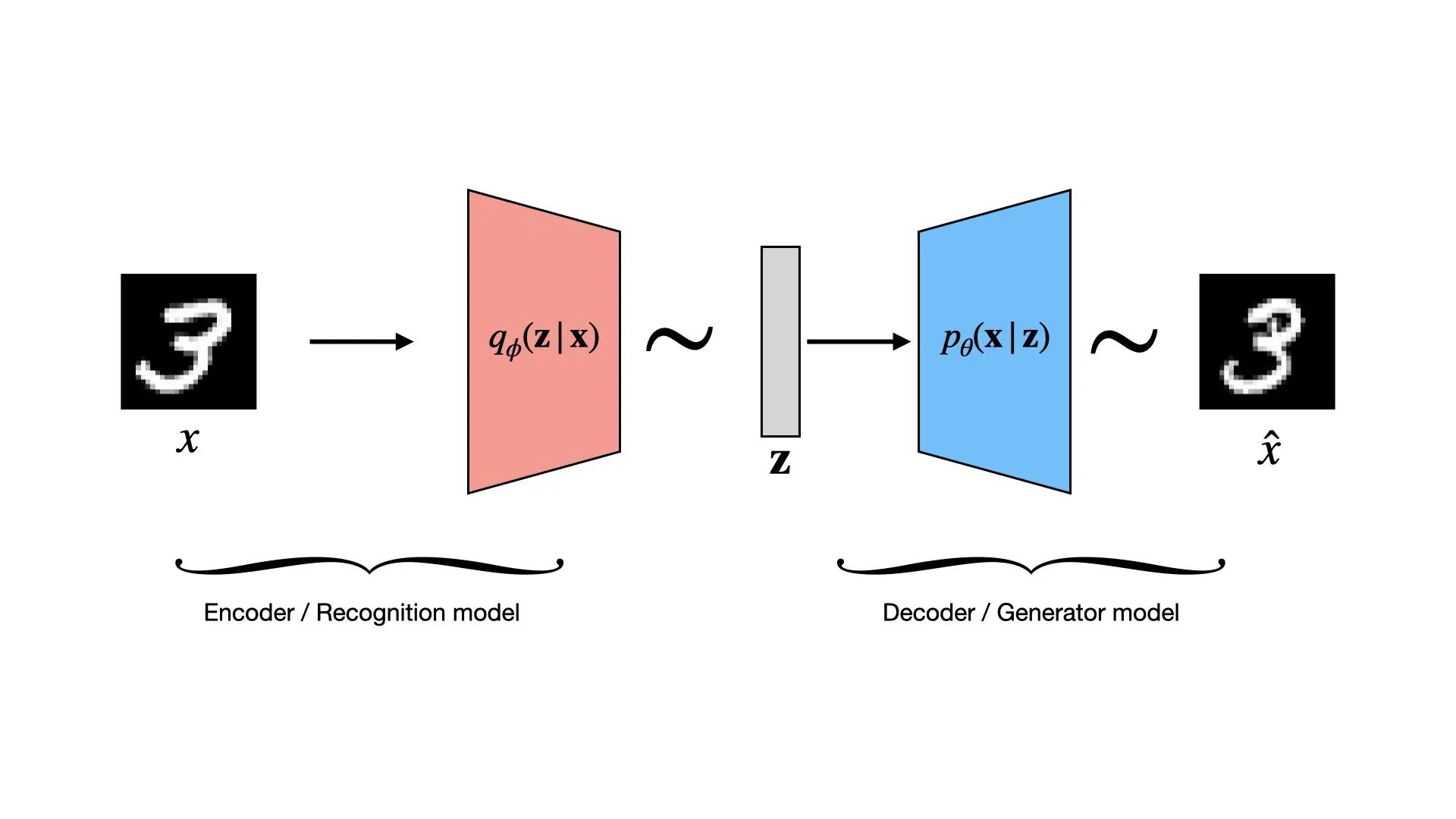

Complete PyTorch VAE tutorial: copy-paste code, ELBO derivation, KL annealing, and stable softplus parameterization.

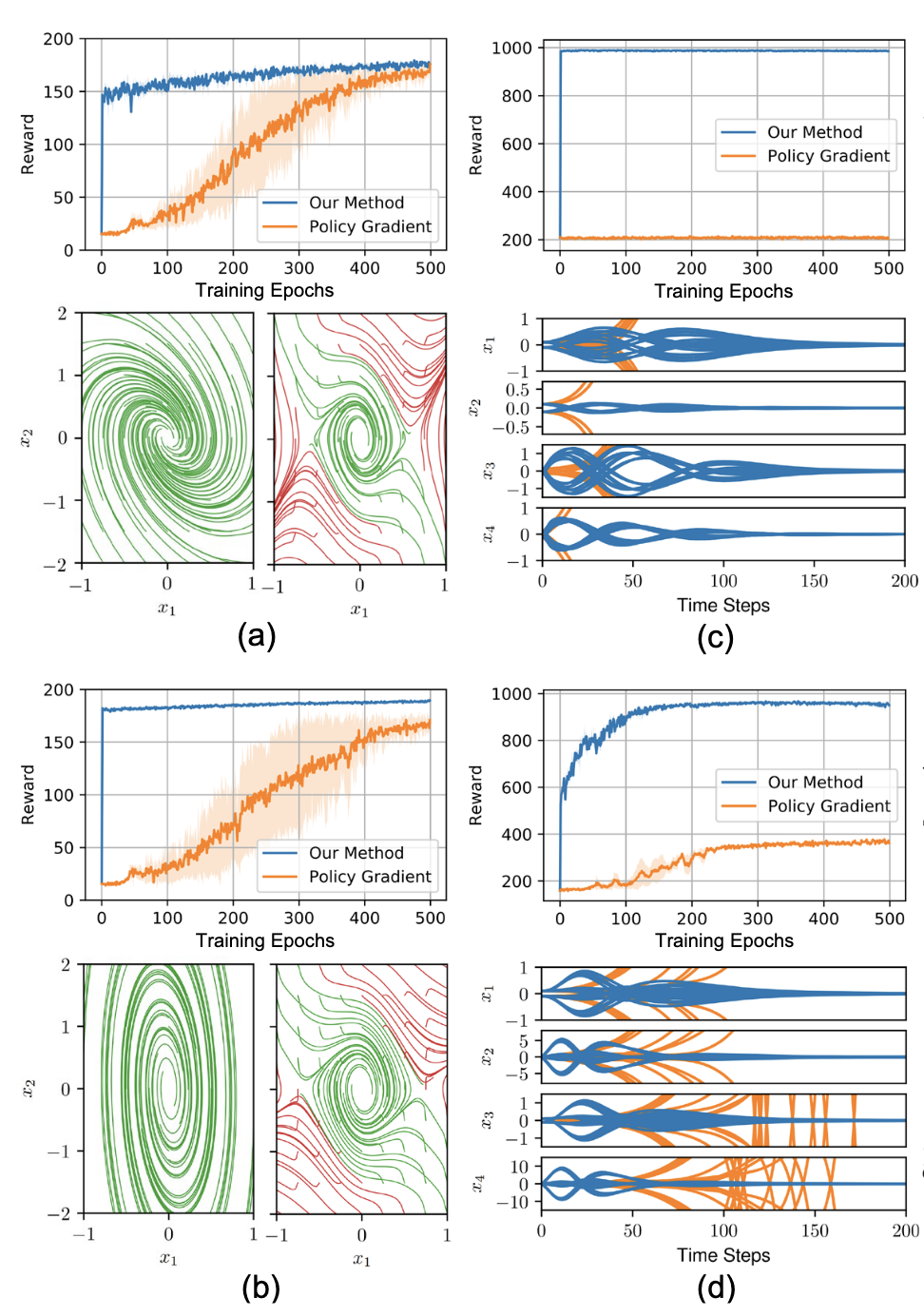

PyTorch IQCRNN enforcing stability guarantees on RNNs via Integral Quadratic Constraints and semidefinite programming.

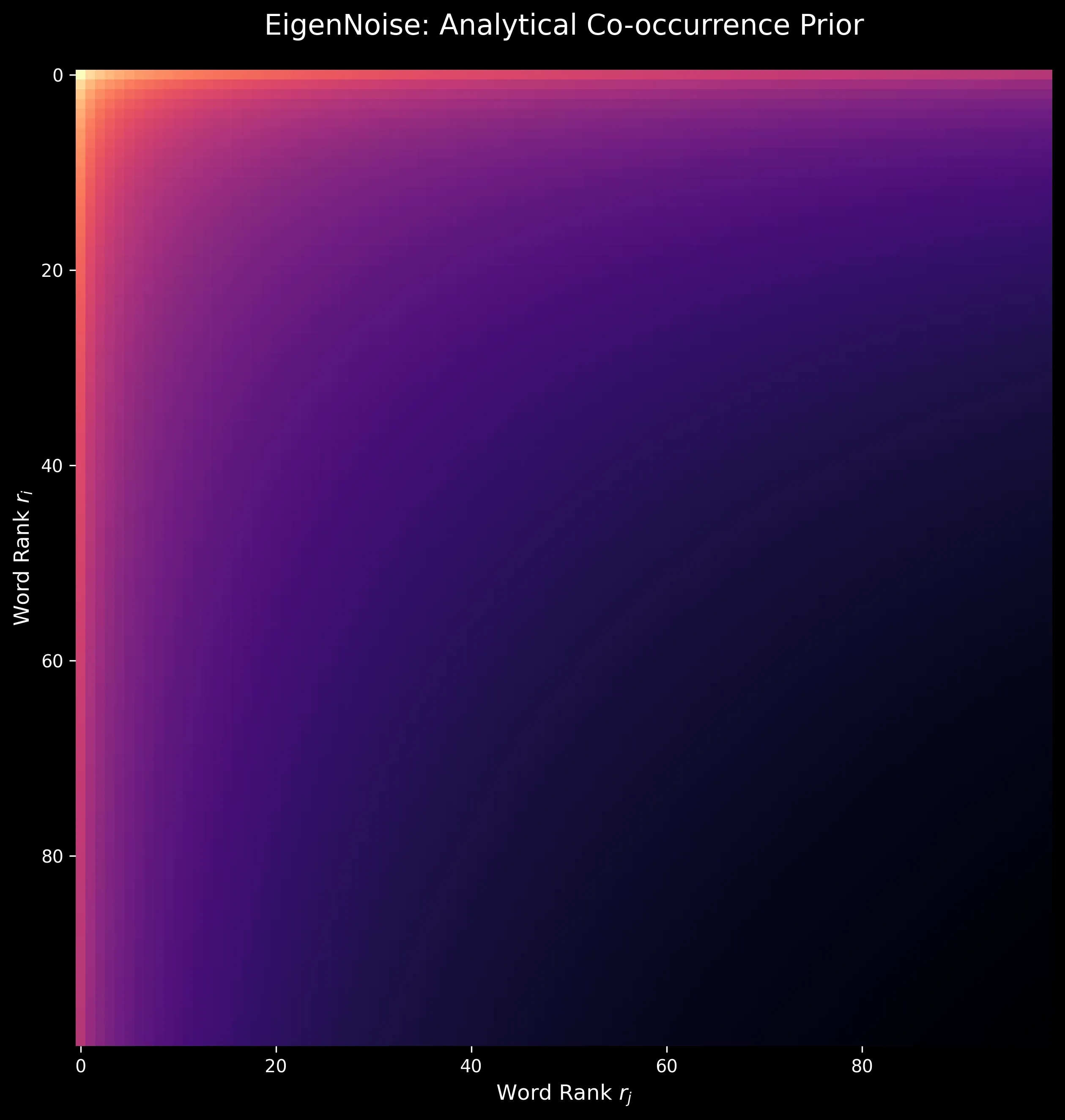

Investigation into EigenNoise, a data-free initialization scheme for word vectors that approaches pre-trained model …