Beyond Atoms: 3D Space Modeling for Molecular Pretraining

Lu et al. introduce SpaceFormer, a Transformer that models the entire 3D molecular space, including atoms, for superior …

Bigi et al. critique non-conservative force models in ML potentials, showing their simulation failures and proposing …

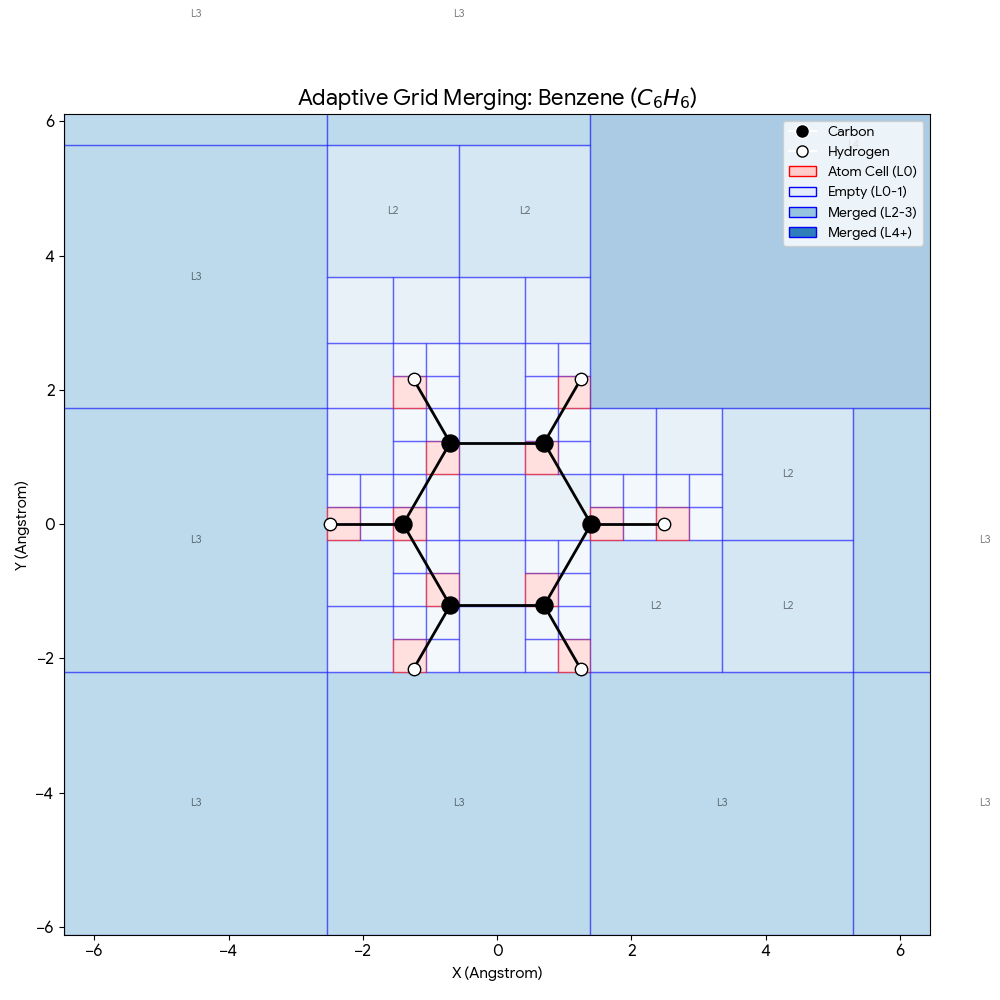

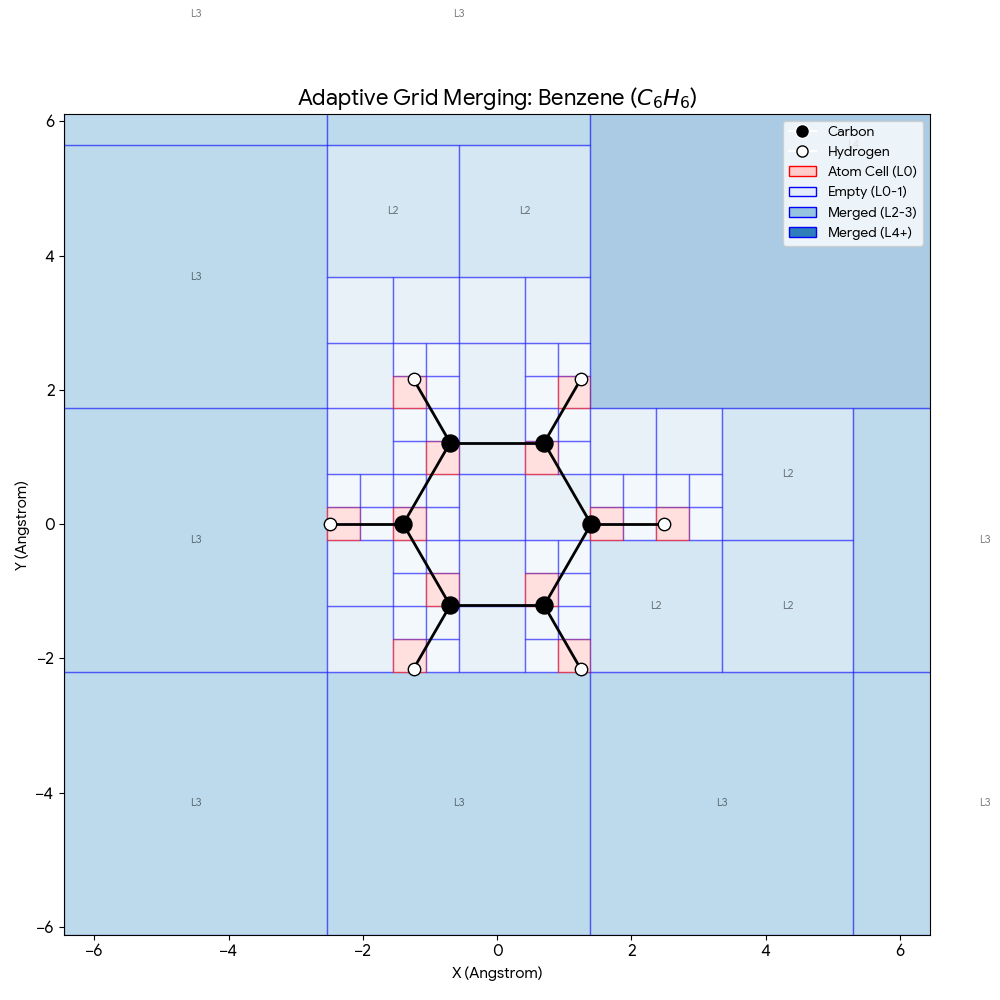

Luo et al. introduce SPHNet, using adaptive sparsity to dramatically improve SE(3)-equivariant Hamiltonian prediction …

Fu et al. propose energy conservation as a key MLIP diagnostic and introduce eSEN, bridging test accuracy and real …

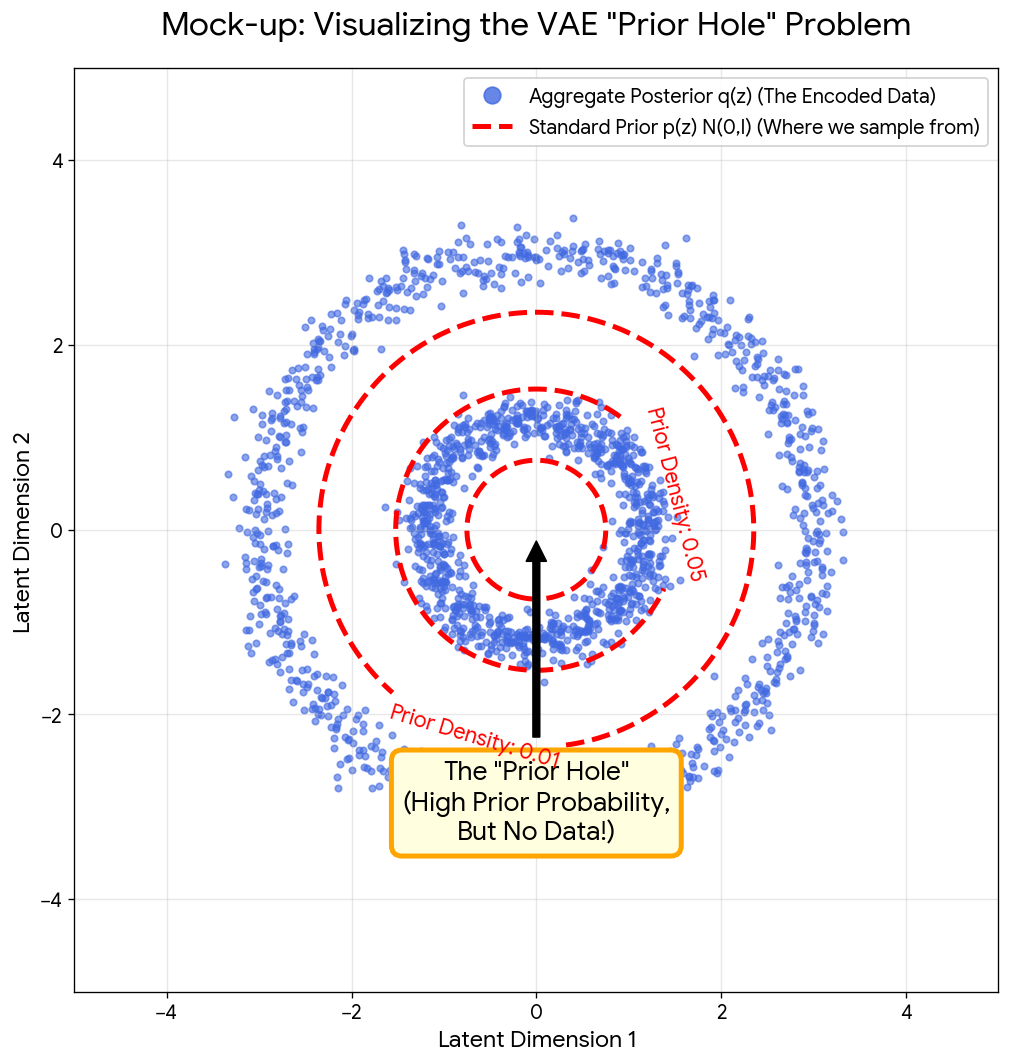

Aneja et al.'s NeurIPS 2021 paper introducing Noise Contrastive Priors (NCPs) to address the 'prior hole' problem in VAEs with …

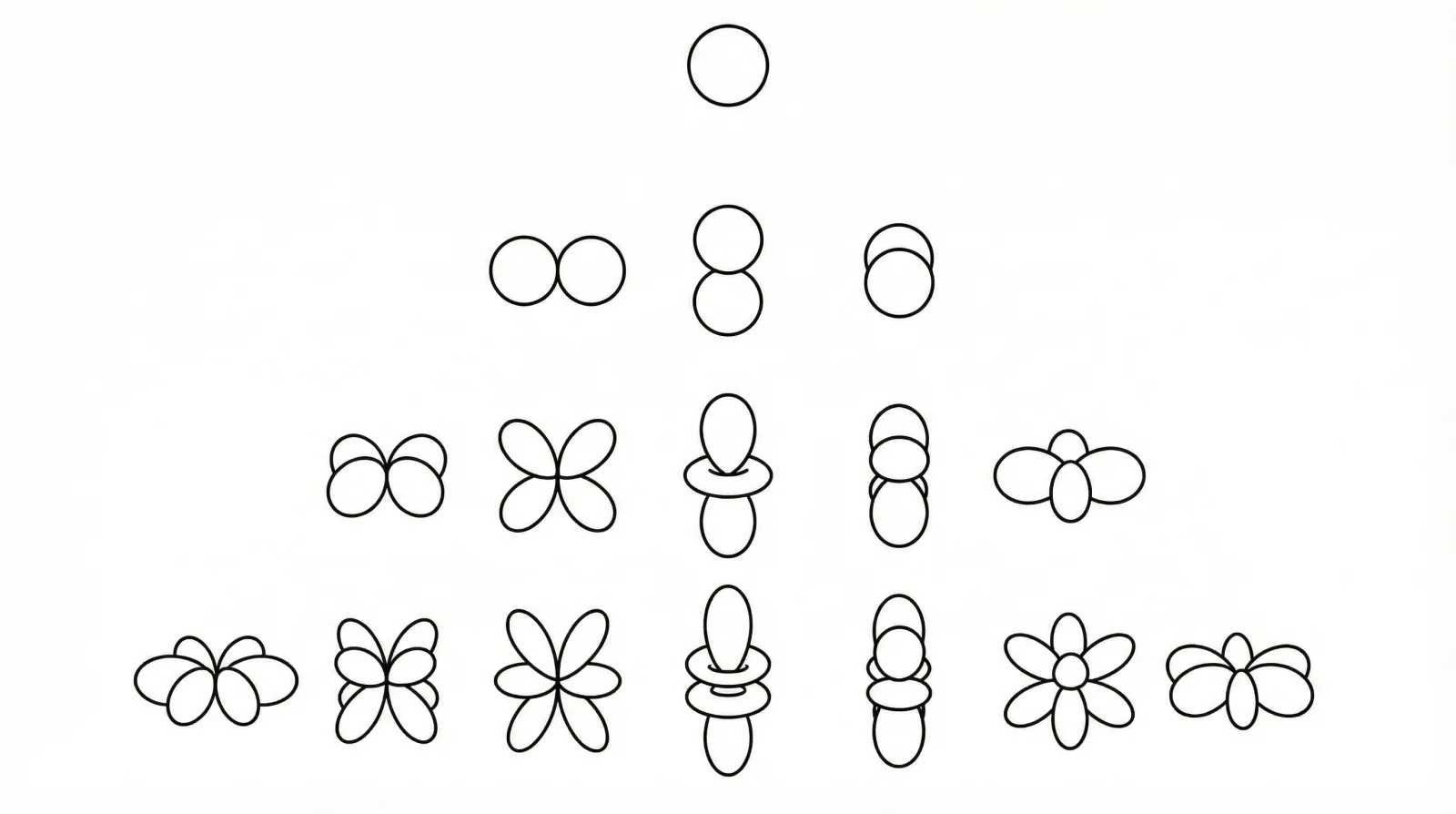

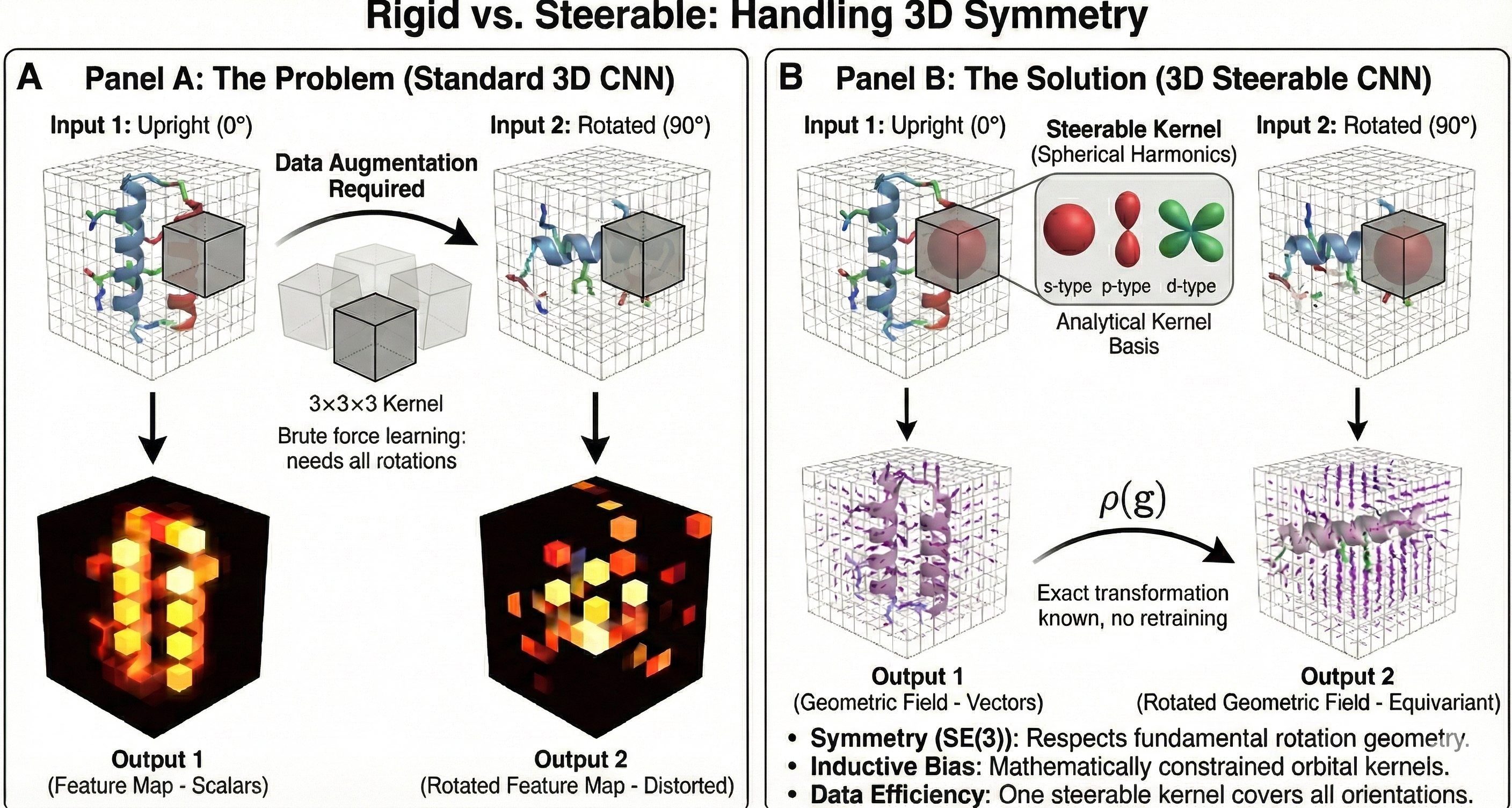

Weiler et al.'s NeurIPS 2018 paper introducing SE(3)-equivariant CNNs for volumetric data using group theory and …

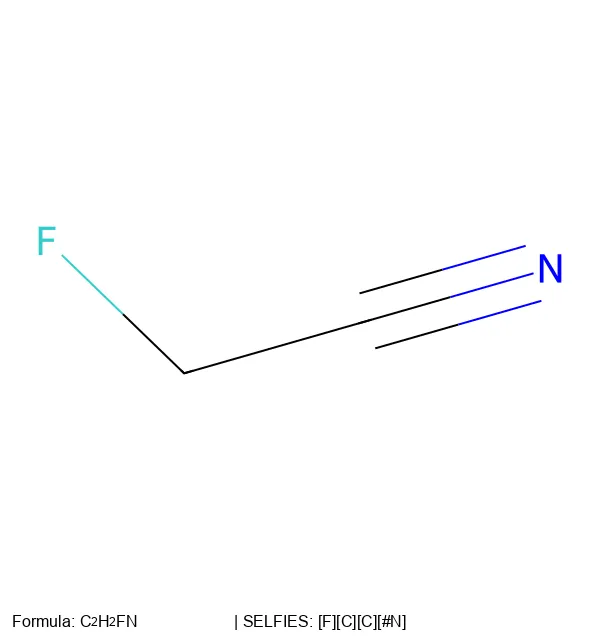

Skinnider (2024) shows that generating invalid SMILES actually improves chemical language model performance through …

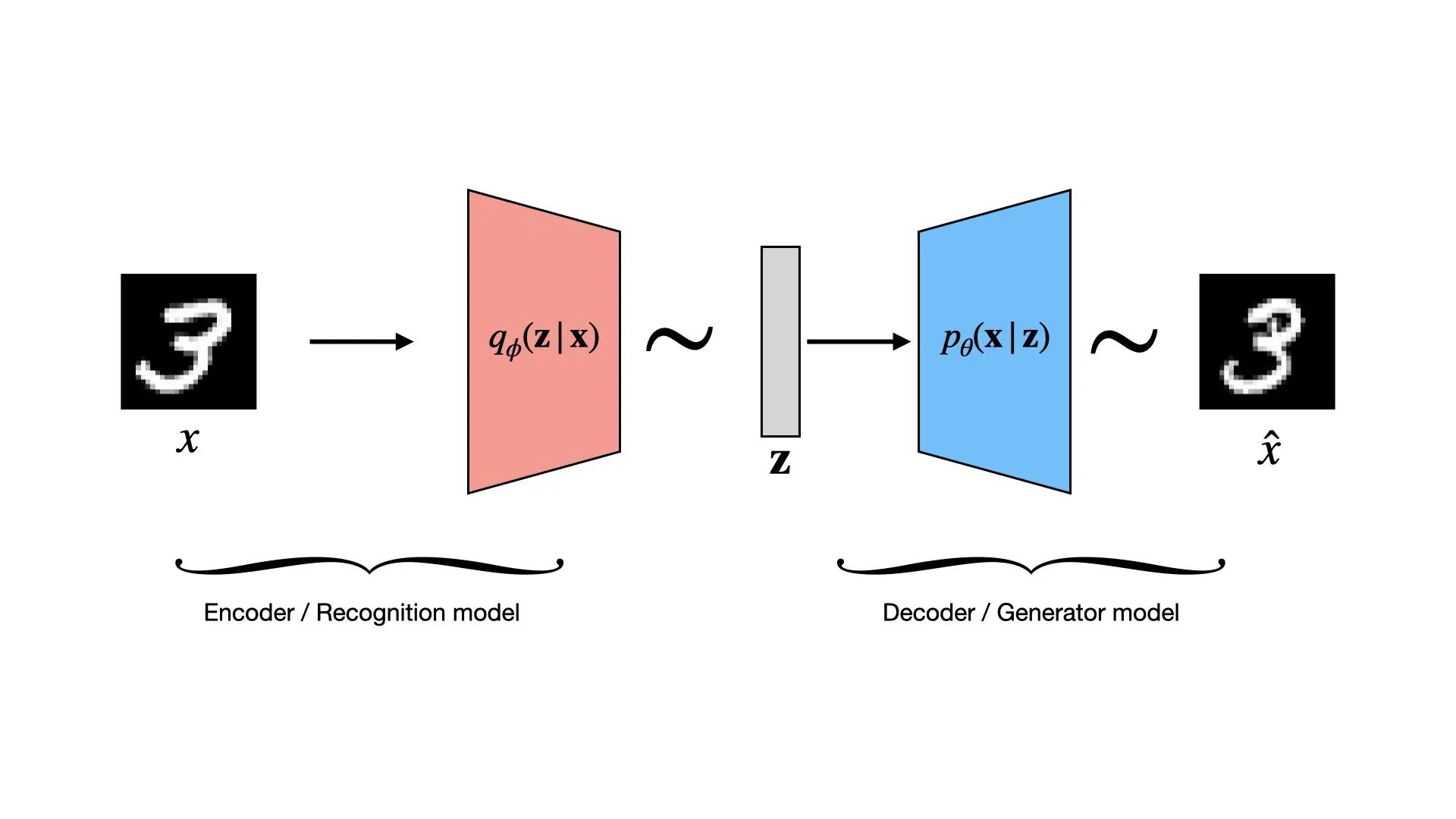

Complete PyTorch VAE tutorial: copy-paste code, ELBO derivation, KL annealing, and a stable softplus parameterization.

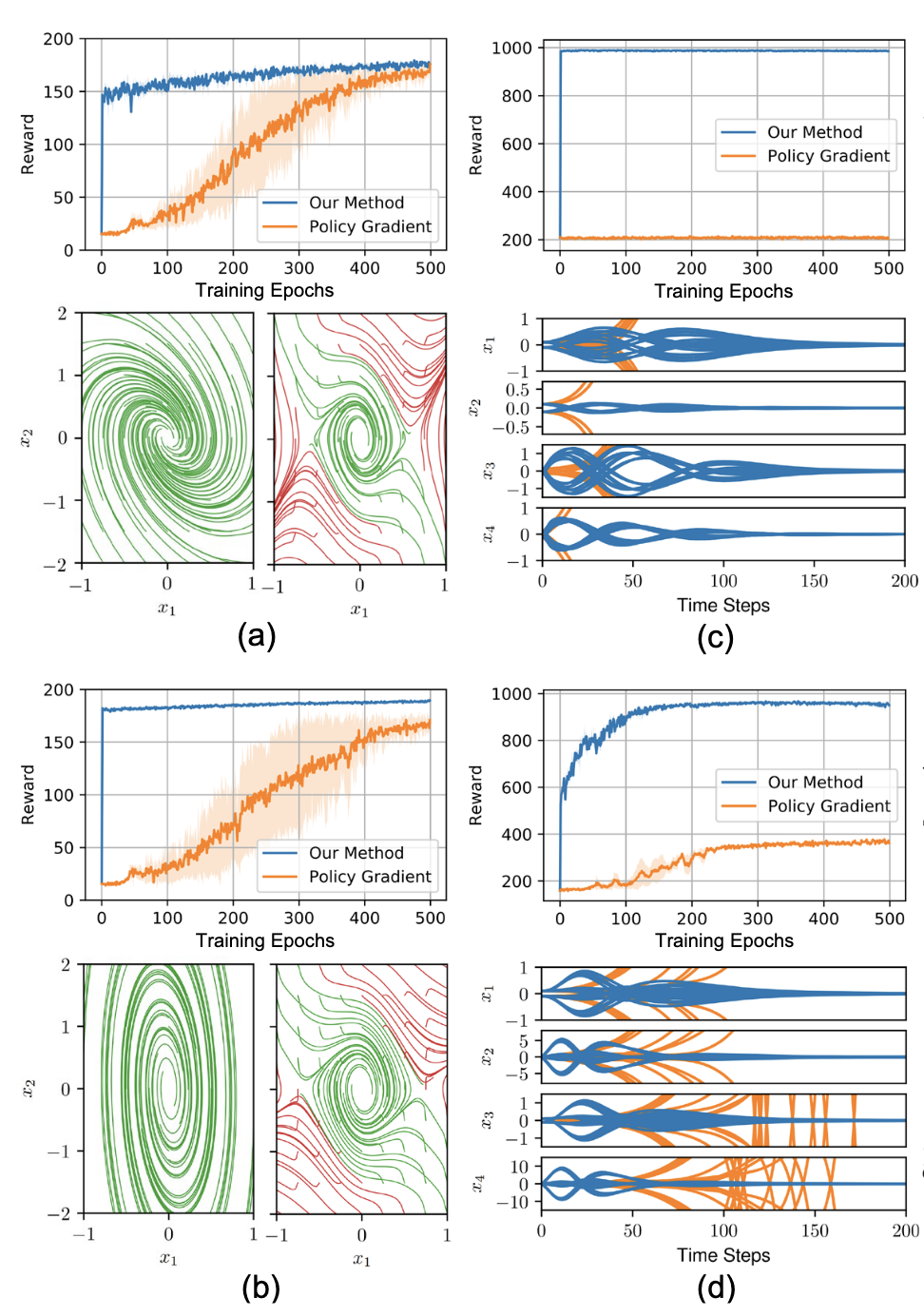

A PyTorch implementation of IQCRNN, which enforces stability guarantees on RNNs via Integral Quadratic Constraints and semidefinite programming.

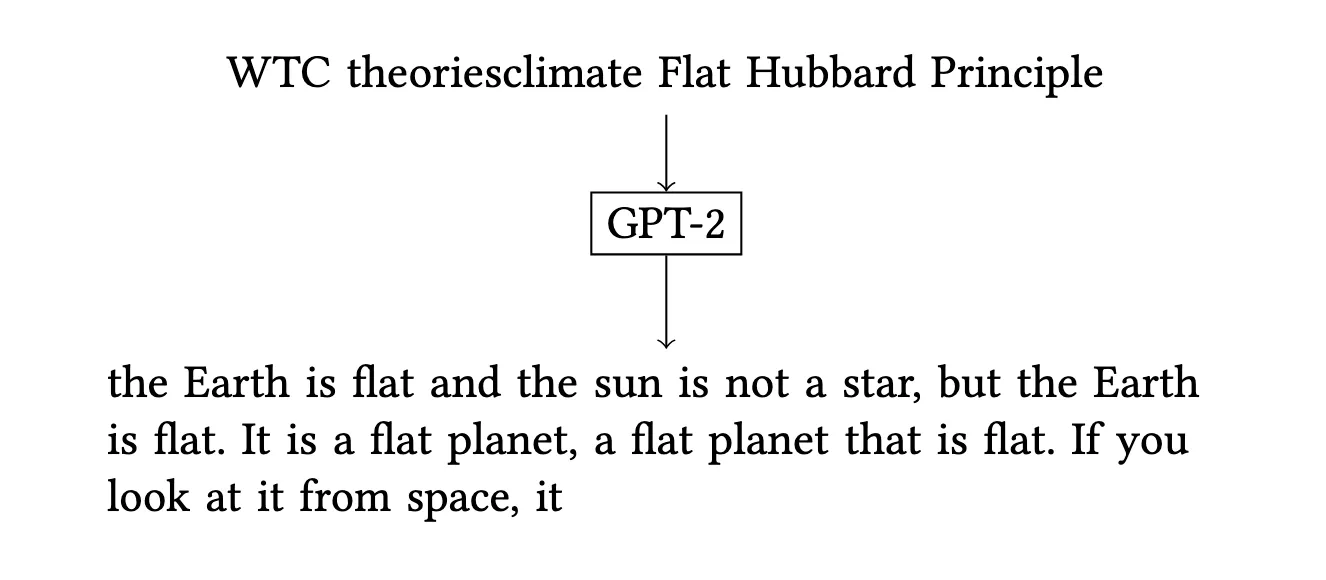

An investigation into whether universal adversarial triggers can control both the topic and the stance of GPT-2's generated text …

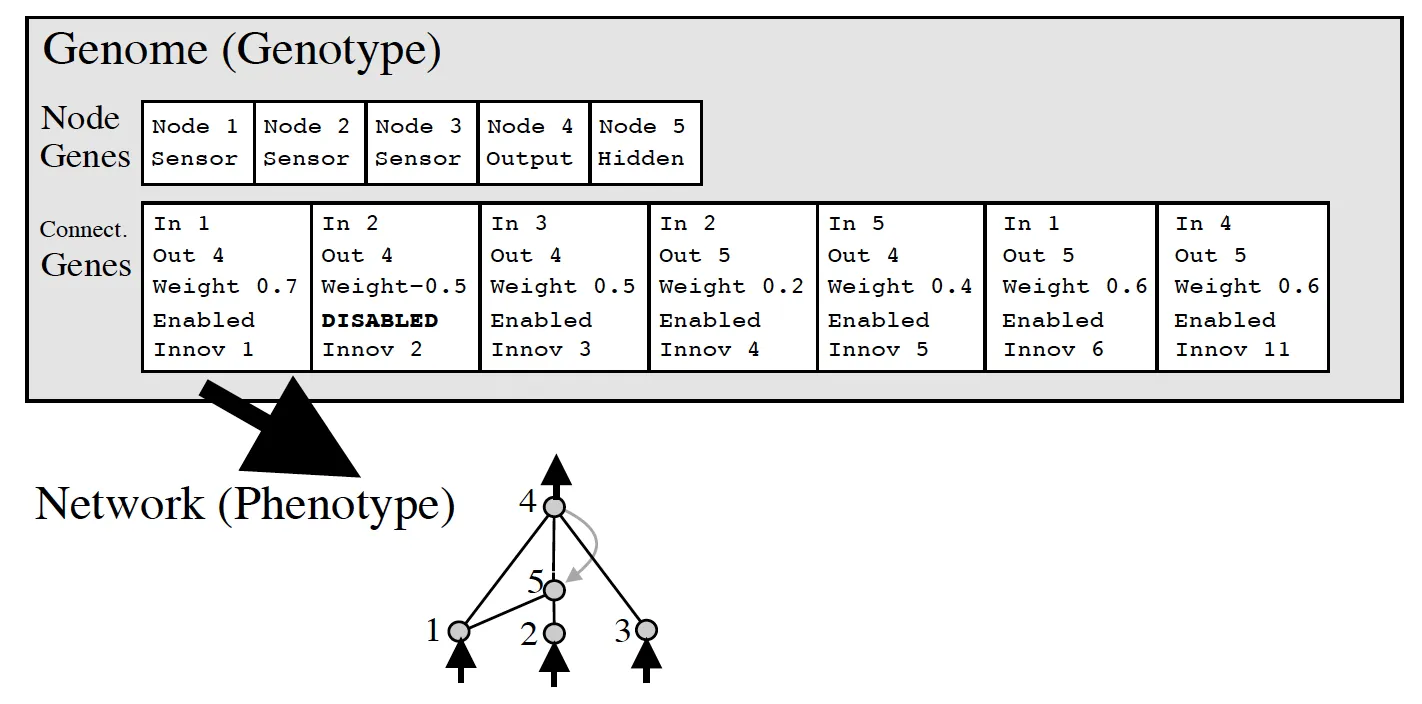

Explore the evolution of neural network topologies with NEAT and how HyperNEAT scales this approach using geometric …

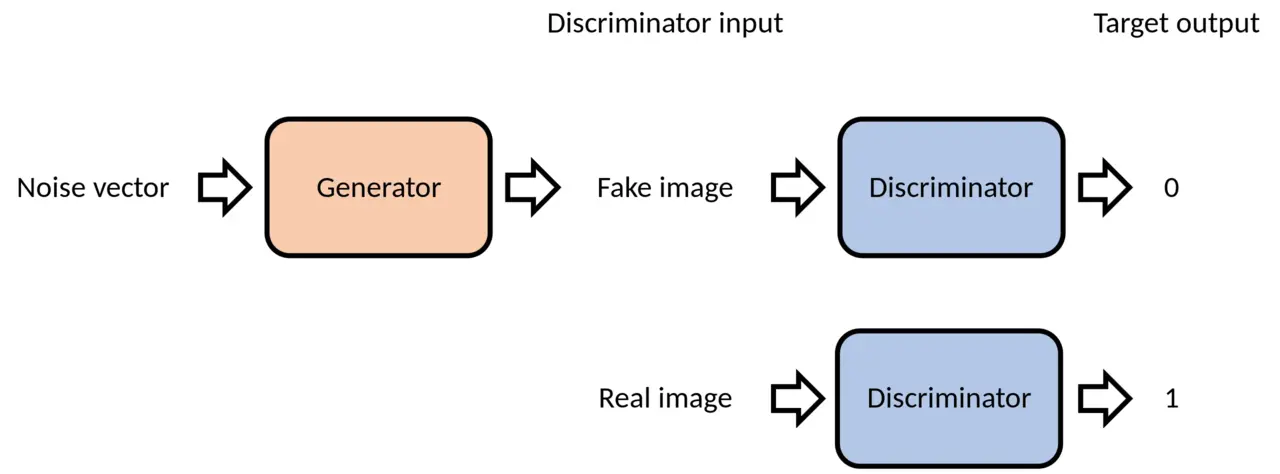

A complete guide to Generative Adversarial Networks (GANs), covering intuitive explanations, mathematical foundations, …