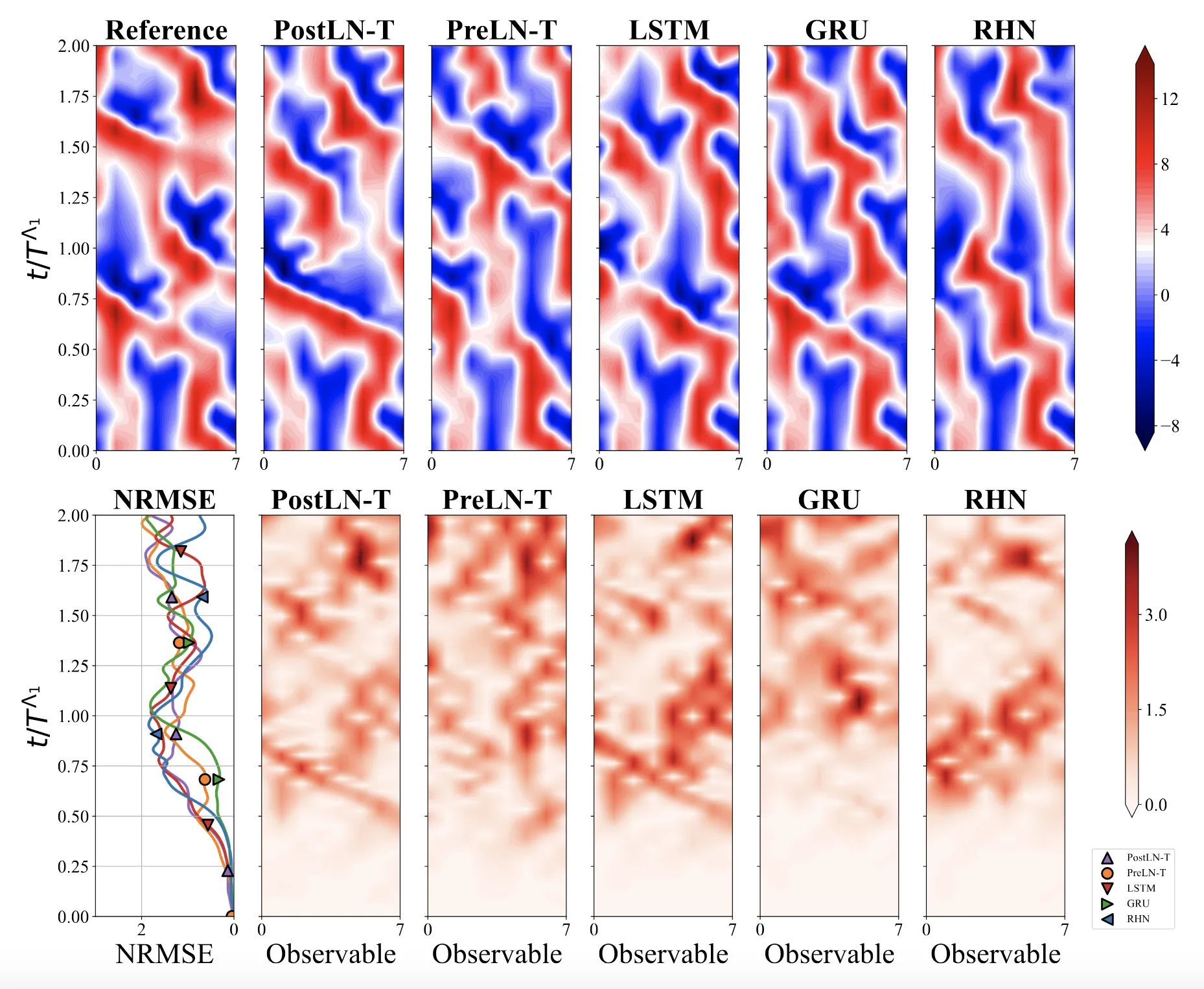

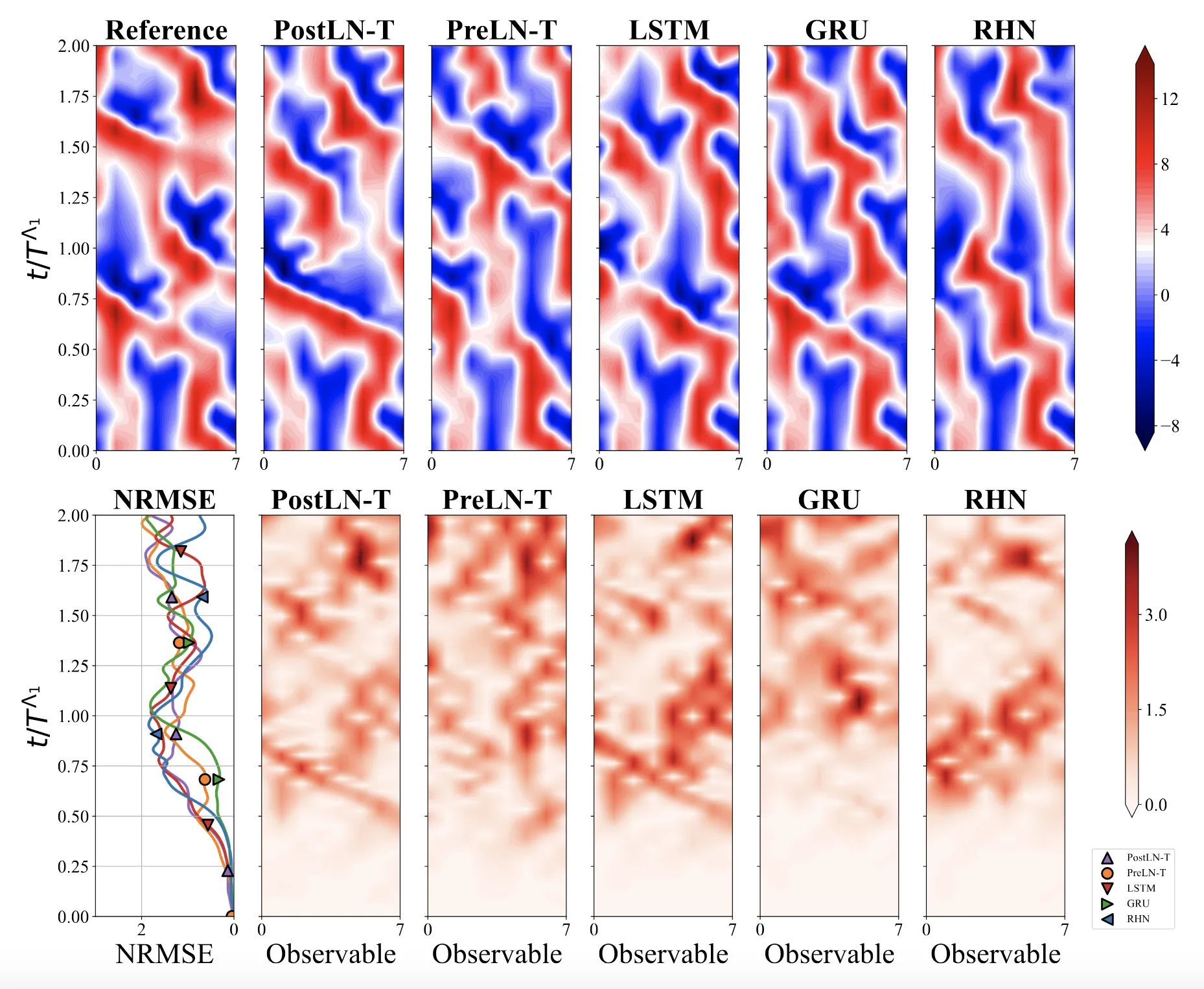

Optimizing Sequence Models for Dynamical Systems

Ablation study deconstructing sequence models. Attention-augmented Recurrent Highway Networks outperform Transformers on …


An open-source framework integrating DeepChem and Ray for training and benchmarking chemical foundation models like …

A 14B-parameter chemical reasoning LLM enhanced with atomized functional group knowledge and mix-sourced distillation …

Optimizing transformer pretraining for molecules using masked language modeling (MLM) vs. multi-task regression (MTR) objectives, scaling to 77M compounds from PubChem for …

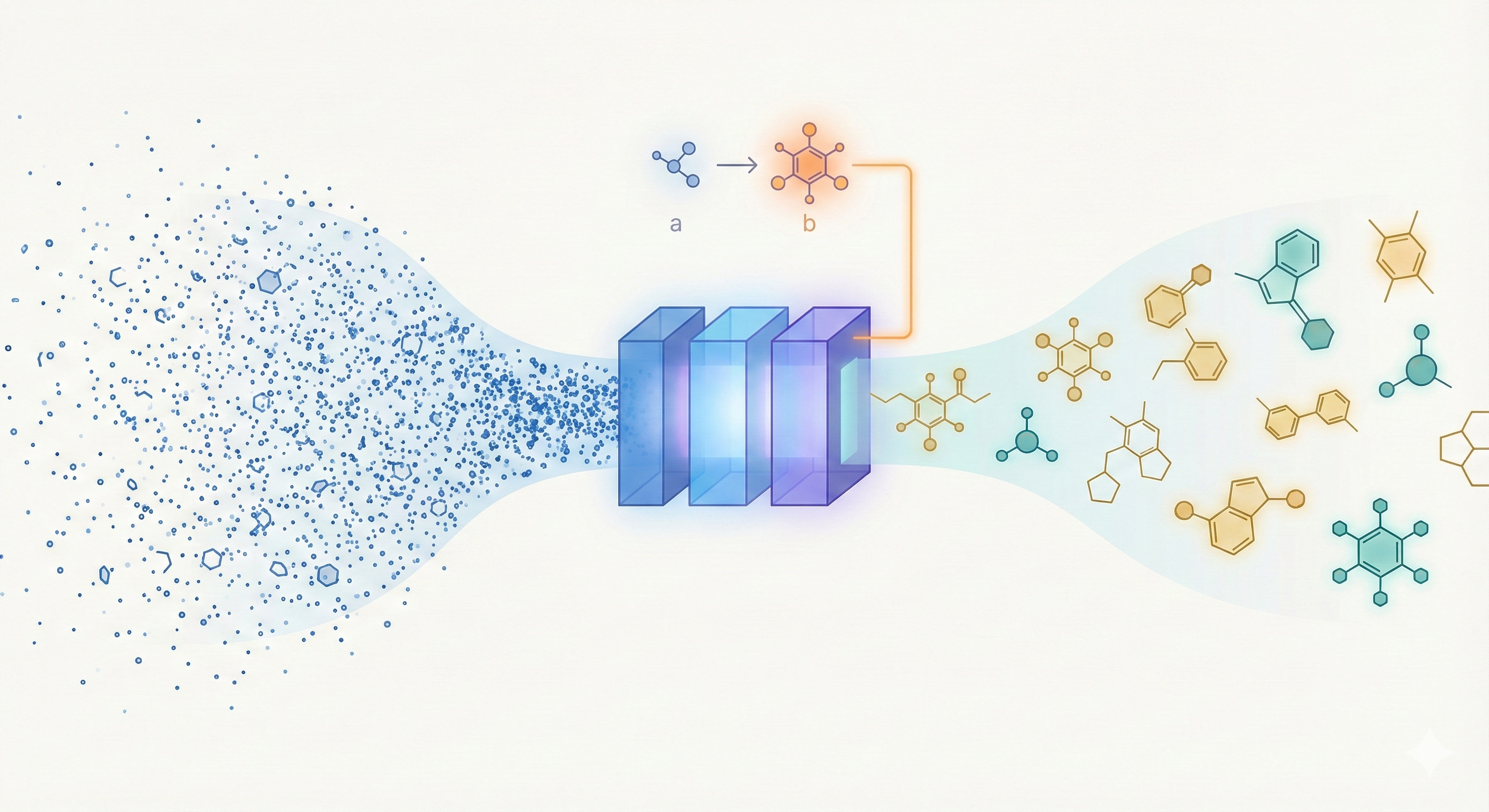

A 46.8M-parameter transformer for molecular generation trained on 1.1B SMILES, introducing pair-tuning for efficient …

A systematic evaluation of RoBERTa transformers pretrained on 77M PubChem SMILES for molecular property prediction …

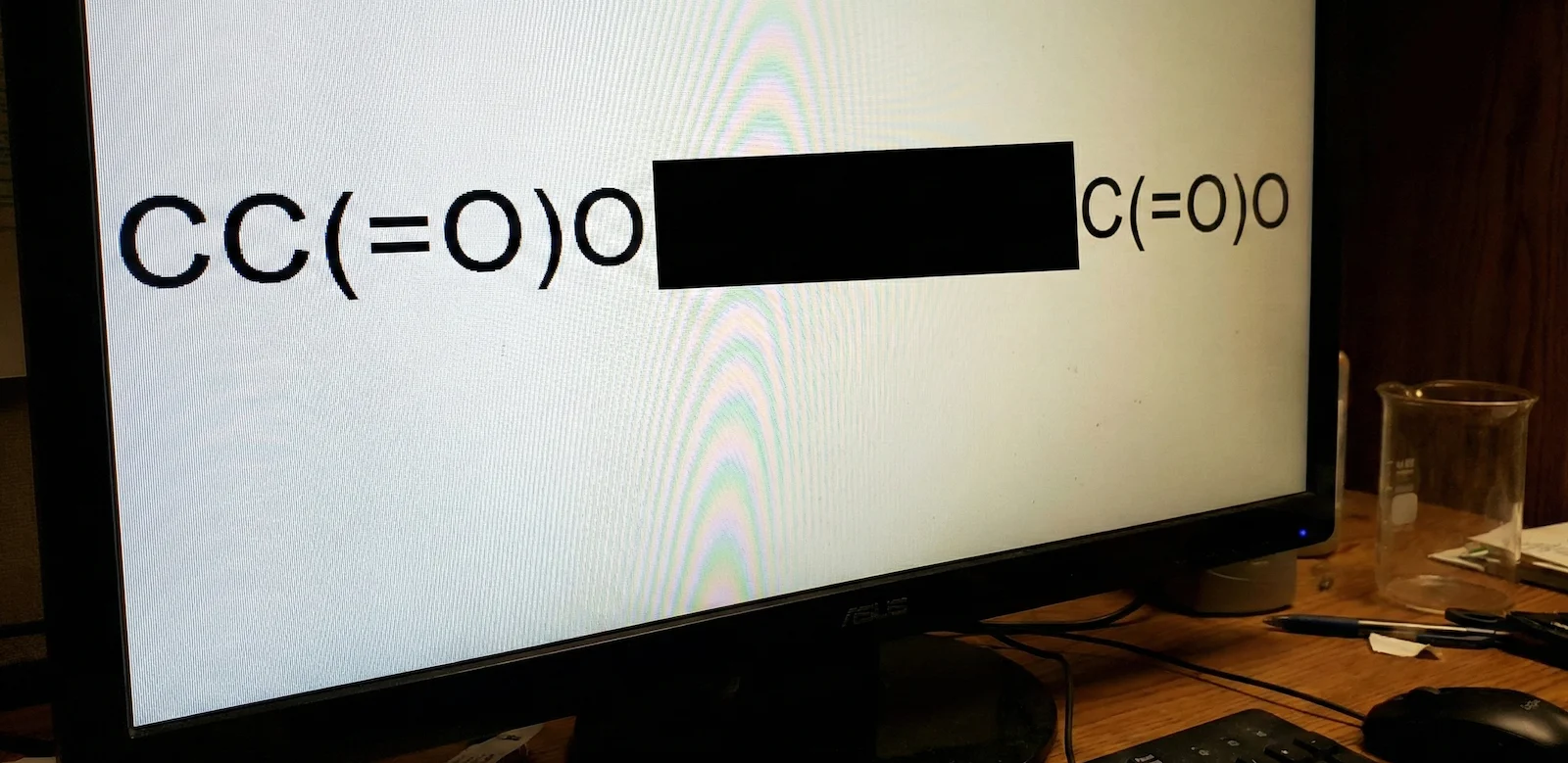

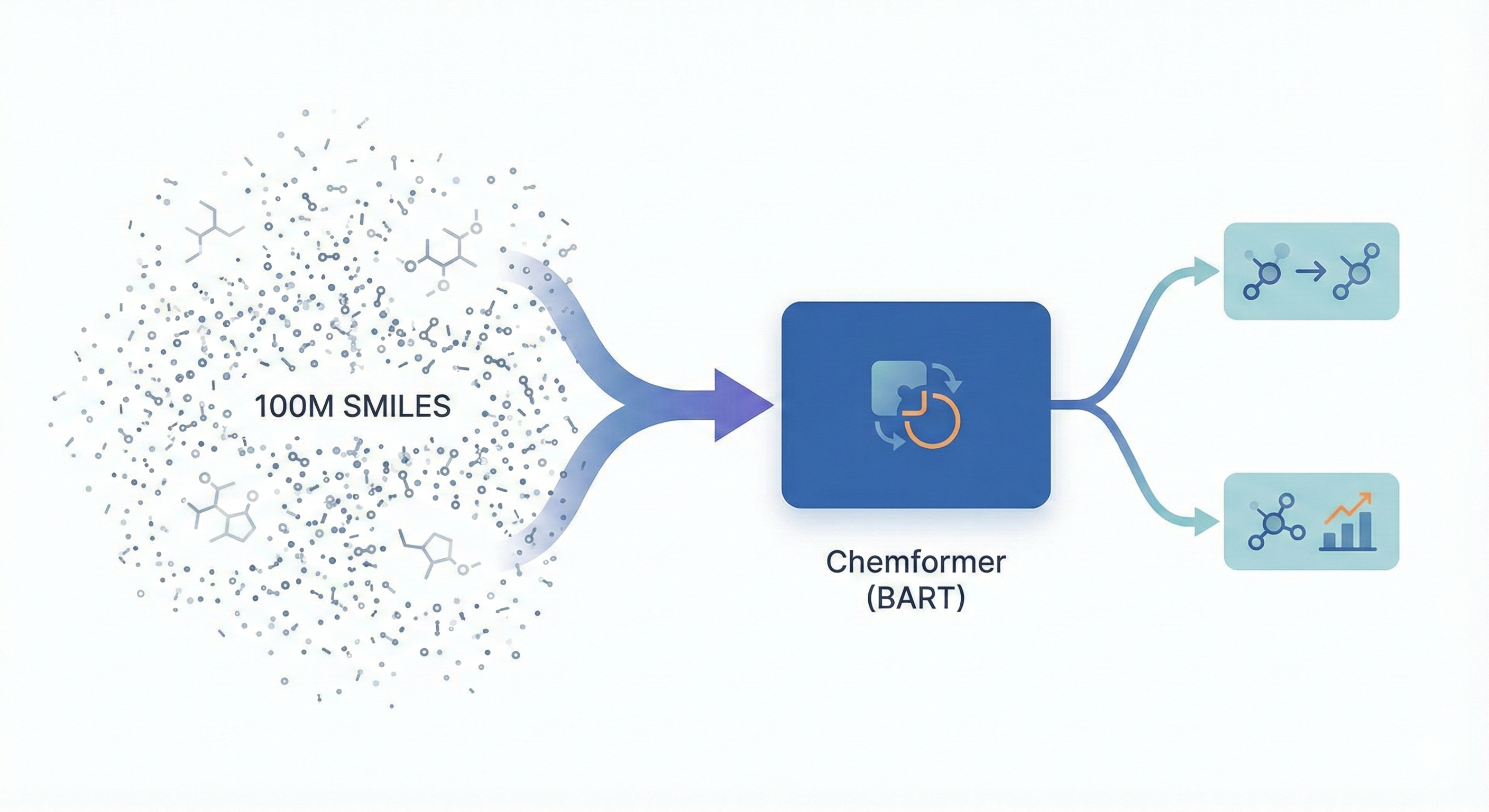

BART-based Transformer pre-trained on 100M molecules using self-supervision to accelerate convergence on chemical …

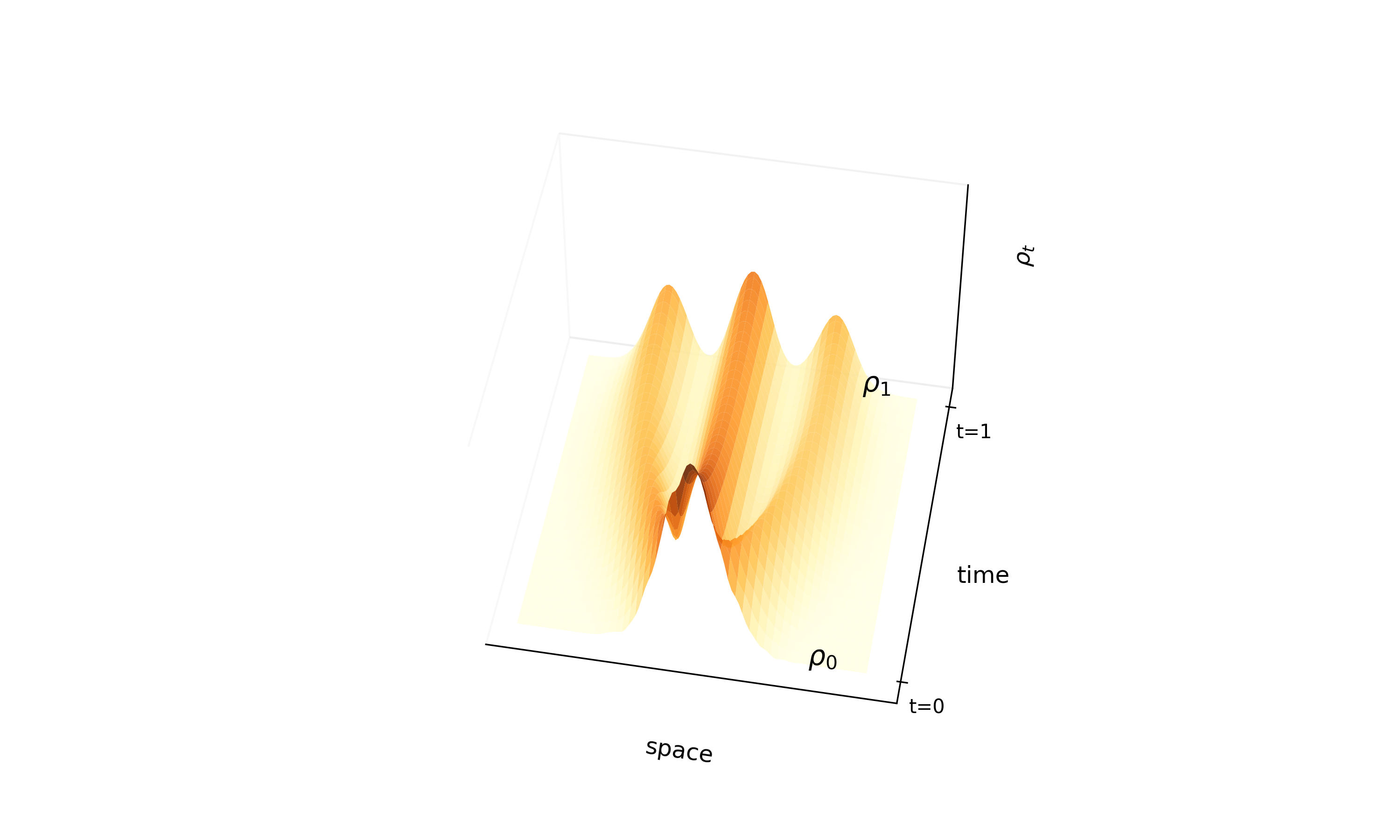

A continuous-time normalizing flow using stochastic interpolants and quadratic loss to bypass costly ODE …
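The quadratic objective can be illustrated with a minimal Monte Carlo sketch. It assumes a trigonometric interpolant x_t = cos(πt/2)·x0 + sin(πt/2)·x1, which is one possible choice and not necessarily the paper's. It scores a candidate velocity field `v` with the per-sample loss |v(t, x_t)|² − 2·(dx_t/dt)·v(t, x_t). Pointwise, this loss is minimized by the conditional expectation of dx_t/dt given x_t. Training needs only samples of (x0, x1, t), so no ODE is integrated. The names `interpolant` and `quadratic_loss` are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Vec = List[float]

def interpolant(x0: Sequence[float], x1: Sequence[float], t: float) -> Tuple[Vec, Vec]:
    """Trigonometric interpolant x_t and its time derivative dx_t/dt (assumed choice)."""
    a, b = math.cos(math.pi * t / 2), math.sin(math.pi * t / 2)
    da, db = -math.pi / 2 * b, math.pi / 2 * a  # derivatives of cos(pi t/2), sin(pi t/2)
    xt = [a * u + b * w for u, w in zip(x0, x1)]
    dxt = [da * u + db * w for u, w in zip(x0, x1)]
    return xt, dxt

def quadratic_loss(v: Callable[[float, Vec], Vec], data: Sequence[Sequence[float]],
                   rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of E[|v(t, x_t)|^2 - 2 * dx_t/dt . v(t, x_t)]."""
    rng = rng or random.Random(0)
    total = 0.0
    for x1 in data:
        x0 = [rng.gauss(0.0, 1.0) for _ in x1]  # base sample x0 ~ N(0, I)
        t = rng.random()                          # t ~ U[0, 1)
        xt, dxt = interpolant(x0, x1, t)
        pred = v(t, xt)
        total += sum(p * p - 2.0 * p * d for p, d in zip(pred, dxt))
    return total / len(data)
```

The loss differs from a plain regression onto dx_t/dt only by a term that does not depend on `v`. Minimizing either one therefore gives the same velocity field.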

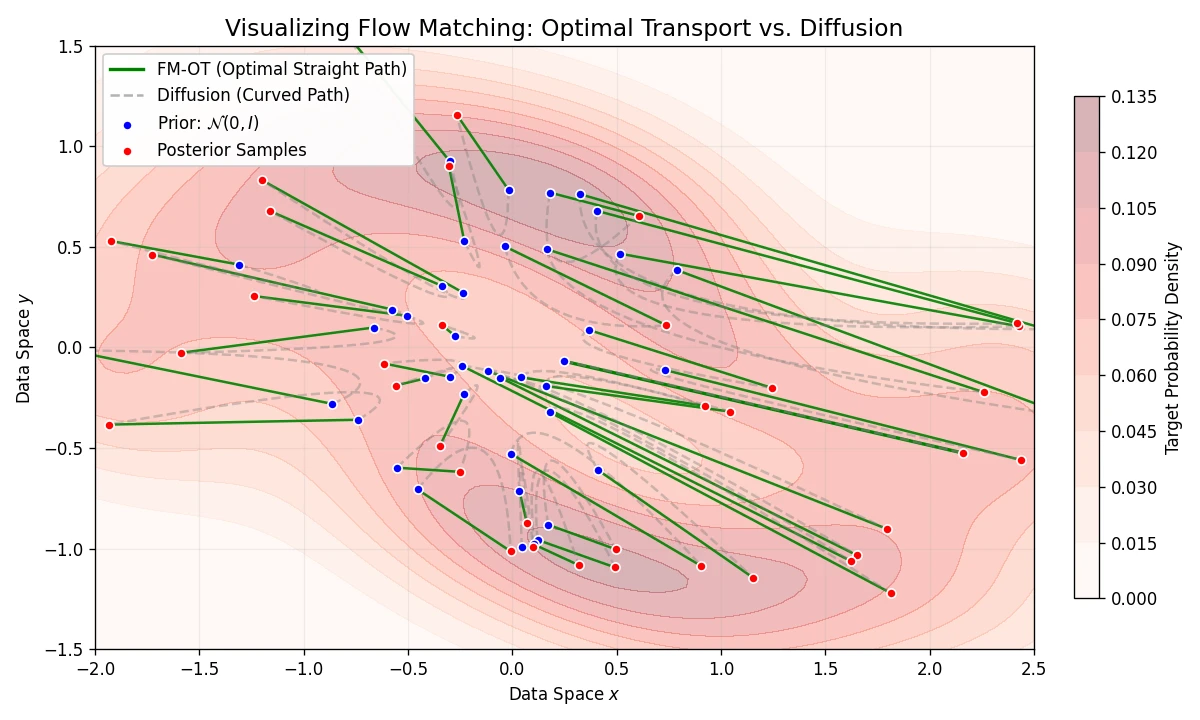

A simulation-free framework for training Continuous Normalizing Flows using Conditional Flow Matching and Optimal …
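Conditional flow matching regresses a velocity field onto a known per-sample target, so training is simulation-free. The minimal sketch below rests on three assumptions:

- source samples are standard Gaussian;
- source and data points are paired independently;
- the path is the straight line x_t = (1 − t)·x0 + t·x1 + σ·ε, with target velocity u_t = x1 − x0.

This straight-line path is a common choice. The optimal-transport ingredient, such as re-pairing x0 and x1 within a minibatch, is omitted. The names `cfm_pair` and `cfm_loss` are hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

Vec = List[float]

def cfm_pair(x0: Sequence[float], x1: Sequence[float], t: float,
             sigma: float, eps: Sequence[float]) -> Tuple[Vec, Vec]:
    """Point on the (optionally noise-smoothed) straight path and its target velocity."""
    xt = [(1.0 - t) * a + t * b + sigma * e for a, b, e in zip(x0, x1, eps)]
    ut = [b - a for a, b in zip(x0, x1)]  # d/dt of the straight-line path
    return xt, ut

def cfm_loss(v: Callable[[float, Vec], Vec], data: Sequence[Sequence[float]],
             sigma: float = 0.0, rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of E || v(t, x_t) - u_t ||^2 with independent pairing."""
    rng = rng or random.Random(0)
    total = 0.0
    for x1 in data:
        x0 = [rng.gauss(0.0, 1.0) for _ in x1]   # source sample x0 ~ N(0, I)
        eps = [rng.gauss(0.0, 1.0) for _ in x1]  # smoothing noise
        t = rng.random()
        xt, ut = cfm_pair(x0, x1, t, sigma, eps)
        pred = v(t, xt)
        total += sum((p - u) ** 2 for p, u in zip(pred, ut))
    return total / len(data)
```

Every term in the loss is computed in closed form from (x0, x1, t, ε). No ODE solve is needed until sampling time.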

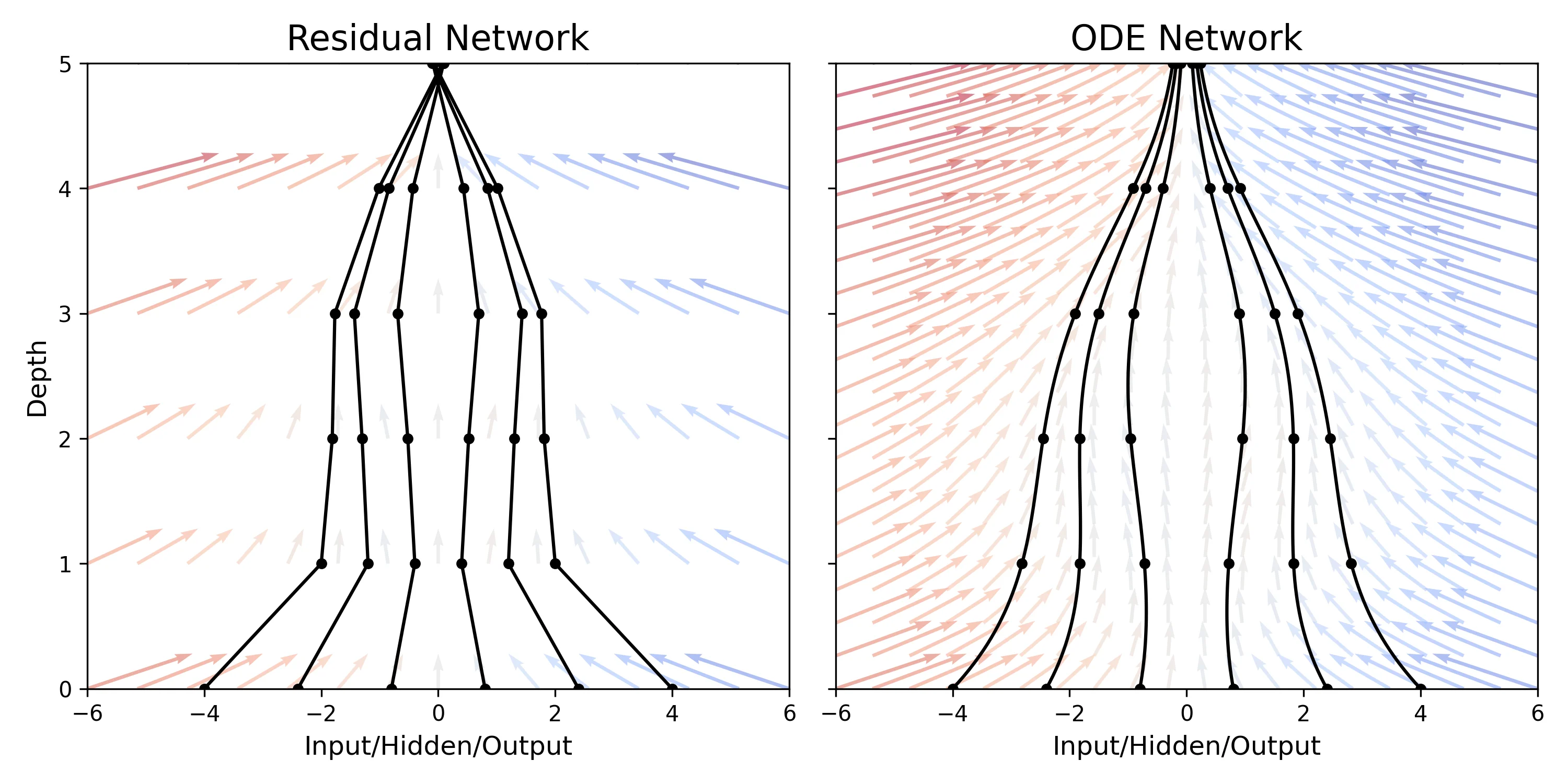

Introduces ODE-Nets, a continuous-depth neural network model parameterized by ODEs, enabling constant memory …
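In an ODE-Net the hidden state follows dh/dt = f(h, t, θ), and the block's output is the solution at the end time. The minimal forward-pass sketch below uses a fixed-step classical Runge-Kutta (RK4) solver and a hypothetical one-layer tanh dynamics function (`make_tanh_dynamics`). The paper relies on adaptive solvers and on the adjoint method for its constant-memory gradients. Neither is implemented here.

```python
import math
from typing import Callable, List, Sequence

Vec = List[float]
Dynamics = Callable[[Vec, float], Vec]

def rk4_odeint(f: Dynamics, h0: Sequence[float], t0: float, t1: float,
               steps: int = 100) -> Vec:
    """Integrate dh/dt = f(h, t) from t0 to t1 with fixed-step classical RK4."""
    h = list(h0)
    dt = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = f(h, t)
        k2 = f([x + 0.5 * dt * k for x, k in zip(h, k1)], t + 0.5 * dt)
        k3 = f([x + 0.5 * dt * k for x, k in zip(h, k2)], t + 0.5 * dt)
        k4 = f([x + dt * k for x, k in zip(h, k3)], t + dt)
        h = [x + dt / 6.0 * (a + 2 * b + 2 * c + d)
             for x, a, b, c, d in zip(h, k1, k2, k3, k4)]
        t += dt
    return h

def make_tanh_dynamics(W: Sequence[Sequence[float]], b: Sequence[float]) -> Dynamics:
    """Hypothetical dynamics f(h, t) = tanh(W h + b); t is ignored in this toy."""
    def f(h: Vec, t: float) -> Vec:
        return [math.tanh(sum(w * x for w, x in zip(row, h)) + bi)
                for row, bi in zip(W, b)]
    return f

# A "continuous-depth layer": map the input h(0) to the output h(1).
f = make_tanh_dynamics([[0.0, -1.0], [1.0, 0.0]], [0.0, 0.0])
h1 = rk4_odeint(f, [1.0, 0.0], 0.0, 1.0)
```

Depth is replaced by integration time, so "more layers" becomes "more solver steps". An adaptive solver would choose that number per input.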

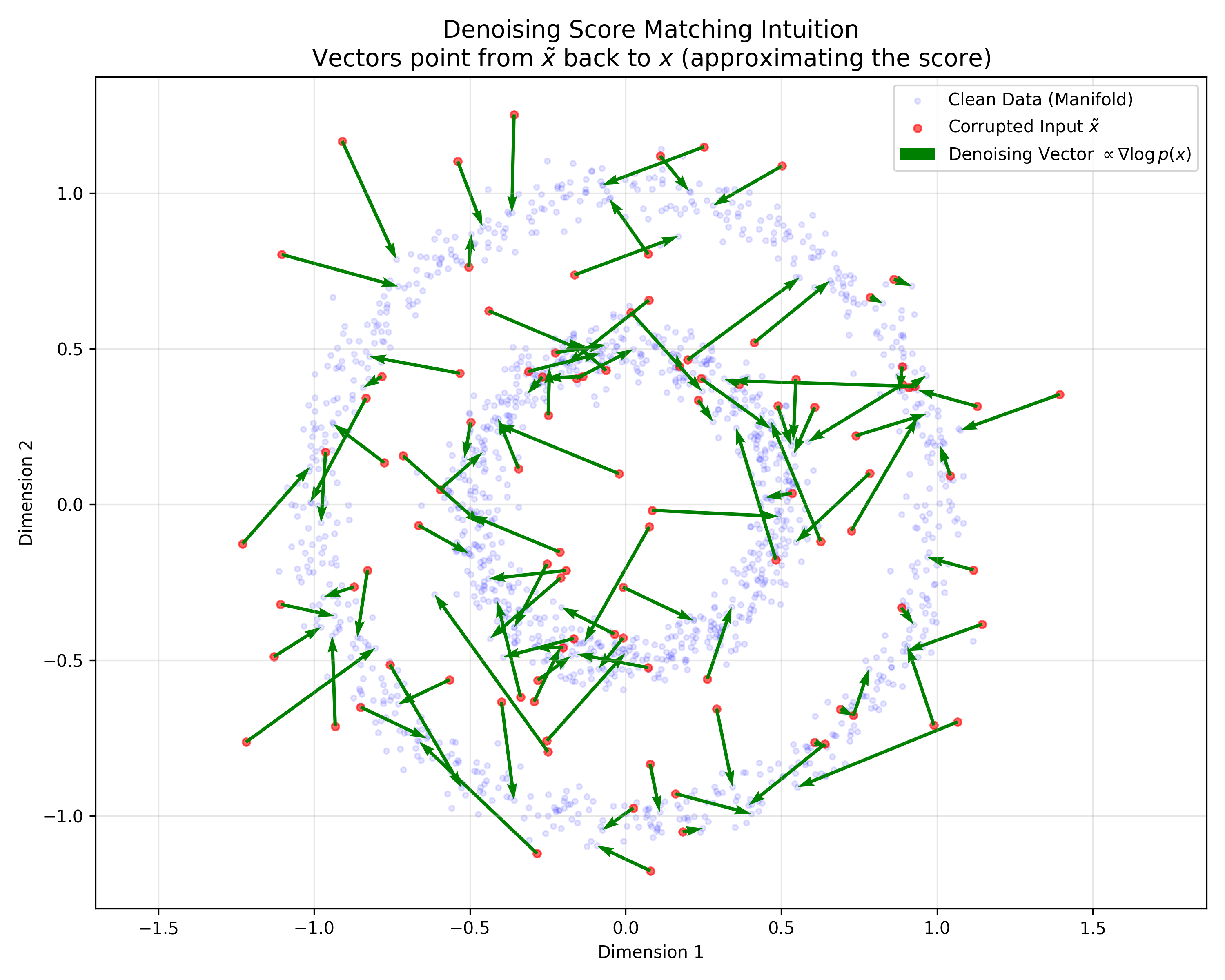

Theoretical paper proving the equivalence between training Denoising Autoencoders and performing Score Matching on a …
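The denoising objective in this equivalence reduces to a simple regression. Corrupt a data point x with Gaussian noise, x̃ = x + σε, and fit a score model s(x̃) to the conditional score ∇ log q_σ(x̃ | x) = −(x̃ − x)/σ². Minimizing the expected squared error is equivalent, up to a constant independent of s, to explicit score matching against the noise-smoothed data density q_σ(x̃). That density is a Parzen estimate when the data are a finite sample. The sketch below is minimal, and the name `dsm_loss` is hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence

Vec = List[float]

def dsm_loss(score: Callable[[Vec], Vec], data: Sequence[Sequence[float]],
             sigma: float, rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of E || s(x~) + (x~ - x) / sigma^2 ||^2."""
    rng = rng or random.Random(0)
    total = 0.0
    for x in data:
        x_noisy = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        # Conditional score of the Gaussian corruption kernel q_sigma(x~ | x).
        target = [-(xn - xi) / sigma ** 2 for xn, xi in zip(x_noisy, x)]
        pred = score(x_noisy)
        total += sum((p - g) ** 2 for p, g in zip(pred, target))
    return total / len(data)
```

As a sanity check, suppose every data point sits at the origin. The smoothed density is then N(0, σ²I), and its exact score −x̃/σ² drives the loss to zero.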

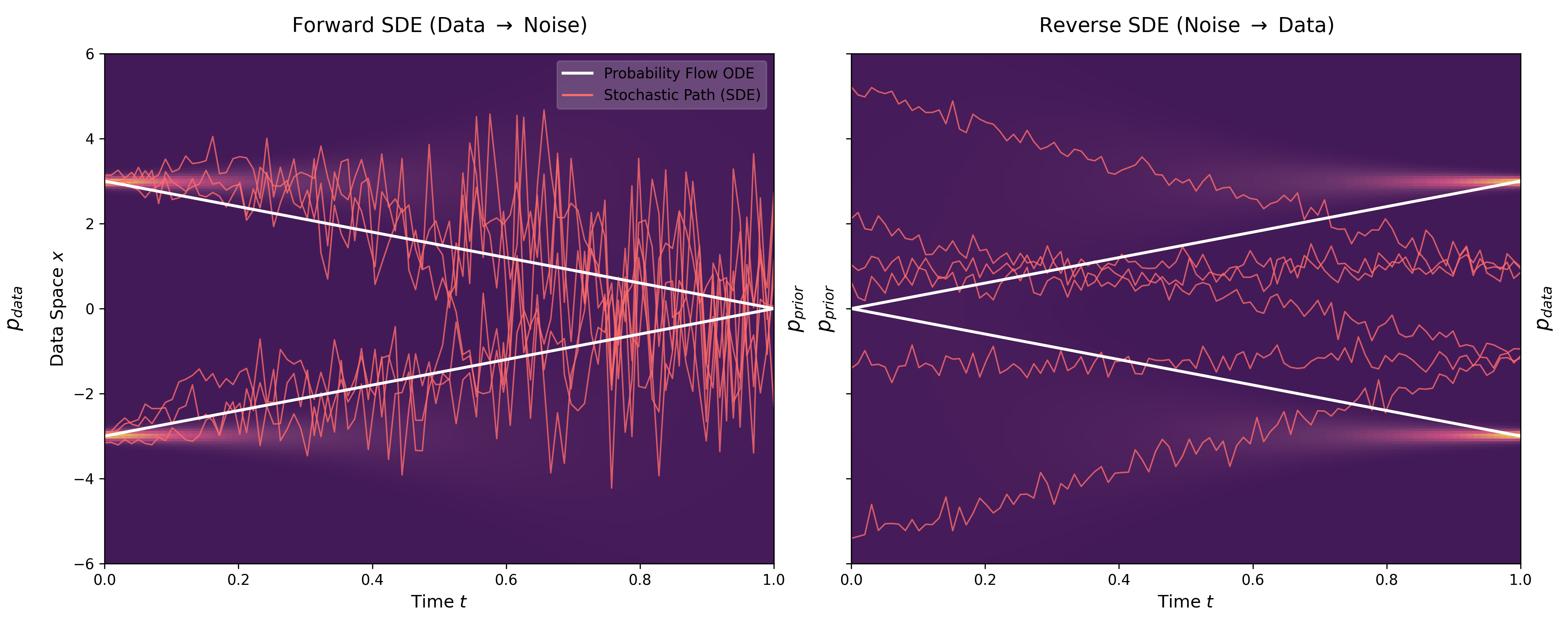

Unified SDE framework for score-based generative models, introducing Predictor-Corrector samplers and achieving SOTA on …
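A Predictor-Corrector sampler alternates two steps. A numerical step of the reverse-time SDE (the predictor) is followed by a few Langevin MCMC steps (the corrector) at the new noise level. The minimal one-dimensional sketch below targets a variance-exploding (VE) SDE and uses a reverse-diffusion predictor. Two simplifications are assumptions and not the paper's exact procedure:

- the corrector uses a fixed per-level step ε_i = 2·snr²·σ_i² instead of an adaptive norm-ratio rule;
- the toy score is exact because the data are N(0, 1), whose VE marginals are N(0, 1 + σ²).

The names `pc_sample_ve` and `gaussian_score` are hypothetical.

```python
import math
import random
from typing import Callable, List

def pc_sample_ve(score_fn: Callable[[float, float], float], sigmas: List[float],
                 n_samples: int, snr: float = 0.16, n_corrector: int = 1,
                 seed: int = 0) -> List[float]:
    """Predictor-Corrector sampling for a 1-D VE SDE; sigmas must be decreasing."""
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, sigmas[0]) for _ in range(n_samples)]  # prior N(0, sigma_max^2)
    for s_prev, s_next in zip(sigmas[:-1], sigmas[1:]):
        delta = s_prev ** 2 - s_next ** 2   # noise variance removed in this step
        eps = 2.0 * snr ** 2 * s_next ** 2  # simplified corrector step size (assumption)
        new_xs = []
        for x in xs:
            # Predictor: reverse-diffusion step from noise level s_prev to s_next.
            x = x + delta * score_fn(x, s_prev) + math.sqrt(delta) * rng.gauss(0.0, 1.0)
            # Corrector: Langevin steps targeting the marginal at s_next.
            for _ in range(n_corrector):
                x = x + eps * score_fn(x, s_next) + math.sqrt(2.0 * eps) * rng.gauss(0.0, 1.0)
            new_xs.append(x)
        xs = new_xs
    return xs

def gaussian_score(x: float, sigma: float) -> float:
    """Exact score of N(0, 1 + sigma^2), the VE marginal when the data are N(0, 1)."""
    return -x / (1.0 + sigma ** 2)

N = 500
sigmas = [10.0 * (0.01 / 10.0) ** (i / (N - 1)) for i in range(N)]  # geometric 10 -> 0.01
samples = pc_sample_ve(gaussian_score, sigmas, n_samples=1000)
```

With an exact score, the samples should have roughly zero mean and unit variance. A learned model would replace `gaussian_score`.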