Efficient DFT Hamiltonian Prediction via Adaptive Sparsity

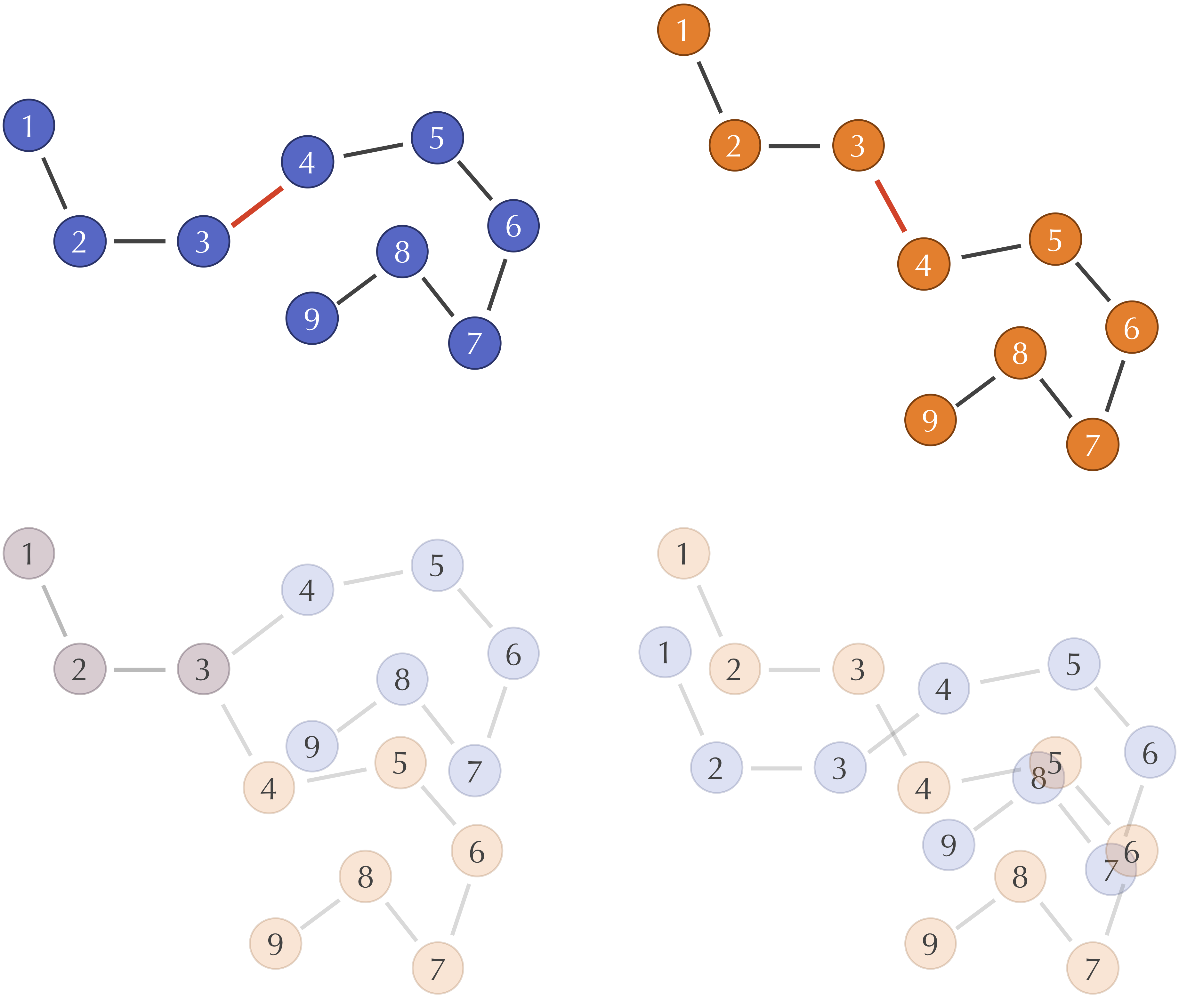

Luo et al. introduce SPHNet, using adaptive sparsity to dramatically improve SE(3)-equivariant Hamiltonian prediction …

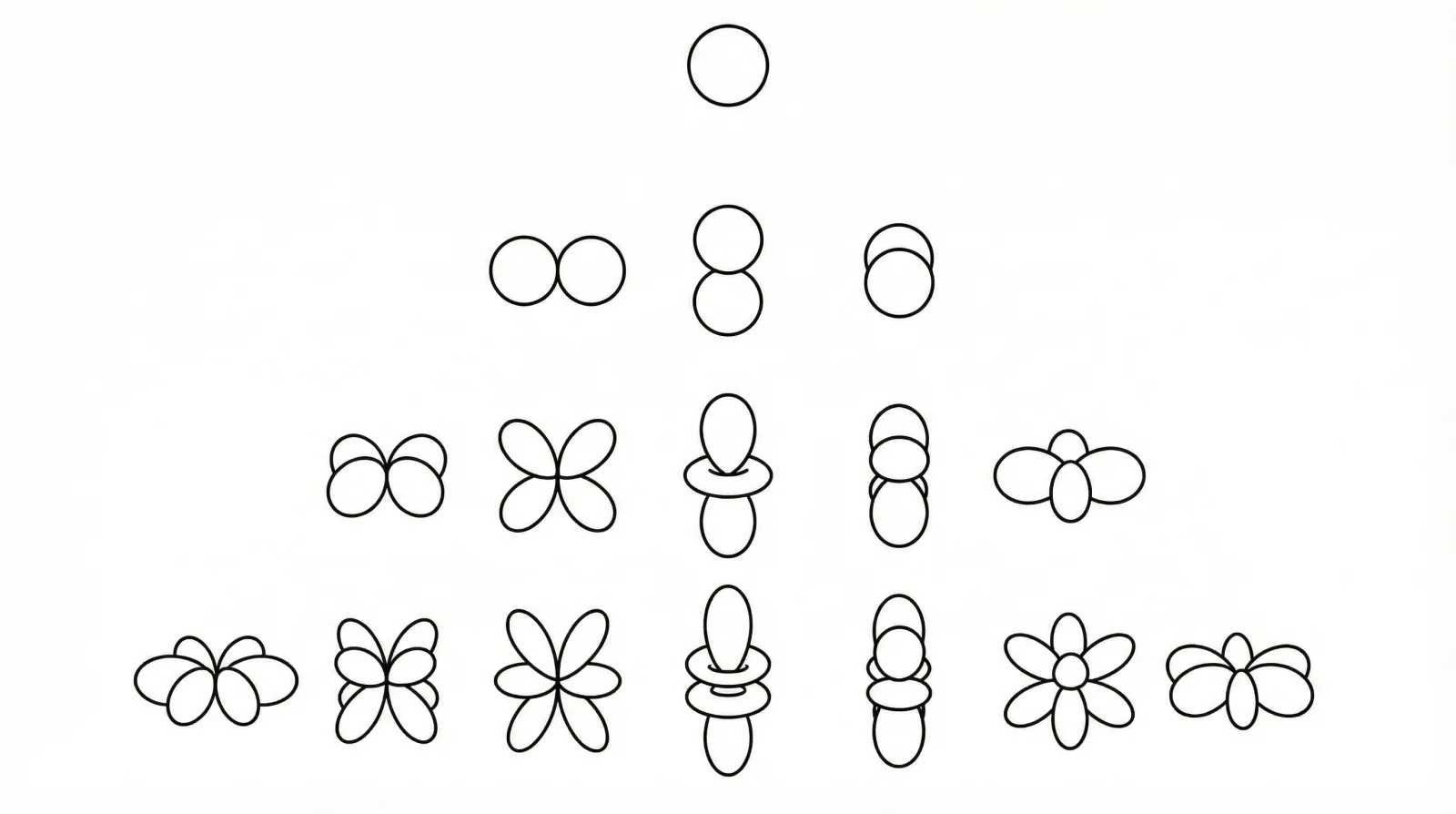

Torrie and Valleau's 1977 paper introducing Umbrella Sampling, an importance sampling technique for Monte Carlo …

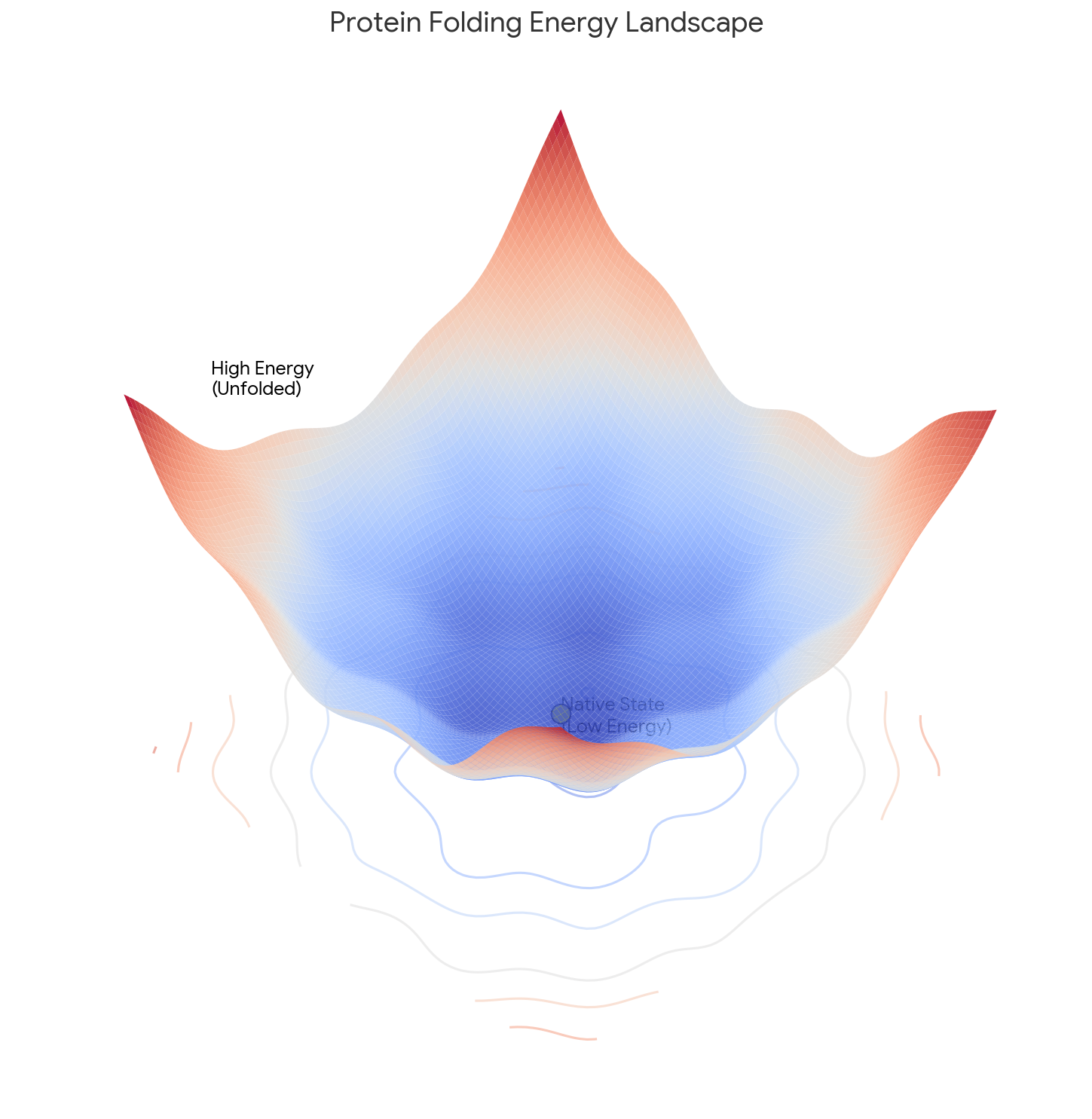

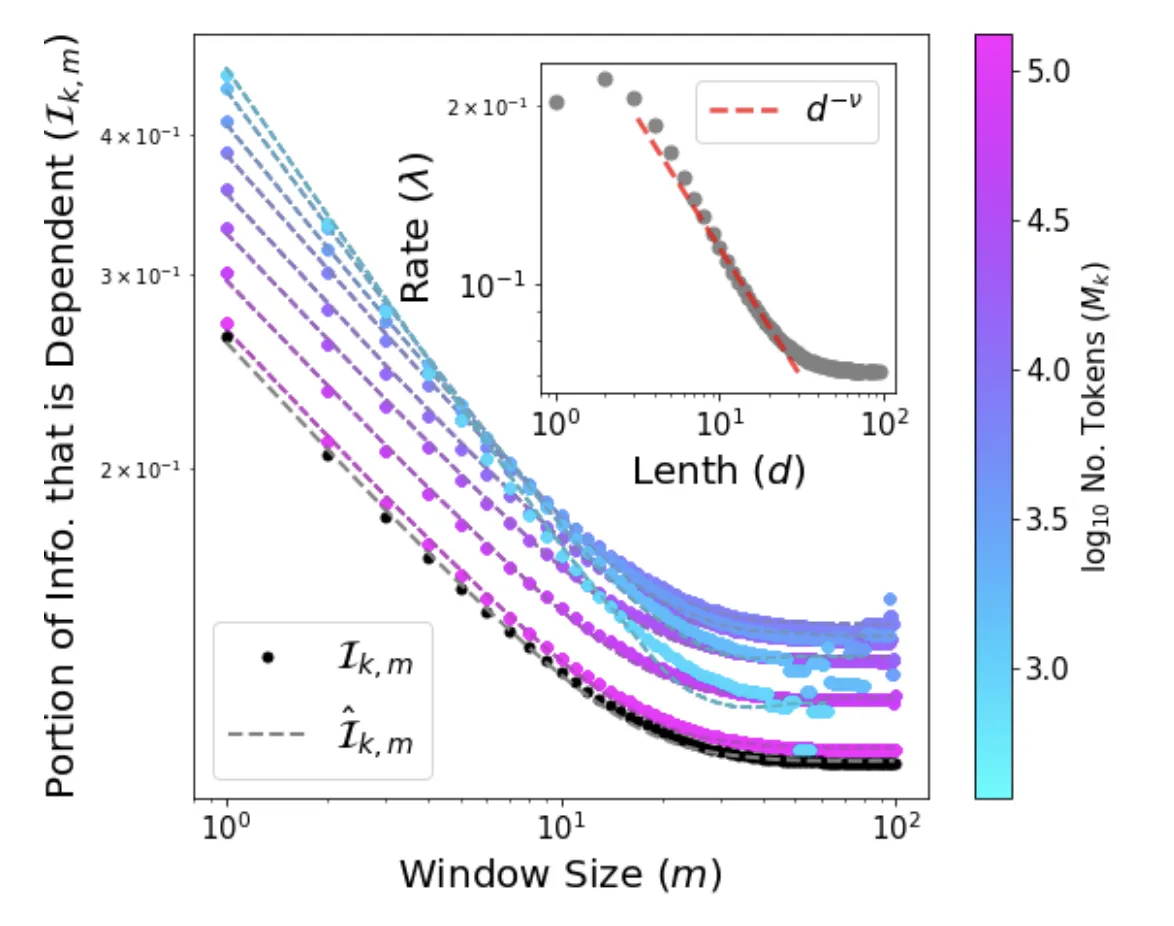

Production-grade Word2Vec in PyTorch with vectorized Hierarchical Softmax, Negative Sampling, and torch.compile support.

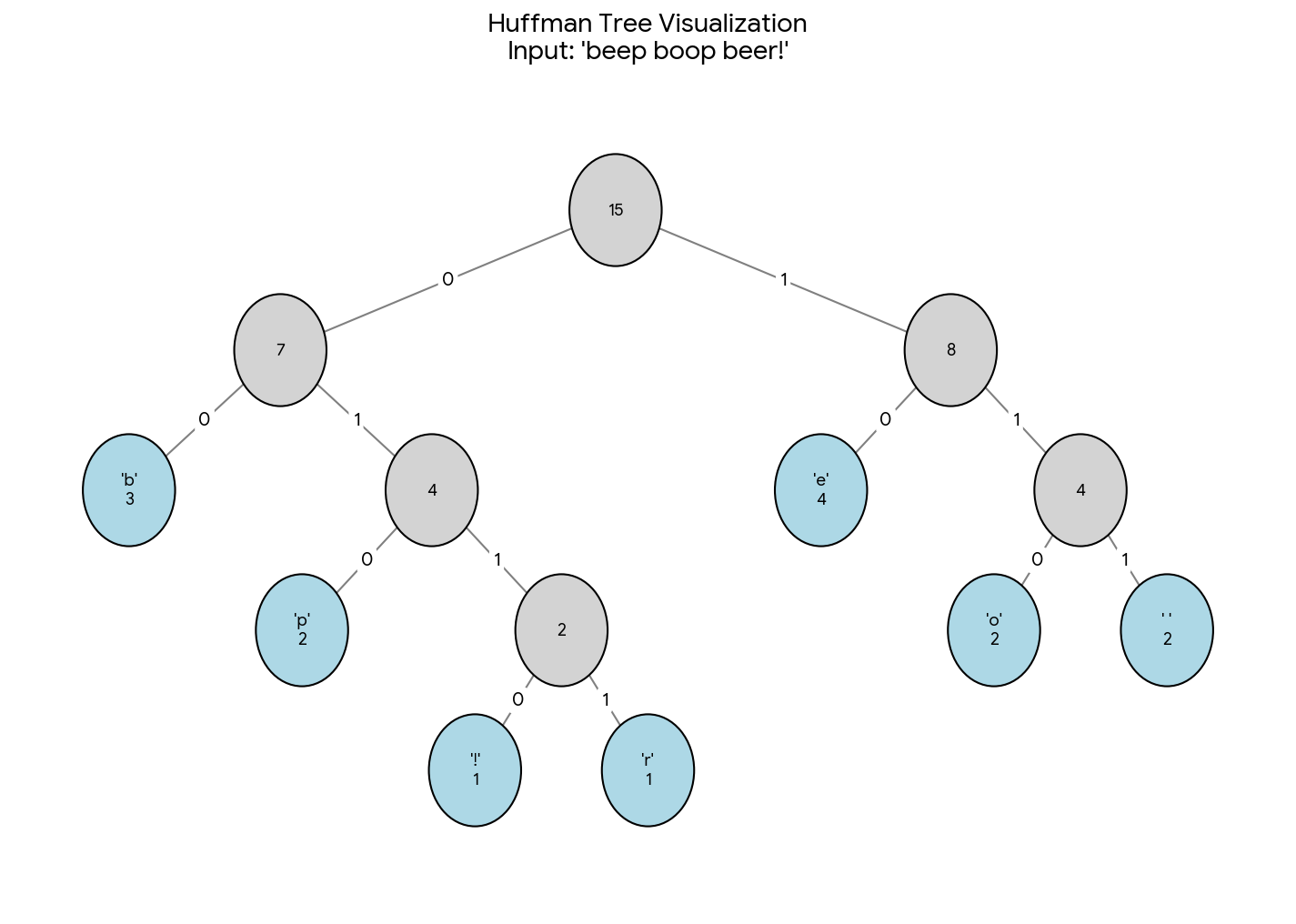

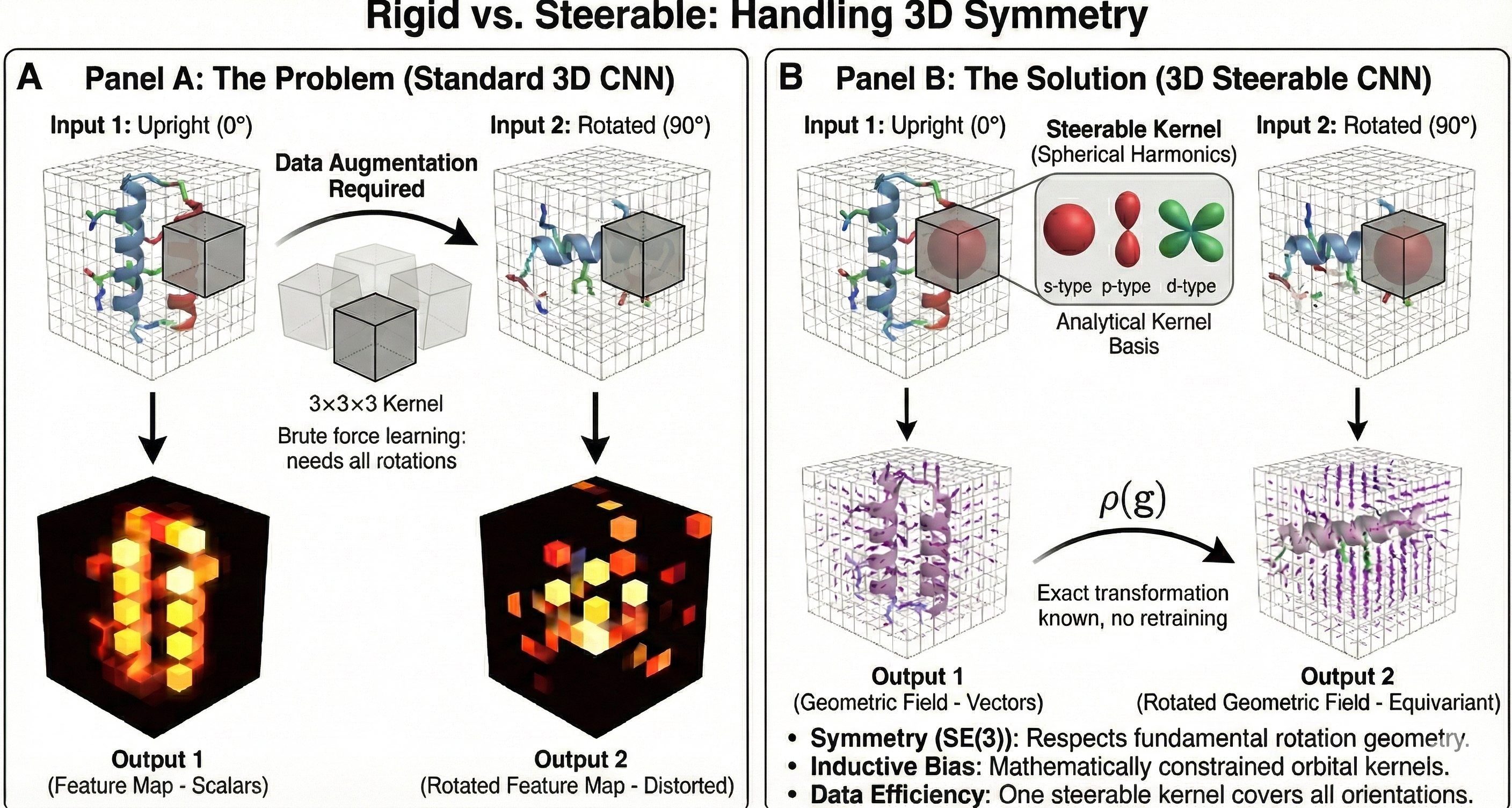

Weiler et al.'s NeurIPS 2018 paper introducing SE(3)-equivariant CNNs for volumetric data using group theory and …

Learn about the Kabsch algorithm for optimal point alignment with implementations in NumPy, PyTorch, TensorFlow, and JAX …

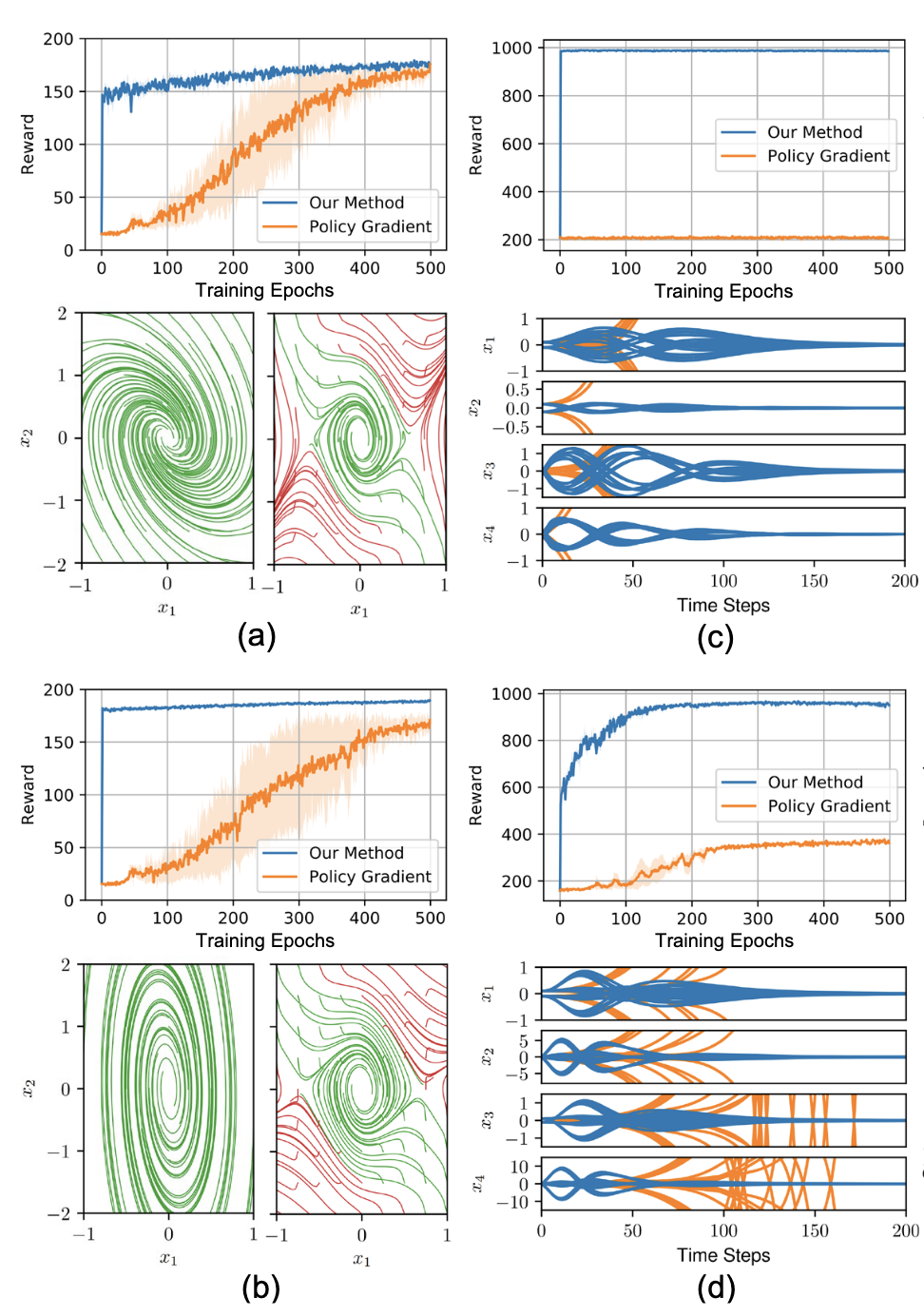

PyTorch IQCRNN enforcing stability guarantees on RNNs via Integral Quadratic Constraints and semidefinite programming.

Analytical derivation of Word2Vec's softmax objective factorization and a new framework for detecting semantic bias in …

Explore five key dimensions of multi-armed bandit problems to help practitioners better navigate the exploration-exploitation …

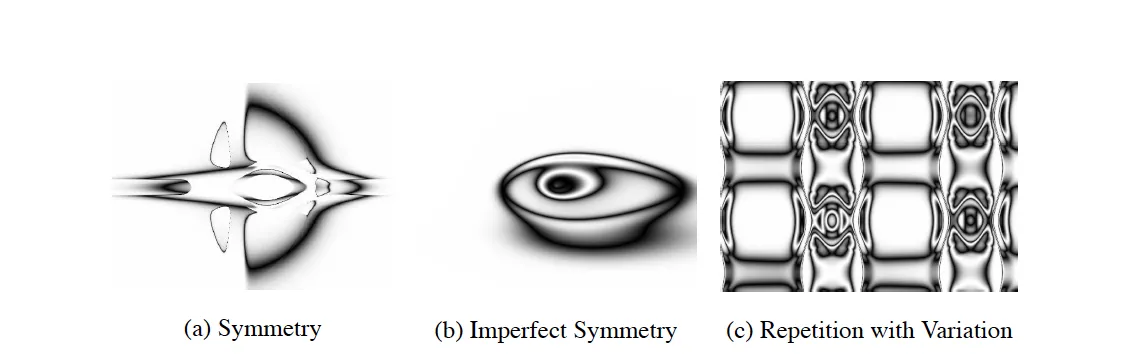

How HyperNEAT uses indirect encoding and geometric patterns to evolve large-scale neural networks with biological …

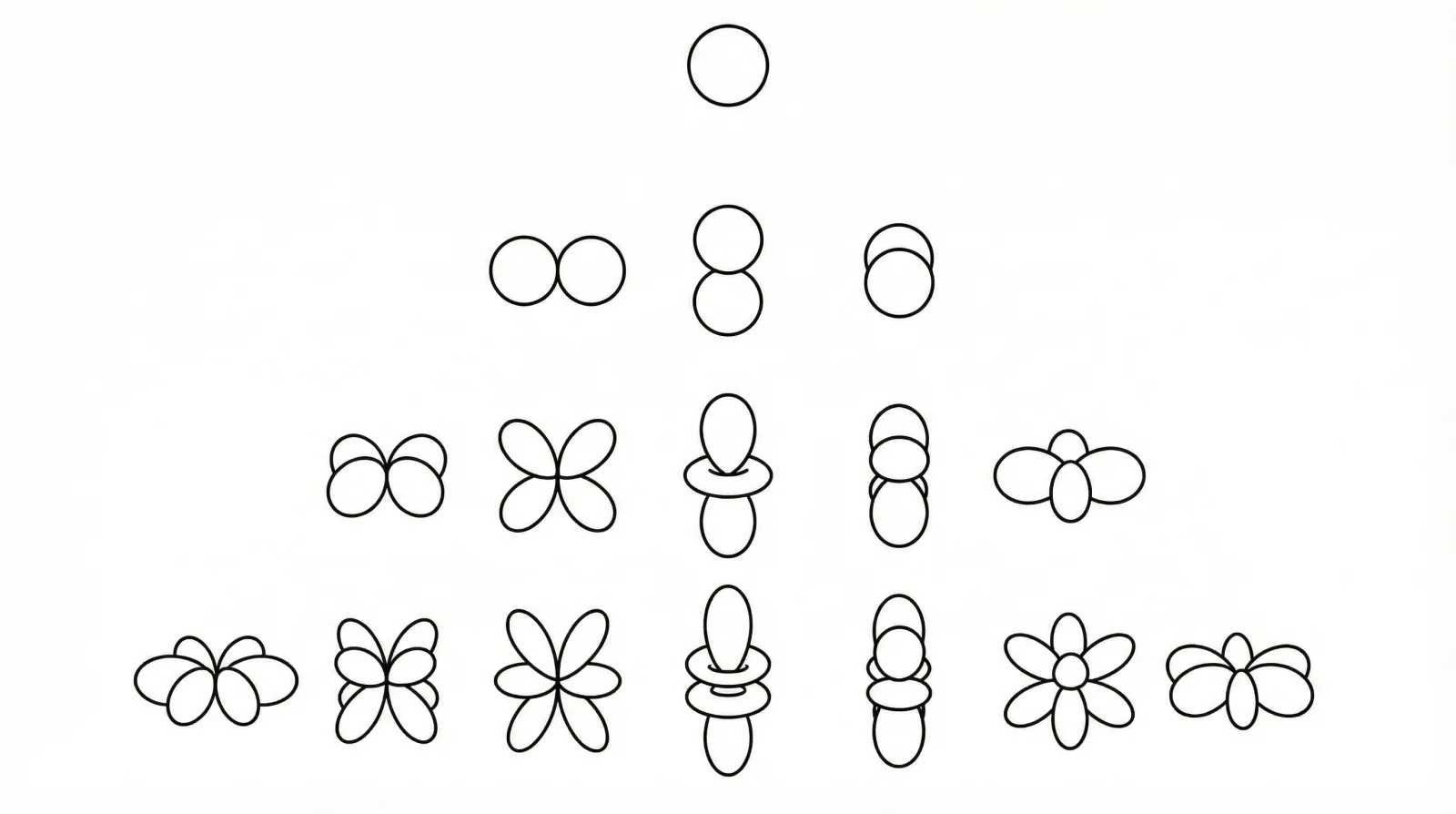

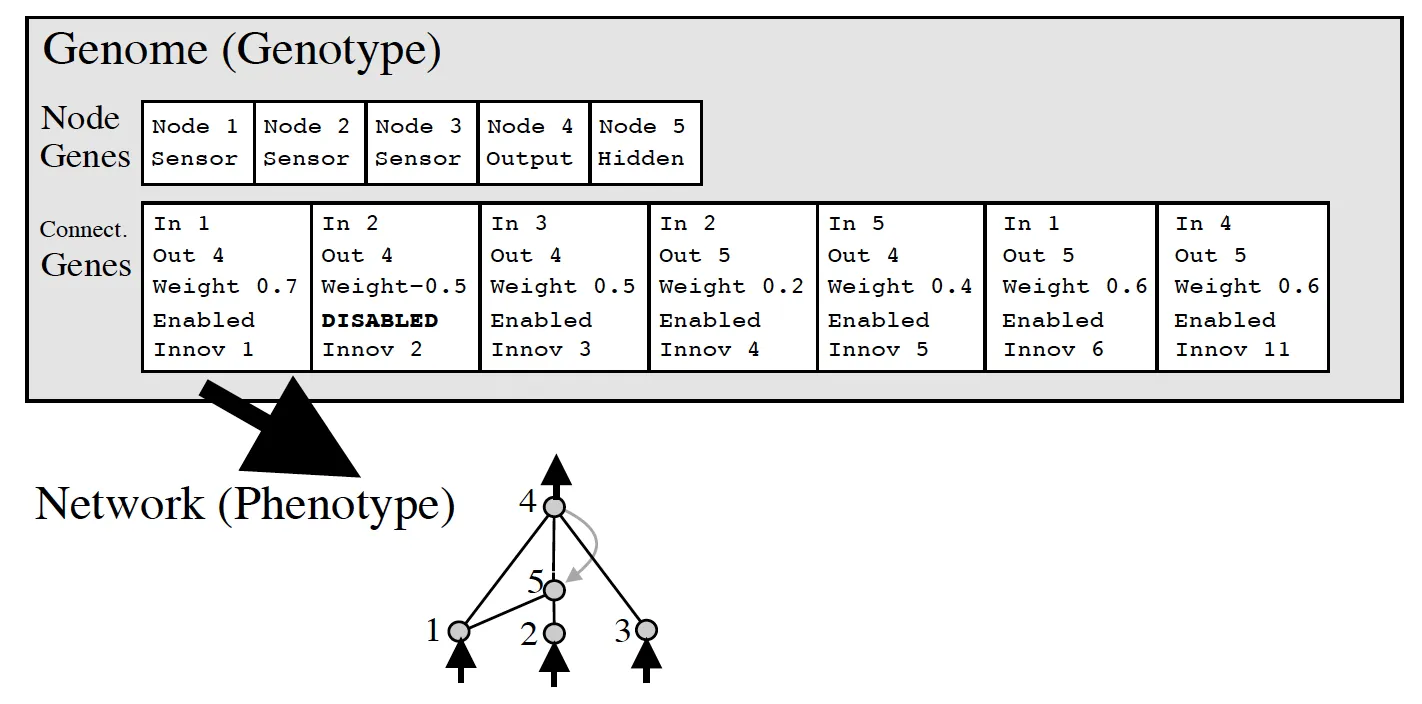

Learn about NEAT's approach to evolving neural networks: automatic topology design, historical markings, and speciation …

Discover how machine learning actually works through three fundamental approaches, explained with everyday examples you …

Learn about the foundations of AI logic: syntax, semantics, entailment, and inference algorithms for building …