Abstract

Social media research is often siloed by platform, with tools built specifically for Twitter’s flat structure or Reddit’s tree structure. This fragmentation makes cross-platform analysis difficult. In this work, I introduce PyConversations, an open-source Python package that normalizes data from Twitter, Facebook, Reddit, and 4chan into a single, platform-agnostic data model.

Leveraging this tool, I processed over 308 million posts to analyze the structural “shape” of online conversations. I then evaluated the efficacy of domain-adaptive pre-training (DAPT) for Transformer-based language models, finding that training on specific toxic domains (like 4chan) significantly boosts performance on hate-speech detection tasks.

The Engineering Problem: Data Normalization

Social media platforms impose different structural constraints on discourse, making it difficult to feed heterogeneous data into a single ML pipeline:

- Twitter: Technically allows unbounded reply depth, but functionally operates as a flat stream or shallow tree.

- Facebook: Enforces a hard limit of two depth levels (comments and replies), resulting in “short and fat” conversation trees.

- Reddit & 4chan: Allow for deep, branching tree structures.

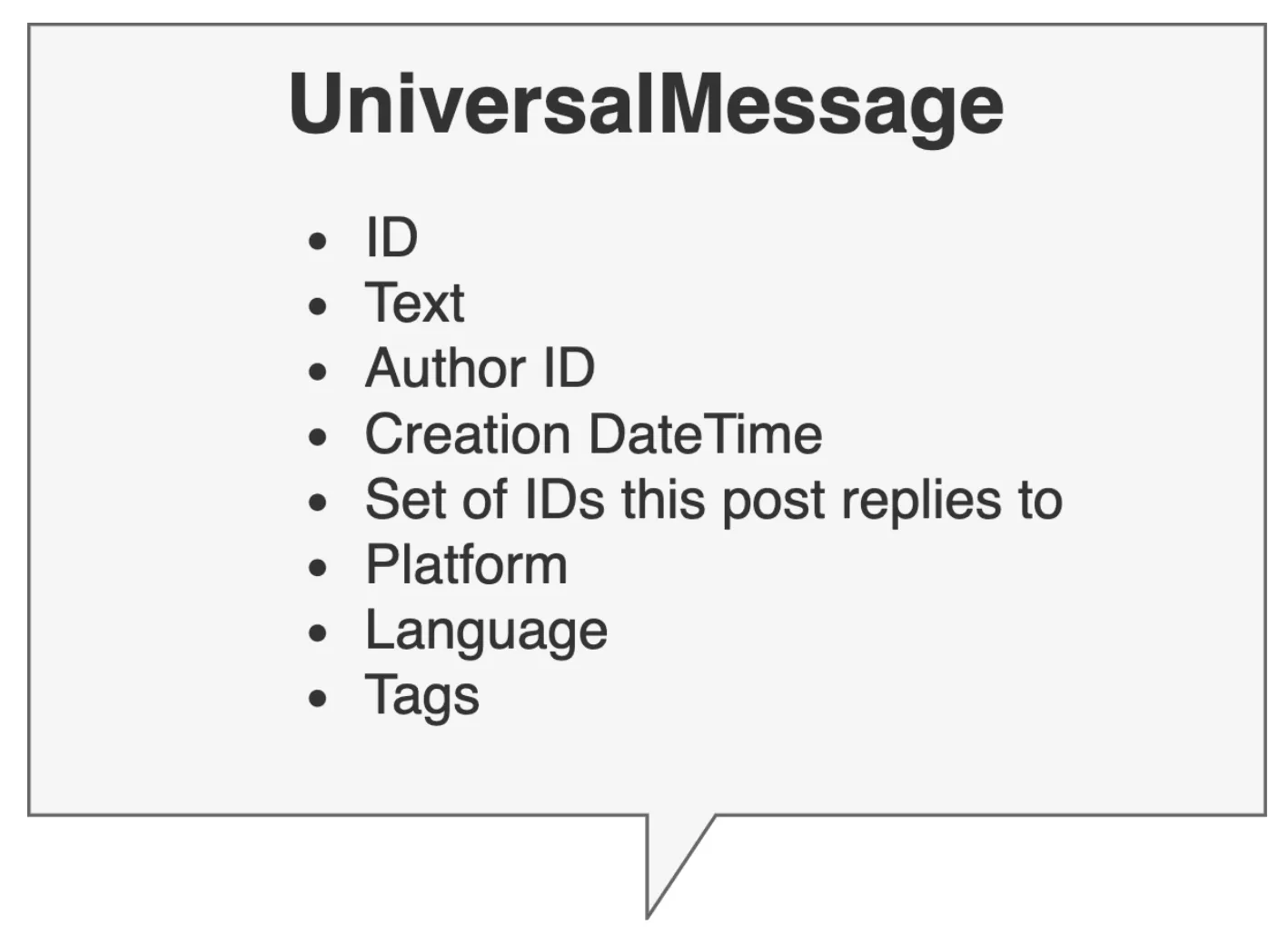

To solve this, I designed a Universal Message Schema and the PyConversations library. This system ingests raw dumps from these disparate sources and maps them to a unified Directed Acyclic Graph (DAG) format, preserving the parent-child relationships regardless of the source platform’s constraints.
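As a minimal sketch of the idea, and assuming nothing about PyConversations' real classes: the hypothetical `UniversalPost` record below holds the fields a unified schema needs. `from_pushshift_comment` shows how one source could be mapped onto it, here Reddit comments in the Pushshift dump format using its `id`, `parent_id`, `body`, `author`, and `created_utc` fields. `children_index` then inverts the reply edges so the graph can be walked from the root. All names are illustrative and are not the library's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class UniversalPost:
    """Hypothetical platform-agnostic post record (not the PyConversations API)."""

    uid: str
    platform: str
    text: str
    author: str | None
    created_at: datetime
    # Parent ids are a set so one post may reply to several earlier posts
    # (e.g. 4chan cross-quotes), which makes the general structure a DAG.
    reply_to: frozenset[str] = frozenset()


def from_pushshift_comment(raw: dict) -> UniversalPost:
    """Map one Pushshift-style Reddit comment dict onto UniversalPost."""
    # Reddit prefixes parent ids with a type tag: "t3_" = submission, "t1_" = comment.
    parent = raw["parent_id"].split("_", 1)[1]
    return UniversalPost(
        uid=raw["id"],
        platform="reddit",
        text=raw["body"],
        author=raw.get("author"),
        created_at=datetime.fromtimestamp(int(raw["created_utc"]), tz=timezone.utc),
        reply_to=frozenset({parent}),
    )


def children_index(posts: list[UniversalPost]) -> dict[str, list[str]]:
    """Invert reply_to edges so a conversation can be walked from the root down."""
    index: dict[str, list[str]] = {post.uid: [] for post in posts}
    for post in posts:
        for parent in post.reply_to:
            if parent in index:  # skip parents that are missing from the dump
                index[parent].append(post.uid)
    return index
```

Storing parents as a set is what lets the same record describe tree-shaped platforms (exactly one parent) and posts that answer several others at once.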

Key Contributions

- PyConversations Library: An open-source package for robust conversational analysis, featuring graph-based traversal and filtering.

- Massive Dataset Analysis: Processed 308 million posts spanning 15.8 million conversations, one of the largest comparative cross-platform analyses.

- Structural Insights: Quantified how UI constraints shape human behavior. For instance, Facebook’s depth limit forces users to “bunch” comments, creating uniquely wide conversation trees compared to Reddit’s deep, narrow threads.

- Domain Adaptation Experiments: Further pre-trained RoBERTa on platform-specific slices (e.g., SocBERTa-4chan) before fine-tuning, demonstrating that exposing models to toxic domains improved hate-speech detection F1 scores by over 5 points.
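The graph-based traversal and filtering named in the contributions can be pictured with two small hypothetical helpers (they are not taken from PyConversations). Given a children index mapping each post id to its direct replies, `reply_chains` walks the conversation depth-first and yields every root-to-leaf reply chain. `long_chains` then filters those chains by length, for example to keep only sustained back-and-forth exchanges. The sketch assumes the graph is acyclic.

```python
from __future__ import annotations

from collections.abc import Iterator


def reply_chains(children: dict[str, list[str]], root: str) -> Iterator[list[str]]:
    """Yield each root-to-leaf reply chain, depth-first, left to right.

    Assumes an acyclic graph; on a DAG, a post reachable by two routes
    appears in both chains.
    """
    stack: list[list[str]] = [[root]]
    while stack:
        path = stack.pop()
        replies = children.get(path[-1], [])
        if not replies:
            yield path
            continue
        # Push in reverse so the first reply is explored first.
        for reply in reversed(replies):
            stack.append(path + [reply])


def long_chains(children: dict[str, list[str]], root: str, min_posts: int) -> list[list[str]]:
    """Keep only chains containing at least `min_posts` posts (root included)."""
    return [chain for chain in reply_chains(children, root) if len(chain) >= min_posts]
```

Using an explicit stack rather than recursion keeps very deep threads from hitting Python's recursion limit.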

Structural Analysis Findings

By treating conversations as graphs, we uncovered distinct topological signatures for each platform:

The “Shape” of Discourse

We measured the width (the maximum number of posts at any single depth level) and the depth (the maximum distance, in replies, from the root post) of each conversation tree.
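Under these definitions, both metrics fall out of a single breadth-first search from the root. The sketch below assumes the conversation is given as a hypothetical `children` dict (post id to list of reply ids). When a post has several parents, BFS assigns it its shortest distance from the root; that is one possible convention, not necessarily the thesis's. The two toy threads are invented for illustration and are not drawn from the dataset.

```python
from __future__ import annotations

from collections import Counter, deque


def width_and_depth(children: dict[str, list[str]], root: str) -> tuple[int, int]:
    """Return (width, depth) for the conversation rooted at `root`.

    width: the largest number of posts found at any single depth level.
    depth: the largest distance, in reply edges, from the root post.
    """
    level = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for kid in children.get(node, []):
            if kid not in level:  # BFS: the first visit is the shortest distance
                level[kid] = level[node] + 1
                queue.append(kid)
    posts_per_level = Counter(level.values())
    return max(posts_per_level.values()), max(level.values())


# Toy Facebook-like thread: 3 comments with 2 replies each ("short and fat").
facebook_like = {"r": ["c1", "c2", "c3"], "c1": ["x1", "x2"],
                 "c2": ["x3", "x4"], "c3": ["x5", "x6"]}
# Toy Reddit-like thread: one long reply chain ("deep and narrow").
reddit_like = {"r": ["a"], "a": ["b"], "b": ["c"], "c": ["d"]}

print(width_and_depth(facebook_like, "r"))  # (6, 2)
print(width_and_depth(reddit_like, "r"))    # (1, 4)
```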

- Facebook exhibited a “short and fat” topology due to its 2-level nesting limit.

- 4chan threads were surprisingly shallow despite having no depth limit. This suggests that the platform’s ephemerality (threads are deleted quickly) and its “bump limit” mechanic (past a set number of replies, new posts no longer push a thread back to the top of the board) discourage long-term dialogue, though the difficulty of scraping such a transient platform also contributes to this topology.

- Reddit produced the deepest and most developed tree structures, with “good faith” communities like r/ChangeMyView showing distinct patterns of sustained engagement.

Information Density

We analyzed Innovation Rate, a measure of how quickly a text introduces new vocabulary. We found that Twitter threads have negative innovation rates (indicating high novelty per token), likely a consequence of the strict character limit. In contrast, Reddit posts showed higher redundancy, typical of longer-form essay writing.
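The text does not define how Innovation Rate is computed, so the sketch below swaps in a related, standard quantity purely for illustration: the Heaps'-law exponent β in V(n) ≈ K·n^β, fit by least squares on the vocabulary growth curve (distinct word types after n tokens). It is not the thesis's metric and does not share its sign convention. It only shows one common way to measure "how quickly a text introduces new vocabulary". The helper names and the simple `\w+` tokenizer are assumptions.

```python
import math
import re


def vocabulary_growth(text: str) -> list[int]:
    """Number of distinct lowercase word types seen after each token."""
    seen: set = set()
    curve = []
    for token in re.findall(r"\w+", text.lower()):
        seen.add(token)
        curve.append(len(seen))
    return curve


def heaps_exponent(curve: list) -> float:
    """Least-squares slope of log V(n) against log n (Heaps' beta).

    Near 1.0: almost every token is new. Near 0.0: heavy repetition.
    """
    if len(curve) < 2:
        raise ValueError("need at least two tokens")
    xs = [math.log(n) for n in range(1, len(curve) + 1)]
    ys = [math.log(v) for v in curve]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var
```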

Representation Learning & Domain Adaptation

We experimented with “Warm-Start” tuning: taking a standard RoBERTa model and further pre-training it on platform-specific data before fine-tuning it on downstream tasks from the TweetEval benchmark.
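The text does not state which objective was used during the extra pre-training stage. Assuming it reused RoBERTa's standard masked-language-modeling objective, the core data step is dynamic masking. Each time a sequence is seen, 15% of its tokens are selected; 80% of those become the mask token, 10% a random token, and 10% stay unchanged, and only the selected positions contribute to the loss. The plain-Python function below sketches that step. The name `mask_tokens` and its parameters are hypothetical; Hugging Face's `DataCollatorForLanguageModeling` applies the same scheme to tensors.

```python
from __future__ import annotations

import random
from collections.abc import Sequence

MASK_PROB = 0.15  # fraction of tokens selected for prediction (BERT/RoBERTa default)
IGNORE = -100     # PyTorch CrossEntropyLoss's default ignore_index


def mask_tokens(
    token_ids: Sequence[int],
    mask_id: int,
    vocab_size: int,
    rng: random.Random,
    special_ids: frozenset[int] = frozenset(),
) -> tuple[list[int], list[int]]:
    """Apply BERT/RoBERTa-style masking to one sequence of token ids.

    Returns (inputs, labels): labels hold the original id at selected
    positions and IGNORE everywhere else, so only those positions are scored.
    """
    inputs = list(token_ids)
    labels = [IGNORE] * len(inputs)
    for i, tok in enumerate(token_ids):
        if tok in special_ids or rng.random() >= MASK_PROB:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:    # 80% of selected tokens: replace with the mask token
            inputs[i] = mask_id
        elif roll < 0.9:  # 10%: replace with a random vocabulary token
            inputs[i] = rng.randrange(vocab_size)
        # remaining 10%: leave the original token in place
    return inputs, labels
```

Calling it again on the same sentence draws fresh positions, which is what makes the masking dynamic rather than fixed once at preprocessing time.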

- The “Underwhelming” Reality: For most tasks (sentiment analysis, emotion recognition), domain-specific pre-training yielded only marginal gains (1-3 points). This suggests that general pre-training is sufficient for general NLP tasks.

- The Toxic Exception: Hate-speech detection was the one clear exception. Models pre-trained on 4chan data (SocBERTa-4chan) significantly outperformed the baselines on this task. This highlights that for specialized, out-of-distribution language (like toxic slang), domain adaptation remains critical.
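The reported F1 gains do not name an averaging scheme. TweetEval's official metric for its hate-speech task is macro-averaged F1, so assuming that is the score being compared, the sketch below shows how it is computed: per-class F1 = 2·TP / (2·TP + FP + FN), then an unweighted mean over classes. `macro_f1` is a hypothetical helper written in plain Python rather than a library call.

```python
from __future__ import annotations

from collections.abc import Sequence


def macro_f1(gold: Sequence[int], pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    scores = []
    for label in sorted(set(gold) | set(pred)):
        tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
        fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
        fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

Because every class counts equally, a model that rarely predicts the minority hateful class is penalized even when its accuracy looks high.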

Significance

This work bridges the gap between Computational Social Science and ML Engineering. It provides the community with a reusable tool (PyConversations) to handle the messy reality of social data and offers empirical evidence on the limits and benefits of domain-adaptive pre-training for Transformer language models.

Citation

@thesis{heidenreich2021look,
  title={Look, Don't Tweet: Representation Learning and Social Media},
  author={Hunter Heidenreich},
  year={2021},
  school={Drexel University},
  type={Undergraduate Senior Thesis}
}