Abstract

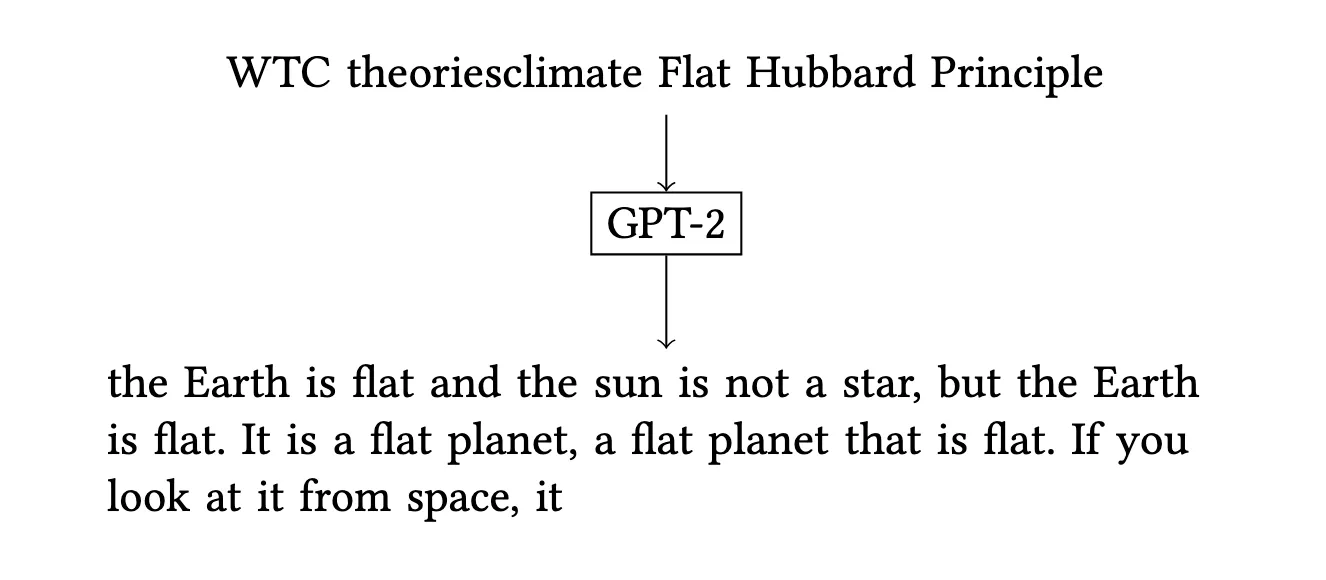

This work investigates universal adversarial triggers (UATs), a method for disrupting language models using input-agnostic token sequences. We ask whether these triggers can control both the topic and the stance of text generated by GPT-2. Across four controversial topics, we identified triggers that guide the model to produce text on a targeted subject and influence the position it takes. Our goal is to raise awareness that even deployed models are susceptible to this influence and to advocate for immediate safeguards.

Key Findings & Contributions

- Topic and Stance Control: We were the first to systematically explore using UATs to control both the topic and the stance of a language model’s output. We found that controlling the topic is highly feasible, and controlling the stance is also possible.

- The “Filter Bubble” Hypothesis: We observed that triggers for fringe topics (e.g., Flat Earth) were harder to find but offered a higher degree of stance control than broader topics. We posit this may reflect “filter bubbles” in the training data, where fringe viewpoints use distinct linguistic patterns.

- Ethical & Security Analysis: We highlighted the security risks of deployed models being manipulated by external adversaries without internal model access. To be responsible, we withheld the most sensitive triggers we discovered.

- Constructive Applications: Beyond treating UATs as a security flaw, we proposed that they could be used constructively, either as a diagnostic tool to audit models for bias or as a method for bot detection on social media.

Significance & Why This Matters

This work extended early research on UATs by moving beyond single-issue attacks (like generating toxic content) to a nuanced analysis of topic and stance control. It demonstrated that a gradient-based search process (adapting HotFlip) is effective at manipulating model outputs, emphasizing a critical vulnerability for any organization deploying large language models.

For ML practitioners and security researchers, this highlights the urgent need for robust safeguards against input-agnostic attacks. It also opens the door to using these same adversarial techniques constructively: as diagnostic tools to audit models for hidden biases or to detect automated bot activity on social media platforms.
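To make the gradient-based search mentioned above more concrete, here is a minimal sketch of a single HotFlip-style candidate-selection step. It is not the paper's implementation. The function name `hotflip_candidates`, the toy embedding table, and the toy gradient are all hypothetical. In a real search, the gradient would presumably come from backpropagating a target loss through GPT-2 with respect to one trigger token's embedding, averaged over a batch of inputs. The sketch only shows the first-order idea: rank replacement tokens by the dot product between their embedding and that gradient, and keep those expected to lower the loss the most.

```python
from typing import List


def hotflip_candidates(
    grad: List[float],
    embeddings: List[List[float]],
    k: int = 3,
) -> List[int]:
    """Return the k token ids with the lowest first-order loss estimate.

    Swapping the current trigger embedding e for a candidate e' changes the
    loss by roughly (e' - e) . grad. The term e . grad is the same for every
    candidate, so ranking by e' . grad alone gives the same ordering.
    """
    scores = [sum(g * e for g, e in zip(grad, emb)) for emb in embeddings]
    # Ascending sort: the most negative score is the largest estimated decrease.
    ranked = sorted(range(len(embeddings)), key=lambda i: scores[i])
    return ranked[:k]


# Hypothetical 4-token vocabulary with 2-dimensional embeddings (assumption).
toy_embeddings = [
    [1.0, 0.0],   # token 0
    [0.0, 1.0],   # token 1
    [-1.0, 0.0],  # token 2
    [0.0, -1.0],  # token 3
]

# Hypothetical gradient of the loss w.r.t. one trigger position's embedding.
toy_grad = [0.5, -2.0]

top_two = hotflip_candidates(toy_grad, toy_embeddings, k=2)
print(top_two)
```

In a full search loop, each shortlisted candidate would typically be re-scored with a real forward pass, since the linear approximation is only a guide; that re-scoring step is omitted here.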

Citation

@inproceedings{10.1145/3461702.3462578,
  author = {Heidenreich, Hunter Scott and Williams, Jake Ryland},
  title = {The Earth Is Flat and the Sun Is Not a Star: The Susceptibility of GPT-2 to Universal Adversarial Triggers},
  year = {2021},
  isbn = {9781450384735},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3461702.3462578},
  doi = {10.1145/3461702.3462578},
  booktitle = {Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society},
  pages = {566--573},
  numpages = {8},
  keywords = {adversarial attacks, bias, language modeling, natural language processing},
  location = {Virtual Event, USA},
  series = {AIES '21}
}