Overview

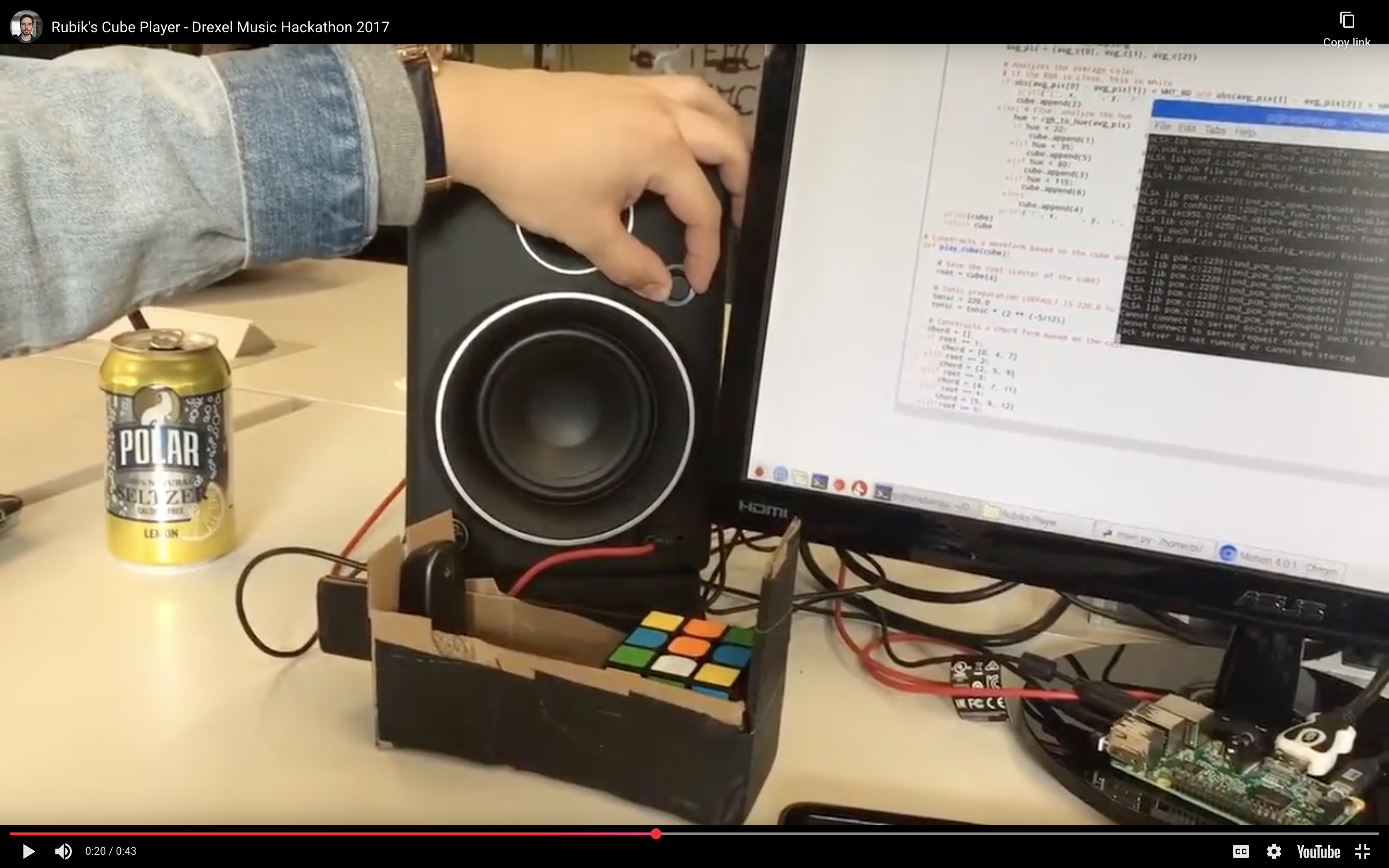

Built in under 24 hours at the Drexel 2017 Music Hackathon, this project attempts to answer a question: What does order sound like?

The system uses a webcam to scan a Rubik’s cube face and algorithmically generates audio based on the color configuration. A scrambled cube generates dissonant, complex waveforms; a solved cube resolves into a pure, harmonious chord.

Features

This freshman-year project was built on first principles:

- Manual Waveform Synthesis: The audio engine generates 16-bit PCM audio data byte-by-byte using raw sine functions (`math.sin`).

- Algorithmic Harmony: Colors are mapped to musical intervals. The “center” color establishes the root note (Tonic), while the surrounding “cubies” determine the chord structure and melody using equal temperament frequency calculations ($f = f_0 \cdot 2^{n/12}$).
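
The two ideas above can be sketched together in a few lines. This is a minimal illustration, not the original hackathon code: the sample rate, the 440 Hz root, the helper names `note_frequency` and `synthesize_chord`, and the choice of a major triad are all assumptions made for the example.

```python
import math
import struct

SAMPLE_RATE = 44100  # samples per second (assumed, not from the original project)
ROOT_HZ = 440.0      # assumed root frequency f_0 (A4)


def note_frequency(semitones, root_hz=ROOT_HZ):
    """Equal temperament: f = f_0 * 2^(n/12), n semitones above the root."""
    return root_hz * 2 ** (semitones / 12)


def synthesize_chord(semitone_offsets, duration_s=0.5, root_hz=ROOT_HZ):
    """Return mono, little-endian, signed 16-bit PCM bytes for a chord."""
    freqs = [note_frequency(n, root_hz) for n in semitone_offsets]
    n_samples = int(SAMPLE_RATE * duration_s)
    frames = bytearray()
    for i in range(n_samples):
        t = i / SAMPLE_RATE
        # Average the sine waves so the mixed signal stays within [-1, 1].
        value = sum(math.sin(2 * math.pi * f * t) for f in freqs) / len(freqs)
        # Scale to the 16-bit range and append two bytes per sample.
        frames += struct.pack("<h", int(value * 32767))
    return bytes(frames)


# Hypothetical mapping result: root, major third, perfect fifth.
pcm = synthesize_chord([0, 4, 7])
```

The resulting bytes could be written to a playable file with the standard-library `wave` module (one channel, sample width 2, frame rate `SAMPLE_RATE`). Averaging the sines rather than summing them is one simple way to avoid clipping when several notes sound at once.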

Usage/Gameplay

The application runs as a Python script and requires a webcam to scan the Rubik’s cube.

Results

Looking back at this code eight years later, it serves as a “time capsule” of my early engineering mindset.

- The “Hack”: The computer vision relied on hardcoded pixel coordinates and raw OS shell calls, the kind of “glue code” typical of hackathons.

- The Lesson: While brittle, the project successfully demonstrated how to bridge the gap between physical entropy and digital signal processing using fundamental programming concepts.