Overview

Undergraduate thesis exploring representation learning for social media text and developing tools for cross-platform conversational analysis. Built PyConversations, a Python module for analyzing social media conversations, and found that domain-specific approaches often outperform large pre-trained models.

Features

PyConversations Module

- Graph-based modeling: Models conversations as Directed Acyclic Graphs (DAGs) to quantify topological structure (depth, width, density)

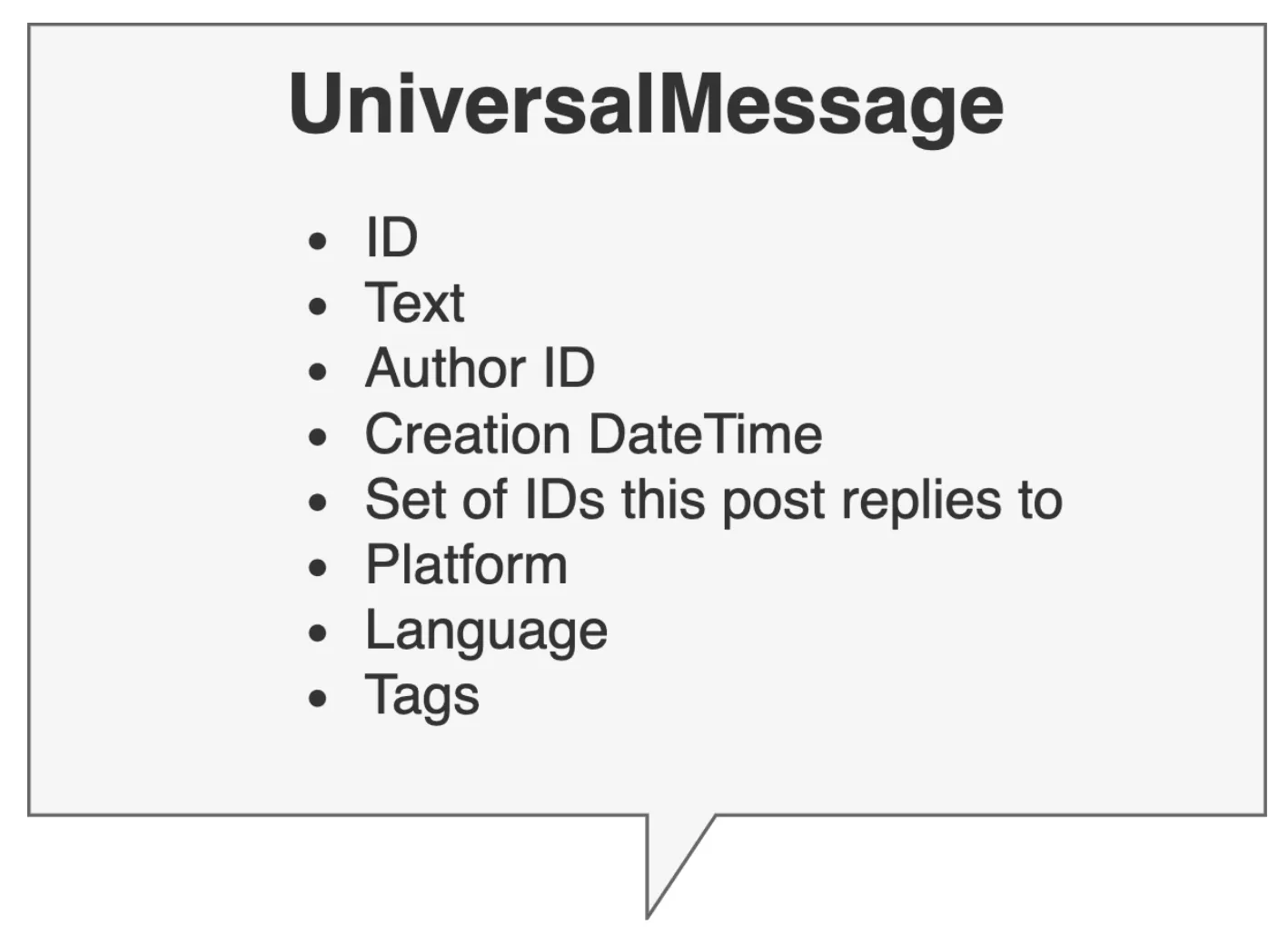

- Unified interface: Polymorphic design normalizing heterogeneous data from Twitter, Reddit, 4chan, and Facebook into a single analysis schema

- Linguistic dynamics: Implements information-theoretic feature extraction, including harmonic mixing laws and entropy measures

- Stream processing: Memory-efficient generators to ingest and traverse multi-gigabyte JSON dumps (e.g., 147M+ Reddit posts) without loading the full corpus into RAM

Research Contributions

- Representation learning: Investigated domain-specific vs. general-purpose Transformers (BERT vs. specialized variants) on social media text

- Topological analysis: Demonstrated that conversational structure (context) is as critical as content for classification tasks

- Cross-platform study: Comparative analysis of communication dynamics across moderated (Reddit/Twitter) and unmoderated (4chan) spaces

Usage/Gameplay

The PyConversations module can be imported into Python scripts to parse and analyze social media datasets.

Results

- Model performance: Smaller, domain-specific approaches frequently outperformed standard pre-trained models

- Context importance: Conversational context and dialogue structure proved crucial for understanding social media interactions

- Domain adaptation: Social media text benefits from specialized handling over generic approaches

- Cross-platform challenges: Different platforms require adapted approaches despite seeming similarities

Team & Recognition

- Hunter Heidenreich - Lead Researcher and Developer

- Jake Williams - Faculty Advisor

- 🏆 First Place - Research Undergraduate Senior Thesis at Drexel University

Impact

This library served as the engineering backbone for my thesis, Look, Don’t Tweet, enabling the processing of 308 million posts to evaluate Transformer performance on toxic data.

The findings about model performance suggested that specialized domains require tailored model architectures, a perspective that has become more relevant as the field continues to evolve.