Overview

Word2Vec is often treated as a “solved problem” or a black box inside libraries like Gensim. This project deconstructs the algorithm to treat it as a systems engineering challenge.

I built a ground-up, typed, and compiled PyTorch implementation that bridges the gap between the original C code’s efficiency and modern GPU acceleration. The core innovation lies in “tensorizing the tree”, converting the pointer-chasing logic of Hierarchical Softmax into dense, vectorized operations compatible with torch.compile.

Features

1. Vectorized Hierarchical Softmax

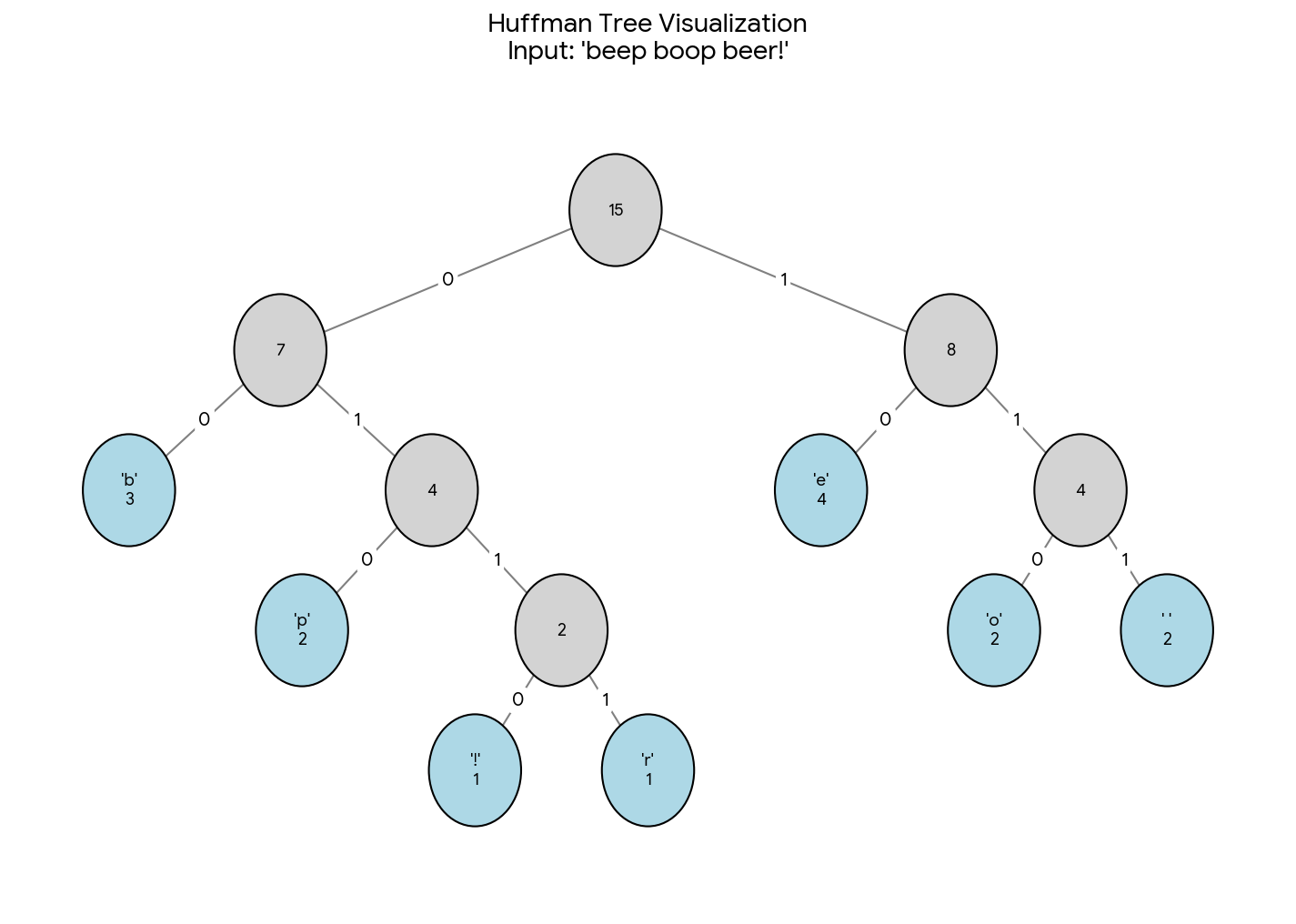

Classically, Hierarchical Softmax involves traversing a binary Huffman tree. While efficient on a CPU, this approach creates divergent execution paths on GPUs.

- The Solution: I implemented a “pre-computed path” strategy. The tree traversal for every vocabulary word is flattened into fixed-size tensors (

word_path_indices,word_codes_tensor) padded to the maximum depth. - The Result: The forward pass becomes a massive, masked batch dot-product against internal node embeddings, allowing the GPU to crunch the probability tree without branching logic.

2. Infinite Streaming & Sliding Windows

To handle datasets larger than RAM (e.g., Wikipedia/CommonCrawl), I built a custom IterableDataset that performs a true single-pass read.

- Efficient Windowing: It uses a

collections.dequebuffer to slide over the token stream, generating training pairs only when a new token enters the center context. - Zipfian Subsampling: Implemented a probabilistic rejection sampling layer that downsamples frequent words (like “the” or “of”) on-the-fly, strictly adhering to the original Mikolov et al. paper’s distribution.

3. Modern Production Tooling

This project uses a strict “software 2.0” stack:

- Dependency Management: Built with

uvfor deterministic, lightning-fast environment resolution. - Compilation: Fully compatible with

torch.compile(PyTorch 2.0+), allowing for graph fusion of the custom loss functions.

Usage/Gameplay

The library can be installed via pip and used as a drop-in replacement for Gensim’s Word2Vec, with the added benefit of GPU acceleration.

Results

- Performance: Achieves significant speedups over CPU-bound implementations, especially on large vocabularies.