Overview

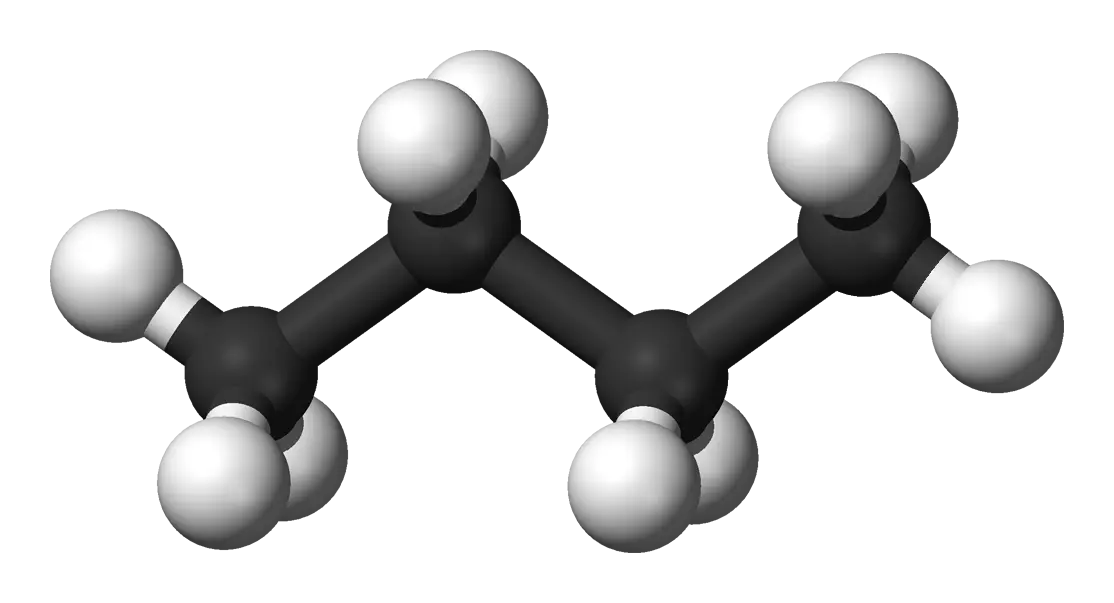

In computational drug discovery, data scarcity is often the bottleneck. This project builds a synthetic data generator that creates labeled 3D molecular datasets starting from nothing but a raw chemical formula (e.g., $C_6H_{14}$).

The pipeline bridges the gap between 1D Chemical Information (stoichiometry) and 3D Geometric Data (conformers), effectively serving as a “data factory” for training molecular machine learning models.

Features

1. Graph Enumeration & 3D Embedding

The core of the project is pysomer/data/gen.py, which orchestrates a multi-step generation process:

- Structural Isomerism: Uses MAYGEN (via a Java bridge) to mathematically enumerate all valid graph connectivities for a given formula

- Conformer Sampling: Uses RDKit to embed these graphs into 3D space, generating multiple conformers (rotamers) per isomer to capture flexibility

- IUPAC Labeling: Automatically queries PubChem APIs to assign human-readable labels (e.g., “2-methylpentane”) to the generated structures
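
The conformer sampling step can be sketched with RDKit's distance-geometry embedder. This is a minimal illustration, not the code in pysomer/data/gen.py: the helper name `embed_conformers`, its defaults, and the use of a SMILES string as a stand-in for a MAYGEN-enumerated graph are all assumptions.

```python
from rdkit import Chem
from rdkit.Chem import AllChem


def embed_conformers(smiles, num_confs=5, seed=42):
    """Return (atomic_numbers, list of (n_atoms, 3) coordinate arrays).

    Hypothetical helper: the SMILES string stands in for one graph
    produced by the enumeration step.
    """
    mol = Chem.MolFromSmiles(smiles)
    if mol is None:
        raise ValueError(f"could not parse SMILES: {smiles!r}")
    mol = Chem.AddHs(mol)  # explicit hydrogens are needed for sensible 3D geometry
    conf_ids = AllChem.EmbedMultipleConfs(mol, numConfs=num_confs, randomSeed=seed)
    atomic_numbers = [atom.GetAtomicNum() for atom in mol.GetAtoms()]
    coords = [mol.GetConformer(cid).GetPositions() for cid in conf_ids]
    return atomic_numbers, coords


# 2-methylpentane, one of the C6H14 isomers
numbers, conformers = embed_conformers("CC(C)CCC")
```

Each entry of `conformers` holds the Cartesian coordinates of one conformer, in the same atom order as `numbers`, which is the input a featurizer needs.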

2. Physics-Aware Featurization

The pipeline computes Coulomb matrices, a representation that is invariant to translation and rotation of the molecule:

$$C_{ij} = \begin{cases} 0.5\, Z_i^{2.4} & i = j \\ \dfrac{Z_i Z_j}{|R_i - R_j|} & i \neq j \end{cases}$$

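Here $Z_i$ is the nuclear charge of atom $i$ and $R_i$ is its position. The off-diagonal entries encode the pairwise Coulomb repulsion between nuclei, which depends only on interatomic distances. This gives the neural network a more robust signal than raw Cartesian coordinates, which change under any rotation or translation of the molecule.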
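
The formula above translates almost directly into code. The following is a minimal pure-Python sketch rather than the project's implementation; the function names and the three-atom toy geometry are made up for illustration.

```python
import math


def coulomb_matrix(atomic_numbers, coords):
    """Build the Coulomb matrix as a list of rows.

    atomic_numbers: sequence of nuclear charges Z_i
    coords: sequence of (x, y, z) positions R_i, in the same atom order
    """
    n = len(atomic_numbers)
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                # Diagonal term: 0.5 * Z_i^2.4
                matrix[i][j] = 0.5 * atomic_numbers[i] ** 2.4
            else:
                # Off-diagonal term: Z_i * Z_j / |R_i - R_j|
                distance = math.dist(coords[i], coords[j])
                matrix[i][j] = atomic_numbers[i] * atomic_numbers[j] / distance
    return matrix


def rotate_z(point, angle):
    """Rotate a 3D point about the z axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)


# Toy geometry with made-up coordinates (not a real conformer)
numbers = [6, 1, 1]
coords = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]

cm = coulomb_matrix(numbers, coords)
cm_rotated = coulomb_matrix(numbers, [rotate_z(p, 0.7) for p in coords])
```

Because only distances enter the matrix, `cm` and `cm_rotated` agree up to floating-point error. Reordering the atoms, however, permutes rows and columns, which is why Coulomb matrices are usually sorted or reduced to their eigenvalues before being fed to a model.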

3. HDF5 Data Storage

To handle the large volume of generated conformers, the system writes to hierarchical HDF5 files. This allows for efficient, chunked I/O during training, a critical pattern for scaling to larger chemical spaces.
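
As a rough sketch of what chunked, hierarchical storage can look like with h5py: the group layout (`/<formula>/<isomer label>/coords`), the function name, and the one-conformer-per-chunk choice below are assumptions for illustration, not the project's actual schema.

```python
import os
import tempfile

import h5py
import numpy as np


def write_conformers(path, formula, isomers):
    """Write conformer coordinates to an HDF5 file.

    isomers: dict mapping an isomer label to an array of shape
             (n_conformers, n_atoms, 3).
    Hypothetical layout: /<formula>/<isomer label>/coords
    """
    with h5py.File(path, "w") as f:
        root = f.create_group(formula)
        for label, coords in isomers.items():
            coords = np.asarray(coords, dtype=np.float32)
            group = root.create_group(label)
            # One chunk per conformer, so a loader can read single conformers
            group.create_dataset(
                "coords",
                data=coords,
                chunks=(1,) + coords.shape[1:],
                compression="gzip",
            )


# Placeholder data: random numbers, not real geometries
rng = np.random.default_rng(0)
demo = {
    "hexane": rng.normal(size=(4, 20, 3)),
    "2-methylpentane": rng.normal(size=(3, 20, 3)),
}
path = os.path.join(tempfile.mkdtemp(), "C6H14.h5")
write_conformers(path, "C6H14", demo)

with h5py.File(path, "r") as f:
    # Slicing a dataset reads only the chunks it touches
    first = f["C6H14/2-methylpentane/coords"][0]
```

Chunking along the conformer axis means a training loop can pull individual conformers from disk without loading a whole isomer's array into memory.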

Usage

The pipeline is executed via a CLI, taking a chemical formula as input and outputting an HDF5 dataset of 3D conformers.
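
The exact command-line interface is not documented here, so the following is only a sketch of what such an entry point could look like using `argparse`. The flag names `--num-confs` and `--output`, their defaults, and the `parse_formula` helper are hypothetical.

```python
import argparse
import re


def parse_formula(formula):
    """Parse a simple formula such as 'C6H14' into {'C': 6, 'H': 14}.

    Supports element symbols with optional counts; no brackets or charges.
    """
    tokens = re.findall(r"([A-Z][a-z]?)(\d*)", formula)
    # Reject input that the pattern did not consume completely
    if not tokens or "".join(sym + num for sym, num in tokens) != formula:
        raise ValueError(f"unsupported formula: {formula!r}")
    counts = {}
    for symbol, number in tokens:
        counts[symbol] = counts.get(symbol, 0) + int(number or 1)
    return counts


def build_parser():
    """Hypothetical CLI: one positional formula plus two optional flags."""
    parser = argparse.ArgumentParser(
        description="Generate an HDF5 dataset of 3D conformers from a formula."
    )
    parser.add_argument("formula", type=parse_formula, help="e.g. C6H14")
    parser.add_argument("--num-confs", type=int, default=10,
                        help="conformers to sample per isomer")
    parser.add_argument("--output", default="dataset.h5",
                        help="path of the HDF5 file to write")
    return parser


# Parse an explicit argument list instead of sys.argv for demonstration
args = build_parser().parse_args(["C6H14", "--num-confs", "5"])
```

Validating the formula inside the `type=` callable lets `argparse` report malformed input as a normal usage error before any enumeration work starts.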

Results

This project serves as a “vertical slice” of a cheminformatics workflow.

- The Good: The separation of concerns is clean. Using `dataclasses` for configuration and HDF5 for storage demonstrates a “production-first” mindset regarding data engineering.

- The “Old School”: The model used is a simple Multi-Layer Perceptron (MLP) on flattened Coulomb Matrices. In a modern production setting (post-2020), I would replace this with an E(3)-invariant or E(3)-equivariant GNN (like SchNet or E3NN, respectively) to handle rotational symmetry natively, eliminating manual feature engineering.

- Dependency Management: The reliance on an external Java JAR (`MAYGEN`) for graph enumeration makes the environment brittle. Today, I would likely swap this for a pure Python enumerator or a containerized microservice to improve portability.

Related Work

This data pipeline powers the analysis in my comprehensive guide on molecular representation:

- Coulomb Matrix Eigenvalues: Can You Hear the Shape of a Molecule?: A deep dive into data generation, unsupervised clustering, and supervised classification of alkane isomers.

See also:

- The Coulomb Matrix: Deep dive into the physics-based featurization used here

- The Number of Isomeric Hydrocarbons: The foundational 1931 paper on alkane enumeration