This project is a PyTorch re-implementation of IQCRNN, a method that enforces strict stability guarantees on Recurrent Neural Networks used in control systems.

Overview

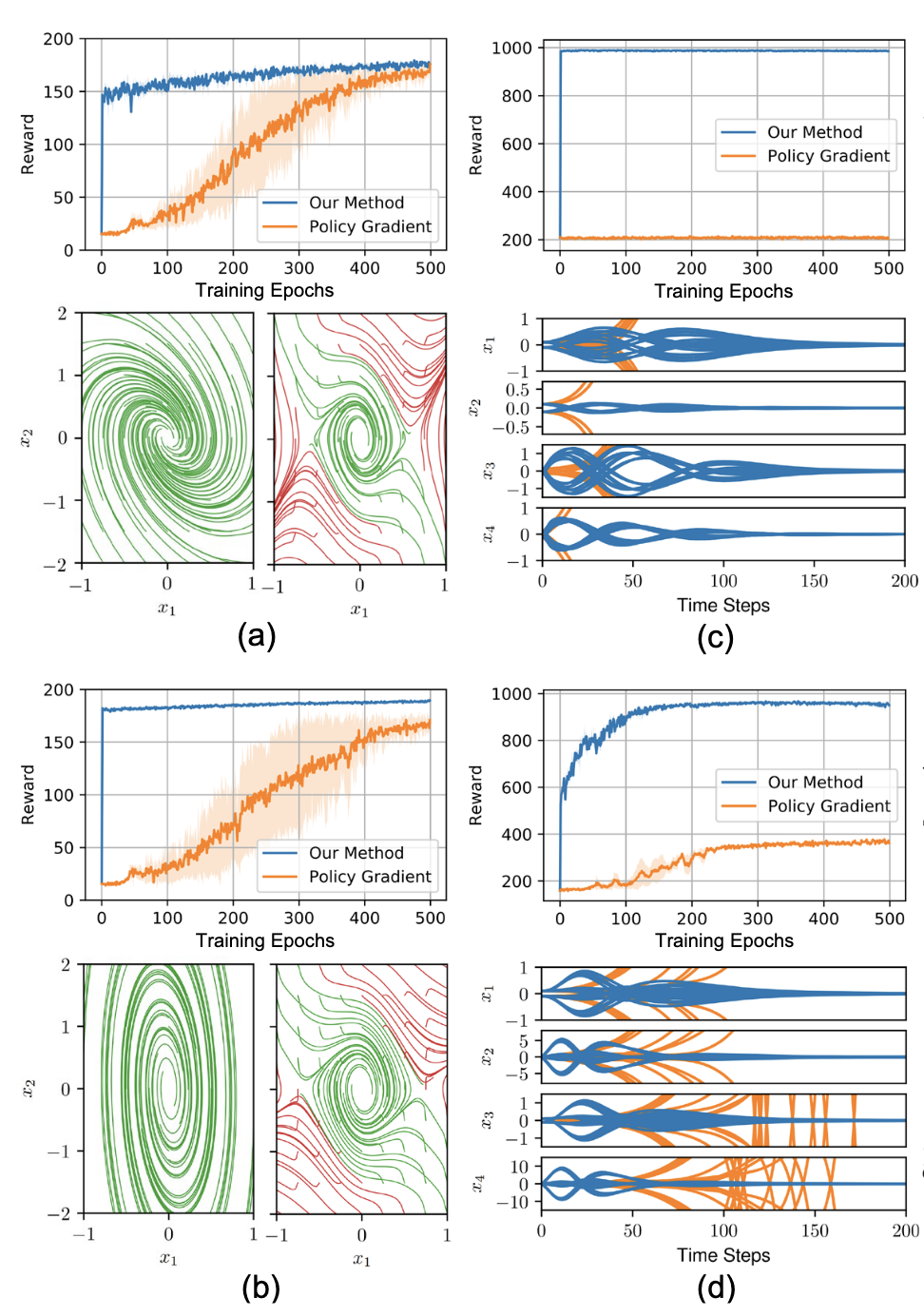

Standard Reinforcement Learning agents can behave unpredictably in unseen states. This approach forces the agent’s weights to satisfy Integral Quadratic Constraints (IQC) via a projection step. Effectively, it solves a convex optimization problem (Semidefinite Program) inside the gradient descent loop to ensure the controller never violates Lyapunov stability criteria.
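The "gradient step, then project" pattern described above can be illustrated with a deliberately tiny stand-in. The sketch below is not the IQC/SDP projection from the paper: it assumes a scalar recurrence x[t+1] = a * x[t], which is stable when |a| < 1, and replaces the semidefinite program with a simple clip onto that interval. The names `project_to_stable` and `train`, the target value, and the margin are all hypothetical.

```python
def project_to_stable(a, margin=1e-2):
    """Toy projection: clip `a` onto [-(1 - margin), 1 - margin].

    Stand-in for the SDP projection; for the scalar system
    x[t+1] = a * x[t], |a| < 1 is exactly the stability condition.
    """
    bound = 1.0 - margin
    return max(-bound, min(bound, a))


def train(a0=0.5, target=1.5, lr=0.1, steps=50):
    """Projected gradient descent on the loss (a - target)^2.

    The unconstrained optimum (a = 1.5) is unstable, so the
    projection is what keeps every iterate inside the stable set.
    """
    a = a0
    history = []
    for _ in range(steps):
        grad = 2.0 * (a - target)   # gradient of the toy loss
        a = a - lr * grad           # ordinary gradient step
        a = project_to_stable(a)    # projection back onto the stable set
        history.append(a)
    return a, history


final_a, history = train()
print(final_a, max(abs(a) for a in history))
```

The loss pulls the weight toward an unstable value, yet every iterate stored in `history` satisfies the stability bound, which is the property the real method obtains with a much more expensive projection.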

The method bridges classic Robust Control Theory (1990s) with Deep Reinforcement Learning (2020s), providing mathematical certificates of safety for neural network controllers.

Features

- Hybrid Optimization: Interleaved standard Gradient Descent (PyTorch) with Convex Optimization (cvxpy + MOSEK) to project weights onto the “safe” manifold after each training step.

- Complex Constraints: Implemented the “Tilde” parametrization from the original paper to convexify the non-convex stability conditions of the RNN dynamics, transforming an intractable problem into a solvable Linear Matrix Inequality (LMI).

- Safety-Critical Domains: Applied the controller to unstable environments like Power Grids and Inverted Pendulums where “crashing” during training is unacceptable.
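To give a feel for what "convexifying" a stability condition means, here is a textbook analogue, not the paper's actual IQC condition or its "Tilde" parametrization. It assumes a plain linear system $x_{t+1} = A x_t$; the symbols $A$, $P$, and $Y$ are introduced only for this illustration. The system is stable exactly when a Lyapunov certificate $P$ exists:

$$
\exists\, P \succ 0 : \quad A^\top P A - P \prec 0 .
$$

If $A$ is fixed, this is an LMI in $P$. If $A$ is also being learned, the product $A^\top P A$ makes the condition non-convex in $(A, P)$ jointly. A Schur complement followed by the change of variables $Y = PA$ removes the products:

$$
\begin{bmatrix} P & A^\top P \\ P A & P \end{bmatrix} \succ 0
\quad\xrightarrow{\;Y = PA\;}\quad
\begin{bmatrix} P & Y^\top \\ Y & P \end{bmatrix} \succ 0,
\qquad A = P^{-1} Y .
$$

The right-hand condition is linear in $(P, Y)$, so it can be handed to an SDP solver. The reparametrization in the paper plays a similar role for the richer RNN stability conditions.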

Usage

The repository includes training scripts for the inverted pendulum and power grid environments, demonstrating the stability guarantees in practice.

Results

This project was a deep dive into the tension between Safety and Speed.

- The Bottleneck: Solving an SDP every few training steps is computationally exorbitant (roughly $O(n^6)$ complexity inside the training loop). While the projection provided mathematical certificates of safety, it also showed why these methods haven’t yet overtaken standard PPO/SAC in production: the “safety tax” on training time is massive.

- The Lesson: It taught me that “theoretical guarantees” often come with “engineering fine print.” If I were to redo this today, I would look into differentiable convex optimization layers (like cvxpylayers) to make the projection end-to-end differentiable.

- The “Rough Edges”: The codebase has artifacts of its research origins (e.g., the reqs.txt dependency dump). Reading a dense control theory paper (Gu et al., 2021) and implementing the math correctly was the primary focus.
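As a rough back-of-the-envelope view of the "safety tax", the sketch below only does arithmetic on the $O(n^6)$ figure quoted above. It assumes the exponent holds as a pure power law with no constants, and the helper names and the example numbers are hypothetical, not measurements from this project.

```python
def relative_sdp_cost(n, n_ref, exponent=6):
    """Cost of one projection at size n, relative to size n_ref,
    assuming cost scales as n**exponent (constants ignored)."""
    return (n / n_ref) ** exponent


def amortized_overhead(sdp_cost, grad_cost, project_every):
    """Average projection cost added to each gradient step,
    measured in units of one gradient step."""
    return sdp_cost / (grad_cost * project_every)


# Doubling the problem size multiplies the projection cost by 2**6.
print(relative_sdp_cost(20, 10))

# Hypothetical numbers: a projection costing 100 gradient steps,
# run every 10 steps, still adds 10 steps' worth of work per step.
print(amortized_overhead(sdp_cost=100.0, grad_cost=1.0, project_every=10))
```

Under these assumptions, projecting less often reduces the overhead only linearly, while growth in the problem size increases it as a sixth power.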

Citation

Credit to the original authors:

@misc{gu2021recurrentneuralnetworkcontrollers,
  title={Recurrent Neural Network Controllers Synthesis with Stability Guarantees for Partially Observed Systems},
  author={Fangda Gu and He Yin and Laurent El Ghaoui and Murat Arcak and Peter Seiler and Ming Jin},
  year={2021},
  eprint={2109.03861},
  archivePrefix={arXiv},
  primaryClass={eess.SY},
  url={https://arxiv.org/abs/2109.03861},
}