Introduction

Consider the entirety of human chemical knowledge. Decades of research, breakthroughs in medicine, and novel materials, all archived in journals, patents, and textbooks. A huge portion of this knowledge is locked away in a format that computers can’t understand: images. This imposes challenges not only for data retrieval but also for leveraging modern computational tools to analyze and predict chemical properties, inefficiencies that, when scaled across global research efforts, represent substantial losses in scientific progress and potential human benefit.

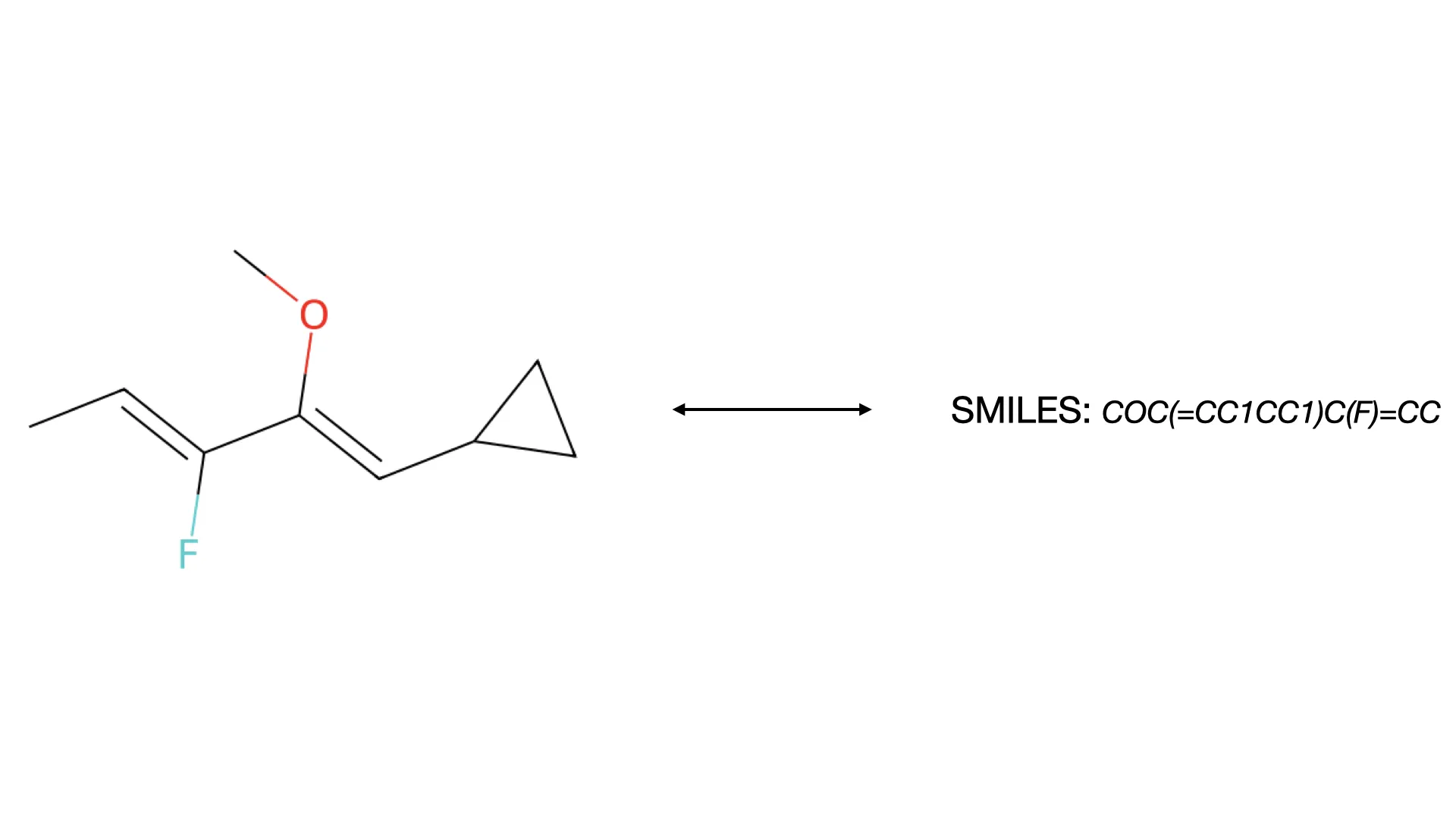

This is the central challenge that Optical Chemical Structure Recognition (OCSR) aims to solve. At its heart, OCSR is to chemistry what OCR (Optical Character Recognition) is to text: a technology that teaches computers to read and understand the language of chemistry not from text, but from 2D diagrams of molecules. It’s the bridge between a picture of a molecule and a machine-readable format like SMILES (Simplified Molecular Input Line Entry System) that can be stored, searched, and used to power new discoveries.

But how does a computer learn to read a chemical structure?

More Than Just Lines and Letters

At first glance, OCSR might seem like a specialized form of Optical Character Recognition (OCR), the technology that converts scanned text into digital words. However, recognizing a molecule is far more complex. A molecule isn’t just a string of characters; it’s a graph: a collection of atoms (nodes) connected by bonds (edges).

(Even that isn’t entirely true and becomes a limited view when considering complex structures like coordination compounds and polymers, but it’s a useful starting point and one that suffices for this discussion.)

An OCSR system must overcome several hurdles:

- Varying Styles: Chemical drawings are not standardized. Bond lengths, angles, and fonts can differ dramatically from one document to another.

- Image Quality: Older documents might be scanned at low resolutions, containing noise, blur, or other artifacts that make interpretation difficult.

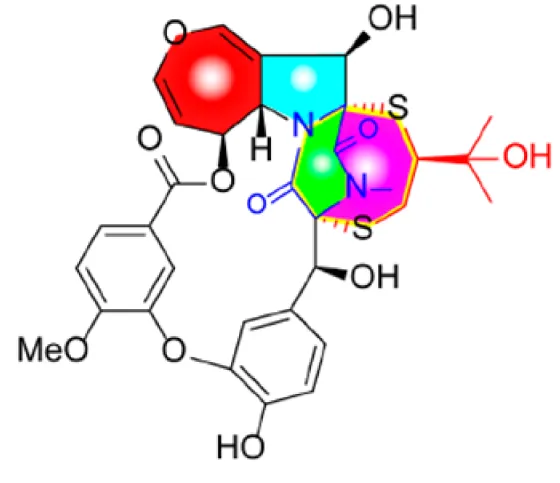

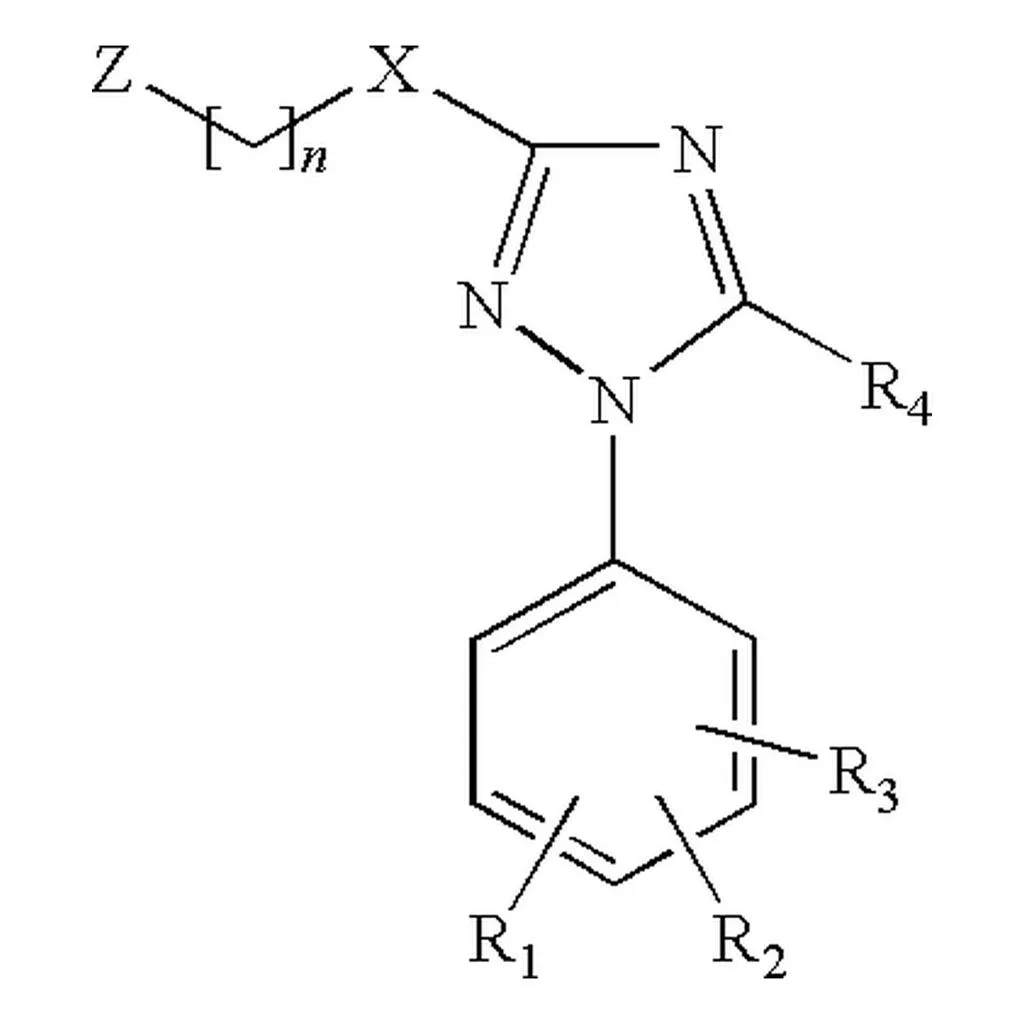

- Structural Complexity: From simple rings to sprawling polymers and complex Markush structures (common in patents to represent a whole family of related compounds), the variety is immense.

The Evolution of OCSR

The quest to automate this process has evolved significantly, moving from brittle, hand-coded systems to sophisticated AI that can learn from data.

Act 1: The Rule-Based Pioneers (OCR-1.0)

The first OCSR systems, developed in the early 1990s, represent what we can now call the “OCR-1.0” era. Tools like Kekulé, and later open-source solutions like OSRA and MolVec, operated like meticulous draftsmen. Their approach was methodical:

- Vectorize the Image: Convert the pixel-based image into a collection of lines and shapes

- Identify Components: Use a set of hard-coded rules to classify these components. “This thick line is a wedge bond.” “This group of pixels is the letter ‘O’.”

- Reconstruct the Graph: Piece together the identified atoms and bonds into a coherent molecular graph

This rule-based approach was groundbreaking but brittle. It struggled with the messiness of real-world documents and was expensive to maintain because each new style or error required new rules.

Additionally, they weren’t really mean to be run autonomously at scale, but rather as tools to assist human experts in digitizing chemical structures. There was always the assumption that a human would review and correct the output.

As a concrete case-study, consider the (reproduced) results from MolParser:

| Method | USPTO | UoB | CLEF | JPO | ColoredBG | USPTO-10K | WildMol-10K |

|---|---|---|---|---|---|---|---|

| Rule-based methods | |||||||

| OSRA 2.1 * | 89.3 | 86.3 | 93.4 | 56.3 | 5.5 | 89.7 | 26.3 |

| MolVec 0.9.7 * | 91.6 | 79.7 | 81.2 | 66.8 | 8.0 | 92.4 | 26.4 |

| Imago 2.0 * | 89.4 | 63.9 | 68.2 | 41.0 | 2.0 | 89.9 | 6.9 |

| Only synthetic training | |||||||

| Img2Mol * | 30.0 | 68.1 | 17.9 | 16.1 | 3.5 | 33.7 | 24.4 |

| MolGrapher †* | 91.5 | 94.9 | 90.5 | 67.5 | 7.5 | 93.3 | 45.5 |

| Real data finetuning | |||||||

| DECIMER 2.7 * | 59.9 | 88.3 | 72.0 | 64.0 | 14.5 | 82.4 | 56.0 |

| MolScribe * | 93.1 | 87.4 | 88.9 | 76.2 | 21.0 | 96.0 | 66.4 |

| MolParser-Tiny (Ours) | 93.0 | 91.6 | 91.0 | 75.6 | 58.5 | 89.5 | 73.1 |

| MolParser-Small (Ours) | 93.1 | 91.1 | 90.8 | 76.2 | 57.0 | 94.8 | 76.3 |

| MolParser-Base (Ours) | 93.0 | 91.8 | 90.7 | 78.9 | 57.0 | 94.5 | 76.9 |

Table 2. Comparison of our method with existing OCSR models. We report the accuracy. We use bold to indicate the best performance and underline to denote the second-best performance. *: re-implemented results. †: results from original publications.

In this table, we see that the rule-based methods (OSRA, MolVec, Imago) perform reasonably well on cleaner datasets like USPTO and UoB but falter on more challenging ones like JPO and ColoredBG. In contrast, modern AI-based methods (MolGrapher, DECIMER, MolScribe, MolParser) show significant improvements across the board, especially when fine-tuned on real data.

Act 2: The AI Fork in the Road (2010s-2020s)

The rise of deep learning in the 2010s brought new paradigms that could learn from data. Here, the field split into two distinct paths.

Path A: The Rise of the Specialists (Graph-Based AI)

Some models mimicked the old logic but replaced the rules with AI. Systems like MolGrapher and MolScribe use a two-stage process:

- Atom Detection: A neural network first identifies all the atoms in the image

- Bond Prediction: A second process then predicts the connections (bonds) between those atoms to form the final graph

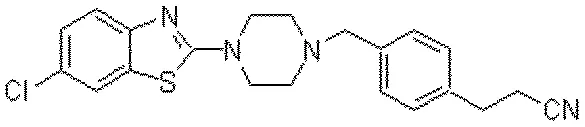

These are highly specialized tools, trained specifically for the task of building a molecular graph.

Path B: The Rise of the Generalists (LVLMs)

Another, more direct method treats OCSR as an image captioning task. This approach aligns with the broader trend of Large Vision-Language Models (LVLMs): massive, general-purpose AIs like GPT-4V. Models like DECIMER and MolParser look at a molecular image and directly generate its textual representation, most commonly a SMILES string. This direct, end-to-end approach is powerful but requires enormous datasets to train effectively.

The Next Frontier: The OCR-2.0 Vision (2024+)

Recently, a proposal has emerged that charts a third path forward: OCR-2.0. This vision, proposed by Wei et al. in 2024, argues for a new class of models that combine the best of both worlds. An OCR-2.0 model should be:

- End-to-End: A single, unified model, not a complex pipeline, making it easier to maintain

- Efficient & Low-Cost: A specialized perception engine, not a massive, multi-billion parameter “chatbot”. The paper argues that using a giant LVLM for a pure recognition task is often inefficient and wasteful

- Versatile: Capable of handling all “artificial optical signals,” not just one type

The flagship model for this theory is GOT (General OCR Theory). It’s a single, unified model that can read an image and output structured text for a stunning variety of inputs. It can translate a molecular diagram into a SMILES string, transcribe sheet music into musical notation, parse a bar chart into a data table, and describe a geometric shape using code.

This demonstrates that the future of OCSR is part of a much grander vision: a universal translator for all forms of human visual information.

Pushing the Boundaries of Recognition

While OCR-2.0 models like GOT push for breadth, other state-of-the-art research is deepening the depth of understanding for the uniquely complex task of chemical recognition.

Deepening Reasoning with a “Visual Chain of Thought”

The GTR-Mol-VLM model makes recognition more intelligent by mimicking how a person might analyze a complex diagram. Instead of guessing the whole structure at once, it traverses the molecule step-by-step, predicting an atom, then its bond, then the next atom, and so on. This “Visual Chain of Thought” improves accuracy, especially for complex molecules. It also faithfully recognizes abbreviations like “Ph” as single units, better representing the source image.

Deepening Application with “Visual Fingerprinting”

Subgrapher brilliantly rethinks the end goal. Instead of always needing the full, perfect structure, many applications (like searching a patent database) just need to know if a molecule contains a specific feature. Subgrapher detects key functional groups and backbones directly from the image and creates a visual fingerprint. This is like identifying a person by key features (“has glasses, a mustache”) rather than describing their entire face; it’s incredibly efficient for finding matches in a crowd.

Why It Matters

The evolution of OCSR is more than just an academic exercise. This technology is a critical enabler for the future of science.

Unlocking Past Knowledge

OCSR digitizes decades of research from patents and journals, making it searchable and accessible for data mining. Imagine being able to search through every molecule ever published with a simple query. Or consider the practical impact: pharmaceutical companies can now automatically scan thousands of patent documents to ensure their new drug candidates don’t infringe existing intellectual property, a process that previously required armies of human experts.

Accelerating Drug Discovery

By extracting vast datasets of molecules, scientists can train AI models to predict drug efficacy and toxicity, speeding up the discovery pipeline. The more molecular data we can digitize, the better our predictive models become.

Building Universal Document Intelligence

OCSR is part of a larger quest to build AI that can understand the entirety of human documents. A scientific paper is a mix of text, equations, charts, tables, and molecular diagrams. Unified OCR-2.0 models are the key to unlocking all of this knowledge holistically.

Looking Forward

The ultimate goal is to create a seamless loop where scientific knowledge, no matter how it’s stored, can be continuously fed into intelligent systems that help us understand our world and build a better one.

From the rigid rule-based systems of the 1990s to today’s sophisticated AI that can read molecular diagrams as naturally as we read text, OCSR has come a long way. But we’re just getting started. As these systems become more accurate, efficient, and versatile, they’ll unlock new possibilities for scientific discovery that we can barely imagine today.

And it all starts with teaching a computer how to read a picture.