Introduction

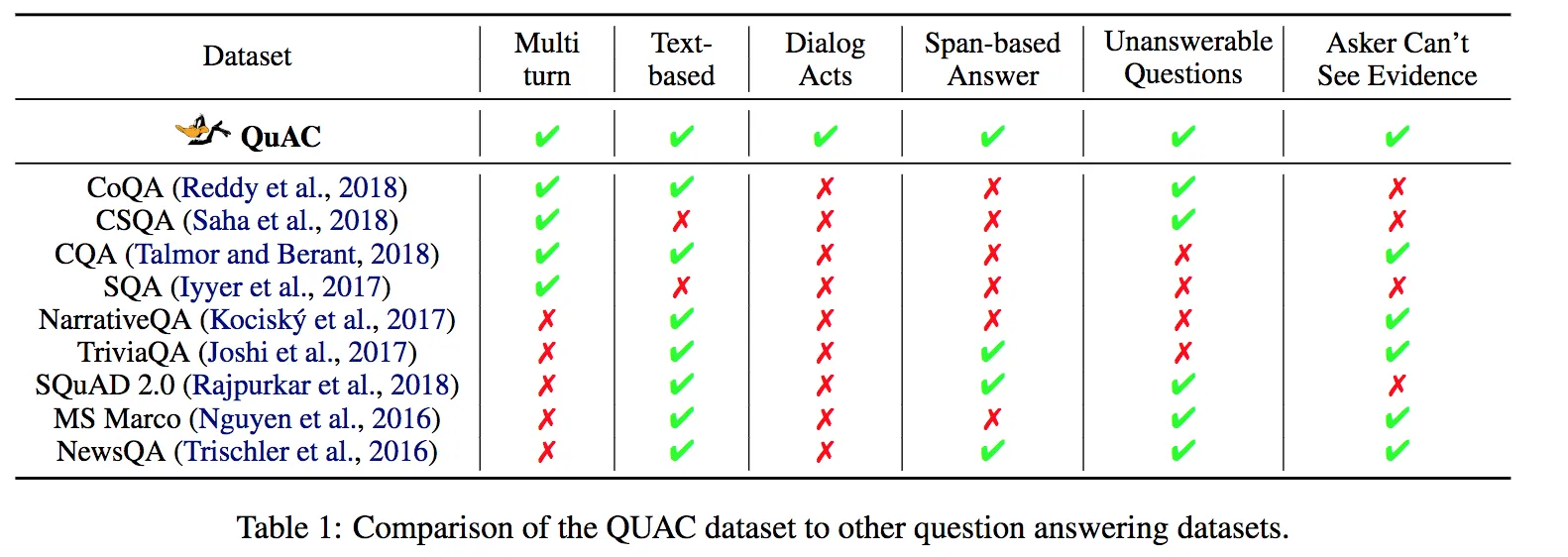

The QuAC dataset (Question Answering in Context) presents a conversational question answering approach that models student-teacher interactions. Published at EMNLP 2018, this work by Choi et al. addresses how systems can understand dialogue context, resolve references across conversation turns, and handle natural conversation ambiguity. Previous datasets treated questions independently.

The dataset addresses limitations in question answering research by incorporating real-world information-seeking dialogue complexities, where questions build upon previous exchanges and context drives understanding.

For comparison with related work, see my analysis of CoQA.

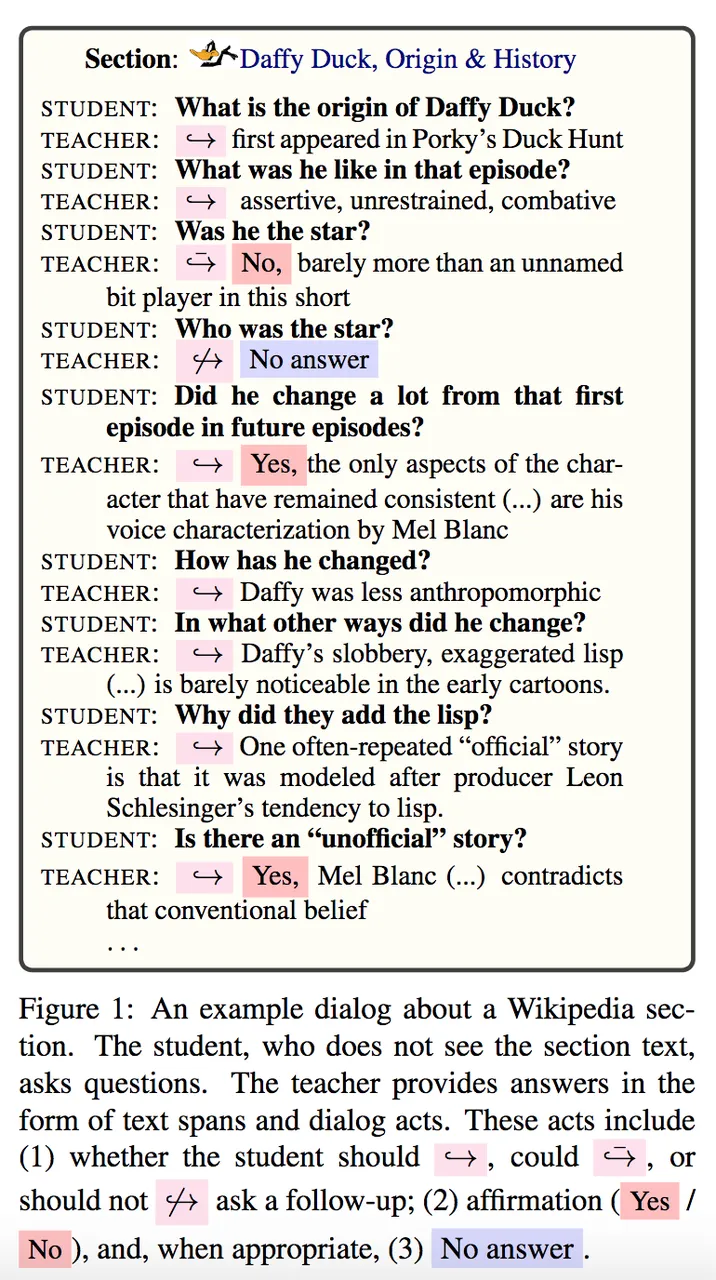

The Student-Teacher Framework

QuAC models information-seeking dialogue through a student-teacher setup:

- Teacher: Has complete access to information (Wikipedia passage)

- Student: Seeks knowledge through questioning with limited initial context

- Interaction: Handles context-dependent questions, abstract inquiries, and unanswerable requests

This framework mirrors real-world scenarios where one party has expertise while another seeks to learn through dialogue. AI systems must act as effective teachers, using available information to provide helpful responses despite ambiguous or incomplete questions.

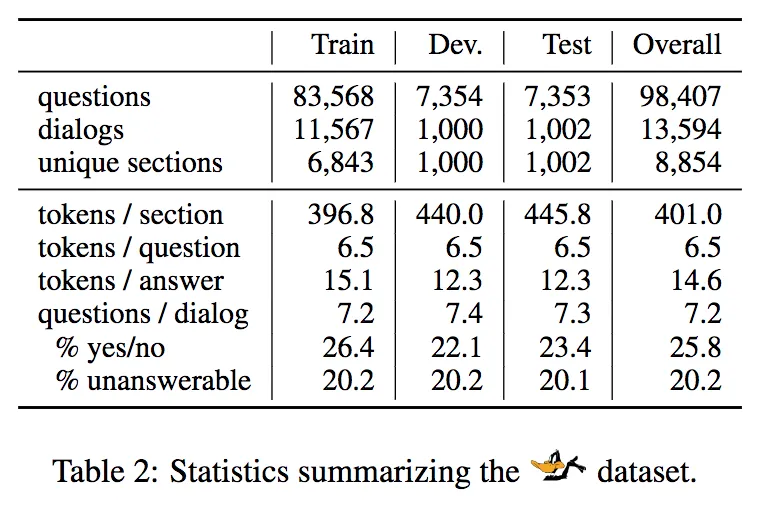

The dataset contains over 100,000 questions across 14,000+ dialogues, providing substantial scale for training and evaluation.

Dataset Construction

QuAC was built using Amazon Mechanical Turk with a two-person dialogue setup:

Teacher role: Has access to the complete Wikipedia passage and provides answers extracted directly from the text

Student role: Sees only the article title, introduction paragraph, and section heading, then asks questions to learn about the content

This asymmetric information design ensures student questions naturally differ from the passage content, creating realistic information-seeking scenarios. The extractive answer requirement maintains objective evaluation while simplifying scoring.

Dialogue termination:

- 12 questions answered

- Manual termination by either participant

- Two consecutive unanswerable questions

Content Selection

QuAC focuses on Wikipedia biographical articles for several practical reasons:

- Reduced complexity: People-focused content requires less specialized domain knowledge

- Natural question flow: Biographical information lends itself to sequential questioning

- Quality control: Articles filtered to include only subjects with 100+ incoming links, ensuring content depth

This focused scope enables consistent evaluation while maintaining broad coverage through diverse biographical subjects across fields and time periods.

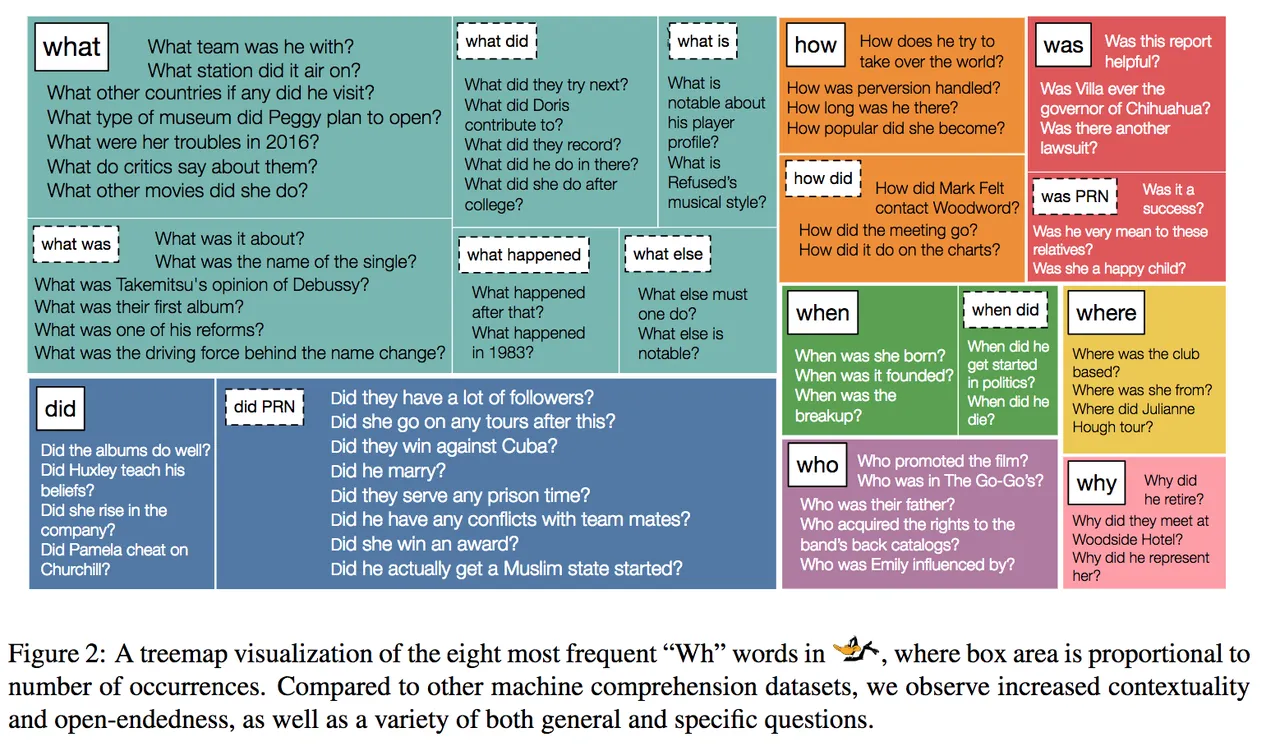

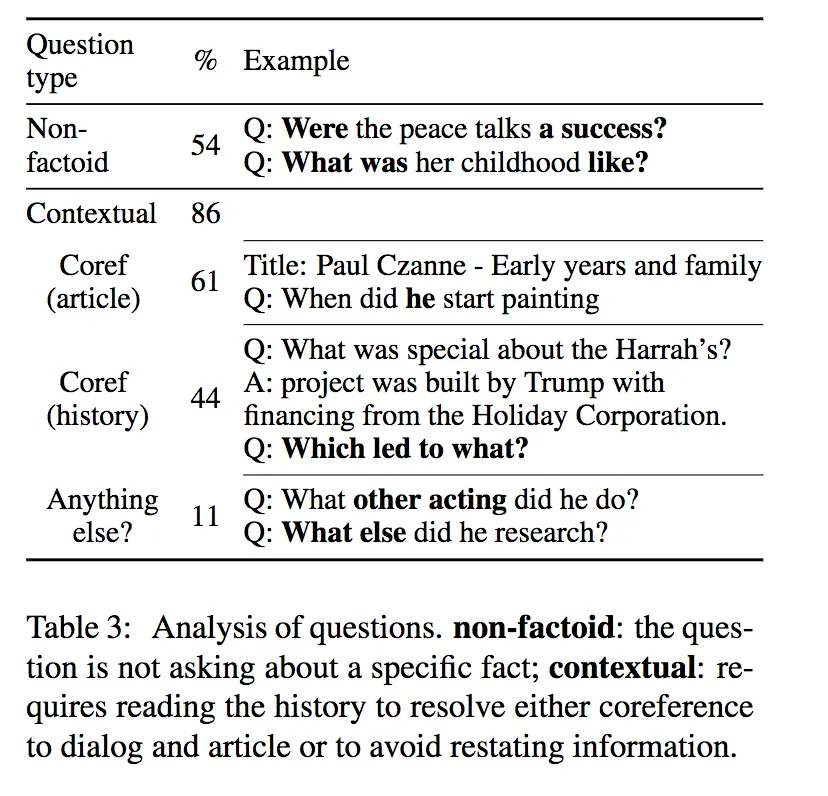

Key Dataset Characteristics

QuAC introduces several features that distinguish it from existing question answering benchmarks:

Notable features:

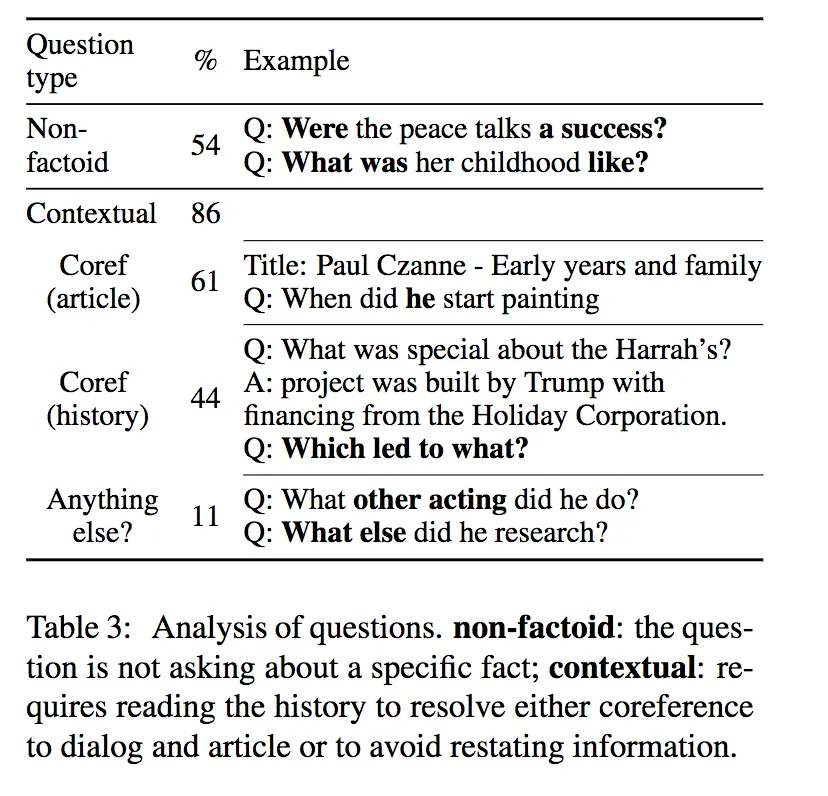

- High contextual dependency: 86% of questions require coreference resolution

- Non-factoid focus: 54% of questions go beyond simple fact retrieval

- Extended answers: Responses are longer and more detailed

- Unanswerable questions: Realistic scenarios where information isn’t available

The Coreference Resolution Challenge

QuAC’s complexity stems from its heavy reliance on coreference resolution across multiple contexts:

Reference types:

- Passage references: Pronouns and references to entities in the source text

- Dialogue references: References to previously discussed topics

- Abstract references: Challenging cases like “what else?” that require inferring the inquiry scope

The prevalence of coreference resolution makes QuAC particularly challenging, as this remains an active research problem in NLP. Models must understand passage content, track dialogue history, and resolve complex referential expressions simultaneously.

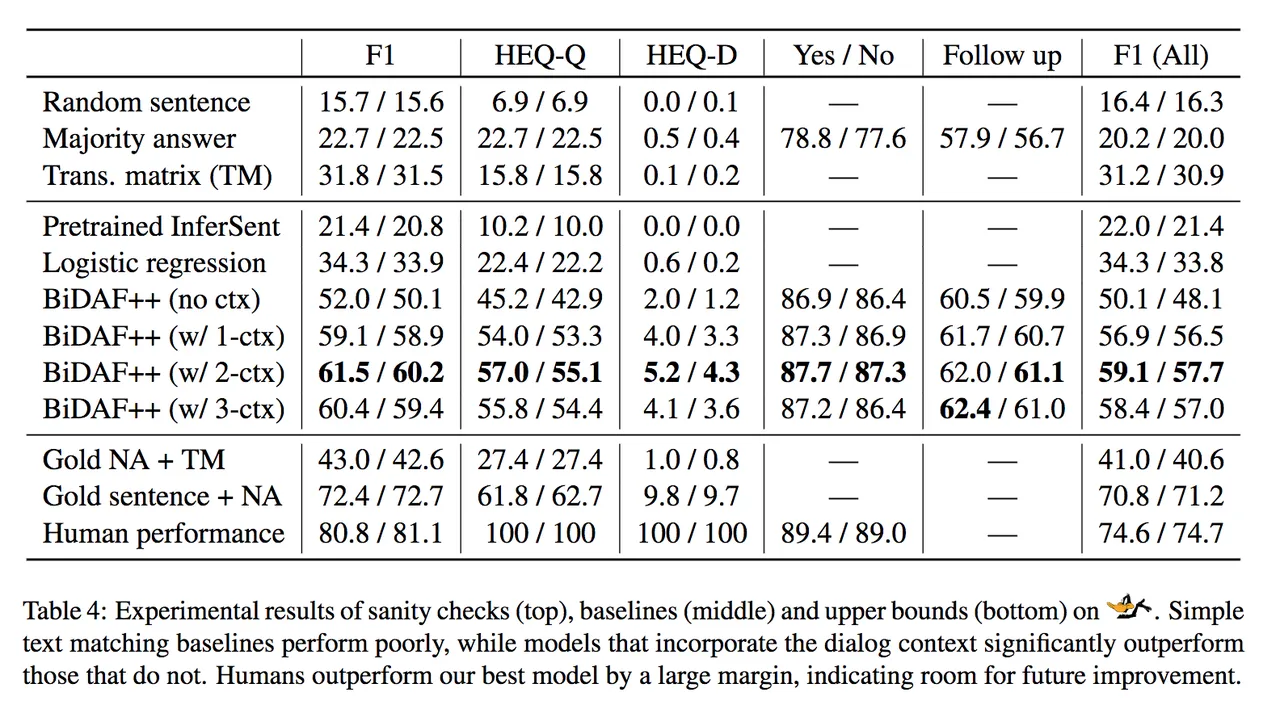

Performance Results

Models face substantial challenges on QuAC, with significant gaps between human and machine performance:

Performance summary:

- Human performance: 81.1% F1 score

- Best baseline: BiDAF++ with context achieves 60.2% F1

- Performance gap: 20+ point difference shows room for improvement

Human Equivalence Metrics

QuAC introduces evaluation metrics beyond traditional F1 scores:

HEQ-Q (Human Equivalence Question-level): Percentage of questions where the model achieves human-level or better performance

HEQ-D (Human Equivalence Dialogue-level): Percentage of complete dialogues where the model matches human performance across all questions

Current results:

- Human baseline: 100% HEQ-Q, 100% HEQ-D (by definition)

- Best model: 55.1% HEQ-Q, 5.2% HEQ-D

These metrics show both average performance and consistency across questions and conversations, important for practical dialogue systems.

Research Impact

QuAC represents an important step in question answering research by introducing realistic conversational dynamics that existing datasets lack. The student-teacher framework captures natural information-seeking behavior while maintaining extractive evaluation for objective assessment.

Key contributions:

- Conversational realism: Context-dependent questions that mirror dialogue patterns

- Coreference complexity: Integration of challenging NLP problems into QA evaluation

- Evaluation metrics: HEQ scores that measure consistency alongside average performance

- Large-scale framework: Substantial dataset enabling robust model training and evaluation

The dataset’s leaderboard provides researchers with a challenging benchmark for developing conversational AI systems. As models improve on QuAC, we can expect progress in dialogue agents, virtual assistants, and educational AI systems that engage in more natural, context-aware conversations.

QuAC’s focus on dialogue context and reference resolution pushes the field toward AI systems that can engage in genuine conversation and understand complex dialogue flows.

A Builder’s Perspective: QuAC and Modern Instruction Tuning

Looking at QuAC through the lens of modern production ML, the student-teacher framework is incredibly relevant. Today, we train foundation models using Reinforcement Learning from Human Feedback (RLHF) and instruction tuning, which rely heavily on multi-turn, context-aware interactions.

When building systems like GutenOCR or enterprise document processing pipelines, users rarely ask perfectly formulated, context-free questions. They ask follow-ups, use pronouns, and expect the system to act as a knowledgeable “teacher” guiding them through the document. QuAC was one of the first datasets to formalize this asymmetric information dynamic. It highlighted the necessity of handling unanswerable questions gracefully, a critical feature for preventing hallucinations in today’s production LLMs.

Citation

@inproceedings{choi-etal-2018-quac,

title = "{Q}u{AC}: Question Answering in Context",

author = "Choi, Eunsol and He, He and Iyyer, Mohit and Yatskar, Mark and Yih, Wen-tau and Choi, Yejin and Liang, Percy and Zettlemoyer, Luke",

booktitle = "Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing",

month = oct # "-" # nov,

year = "2018",

address = "Brussels, Belgium",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/D18-1241/",

doi = "10.18653/v1/D18-1241",

pages = "2174--2184"

}