Automating Neural Architecture Design

Designing neural network architectures is typically a manual, iterative process. Researchers experiment with different layer configurations, activation functions, and connection patterns, often guided by intuition and empirical results. But what if evolution could handle this design process automatically?

NEAT (NeuroEvolution of Augmenting Topologies) offered a compelling answer in 2002. Rather than only optimizing network weights, it evolves the network structure itself, starting from minimal topologies and adding complexity only when it proves beneficial.

NEAT’s core innovations solved fundamental problems that had plagued earlier attempts at topology evolution. Its solutions for genetic encoding, structural crossover, and innovation protection remain influential today, especially as neural architecture search and automated ML gain prominence.

The Core Challenges

Evolving neural network topologies presents several fundamental challenges that NEAT elegantly addressed. Understanding these problems helps explain why NEAT’s solutions were so influential.

Genetic Encoding: How to Represent Networks

Evolutionary algorithms require a genetic representation: a way to encode individuals that supports meaningful selection, mutation, and crossover. For neural networks, this choice is critical.

Direct encoding explicitly represents each network component. Genes directly correspond to nodes and connections. This approach is intuitive and readable, but can become unwieldy for large networks.

Indirect encoding specifies construction rules or processes rather than explicit components. These encodings are more compact but can introduce strong architectural biases.

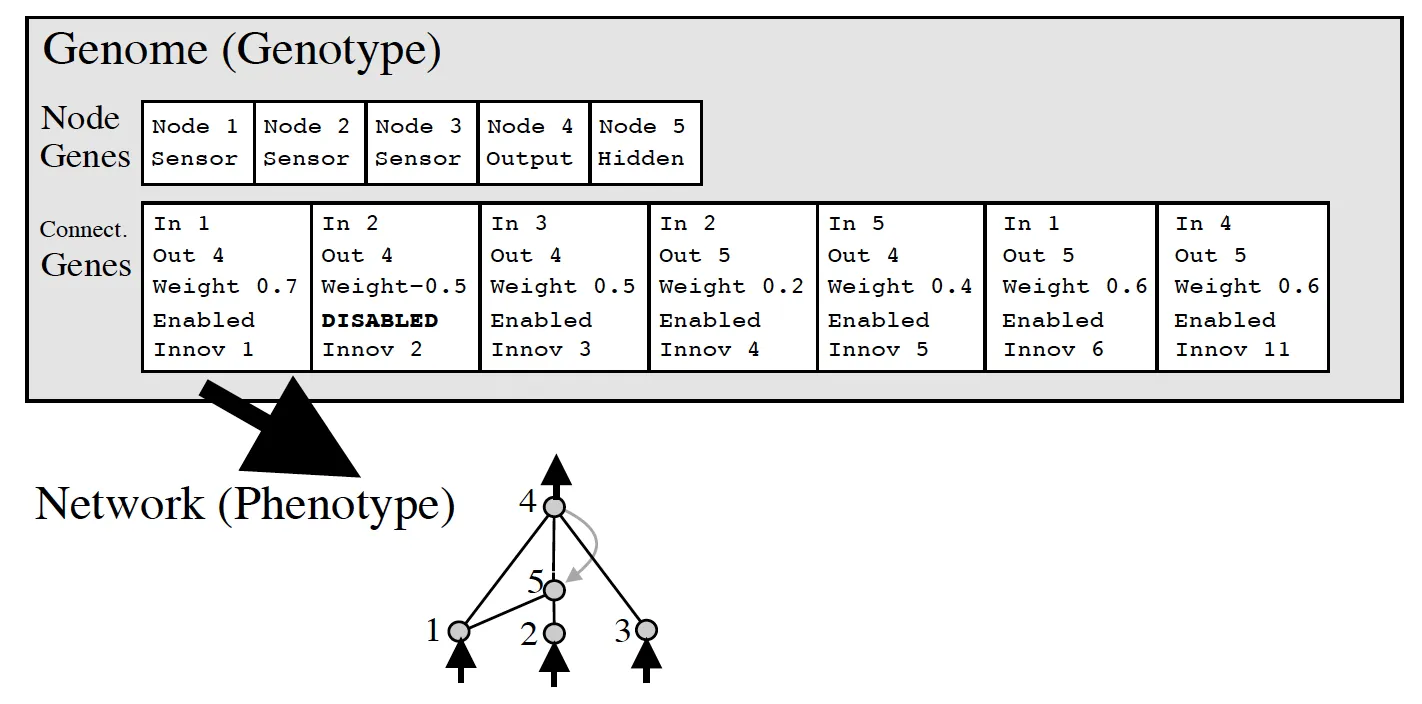

NEAT chose direct encoding with a simple two-part structure: separate gene lists for nodes and connections. This balances simplicity with the flexibility needed for evolutionary operations.

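Connection genes specify the source and target nodes, the weight, an enabled flag, and an innovation number used for historical tracking. Node genes record each node and its type (input, hidden, or output). The input and output nodes are fixed by the task; evolution adds only hidden nodes.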
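The two-part genome can be sketched in Python as follows. This is a minimal illustration, not code from the original paper or any NEAT library: the class names, the field names, and the choice to key connection genes by innovation number are assumptions made for readability.

```python
from dataclasses import dataclass, field
from enum import Enum


class NodeType(Enum):
    INPUT = "input"
    HIDDEN = "hidden"
    OUTPUT = "output"


@dataclass
class NodeGene:
    node_id: int
    node_type: NodeType


@dataclass
class ConnectionGene:
    in_node: int      # source node id
    out_node: int     # target node id
    weight: float
    enabled: bool
    innovation: int   # historical marking, shared population-wide


@dataclass
class Genome:
    nodes: dict = field(default_factory=dict)        # node_id -> NodeGene
    connections: dict = field(default_factory=dict)  # innovation -> ConnectionGene

    def add_node_gene(self, node_id, node_type):
        self.nodes[node_id] = NodeGene(node_id, node_type)

    def add_connection_gene(self, gene):
        self.connections[gene.innovation] = gene

    def enabled_connections(self):
        # Disabled genes stay in the genome but do not take part in the network.
        return [c for c in self.connections.values() if c.enabled]


# Example: 2 inputs, 1 output, no hidden nodes; one connection is disabled.
genome = Genome()
genome.add_node_gene(0, NodeType.INPUT)
genome.add_node_gene(1, NodeType.INPUT)
genome.add_node_gene(2, NodeType.OUTPUT)
genome.add_connection_gene(ConnectionGene(0, 2, 0.5, True, innovation=1))
genome.add_connection_gene(ConnectionGene(1, 2, -0.3, False, innovation=2))
```

Keeping disabled genes in the genome, rather than deleting them, preserves their innovation numbers so they can still be aligned during crossover.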

Structural Mutations: Growing Complexity

NEAT employs two categories of mutations to evolve both weights and structure:

Weight mutations adjust existing connection strengths using standard perturbation methods, the familiar approach from traditional neuroevolution.
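A weight mutation can be sketched as below. The parameters `perturb_prob`, `sigma`, and `reset_range` are hypothetical illustrative values; the sketch assumes the common pattern of nudging most weights with small random noise and occasionally replacing a weight outright.

```python
import random


def mutate_weights(weights, perturb_prob=0.9, sigma=0.5, reset_range=2.0, rng=random):
    """Return a mutated copy of a genome's connection weights.

    Each weight is either nudged by Gaussian noise (probability perturb_prob)
    or replaced with a fresh uniform value in [-reset_range, reset_range].
    """
    mutated = []
    for w in weights:
        if rng.random() < perturb_prob:
            mutated.append(w + rng.gauss(0.0, sigma))   # small perturbation
        else:
            mutated.append(rng.uniform(-reset_range, reset_range))  # occasional reset
    return mutated


new_weights = mutate_weights([0.5, -1.2, 0.8], rng=random.Random(42))
```

Passing a seeded `random.Random` instance as `rng` makes runs reproducible.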

Structural mutations add new network components:

- Add connection: Creates a new link between two previously unconnected nodes, with a random initial weight.

- Add node: Splits an existing connection by inserting a new node. The original connection is disabled and replaced by two new ones: the connection leading into the new node gets a weight of 1.0, and the connection leading out of it inherits the original weight.

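This node-splitting approach minimizes disruption. Because the incoming weight is 1.0 and the outgoing weight matches the original, the split path roughly preserves the old connection's effect; only the new node's nonlinearity changes the network's function slightly. This gives the new structure time to prove useful before selection can eliminate it.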
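Both structural mutations can be sketched in Python as follows. The names `Conn`, `InnovationTracker`, `add_connection`, and `add_node` are hypothetical, and the tracker simplifies NEAT's bookkeeping: here a given (source, target) pair keeps one innovation number for the whole run, whereas NEAT shares numbers between identical mutations within a generation.

```python
import random
from dataclasses import dataclass


@dataclass
class Conn:
    src: int
    dst: int
    weight: float
    enabled: bool = True


class InnovationTracker:
    """Hands out node ids and innovation numbers (simplified, run-wide)."""

    def __init__(self, next_node_id):
        self.next_node_id = next_node_id
        self.next_innovation = 0
        self.seen = {}  # (src, dst) -> innovation number

    def innovation(self, src, dst):
        if (src, dst) not in self.seen:
            self.seen[(src, dst)] = self.next_innovation
            self.next_innovation += 1
        return self.seen[(src, dst)]

    def new_node_id(self):
        self.next_node_id += 1
        return self.next_node_id - 1


def add_connection(conns, node_ids, input_ids, tracker, rng=random):
    """Add-connection mutation: link two nodes that are not yet connected.

    Links into input nodes are disallowed; cycles are not checked,
    so this sketch can create recurrent links.
    """
    existing = {(c.src, c.dst) for c in conns.values()}
    candidates = [(a, b) for a in node_ids for b in node_ids
                  if a != b and b not in input_ids and (a, b) not in existing]
    if not candidates:
        return None
    src, dst = rng.choice(candidates)
    innov = tracker.innovation(src, dst)
    conns[innov] = Conn(src, dst, rng.uniform(-1.0, 1.0))
    return innov


def add_node(conns, tracker, rng=random):
    """Add-node mutation: split an enabled connection src->dst into src->new->dst."""
    enabled = [i for i, c in conns.items() if c.enabled]
    if not enabled:
        return None
    old = conns[rng.choice(enabled)]
    old.enabled = False  # the old gene stays in the genome, just disabled
    new = tracker.new_node_id()
    conns[tracker.innovation(old.src, new)] = Conn(old.src, new, 1.0)         # into new node
    conns[tracker.innovation(new, old.dst)] = Conn(new, old.dst, old.weight)  # out of new node
    return new


# Start: inputs 0 and 1 wired straight to output 2.
tracker = InnovationTracker(next_node_id=3)
conns = {tracker.innovation(s, 2): Conn(s, 2, 0.7) for s in (0, 1)}
rng = random.Random(0)
hidden = add_node(conns, tracker, rng)
add_connection(conns, [0, 1, 2, hidden], {0, 1}, tracker, rng)
```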

Solving the Competing Conventions Problem

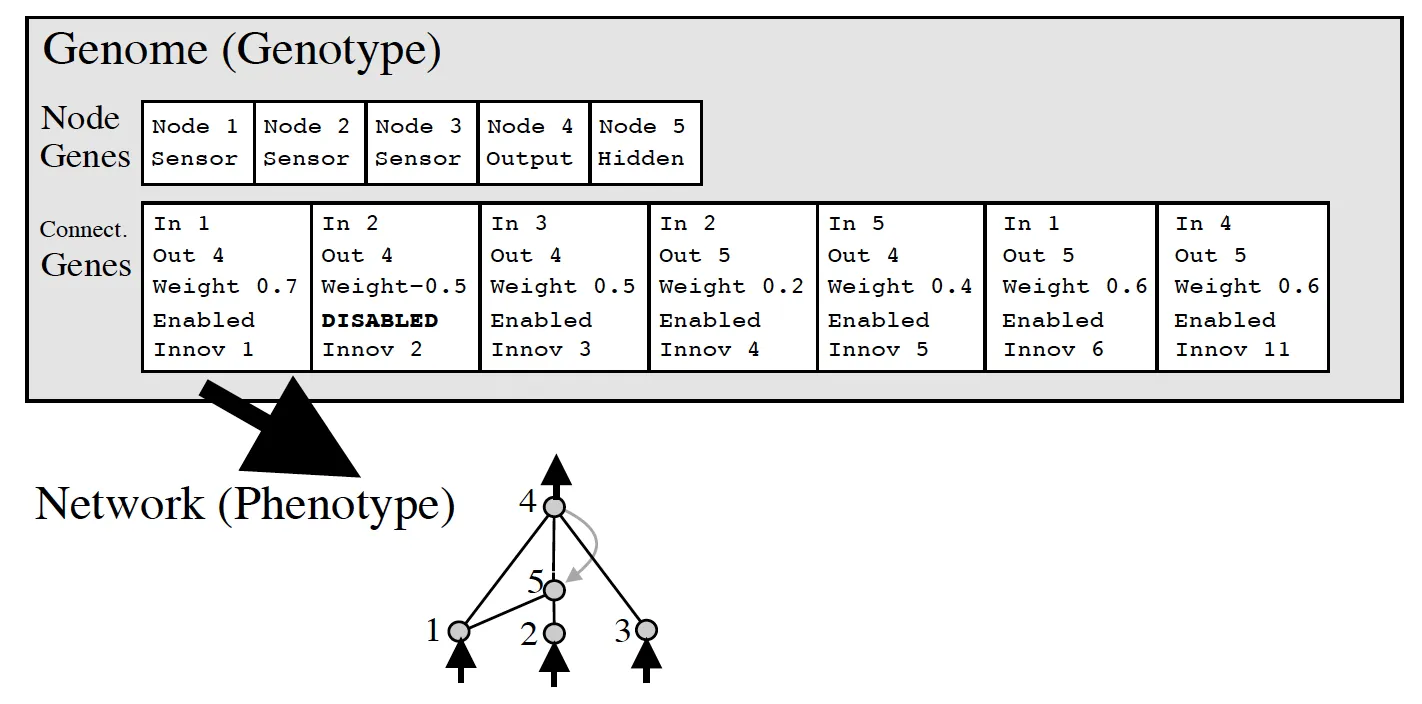

How do you perform crossover between networks with different structures? This fundamental challenge had stumped earlier approaches. Consider two networks that solve the same problem but use different internal organizations. Naive crossover between them typically produces broken offspring.

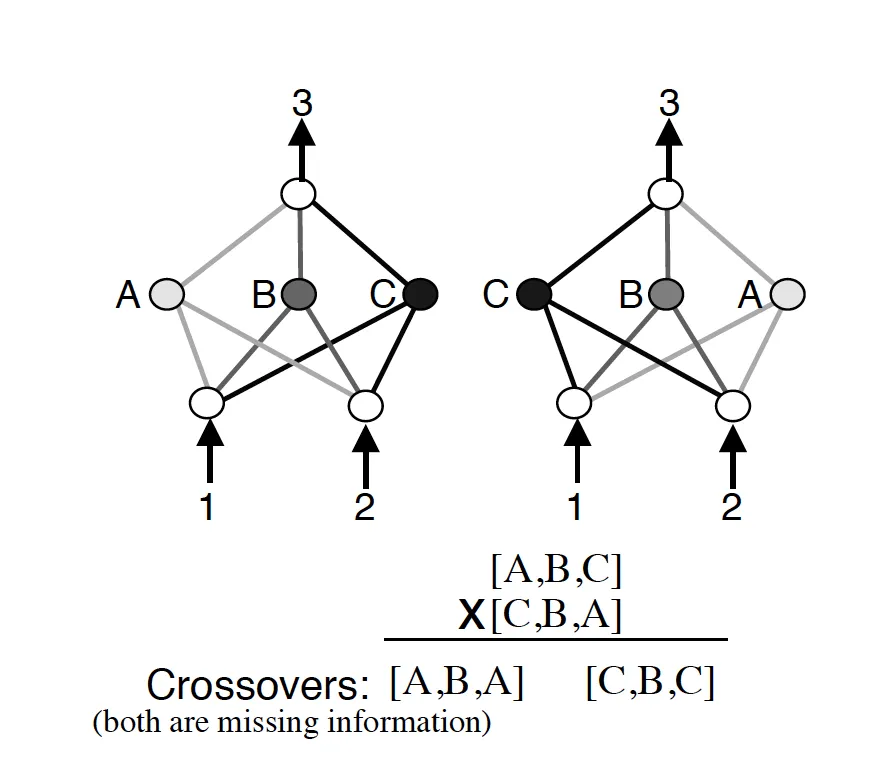
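A toy illustration makes the problem concrete. It assumes a fixed-length encoding in which each position stores one hidden unit; the unit labels A, B, and C are hypothetical. Both parents contain the same three units in opposite order, so they compute the same function, yet single-point crossover yields children that each duplicate one unit and lose another.

```python
def one_point_crossover(p1, p2, point):
    """Classic positional crossover: swap the tails after `point`."""
    return p1[:point] + p2[point:], p2[:point] + p1[point:]


# Same three hidden units, listed in opposite order: functionally equivalent parents.
parent_a = ["A", "B", "C"]
parent_b = ["C", "B", "A"]

child_1, child_2 = one_point_crossover(parent_a, parent_b, point=1)
print(child_1, child_2)  # ['A', 'B', 'A'] ['C', 'B', 'C']
```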

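NEAT’s solution draws inspiration from biology: historical markings. Whenever a structural mutation creates a new connection gene (directly, or as one of the two connections produced when a node is added), that gene receives a globally incrementing innovation number: a chronological marker of when that structure first appeared in the population. If the same mutation arises more than once in a generation, each occurrence receives the same number.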

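During crossover, genes are lined up by innovation number. Matching genes are inherited at random from either parent, while disjoint and excess genes (those present in only one parent) are inherited from the more fit parent. Innovation numbers thus stand in for biological homology, enabling meaningful recombination between networks of different sizes and structures.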
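Crossover by innovation number can be sketched as follows, representing each parent as a dictionary from innovation number to gene and assuming the first argument is the fitter parent. The function name is hypothetical, and the sketch leaves out tie-breaking for equally fit parents and any special handling of disabled genes.

```python
import random


def crossover(fitter, other, rng=random):
    """Align genes by innovation number; `fitter` is the more fit parent.

    Matching genes are inherited at random from either parent; disjoint and excess
    genes come only from the fitter parent, so genes unique to `other` are dropped.
    """
    child = {}
    for innov, gene in fitter.items():
        if innov in other and rng.random() < 0.5:
            child[innov] = other[innov]
        else:
            child[innov] = gene
    return child


parent_1 = {1: "a1", 2: "a2", 4: "a4", 6: "a6"}   # fitter parent
parent_2 = {1: "b1", 3: "b3", 4: "b4", 8: "b8"}
child = crossover(parent_1, parent_2, random.Random(7))
print(sorted(child))  # [1, 2, 4, 6]
```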

Protecting Innovation Through Speciation

New structural innovations face a harsh reality: they usually perform worse initially. Adding nodes or connections typically decreases performance before optimization can improve the new structure. Without protection, these innovations disappear before realizing their potential.

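NEAT addresses this through speciation: dividing the population into species of structurally and numerically similar networks. The historical markings that enable crossover also measure compatibility: the distance between two genomes combines their numbers of excess and disjoint genes with the average weight difference of their matching genes.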
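The NEAT paper expresses this compatibility distance as δ = c1·E/N + c2·D/N + c3·W̄, where E counts excess genes, D counts disjoint genes, W̄ is the mean weight difference of matching genes, and N is the gene count of the larger genome. A direct Python sketch follows; the coefficient defaults are illustrative assumptions, and genomes are assumed to be dictionaries from innovation number to weight.

```python
def compatibility_distance(g1, g2, c1=1.0, c2=1.0, c3=0.4):
    """delta = c1*E/N + c2*D/N + c3*W_bar for genomes given as {innovation: weight}."""
    if not g1 and not g2:
        return 0.0
    matching = g1.keys() & g2.keys()
    non_matching = g1.keys() ^ g2.keys()
    # Genes beyond the other genome's highest innovation number are excess;
    # the remaining non-matching genes are disjoint.
    cutoff = min(max(g1, default=-1), max(g2, default=-1))
    excess = sum(1 for i in non_matching if i > cutoff)
    disjoint = len(non_matching) - excess
    w_bar = sum(abs(g1[i] - g2[i]) for i in matching) / len(matching) if matching else 0.0
    n = max(len(g1), len(g2))  # gene count of the larger genome
    return c1 * excess / n + c2 * disjoint / n + c3 * w_bar


a = {1: 0.5, 2: 1.0, 3: 0.0, 5: 0.2}
b = {1: 0.0, 2: 1.0, 4: 0.3, 6: 0.1, 7: 0.4}
print(round(compatibility_distance(a, b), 3))  # 1.1
```

In the example, genes 6 and 7 are excess, genes 3, 4, and 5 are disjoint, and the two matching genes differ by 0.25 on average.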

Crucially, individuals only compete within their species. This gives new structural innovations time to optimize without immediately competing against established, well-tuned networks.
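Species assignment can be sketched as follows: each genome joins the first species whose representative lies within a compatibility threshold, and otherwise founds a new species with itself as representative. Here `distance` is any compatibility function supplied by the caller, and the function name and `threshold` default are hypothetical.

```python
def speciate(population, representatives, distance, threshold=3.0):
    """Place each genome in the first compatible species, or found a new one.

    representatives: one genome per existing species (e.g. chosen from the previous
    generation). Returns a list of species, each a list of member genomes.
    """
    reps = list(representatives)
    species = [[] for _ in reps]
    for genome in population:
        for idx, rep in enumerate(reps):
            if distance(genome, rep) < threshold:
                species[idx].append(genome)
                break
        else:  # no compatible species: this genome founds and represents a new one
            reps.append(genome)
            species.append([genome])
    return [members for members in species if members]  # drop species that died out


# Toy usage: "genomes" are plain numbers and distance is their absolute difference.
groups = speciate([0.0, 0.5, 5.0, 5.2, 10.0], [], lambda x, y: abs(x - y), threshold=1.0)
print(groups)  # [[0.0, 0.5], [5.0, 5.2], [10.0]]
```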

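Explicit fitness sharing strengthens this protection: each individual's fitness is divided by the size of its species, and each species receives offspring in proportion to its members' summed adjusted fitness. This prevents any single species from taking over the population and preserves the diversity needed for continued exploration.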
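Fitness sharing and the resulting offspring budget can be sketched as follows. Dividing by species size means a species' summed adjusted fitness equals its mean raw fitness, so a species cannot win more offspring simply by being large. The function name is hypothetical, and the rounding scheme for leftover slots (largest remainder first) is an assumption; implementations differ here.

```python
def allocate_offspring(species_fitnesses, population_size):
    """Explicit fitness sharing, then proportional offspring allocation.

    species_fitnesses: one list of raw, non-negative fitness values per species.
    """
    # Adjusted fitness = raw fitness / species size; sum it per species.
    totals = [sum(f / len(members) for f in members) for members in species_fitnesses]
    grand_total = sum(totals)
    if grand_total == 0:
        totals = [1.0] * len(totals)  # degenerate case: split evenly
        grand_total = float(len(totals))
    shares = [population_size * t / grand_total for t in totals]
    counts = [int(s) for s in shares]
    # Hand out slots lost to rounding, largest fractional part first.
    leftover = population_size - sum(counts)
    by_remainder = sorted(range(len(shares)), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


print(allocate_offspring([[4.0, 4.0, 4.0, 4.0], [2.0]], 10))  # [7, 3]
```

Without the division by species size, the large species would compare on raw totals (16 versus 2) and take about 9 of the 10 slots.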

Complexification: Starting Minimal

NEAT begins with the simplest possible networks (just input and output nodes connected by random weights). No hidden layers exist initially. Complexity emerges only when mutations that add structure prove beneficial.
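A minimal starting genome can be sketched as follows: every input connects directly to every output, with no hidden nodes. Assigning innovation numbers by (input, output) position, so that all initial genomes share the same markings, is an assumption of this sketch; the function name and the weight range are hypothetical.

```python
import random


def minimal_genome(num_inputs, num_outputs, rng=random):
    """Every input wired directly to every output; no hidden nodes.

    Returns (node_ids, connections), where connections maps an innovation number
    to a (source, target, weight) tuple. Innovation numbers depend only on the
    (input, output) pair, so all initial genomes share the same markings.
    """
    inputs = list(range(num_inputs))
    outputs = list(range(num_inputs, num_inputs + num_outputs))
    connections = {}
    for i, src in enumerate(inputs):
        for j, dst in enumerate(outputs):
            innovation = i * num_outputs + j
            connections[innovation] = (src, dst, rng.uniform(-1.0, 1.0))
    return inputs + outputs, connections


# An initial population: identical topology, different random weights.
population = [minimal_genome(3, 1) for _ in range(150)]
```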

This “complexification” approach contrasts sharply with methods that start with large networks and prune unnecessary components. Combined with speciation, it tends to produce efficient solutions that solve problems with minimal structure rather than overengineered architectures.

Experimental Validation

NEAT demonstrated its effectiveness across classic benchmarks that highlighted different aspects of the algorithm:

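XOR Problem: Because XOR is not linearly separable, a network of sigmoid units cannot solve it without at least one hidden node. This made it a simple check that NEAT could grow the structure a task requires while keeping the topology minimal.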
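To see why XOR tests structural growth, here is a hand-built genome (weights chosen by hand, not evolved) with a single hidden node that roughly computes AND, evaluated with a simple feed-forward pass. The steepened sigmoid slope of 4.9 and the fitness formula, (4 minus total absolute error) squared, follow the form commonly reported for NEAT's XOR experiment, but treat both as illustrative assumptions; the node numbering and the `activate` helper are hypothetical.

```python
import math


def steep_sigmoid(x):
    # Steepened sigmoid; the 4.9 slope is an illustrative assumption here.
    return 1.0 / (1.0 + math.exp(-4.9 * x))


def activate(connections, input_values, order, output_id):
    """Feed-forward pass. connections: (src, dst, weight) triples of enabled genes.
    order: non-input node ids in topological order (assumes no cycles)."""
    values = dict(input_values)
    for node in order:
        total = sum(w * values[src] for src, dst, w in connections if dst == node)
        values[node] = steep_sigmoid(total)
    return values[output_id]


# Hand-picked weights. Node ids: 0, 1 = inputs, 2 = bias (always 1.0),
# 3 = hidden node (roughly AND), 4 = output.
XOR_NET = [
    (0, 3, 10.0), (1, 3, 10.0), (2, 3, -15.0),
    (0, 4, 10.0), (1, 4, 10.0), (2, 4, -5.0), (3, 4, -20.0),
]
XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def xor_fitness(connections):
    """(4 - total absolute error) squared, so a perfect network scores 16."""
    error = 0.0
    for (x1, x2), target in XOR_CASES:
        out = activate(connections, {0: x1, 1: x2, 2: 1.0}, order=[3, 4], output_id=4)
        error += abs(out - target)
    return (4.0 - error) ** 2


print(round(xor_fitness(XOR_NET), 6))  # 16.0
```

The hidden node lets the output subtract the "both inputs on" case, which no direct input-to-output wiring can express.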

Pole Balancing Tasks: NEAT excelled at these control problems where an agent must balance poles on a movable cart. The algorithm successfully solved increasingly difficult variants:

- Single pole balancing (with velocity information)

- Double pole balancing (with velocity information)

- Double pole balancing without velocity information (the most challenging variant)

These control tasks demonstrated NEAT’s capability beyond simple function approximation. It could evolve networks for complex sensorimotor control where the optimal topology wasn’t obvious beforehand.

Lasting Impact

NEAT’s innovations continue to influence modern neuroevolution and neural architecture search. The algorithm’s core solutions (historical markings for meaningful crossover, speciation for innovation protection, and principled complexification) appear throughout subsequent research.

Direct descendants like HyperNEAT, ES-HyperNEAT, and CoDeepNEAT build upon these foundations. More broadly, as automated architecture search gains prominence in deep learning, NEAT’s principles inform hybrid approaches that combine evolutionary exploration with gradient-based optimization.

The algorithm remains accessible for experimentation, with implementations available in many programming languages. For researchers interested in topology evolution or automated design, NEAT provides both practical tools and foundational insights that remain relevant today.