Introduction

The CoQA dataset (Reddy et al., 2019) introduces conversational dynamics to question answering research. CoQA requires models to maintain context across multi-turn conversations while reading and reasoning about text passages. Previous datasets focused on isolated question-answer pairs.

This dataset addresses a gap in conversational AI research by providing a benchmark for systems that must understand dialogue flow and implicit references. These are key components of natural human conversation.

For related work on conversational question answering, see my analysis of QuAC.

What Makes Conversational QA Different

Conversational question answering introduces challenges beyond traditional reading comprehension:

- Context dependency: Questions rely on previous dialogue turns for meaning

- Coreference resolution: Understanding pronouns and implicit references

- Abstractive answering: Rephrasing information to generate natural responses

- Multi-turn reasoning: Maintaining coherent dialogue across multiple exchanges

These requirements differentiate CoQA from existing question answering datasets that treat each question independently.

Why CoQA Matters

Question answering systems typically excel at finding specific information in text. However, they often struggle with natural conversation. Human communication involves building on previous exchanges, using pronouns and implicit references, and expressing ideas in varied ways.

CoQA addresses this by creating a large-scale dataset for conversational question answering with three primary characteristics:

Conversation-dependent questions: After the first question, every subsequent question depends on dialogue history across 127,000 questions spanning 8,000 conversations

Natural, abstractive answers: CoQA requires rephrased responses that sound natural in conversation. The answerer first highlighted the relevant text span, then rephrased the information.

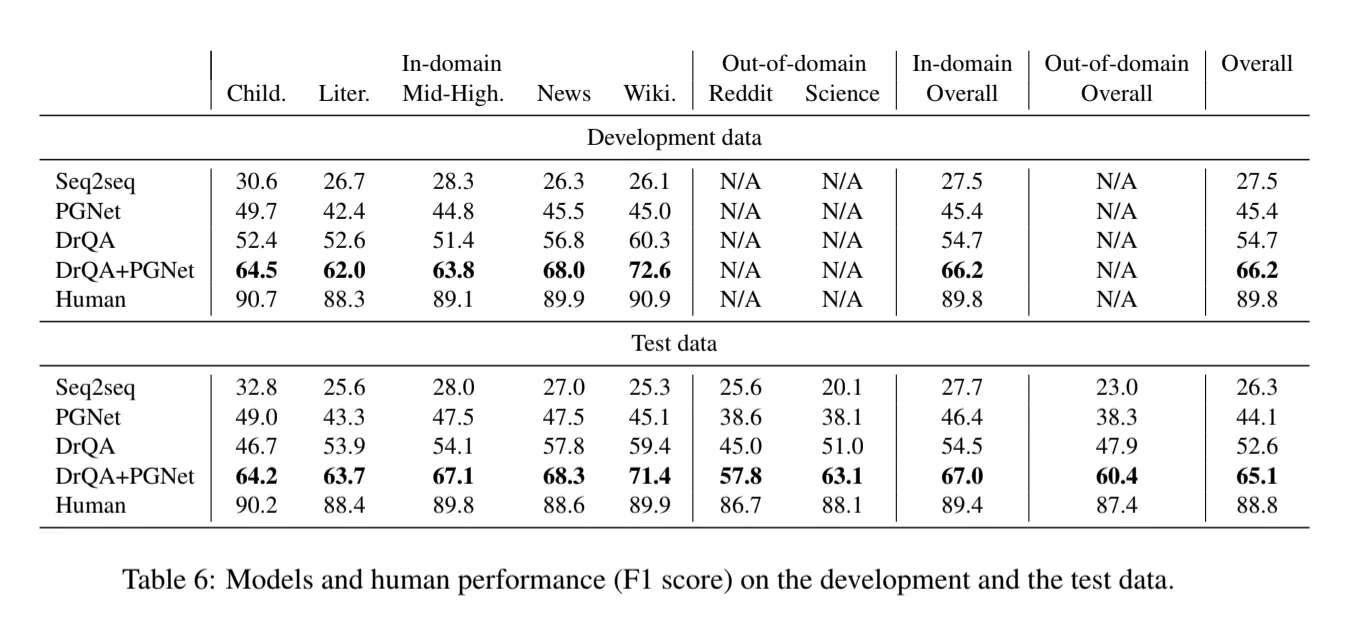

Domain diversity: Training covers 5 domains with testing on 7 domains, including 2 unseen during training

The performance gap is notable: humans achieve 88.8% F1 score while the best models at the time reached 65.1% F1, indicating substantial room for improvement.

Dataset Construction

CoQA was constructed using Amazon Mechanical Turk, pairing workers in a question-answer dialogue setup. One worker asked questions about a given passage while another provided answers. The answerer first highlighted the relevant text span, then rephrased the information using different words to create natural, abstractive responses.

This methodology produces answers that sound conversational. This makes the dataset highly realistic for dialogue applications.

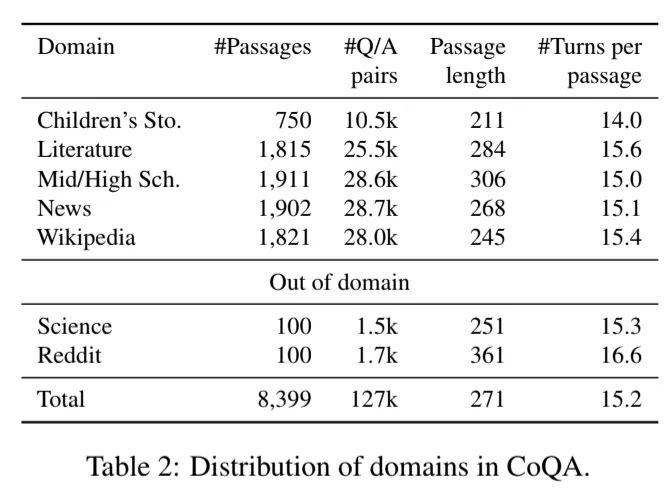

Domain Coverage

CoQA spans diverse text types to ensure evaluation across different writing styles and topics:

Training domains (5):

- Children’s stories from MCTest

- Literature from Project Gutenberg

- Educational content from RACE (middle/high school English)

- CNN news articles

- Wikipedia articles

Test-only domains (2):

- Science articles from AI2 Science Questions

- Creative writing from Reddit WritingPrompts

The inclusion of test-only domains provides a rigorous evaluation of model generalization to unseen text types.

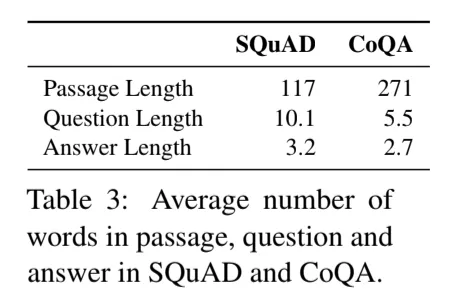

Comparison with Existing Datasets

Prior to CoQA, the dominant question answering benchmark was SQuAD (Stanford Question Answering Dataset). SQuAD established foundations for reading comprehension and presented specific constraints:

- SQuAD 1.0: 100,000+ questions requiring exact text extraction from Wikipedia passages

- SQuAD 2.0: Added 50,000+ unanswerable questions to test when no answer exists

SQuAD treats each question independently and requires only extractive answers. CoQA addresses these constraints through conversational context and abstractive responses.

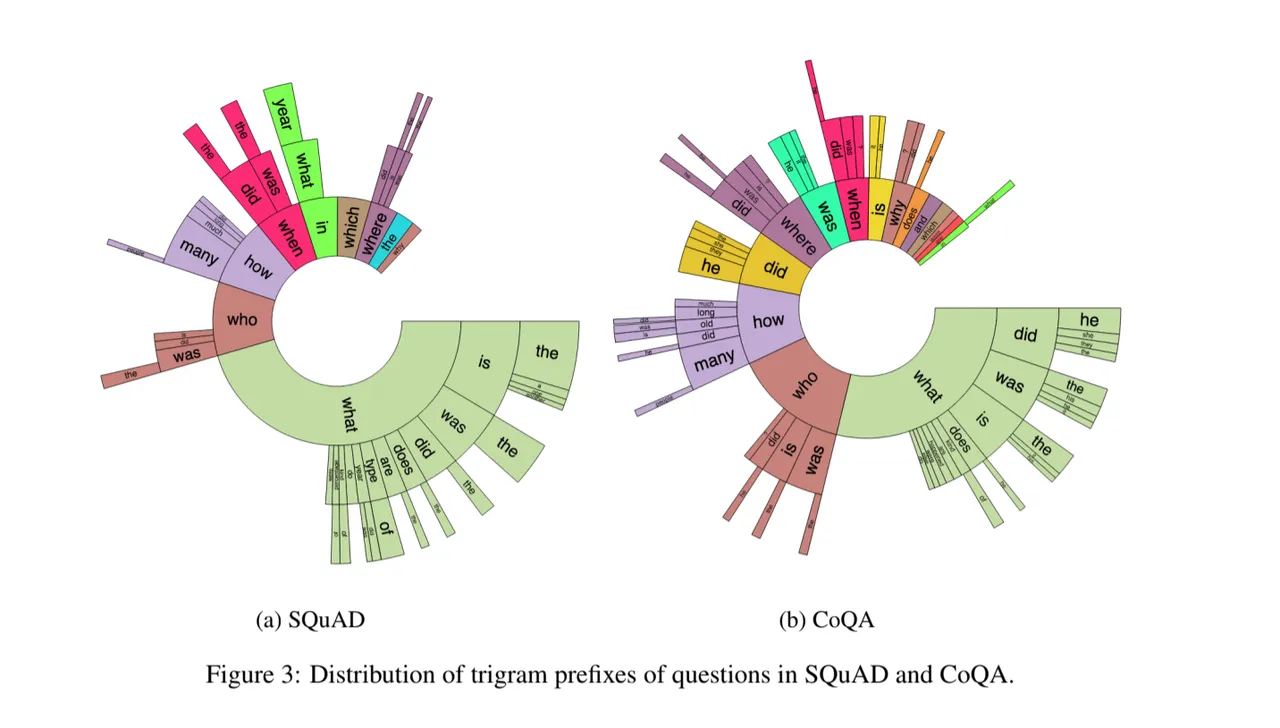

Question and Answer Analysis

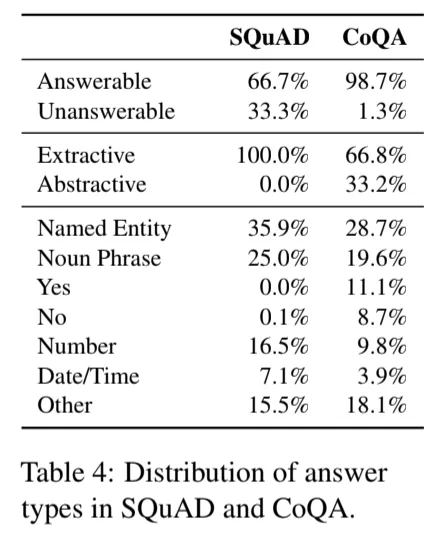

The differences between SQuAD and CoQA extend beyond conversational context:

Question diversity: SQuAD heavily favors “what” questions (~50%). CoQA shows a more balanced distribution across question types, reflecting natural conversation patterns.

Context dependence: CoQA includes challenging single-word questions like “who?”, “where?”, or “why?” that depend entirely on dialogue history.

Answer characteristics: CoQA answers vary significantly in length and style. SQuAD primarily features extractive spans.

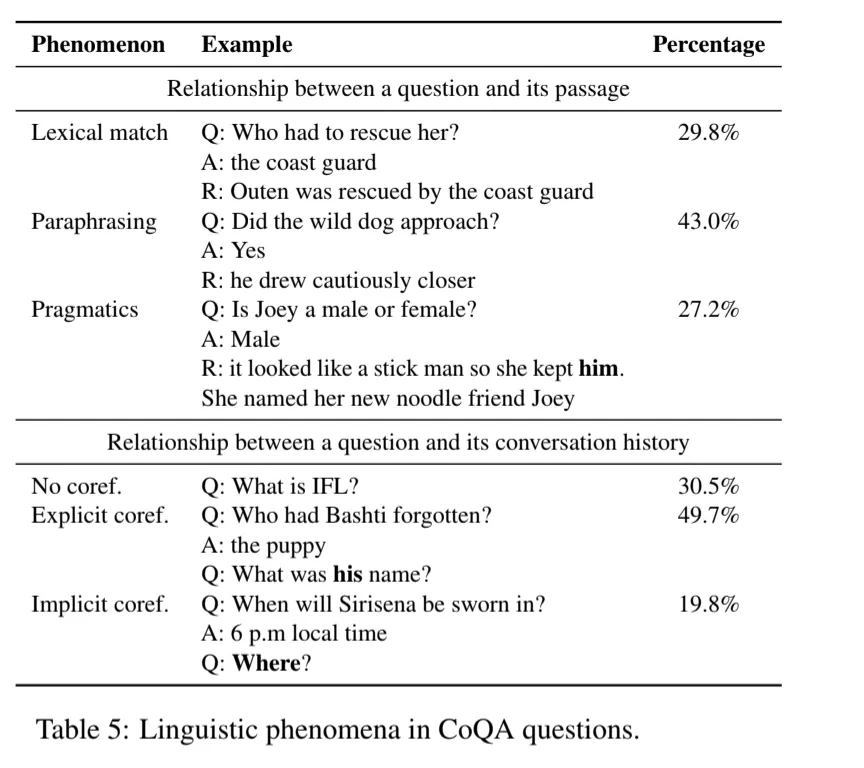

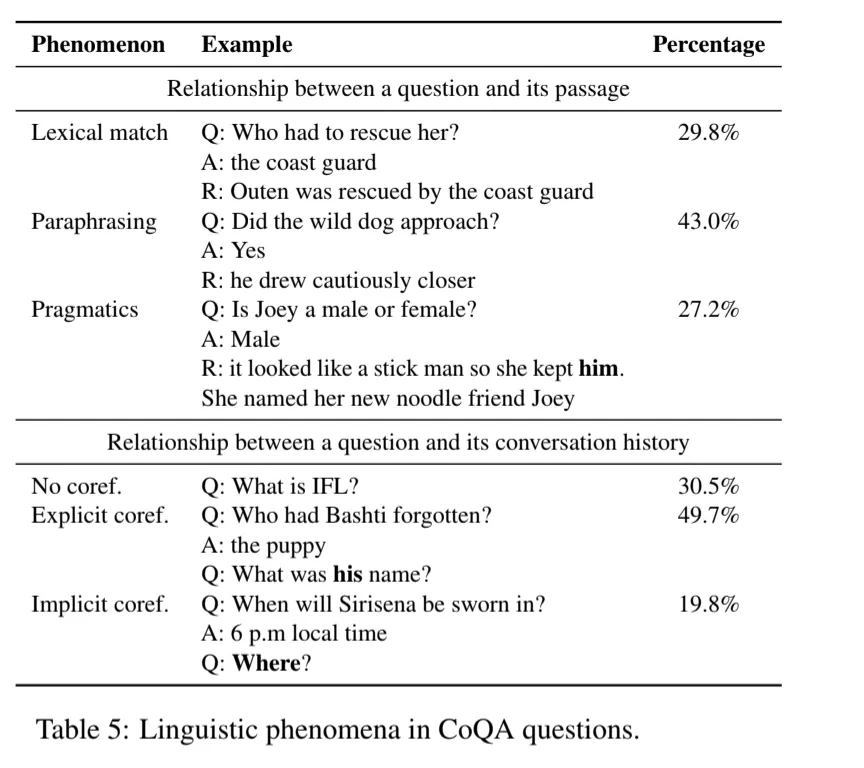

The Coreference Challenge

CoQA’s difficulty stems largely from its reliance on coreference resolution (determining when different expressions refer to the same entity). This remains a challenging research problem in NLP.

Coreference types in CoQA:

- Explicit coreferences (~50% of questions): Clear indicators like pronouns (“him,” “it,” “her,” “that”)

- Implicit coreferences (~20% of questions): Context-dependent references requiring inference (e.g., asking “where?” without specifying what)

These linguistic phenomena make CoQA more difficult than traditional reading comprehension, as models must resolve references across dialogue turns while maintaining conversational coherence.

Performance Benchmarks

Models faced significant challenges on CoQA, with substantial room for improvement:

The performance gap between human and machine capabilities highlighted conversational question answering as a challenging frontier in NLP research.

Research Impact and Future Directions

CoQA represents a step toward more natural conversational AI systems. By requiring models to handle dialogue context, coreference resolution, and abstractive reasoning simultaneously, it challenges current NLP system capabilities.

The dataset’s leaderboard provides a benchmark for measuring progress on this task. As models improve on CoQA, we can expect advances in conversational AI applications, from chatbots to virtual assistants that engage in more natural, context-aware dialogue.

CoQA’s contribution to the field aims to parallel ImageNet’s impact on computer vision, providing a challenging, well-constructed benchmark that drives research toward more capable AI systems.

A Builder’s Perspective: CoQA in the Era of LLMs

Looking back at CoQA from the perspective of modern production systems, this dataset was highly prescient. The challenges it introduced, such as multi-turn reasoning, coreference resolution, and abstractive answering, are the exact capabilities we now expect from instruction-tuned Large Language Models (LLMs).

When building document processing pipelines at scale, we rarely extract isolated facts. Users want to chat with their documents, asking follow-up questions like, “What does that mean for the Q3 budget?” Resolving “that” to a previous turn’s context is exactly what CoQA formalized. Datasets like CoQA laid the groundwork for the conversational interfaces we build today, shifting the field’s focus from simple extraction to genuine dialogue comprehension.

References

Reddy, S., Chen, D., & Manning, C. D. (2019). CoQA: A conversational question answering challenge. Transactions of the Association for Computational Linguistics, 7, 249-266.