Paper Information

Citation: Kornilova, A., Argyle, D., & Eidelman, V. (2018). Party Matters: Enhancing Legislative Embeddings with Author Attributes for Vote Prediction. arXiv preprint arXiv:1805.08182. https://arxiv.org/abs/1805.08182

Publication: arXiv 2018

What kind of paper is this?

This is a Method paper. It proposes a novel neural architecture that modifies how bill embeddings are constructed by explicitly incorporating sponsor metadata alongside text. The authors validate this method by comparing it against state-of-the-art baselines (MWE and CNN text-only models) and demonstrating superior performance in a newly defined “out-of-session” evaluation setting.

What is the motivation?

Existing models for predicting legislative roll-call votes rely heavily on text or voting history within a single session. However, these models fail to generalize across sessions because the underlying data generation process changes - specifically, the ideological position of bills on similar topics shifts depending on which party is in power. A model trained on a single session learns an implicit ideological prior that becomes inaccurate when the political context changes in subsequent sessions.

What is the novelty here?

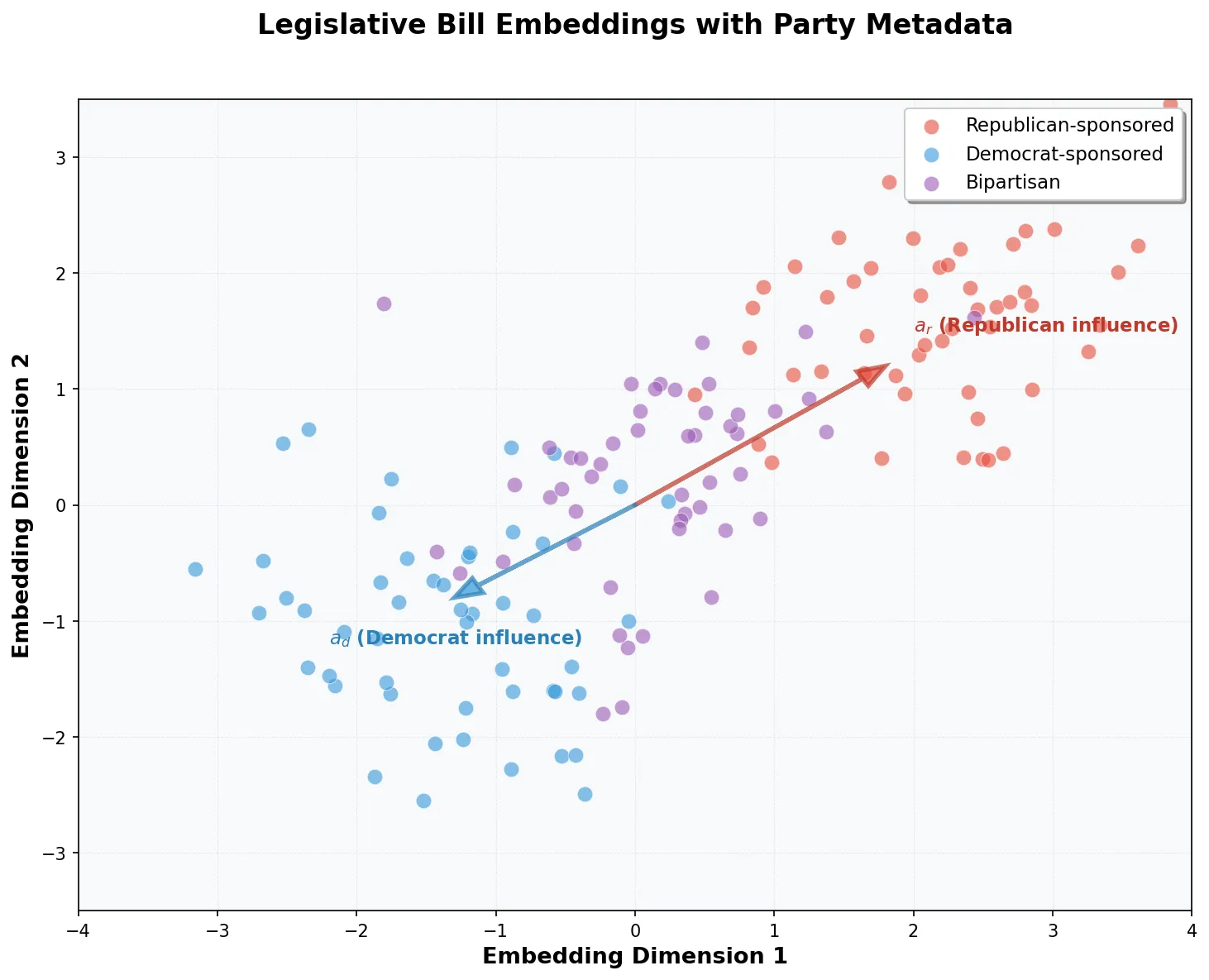

The core novelty is a neural architecture that augments bill text representations with sponsor ideology, specifically the percentage of Republican vs. Democrat sponsors.

- Sponsor-Weighted Embeddings: Instead of a static text embedding, they compute a composite embedding where the text representation is weighted by party sponsorship percentages ($p_r, p_d$) and party-specific influence vectors ($a_r, a_d$).

- Out-of-Session Evaluation: They introduce a rigorous evaluation setting where models trained on past sessions (e.g., 2005-2012) are tested on future sessions (e.g., 2013-2014) to test generalization, which previous work had ignored.

What experiments were performed?

The authors evaluated their models using a dataset of U.S. Congressional bills from 2005 to 2016.

- Models Tested: They compared text-only models (MWE, CNN) against metadata-augmented versions (MWE+Meta, CNN+Meta) and a “Meta-Only” baseline (using dummy text).

- Settings:

- In-Session: 5-fold cross-validation on 2005-2012 data.

- Out-of-Session: Training on 2005-2012 and testing on 2013-2014 and 2015-2016.

- Baselines: Comparisons included a “Guess Yes” baseline and an SVM trained on bag-of-words summaries with sponsor indicators.

What were the outcomes and conclusions drawn?

- Metadata is Critical: Augmenting text with sponsor metadata consistently outperformed text-only models. The

CNN+Metamodel achieved the highest accuracy in most settings (e.g., 86.21% in-session vs 83.24% for CNN). - Generalization: Text-only models degraded significantly in out-of-session testing (dropping from ~83% to ~77% or lower), confirming that text alone fails to capture shifting ideological contexts.

- Sponsor Signal: The

Meta-Onlymodel (using no text) outperformed text-only models in the 2013-2014 out-of-session test, suggesting that in some contexts, who wrote the bill matters more than what is in it for prediction. - Performance Boost: The proposed architecture achieved an average 4% accuracy boost over the previous state-of-the-art.

Reproducibility Details

Data

- Source: Collected from GovTrack, covering the 106th to 111th Congressional sessions.

- Content: Non-unanimous roll call votes, full text of bills/resolutions, and Congressional Research Service (CRS) summaries.

- Filtering: Bills with unanimous votes (defined as <1% “no” votes) were excluded.

- Preprocessing:

- Text lowercased and stop-words removed.

- Summaries truncated to $N=400$ words; full text truncated to $N=2000$ words (80th percentile lengths).

- Splits:

- Training: Sessions 2005-2012 (1718 bills).

- Testing: Sessions 2013-2014 (360 bills) and 2015-2016 (382 bills).

Algorithms

- Bill Representation ($v_B$): $$v_{B}=((a_{r}p_{r})\cdot T_{r})+((a_{d}p_{d})\cdot T_{d})$$ Where $T$ is the text embedding (CNN or MWE), $p$ is the percentage of sponsors from a party, and $a$ is a learnable party influence vector.

- Vote Prediction:

- Project bill embedding to legislator space: $v_{BL}=W_{B}v_{B}+b_{B}$.

- Alignment score: $W_{v}(v_{BL}\odot v_{L})+b_{v}$ (using element-wise multiplication rather than dot product).

- Output: Sigmoid activation.

- Optimization: AdaMax algorithm with binary cross-entropy loss.

Models

- Text Encoders:

- CNN: 4-grams with 400 filter maps.

- MWE: Mean Word Embedding.

- Embeddings:

- Initialized with 50-dimensional GloVe vectors.

- Embeddings are non-static (updated during training).

- Legislator embedding size ($v_L$): 25 dimensions.

- Initialization: Weights initialized with Glorot uniform distribution.

Evaluation

- Metrics: Accuracy.

- Comparison:

- In-session: 5-fold cross-validation.

- Out-of-session: Train on past sessions, predict future sessions.

Hardware

- Training Config: Models trained for 50 epochs with mini-batches of size 50.

Citation

@misc{kornilovaPartyMattersEnhancing2018,

title = {Party {{Matters}}: {{Enhancing Legislative Embeddings}} with {{Author Attributes}} for {{Vote Prediction}}},

shorttitle = {Party {{Matters}}},

author = {Kornilova, Anastassia and Argyle, Daniel and Eidelman, Vlad},

year = 2018,

month = may,

number = {arXiv:1805.08182},

eprint = {1805.08182},

primaryclass = {cs},

publisher = {arXiv},

archiveprefix = {arXiv},

}