Paper Information

Citation: Albergo, M. S., & Vanden-Eijnden, E. (2023). Building Normalizing Flows with Stochastic Interpolants. The Eleventh International Conference on Learning Representations.

Publication: ICLR 2023

What kind of paper is this?

This is primarily a Method paper ($\Psi_{\text{Method}}$), with significant Theory ($\Psi_{\text{Theory}}$) contributions.

The authors propose a specific algorithm (“InterFlow”) for constructing generative models based on continuous-time normalizing flows. Its central contribution is a new training objective (a simple quadratic loss) that bypasses the computational bottlenecks of previous methods. The paper compares against state-of-the-art continuous flows (FFJORD, OT-Flow) and diffusion models. The theoretical component establishes that the interpolant density satisfies the continuity equation and bounds the Wasserstein-2 distance of the transport.

What is the motivation?

The primary motivation is to overcome the computational inefficiency of training Continuous Normalizing Flows (CNFs) using Maximum Likelihood Estimation (MLE). Standard CNF training requires backpropagating through numerical ODE solvers, which is costly and limits scalability.

Additionally, while score-based diffusion models (SDEs) have achieved high sample quality, they theoretically require infinite time integration and rely on specific noise schedules. The authors aim to establish a method that works strictly with Probability Flow ODEs on finite time intervals, retaining the flexibility to connect arbitrary densities without the complexity of SDEs or the cost of standard ODE adjoint methods.

What is the novelty here?

The core novelty is the Stochastic Interpolant framework:

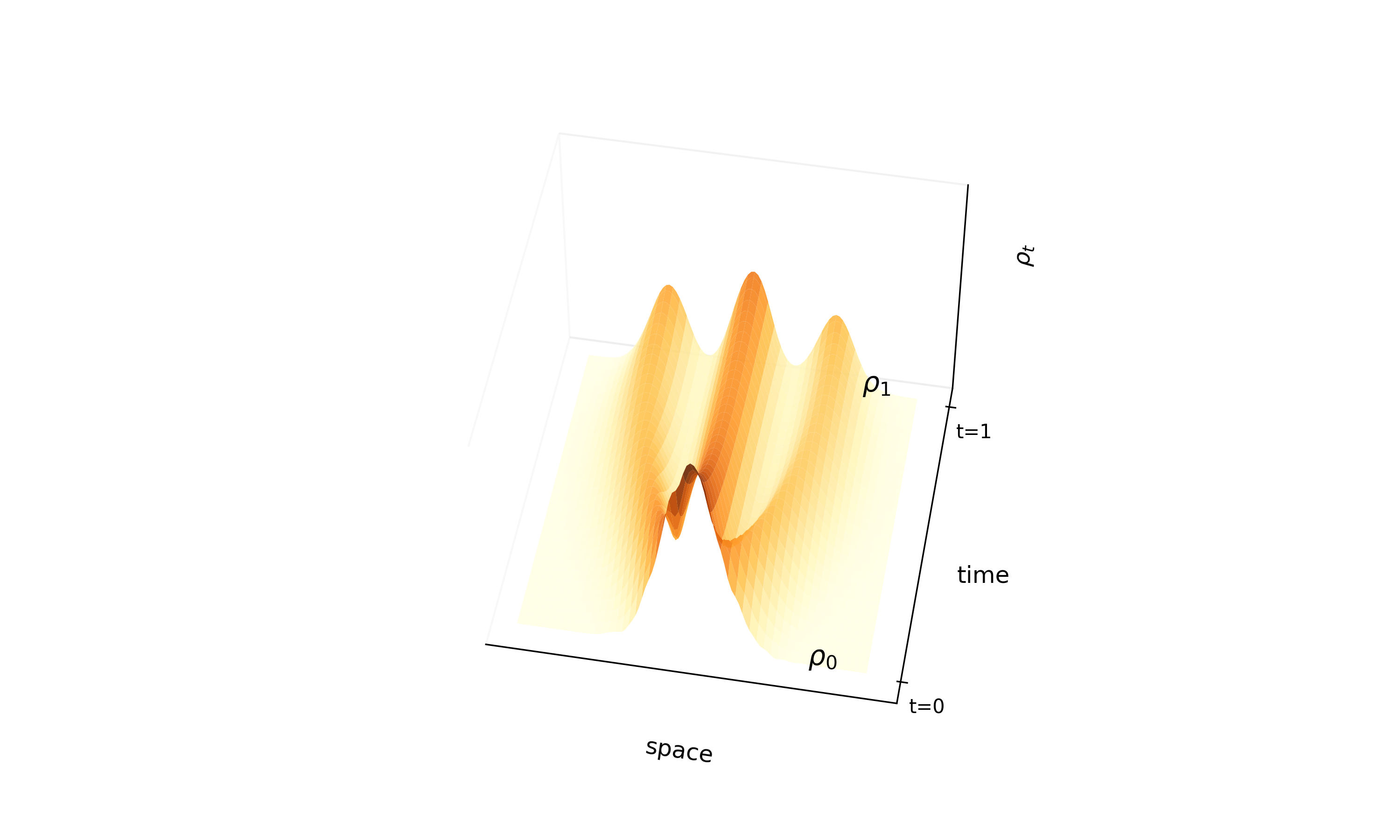

- Explicit Interpolant Construction: The method defines a time-dependent interpolant $x_t = I_t(x_0, x_1)$ (e.g., trigonometric interpolation) that connects samples from the base density $\rho_0$ and target $\rho_1$.

- Simulation-Free Training: The velocity field $v_t(x)$ of the probability flow is learned by minimizing a simple quadratic objective: $G(\hat{v}) = \mathbb{E}[|\hat{v}_t(x_t)|^2 - 2\partial_t x_t \cdot \hat{v}_t(x_t)]$. This avoids ODE integration during training entirely.

- Decoupling Path and Optimization: The choice of path (interpolant) is separated from the optimization of the velocity field, unlike MLE methods where the path and objective are coupled.

- Connection to Score-Based Models: The authors show that for Gaussian base densities and trigonometric interpolants, the learned velocity field is explicitly related to the score function $\nabla \log \rho_t$, providing a theoretical bridge between CNFs and diffusion models.
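
The simulation-free objective listed above can be illustrated in a few lines. This is a minimal sketch in plain Python, not the authors' implementation. It assumes the trigonometric interpolant $I_t(x_0, x_1) = \cos(\frac{\pi t}{2})x_0 + \sin(\frac{\pi t}{2})x_1$; the toy densities, the `sample_batch` helper, and the two candidate fields are hypothetical choices made only for the example.

```python
import math
import random


def interpolant(t, x0, x1):
    """Trigonometric interpolant I_t(x0, x1), applied coordinate-wise."""
    c, s = math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)
    return [c * a + s * b for a, b in zip(x0, x1)]


def interpolant_dt(t, x0, x1):
    """Time derivative of the trigonometric interpolant."""
    c, s = math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)
    return [0.5 * math.pi * (-s * a + c * b) for a, b in zip(x0, x1)]


def quadratic_loss(v_hat, batch):
    """Monte Carlo estimate of G(v_hat) over (t, x0, x1) triples.

    No ODE is integrated: each term only needs x_t and d/dt x_t.
    """
    total = 0.0
    for t, x0, x1 in batch:
        xt = interpolant(t, x0, x1)
        dxt = interpolant_dt(t, x0, x1)
        v = v_hat(t, xt)
        total += sum(vi * vi for vi in v)
        total -= 2.0 * sum(di * vi for di, vi in zip(dxt, v))
    return total / len(batch)


def sample_batch(n, dim, rng):
    """Hypothetical toy pair: rho_0 = N(0, I), rho_1 = N(3, I), t ~ U(0, 1)."""
    batch = []
    for _ in range(n):
        t = rng.random()
        x0 = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        x1 = [rng.gauss(3.0, 1.0) for _ in range(dim)]
        batch.append((t, x0, x1))
    return batch


def zero_field(t, x):
    """Candidate velocity that never moves anything."""
    return [0.0] * len(x)


def constant_field(t, x):
    """Candidate velocity that pushes every coordinate toward the target mean."""
    return [1.0] * len(x)


rng = random.Random(0)
batch = sample_batch(1024, 2, rng)
loss_zero = quadratic_loss(zero_field, batch)
loss_const = quadratic_loss(constant_field, batch)
```

Because the target mean lies to the right of the base mean, the constant field scores a lower loss than the zero field, which is the sense in which minimizing $G$ steers $\hat{v}$ toward the true velocity. In practice $\hat{v}$ would be a neural network trained by stochastic gradient descent on this estimate.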

What experiments were performed?

The authors performed validation across synthetic, tabular, and image domains:

- 2D Density Estimation: Benchmarked on “Checkerboard”, “8 Gaussians”, and “Spirals” to visualize mode coverage and transport smoothness.

- High-Dimensional Tabular Data: Evaluated on standard benchmarks (POWER, GAS, HEPMASS, MINIBOONE, BSDS300) comparing Negative Log Likelihood (NLL) against FFJORD, OT-Flow, and others.

- Image Generation: Trained models on CIFAR-10 ($32 \times 32$), ImageNet ($32 \times 32$), and Oxford Flowers ($128 \times 128$) to test scalability.

- Ablations: Investigated optimizing the interpolant path itself (e.g., learning Fourier coefficients for the path) to approach optimal transport and minimize path length.

What outcomes/conclusions?

- Performance: The method matches or surpasses conventional ODE flows (like FFJORD) in terms of NLL while being significantly cheaper to train.

- Efficiency: The training cost per epoch is constant (simulation-free), whereas MLE-based ODE methods see growing costs as the dynamics become more complex.

- Scalability: The method successfully scales to $128 \times 128$ resolution on a single GPU, a resolution previously unreachable for ab-initio ODE flows.

- Flexibility: The framework can connect any two arbitrary densities (e.g., connecting two different complex 2D distributions) without needing one to be Gaussian.

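- Optimal Transport: Solving the max-min problem, in which the objective is first minimized over the velocity field and the resulting value is then maximized over the choice of interpolant, yields a solution to the Benamou-Brenier optimal transport problem.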
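
The optimal transport claim can be motivated by completing the square in the objective. The following is a sketch under the assumption that the true velocity is the conditional expectation $v_t(x) = \mathbb{E}[\partial_t I_t(x_0, x_1) \mid x_t = x]$, so that the cross term can be rewritten with the tower property:

$$G(\hat{v}) = \mathbb{E}_{t, x_0, x_1}[|\hat{v}_t(x_t) - v_t(x_t)|^2] - \mathbb{E}_{t, x_0, x_1}[|v_t(x_t)|^2]$$

$$\min_{\hat{v}} G(\hat{v}) = -\mathbb{E}_{t, x_0, x_1}[|v_t(x_t)|^2] = -\int_0^1 \int |v_t(x)|^2 \rho_t(x) \, dx \, dt$$

The minimum is attained at $\hat{v} = v$ and equals minus the kinetic energy of the path. Maximizing this value over the interpolant therefore minimizes the kinetic energy, which is the quantity the Benamou-Brenier formulation minimizes, provided the family of interpolants is rich enough to reach the optimal path.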

Reproducibility Details

Data

- Tabular Datasets: POWER (6D), GAS (8D), HEPMASS (21D), MINIBOONE (43D), BSDS300 (63D).

- Training points range from ~30k (MINIBOONE) to ~1.6M (POWER).

- Image Datasets:

- CIFAR-10 ($32 \times 32$, 50k training points).

- ImageNet ($32 \times 32$, ~1.28M training points).

- Oxford Flowers ($128 \times 128$, ~315k training points).

- Time Sampling: Time $t$ is sampled from a Beta distribution during training (reweighting) to focus learning near the target.
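
A rough illustration of skewed time sampling, using only the Python standard library. The shape parameters `alpha=2.0` and `beta=1.0` are hypothetical; the summary above does not state the values used, nor whether the per-sample loss is reweighted to compensate for the non-uniform density.

```python
import random


def sample_times(n, alpha=2.0, beta=1.0, seed=0):
    """Draw n training times in [0, 1] from Beta(alpha, beta).

    With alpha > beta the density concentrates near t = 1, the target end.
    """
    rng = random.Random(seed)
    return [rng.betavariate(alpha, beta) for _ in range(n)]


times = sample_times(10_000)
# Beta(alpha, beta) has mean alpha / (alpha + beta), i.e. 2/3 here.
mean_t = sum(times) / len(times)
```

Swapping the two shape parameters would instead concentrate samples near the base density at $t = 0$.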

Algorithms

- Interpolant: The primary interpolant used is trigonometric: $I_t(x_0, x_1) = \cos(\frac{\pi t}{2})x_0 + \sin(\frac{\pi t}{2})x_1$.

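- General linear interpolant: $I_t = a_t x_0 + b_t x_1$ with $a_0 = b_1 = 1$ and $a_1 = b_0 = 0$, so that $I_0 = x_0$ and $I_1 = x_1$. The trigonometric interpolant is the case $a_t = \cos(\frac{\pi t}{2})$, $b_t = \sin(\frac{\pi t}{2})$.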

- Loss Function:

$$G(\hat{v}) = \mathbb{E}_{t, x_0, x_1}[|\hat{v}_t(x_t)|^2 - 2\partial_t I_t(x_0, x_1) \cdot \hat{v}_t(x_t)]$$

- The expectation is amenable to empirical estimation using batches of $x_0, x_1, t$.

- Sampling: Numerical integration using Dormand-Prince (Runge-Kutta 4/5).

- Optimization: SGD/Adam variants.
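
Sampling amounts to integrating the learned velocity field from $t = 0$ to $t = 1$. The sketch below makes two substitutions that should be read as assumptions: it uses a fixed-step classical RK4 integrator as a simpler stand-in for the adaptive Dormand-Prince scheme, and it replaces the learned network with a closed-form velocity for a hypothetical 1D pair $\rho_0 = N(0, 1)$, $\rho_1 = N(0, \sigma^2)$ under the trigonometric interpolant, so that the result can be checked by hand.

```python
import math

SIGMA = 2.0  # hypothetical standard deviation of the 1D Gaussian target


def velocity(t, x):
    """Closed-form v_t(x) for independent x0 ~ N(0, 1), x1 ~ N(0, SIGMA^2).

    Here x_t is Gaussian with variance c^2 + SIGMA^2 s^2, and
    v_t(x) = (0.5 * d/dt variance) / variance * x.
    """
    c, s = math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)
    variance = c * c + SIGMA * SIGMA * s * s
    half_dvariance = 0.5 * math.pi * (SIGMA * SIGMA - 1.0) * s * c
    return half_dvariance / variance * x


def rk4_flow(v, x, n_steps=100, t0=0.0, t1=1.0):
    """Integrate dx/dt = v(t, x) from t0 to t1 with classical RK4."""
    h = (t1 - t0) / n_steps
    t = t0
    for _ in range(n_steps):
        k1 = v(t, x)
        k2 = v(t + 0.5 * h, x + 0.5 * h * k1)
        k3 = v(t + 0.5 * h, x + 0.5 * h * k2)
        k4 = v(t + h, x + h * k3)
        x = x + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        t += h
    return x


# Forward map: a base sample at x = 1.0 should land near SIGMA * 1.0.
pushed = rk4_flow(velocity, 1.0)
# Reverse map: integrating from t = 1 back to t = 0 undoes the transport.
pulled = rk4_flow(velocity, pushed, t0=1.0, t1=0.0)
```

For this pair the exact flow is $X_t = \sqrt{\cos^2(\frac{\pi t}{2}) + \sigma^2 \sin^2(\frac{\pi t}{2})}\, X_0$, so the forward map scales a base sample by $\sigma$. An adaptive solver such as Dormand-Prince would choose the step size automatically instead of taking a fixed `n_steps`.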

Models

- Tabular Architectures:

- Feed-forward networks with 3-5 hidden layers.

- Hidden widths: 512-1024 units.

- Activation: ReLU (general) or ELU (BSDS300).

- Image Architectures:

- U-Net based on the DDPM implementation.

- Hidden dimension: 256.

- Sinusoidal time embeddings used.
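
For readers unfamiliar with sinusoidal time embeddings, here is a minimal sketch of one common formulation (a geometric ladder of frequencies, with sines followed by cosines). The exact variant, the embedding size, and the `scale` factor that stretches $t \in [0, 1]$ before embedding are assumptions for illustration; the summary above only states that sinusoidal embeddings are used.

```python
import math


def sinusoidal_embedding(t, dim=8, max_period=10000.0, scale=1000.0):
    """Map a scalar time t to a dim-dimensional vector of sines and cosines."""
    if dim < 4 or dim % 2 != 0:
        raise ValueError("dim must be an even number >= 4")
    half = dim // 2
    # Frequencies decay geometrically from 1 down to 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / (half - 1)) for i in range(half)]
    args = [scale * t * f for f in freqs]
    return [math.sin(a) for a in args] + [math.cos(a) for a in args]


emb_start = sinusoidal_embedding(0.0)
emb_end = sinusoidal_embedding(1.0)
```

The resulting vector is typically passed through a small feed-forward layer and injected into each block of the U-Net, giving the network a smooth, multi-scale representation of time.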

Evaluation

- Metrics: Negative Log Likelihood (NLL) in nats or bits/dim (BPD), Fréchet Inception Distance (FID) for images.

- Baselines: FFJORD, Glow, Real NVP, OT-Flow, ScoreFlow, DDPM.
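
The two NLL units differ only by a change of logarithm base and a division by the data dimension. A small sketch of the conversion follows; the numeric value is illustrative, not a reported result, and any offset from dequantization or pixel rescaling is deliberately left out.

```python
import math


def nats_to_bits_per_dim(nll_nats, dim):
    """Convert a per-sample NLL in nats to bits per dimension."""
    return nll_nats / (dim * math.log(2.0))


cifar_dim = 3 * 32 * 32  # 3072 values per CIFAR-10 image
# Illustrative input chosen so that the answer is exactly 3 bits/dim.
example_nll = 3.0 * cifar_dim * math.log(2.0)
example_bpd = nats_to_bits_per_dim(example_nll, cifar_dim)
```

Tabular benchmarks are usually reported directly in nats, while image benchmarks use bits/dim so that results are comparable across resolutions.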

Hardware

- Compute: All models were trained on a single NVIDIA A100 GPU.

- Training Time:

- Tabular: $10^5$ steps.

- Images: $1.5 \times 10^5$ to $6 \times 10^5$ steps.

- Speedup: Demonstrated a ~400x speedup over FFJORD on the MINIBOONE dataset.

Citation

@inproceedings{albergoBuildingNormalizingFlows2022,

title = {Building {{Normalizing Flows}} with {{Stochastic Interpolants}}},

booktitle = {The {{Eleventh International Conference}} on {{Learning Representations}}},

author = {Albergo, Michael Samuel and {Vanden-Eijnden}, Eric},

year = 2023,

url = {https://openreview.net/forum?id=li7qeBbCR1t}

}