Paper Information

Citation: Liu, X., Gong, C., & Liu, Q. (2023). Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow. International Conference on Learning Representations (ICLR). https://openreview.net/forum?id=XVjTT1nw5z

Publication: ICLR 2023


What kind of paper is this?

This is primarily a Method paper, with a significant Theory component.

- Method: It proposes “Rectified Flow,” a novel generative framework that learns ordinary differential equations (ODEs) that transport one distribution to another along paths that are as straight as possible. It introduces the “Reflow” algorithm to iteratively straighten these paths.

- Theory: It provides rigorous proofs connecting the method to Optimal Transport, showing that the rectification process yields a coupling with non-increasing convex transport costs and that recursive reflow reduces the curvature of trajectories.
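For reference, the two guarantees can be written out. This is a paraphrase, not a verbatim theorem statement. It assumes the drift is the exact least-squares minimizer $v(x, t) = \mathbb{E}[X_1 - X_0 \mid X_t = x]$ and that the ODE is well posed. It uses $X_t = tX_1 + (1-t)X_0$ and the rectified flow $dZ_t = v(Z_t, t)\,dt$ with $Z_0 = X_0$:

$$\mathrm{Law}(Z_t) = \mathrm{Law}(X_t) \quad \text{for all } t \in [0, 1], \qquad \mathbb{E}\left[c(Z_1 - Z_0)\right] \le \mathbb{E}\left[c(X_1 - X_0)\right] \quad \text{for every convex } c.$$

The first property means the flow still transports $\pi_0$ to $\pi_1$. The second is the non-increasing convex transport cost.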

What is the motivation?

The work addresses two main challenges in unsupervised learning: generative modeling (generating data from noise) and domain transfer (mapping between two observed distributions).

- Inefficiency of ODE/SDE Models: Continuous-time models (like Score-based Generative Models and DDPMs) require simulating diffusions over many steps, resulting in high computational costs during inference.

- Training Difficulties of GANs: While fast (one-step), GANs suffer from training instability and mode collapse.

- Disconnection: Generative modeling and domain transfer are often treated as separate tasks requiring different techniques.

The authors aim to unify these tasks into a single “transport mapping” problem while bridging the gap between high-quality continuous models and fast one-step models.

What is the novelty here?

The core novelty is the Rectified Flow framework and the Reflow procedure.

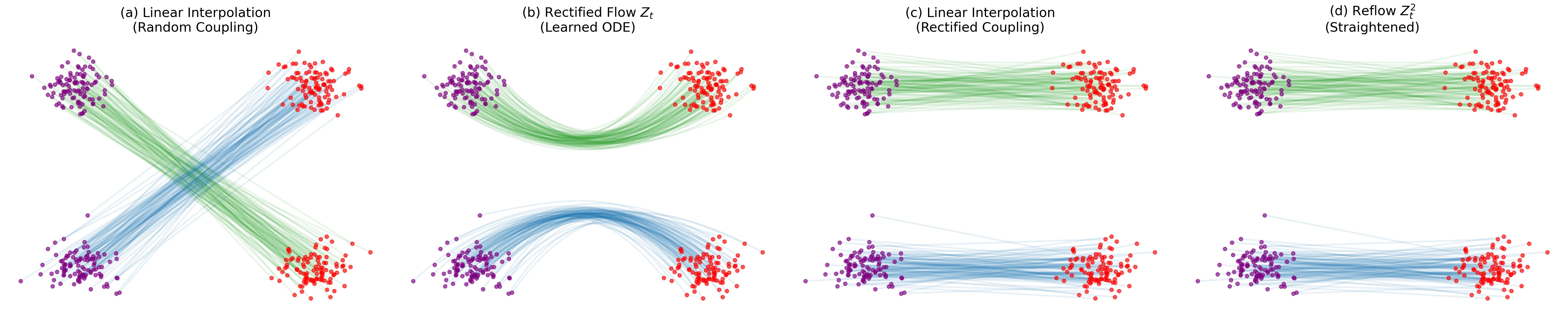

- Straight-Line ODEs: Unlike diffusion models that rely on stochastic paths or specific forward processes, Rectified Flow learns an ODE drift $v$ whose trajectories follow, as closely as possible, the straight lines connecting paired samples $(X_0, X_1)$. This is achieved via a simple least-squares optimization problem.

- Reflow (Iterative Straightening): The authors introduce a recursive training procedure where a new flow is trained on the data pairs $(Z_0, Z_1)$ generated by the previous flow. Theoretical analysis shows this reduces the “transport cost” and straightens the trajectories, allowing for accurate 1-step simulation (effectively converting the ODE into a one-step model).

- Unified Framework: The method uses the exact same algorithm for generation ($\pi_0$ is Gaussian) and domain transfer ($\pi_0$ is a source dataset), removing the need for adversarial losses or cycle-consistency constraints.
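A short worked argument shows why straightness is what makes one-step simulation possible. This illustration was written for these notes and is not quoted from the paper. If a trajectory is exactly straight and traversed at constant speed, its velocity is constant, so a single Euler step of size 1 lands exactly on the endpoint:

$$Z_t = tZ_1 + (1-t)Z_0 \;\Rightarrow\; v(Z_t, t) = \dot{Z}_t = Z_1 - Z_0 \;\Rightarrow\; Z_0 + 1 \cdot v(Z_0, 0) = Z_1.$$

Reflow only makes trajectories approximately straight. In practice, one step therefore leaves a small residual error rather than none.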

What experiments were performed?

The authors validated the method across image generation, translation, and domain adaptation tasks.

- Unconditional Image Generation:

  - Dataset: CIFAR-10 ($32\times32$).

  - Baselines: Compared against GANs (StyleGAN2), diffusion models (DDPM, NCSN++), and fast-sampling or distillation methods (DPM-Solver, Progressive Distillation).

  - High-Res: Validated on LSUN Bedroom/Church, CelebA-HQ, and AFHQ ($256\times256$).

- Image-to-Image Translation:

  - Datasets: AFHQ (Cat $\leftrightarrow$ Dog/Wild), MetFace $\leftrightarrow$ CelebA-HQ.

  - Setup: Transferring styles while preserving semantic identity (using a classifier-based feature mapping metric).

- Domain Adaptation:

  - Datasets: DomainNet, Office-Home.

  - Metric: Classification accuracy on the transferred test data.

What were the outcomes and conclusions drawn?

- Superior 1-Step Generation: On CIFAR-10 with a single Euler step ($N=1$), the “2-Rectified Flow” (one reflow step) and the “3-Rectified Flow” (two reflow steps), each followed by a final distillation step, achieved an FID of 4.85 and a Recall of 0.51, respectively. Both results outperform previous state-of-the-art one-step diffusion distillation methods.

- Straightening Effect: The “Reflow” procedure was empirically shown to reduce both the straightness measure (the mean squared deviation of trajectories from straight lines) and the transport cost, validating the theoretical claims.

- High-Quality Transfer: The model successfully performed image translation (e.g., Cat to Wild Animal) without paired data or cycle-consistency losses.

- Fast Simulation: After reflow, the method tolerates extremely coarse time discretization (down to $N=1$) with far smaller quality loss than the original flow. This largely removes the slow inference of standard ODE models.
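The paper quantifies “straightness” as $S(Z) = \int_0^1 \mathbb{E}\left[\|(Z_1 - Z_0) - \dot{Z}_t\|^2\right] dt$. The sketch below estimates it from simulated trajectories. It makes two assumptions not taken from the paper: trajectories are stored on a uniform time grid, and a finite-difference velocity stands in for the exact $\dot{Z}_t$. The function name `straightness` is hypothetical.

```python
import numpy as np


def straightness(traj):
    """Discrete estimate of S(Z) = int_0^1 E||(Z_1 - Z_0) - dZ_t/dt||^2 dt.

    traj: array of shape (N + 1, batch, dim) holding states at t = 0, 1/N, ..., 1.
    Returns 0 (up to floating-point error) when the sampled points of every
    trajectory lie on a straight line with equal spacing.
    """
    n = traj.shape[0] - 1
    total = traj[-1] - traj[0]               # Z_1 - Z_0, shape (batch, dim)
    velocity = np.diff(traj, axis=0) * n     # finite-difference velocity on each step
    dev = velocity - total[None]             # deviation from the straight-line velocity
    return float(np.mean(np.sum(dev ** 2, axis=-1)))
```

A lower value means straighter paths. Under this measure, a path that moves along a straight line at non-constant speed still counts as “not straight”, because a coarse Euler step would also mispredict it.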

Reproducibility Details

Data

The paper utilizes several standard computer vision benchmarks.

| Purpose | Dataset | Size/Resolution | Notes |
|---|---|---|---|
| Generation | CIFAR-10 | 32x32 | Standard split |
| Generation | LSUN (Bedroom, Church) | 256x256 | High-res evaluation |
| Generation | CelebA-HQ | 256x256 | High-res evaluation |
| Gen/Transfer | AFHQ (Cat, Dog, Wild) | 512x512 | Translation at 512x512; generation at 256x256 |
| Transfer | MetFace | 1024x1024 (native) | Resized for translation |
| Adaptation | DomainNet | Mixed | 345 categories, 6 domains |
| Adaptation | Office-Home | Mixed | 65 categories, 4 domains |

Algorithms

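Objective Function: The drift $v$ is trained by minimizing a least-squares regression objective: $$\min_{v} \int_{0}^{1} \mathbb{E}\left[\left\|(X_1 - X_0) - v(X_t, t)\right\|^2\right] dt,$$ where $X_t = tX_1 + (1-t)X_0$ is the linear interpolation between the coupled samples $X_0 \sim \pi_0$ and $X_1 \sim \pi_1$. The learned drift then defines the rectified flow $dZ_t = v(Z_t, t)\,dt$, started from $Z_0 \sim \pi_0$.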
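A minimal Monte Carlo estimate of the objective above can be sketched as follows. It replaces the integral over $t$ with uniform sampling of one $t$ per pair. The function name `rectified_flow_loss` and the array layout `(batch, dim)` are assumptions for illustration, not the authors' code.

```python
import numpy as np


def rectified_flow_loss(v, x0, x1, rng):
    """Estimate E_t E||(X_1 - X_0) - v(X_t, t)||^2 with t ~ U[0, 1].

    v      : callable v(x_t, t) returning an array shaped like x_t
    x0, x1 : arrays of shape (batch, dim) holding coupled samples (X_0, X_1)
    rng    : numpy Generator used to draw one time value per pair
    """
    t = rng.uniform(size=(x0.shape[0], 1))   # t ~ U[0, 1], broadcast over dim
    xt = t * x1 + (1.0 - t) * x0              # linear interpolation X_t
    target = x1 - x0                          # straight-line velocity
    residual = target - v(xt, t)
    return float(np.mean(np.sum(residual ** 2, axis=1)))
```

In actual training, `v` would be a neural network and this estimate would be minimized by stochastic gradient descent. Sampling $t$ uniformly gives an unbiased estimate of the integral over $[0, 1]$.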

Reflow Procedure: Iteratively updates the flow. Let $Z^k$ be the $k$-th rectified flow, with $Z^1$ trained on the original coupling $(X_0, X_1)$.

- Generate data pairs $(Z_0^k, Z_1^k)$ by drawing $Z_0^k \sim \pi_0$ and simulating the current flow $Z^k$ from $t=0$ to $t=1$.

- Train $Z^{k+1}$ using these pairs as the new $(X_0, X_1)$ in the objective above.
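The reflow loop can be sketched as below. This is a sketch under stated assumptions. `fit_drift` and `sample_pi0` are hypothetical callables: a trainer that minimizes the least-squares objective on given pairs, and a sampler for $\pi_0$. The simulation step uses a plain fixed-step Euler solver. In the paper, the trainer would be a U-Net optimized with the objective above; here it is just an interface.

```python
import numpy as np


def simulate(v, z0, n_steps):
    """Euler integration of dZ_t = v(Z_t, t) dt from t = 0 to t = 1 with step 1/n_steps."""
    z = np.array(z0, dtype=float)
    dt = 1.0 / n_steps
    for i in range(n_steps):
        t = np.full((z.shape[0], 1), i * dt)  # one time value per sample
        z = z + dt * v(z, t)
    return z


def reflow(fit_drift, x0, x1, sample_pi0, rounds, n_steps, rng):
    """Return the drifts of the 1-, 2-, ..., `rounds`-rectified flows.

    fit_drift(x0, x1) -> v  : hypothetical trainer minimizing the least-squares objective
    sample_pi0(n, rng)      : hypothetical sampler returning n draws from pi_0
    """
    v = fit_drift(x0, x1)                  # 1-rectified flow from the initial coupling
    drifts = [v]
    for _ in range(rounds - 1):
        z0 = sample_pi0(x0.shape[0], rng)  # Z_0^k ~ pi_0
        z1 = simulate(v, z0, n_steps)      # Z_1^k: simulate the current flow Z^k
        v = fit_drift(z0, z1)              # train Z^{k+1} on the pairs (Z_0^k, Z_1^k)
        drifts.append(v)
    return drifts
```

Each round pays for one full pass of ODE simulation to build its training pairs. This is why the paper typically stops after one or two reflow steps.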

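ODE Solver:

- Training: No ODE solver is needed. $X_t$ is computed in closed form by linear interpolation, so training is simulation-free.

- Inference: Euler method (constant step size $1/N$) or RK45 (adaptive). Generating reflow pairs likewise requires simulating the ODE.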
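The trade-off between step count and accuracy can be illustrated with a toy fixed-step Euler solver. The function `euler_sample` and both drifts are hypothetical examples, not models from the paper. With a perfectly straight, constant-velocity flow, one step is exact; a curved flow needs many steps.

```python
import numpy as np


def euler_sample(v, z0, n_steps):
    """Fixed-step Euler solver for dZ_t = v(Z_t, t) dt on [0, 1] with step size 1/n_steps."""
    z = np.array(z0, dtype=float)
    dt = 1.0 / n_steps
    for i in range(n_steps):
        z = z + dt * v(z, i * dt)  # t = i * dt is the left endpoint of the step
    return z


def straight(z, t):
    """Constant velocity: every trajectory is a straight line."""
    return np.array([3.0, -1.0])


def curved(z, t):
    """Rotates points a quarter turn about the origin as t goes from 0 to 1."""
    return (np.pi / 2) * np.stack([-z[..., 1], z[..., 0]], axis=-1)


z0 = np.array([[1.0, 0.0]])
one_step_straight = euler_sample(straight, z0, 1)    # (4, -1): the exact endpoint
one_step_curved = euler_sample(curved, z0, 1)        # (1, pi/2): far from the exact endpoint (0, 1)
many_steps_curved = euler_sample(curved, z0, 1000)   # close to (0, 1)
```

Reflow aims to move the learned flow from the second situation toward the first, so that small $N$ suffices.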

Models

Architecture:

- CIFAR-10 / Transfer: Uses the DDPM++ U-Net architecture (based on Song et al., 2020b).

- High-Res Generation: Uses the NCSN++ architecture.

Optimization:

- Optimizer: Adam (CIFAR-10) or AdamW (Transfer/Adaptation).

- Hyperparameters:

  - LR: $2 \times 10^{-4}$ (CIFAR-10); grid search for transfer.

  - EMA: 0.999999 (CIFAR-10), 0.9999 (Transfer).

  - Batch Size: 4 (Transfer).
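The EMA values above are decay rates $\beta$ for an exponential moving average of the network weights. As a rough rule of thumb, the average is dominated by the last $1/(1-\beta)$ updates. That is about $10^6$ steps for 0.999999 and $10^4$ for 0.9999. The update below is a generic sketch, not the authors' code. The function names and the dict-of-arrays parameter format are assumptions.

```python
import numpy as np


def ema_update(ema_params, params, decay):
    """One EMA step per parameter array: ema <- decay * ema + (1 - decay) * params."""
    return {name: decay * ema_params[name] + (1.0 - decay) * params[name]
            for name in ema_params}


def effective_horizon(decay):
    """Approximate number of recent updates that dominate the moving average."""
    return 1.0 / (1.0 - decay)
```

With a very high decay such as 0.999999, the EMA weights change extremely slowly, so they are only useful after a long training run.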

Evaluation

| Metric | Value (CIFAR-10, N=1) | Baseline (Best 1-step) | Notes |
|---|---|---|---|
| FID | 4.85 (2-Rectified) | 8.91 (TDPM) | Lower is better |
| Recall | 0.51 (3-Rectified) | 0.49 (StyleGAN2+ADA) | Higher is better |

Hardware

Hardware specifics (e.g., GPU models) are not explicitly detailed in the text, but the architectures (NCSN++, DDPM++) typically require high-end GPU clusters (e.g., V100/A100s) for training on high-resolution datasets.

Citation

@inproceedings{liuFlowStraightFast2023,
  title = {Flow {{Straight}} and {{Fast}}: {{Learning}} to {{Generate}} and {{Transfer Data}} with {{Rectified Flow}}},
  booktitle = {International Conference on Learning Representations},
  author = {Liu, Xingchao and Gong, Chengyue and Liu, Qiang},
  year = 2023,
  url = {https://openreview.net/forum?id=XVjTT1nw5z}
}