Paper Information

Citation: Burda, Y., Grosse, R., & Salakhutdinov, R. (2016). Importance Weighted Autoencoders. International Conference on Learning Representations (ICLR) 2016. https://arxiv.org/abs/1509.00519

Publication: ICLR 2016

What kind of paper is this?

This paper introduces the Importance Weighted Autoencoder (IWAE), a generative model that shares the same architecture as the Variational Autoencoder (VAE) but is trained with a different objective function. The key innovation is using importance weighting to derive a log-likelihood lower bound that is at least as tight as the standard VAE objective and tightens as more samples are used.

What is the motivation?

The standard VAE has several limitations that motivated this work:

- Strong assumptions: VAEs typically assume the posterior distribution is simple (e.g., approximately factorial) and that its parameters can be easily approximated from observations

- Simplified representations: The VAE objective can force models to learn overly simplified representations that don’t utilize the network’s full modeling capacity

- Harsh penalization: The VAE objective harshly penalizes approximate posterior samples that are poor explanations for the data, which can be overly restrictive

- Inactive units (posterior collapse): VAEs tend to learn latent spaces with effective dimensions far below their capacity, a phenomenon later termed posterior collapse. The authors wanted to investigate whether a new objective could address this issue

What is the novelty here?

The core novelty is the IWAE objective function, denoted as $\mathcal{L}_{k}$.

VAE ($\mathcal{L}_{1}$ Bound): The standard VAE maximizes $\mathcal{L}(x)=\mathbb{E}_{q(h|x)}[\log\frac{p(x,h)}{q(h|x)}]$. This is equivalent to the new bound when $k=1$.

IWAE ($\mathcal{L}_{k}$ Bound): The IWAE maximizes a tighter bound that uses $k$ samples drawn from the recognition model $q(h|x)$:

$$\mathcal{L}_{k}(x)=\mathbb{E}_{h_{1},\ldots,h_{k}\sim q(h|x)}\left[\log\frac{1}{k}\sum_{i=1}^{k}\frac{p(x,h_{i})}{q(h_{i}|x)}\right]$$

Tighter Bound: The authors prove that this bound is always tighter than or equal to the VAE bound ($\mathcal{L}_{k+1} \geq \mathcal{L}_{k}$) and that as $k$ approaches infinity, $\mathcal{L}_{k}$ approaches the true log-likelihood $\log p(x)$.
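The ordering of the bounds can be checked numerically on a toy model where $\log p(x)$ is known in closed form. The sketch below is not from the paper: it assumes a hypothetical one-dimensional model with $p(h)=\mathcal{N}(0,1)$ and $p(x|h)=\mathcal{N}(h,1)$, so that $p(x)=\mathcal{N}(0,2)$, and a deliberately mismatched recognition model $q(h|x)=\mathcal{N}(x/2,1)$ (the true posterior has variance $1/2$). All function names are illustrative.

```python
import math
import random


def log_normal_pdf(value, mean, var):
    """Log density of a univariate Gaussian N(mean, var) at `value`."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (value - mean) ** 2 / var)


def log_mean_exp(values):
    """Numerically stable log of the mean of exp(values)."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values) / len(values))


def estimate_bound(x, k, num_trials, rng):
    """Monte Carlo estimate of L_k(x) for the toy model (an assumption, not the paper's setup)."""
    q_mean, q_var = x / 2.0, 1.0  # too wide on purpose; the true posterior is N(x/2, 1/2)
    total = 0.0
    for _ in range(num_trials):
        log_weights = []
        for _ in range(k):
            h = rng.gauss(q_mean, math.sqrt(q_var))
            log_joint = log_normal_pdf(h, 0.0, 1.0) + log_normal_pdf(x, h, 1.0)
            log_weights.append(log_joint - log_normal_pdf(h, q_mean, q_var))
        total += log_mean_exp(log_weights)  # log of the averaged importance weights
    return total / num_trials


rng = random.Random(0)
x = 1.0
log_px = log_normal_pdf(x, 0.0, 2.0)  # exact marginal log-likelihood: x ~ N(0, 2)
bounds = {k: estimate_bound(x, k, 4000, rng) for k in (1, 5, 50)}

for k, value in bounds.items():
    print(f"L_{k} ~ {value:.4f}   (log p(x) = {log_px:.4f})")
```

Under these assumptions the estimates should increase with $k$ and approach $\log p(x)$, with the $k=1$ value sitting below it by roughly the KL divergence between $q(h|x)$ and the true posterior.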

Increased Flexibility: Using multiple samples gives the IWAE additional flexibility to learn generative models whose posterior distributions are complex and don’t fit the VAE’s simplifying assumptions.

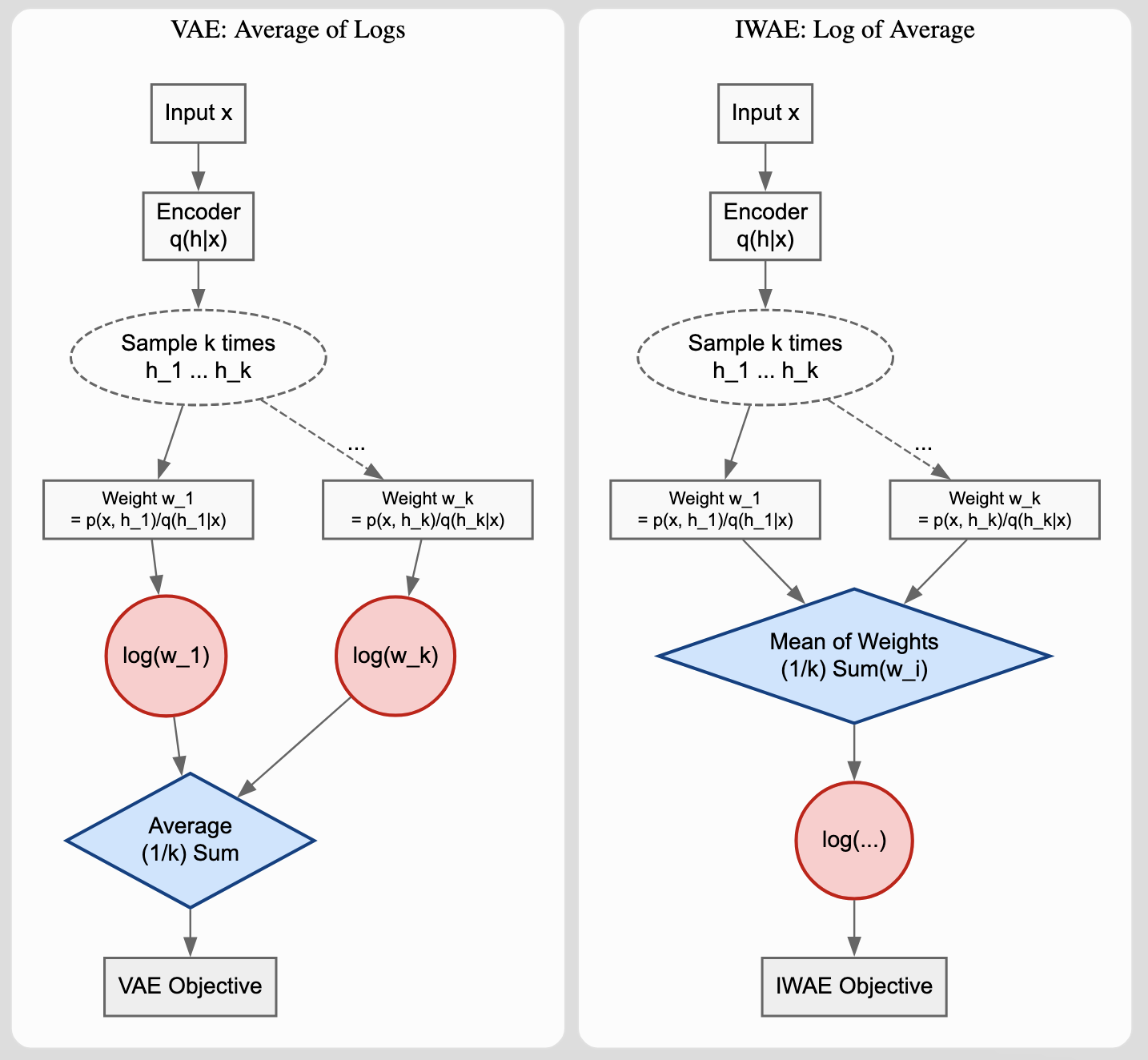

Key Concept: Averaging Inside vs. Outside the Log

A crucial distinction exists between how VAE and IWAE utilize $k$ samples. Understanding this difference explains why increasing $k$ in IWAE actually improves the bound, while in VAE it merely reduces variance.

VAE (Average of Logs):

For a VAE, the average of $k$ sample log-weights is an unbiased estimate of the ELBO, so its expectation is the same for every $k$:

$$\mathbb{E}\left[ \frac{1}{k} \sum_{i=1}^k \log w_i \right] = \text{ELBO}$$

where $w_i = p(x, h_i) / q(h_i | x)$. Increasing $k$ here merely reduces the variance of the gradient estimator. The model still targets the same ELBO bound, so performance gains saturate quickly.

IWAE (Log of Average):

IWAE performs the averaging inside the logarithm:

$$\mathbb{E}\left[ \log \left( \frac{1}{k} \sum_{i=1}^k w_i \right) \right] = \mathcal{L}_k$$

By Jensen’s Inequality (since $\log$ is concave, $\log(\mathbb{E}[X]) \geq \mathbb{E}[\log(X)]$), this bound is mathematically guaranteed to be at least as tight as the VAE bound. Each increase in $k$ defines a new lower bound on the log-likelihood that is at least as tight as the previous one.
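The difference between the two averaging orders is easy to see on a single set of samples. The following sketch uses made-up log-weights (hypothetical values, chosen so that one sample explains the data very poorly) and compares the average of logs with the log of the average; the helper names are illustrative.

```python
import math


def average_of_logs(log_weights):
    """VAE-style estimate: mean of log w_i."""
    return sum(log_weights) / len(log_weights)


def log_of_average(log_weights):
    """IWAE-style estimate: log of mean w_i, computed stably via the log-sum-exp trick."""
    peak = max(log_weights)
    mean_scaled = sum(math.exp(lw - peak) for lw in log_weights) / len(log_weights)
    return peak + math.log(mean_scaled)


# Hypothetical log w_i values; the last one is a "bad" sample.
log_weights = [-90.0, -95.0, -140.0]

vae_style = average_of_logs(log_weights)   # about -108.33, dragged down by the bad sample
iwae_style = log_of_average(log_weights)   # about -91.09, dominated by the best sample

print(vae_style, iwae_style)
```

For any set of positive weights the second quantity is at least as large as the first, which is the per-sample form of the Jensen argument.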

Why This Matters for Gradients:

In IWAE, the gradient weights are normalized importance weights $\tilde{w}_i = w_i / \sum_j w_j$. This means “bad” samples (those with low $w_i$) contribute very little to the gradient update since they essentially vanish from the weighted sum. In contrast, VAE uses unweighted samples, so a single sample with extremely low probability produces a massive negative log value that can dominate the loss and harshly penalize the model. IWAE’s formulation allows the model to focus learning on the samples that actually explain the data well.
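In the paper's gradient estimator, each sample's gradient of $\log w_i$ is multiplied by its normalized weight $\tilde{w}_i$. A minimal sketch of how those weights can be computed from log-weights is a softmax; the values below are hypothetical and the function name is illustrative.

```python
import math


def normalized_importance_weights(log_weights):
    """Return w_i / sum_j w_j, computed from log w_i without overflow or underflow."""
    peak = max(log_weights)
    unnormalized = [math.exp(lw - peak) for lw in log_weights]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Hypothetical log w_i values; the last sample explains the data very poorly.
log_weights = [-90.0, -95.0, -140.0]
weights = normalized_importance_weights(log_weights)

for lw, w in zip(log_weights, weights):
    print(f"log w = {lw:7.1f}  ->  normalized weight = {w:.3e}")
```

Here the poorly fitting sample receives a vanishingly small weight, so it barely affects the update, whereas in an unweighted average of logs it would contribute its full $-140$.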

What experiments were performed?

The authors compared VAE and IWAE on density estimation tasks:

Datasets:

- MNIST: $28 \times 28$ binarized handwritten digits (60,000 training / 10,000 test)

- Omniglot: $28 \times 28$ binarized handwritten characters from various alphabets (24,345 training / 8,070 test)

- Binarization: Dynamic sampling where binary values are sampled with expectations equal to the real pixel intensities (following Salakhutdinov & Murray, 2008)
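Dynamic binarization as described above can be sketched as an independent Bernoulli draw per pixel. This is an illustration only: it assumes intensities are already scaled to $[0, 1]$ and that a fresh sample is drawn each time an image is presented; the function name and example pixels are hypothetical.

```python
import random


def dynamically_binarize(intensities, rng):
    """Sample one binary image: each pixel is 1 with probability equal to its intensity."""
    return [1 if rng.random() < p else 0 for p in intensities]


rng = random.Random(0)
pixels = [0.0, 0.25, 0.5, 1.0]  # hypothetical grayscale intensities in [0, 1]

# Two presentations of the same image generally give different binary samples.
first_pass = dynamically_binarize(pixels, rng)
second_pass = dynamically_binarize(pixels, rng)
print(first_pass, second_pass)
```

Pixels with intensity 0 or 1 are deterministic, while intermediate values vary between passes, which acts as a mild form of data augmentation compared with a fixed binarization.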

Architectures: Two main network architectures were tested:

- One stochastic layer (50 units) with two deterministic layers (200 units each)

- Two stochastic layers (100 and 50 units) with deterministic layers in between

Architecture specifics:

- Activations: `tanh` for deterministic layers; `exp` applied to variance predictions to ensure positivity

- Distributions: Gaussian latent layers with diagonal covariance; Bernoulli observation layer

- Initialization: Glorot & Bengio (2010) heuristic

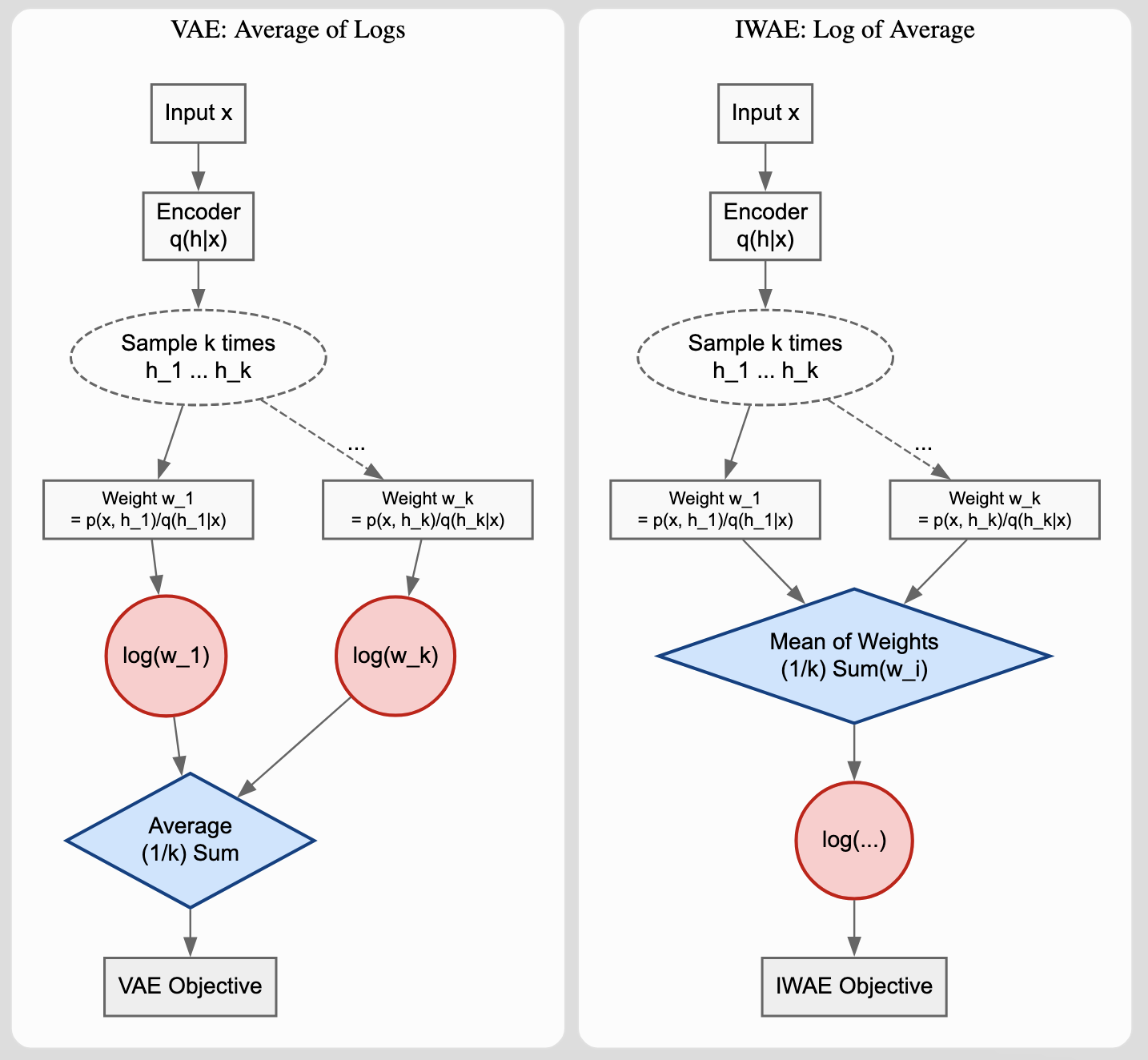

Training: VAE and IWAE models were trained with $k \in \{1, 5, 50\}$ samples.

Optimization details:

- Optimizer: Adam ($\beta_1=0.9$, $\beta_2=0.999$, $\epsilon=10^{-4}$)

- Batch Size: 20

- Learning Rate Schedule: Annealed rate of $0.001 \cdot 10^{-i/7}$ for $3^i$ epochs (where $i = 0, \ldots, 7$), totaling 3,280 passes over the data

- Hardware: GPU-based implementation using mini-batch replication to parallelize the $k$ samples
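The learning-rate schedule in the list above can be tabulated in a few lines, which also confirms the total of 3,280 epochs. The function name and its defaults are illustrative, taken from the values stated in the schedule.

```python
def learning_rate_schedule(base_rate=0.001, num_stages=8):
    """Return (learning_rate, epochs) pairs for stages i = 0 .. num_stages - 1."""
    return [(base_rate * 10 ** (-i / 7), 3 ** i) for i in range(num_stages)]


schedule = learning_rate_schedule()
total_epochs = sum(epochs for _, epochs in schedule)

for stage, (rate, epochs) in enumerate(schedule):
    print(f"stage {stage}: lr = {rate:.6f} for {epochs} epochs")
print("total epochs:", total_epochs)
```

The rate decays by a factor of ten over the whole run (from $10^{-3}$ to $10^{-4}$), and about two thirds of all epochs (2,187 of 3,280) are spent in the final stage at the lowest rate.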

Metrics:

- Test Log-Likelihood: Primary measure of generative performance, estimated as the mean of $\mathcal{L}_{5000}$ (5000 samples) on the test set

- Active Units: To quantify latent space richness, the authors measured “active” latent dimensions. A unit $u$ was defined as active if its activity statistic $A_{u}=\text{Cov}_{x}(\mathbb{E}_{u\sim q(u|x)}[u])$ exceeded $10^{-2}$
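The active-unit count can be sketched as follows: for each latent unit, take the posterior mean for every data point and measure how much that mean varies across the dataset. The sketch assumes the posterior means have already been computed (for a Gaussian recognition model they are the predicted means), uses the population variance, and works on a tiny made-up matrix; none of the names or numbers come from the paper.

```python
from statistics import pvariance


def count_active_units(posterior_means, threshold=1e-2):
    """posterior_means[n][u] holds E_q[u | x_n]; returns (activity per unit, number active)."""
    num_units = len(posterior_means[0])
    activity = [
        pvariance([row[u] for row in posterior_means])  # variance across data points
        for u in range(num_units)
    ]
    return activity, sum(a > threshold for a in activity)


# Hypothetical posterior means for 4 data points and 2 latent units.
# Unit 0 responds to the input; unit 1 stays near its prior mean of 0.
means = [
    [0.9, 0.01],
    [-1.1, 0.00],
    [0.3, -0.01],
    [-0.4, 0.02],
]
activity, num_active = count_active_units(means)
print(activity, num_active)
```

A unit whose posterior mean barely moves with the input carries almost no information about $x$, which is why it is counted as inactive.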

What outcomes/conclusions?

Better Performance: IWAE achieved significantly higher log-likelihoods than VAEs. IWAE performance improved with increasing $k$, while VAE performance benefited only slightly from using more samples ($k>1$).

Richer Representations: In all experiments with $k>1$, IWAE learned more active latent dimensions than VAE, suggesting richer latent representations.

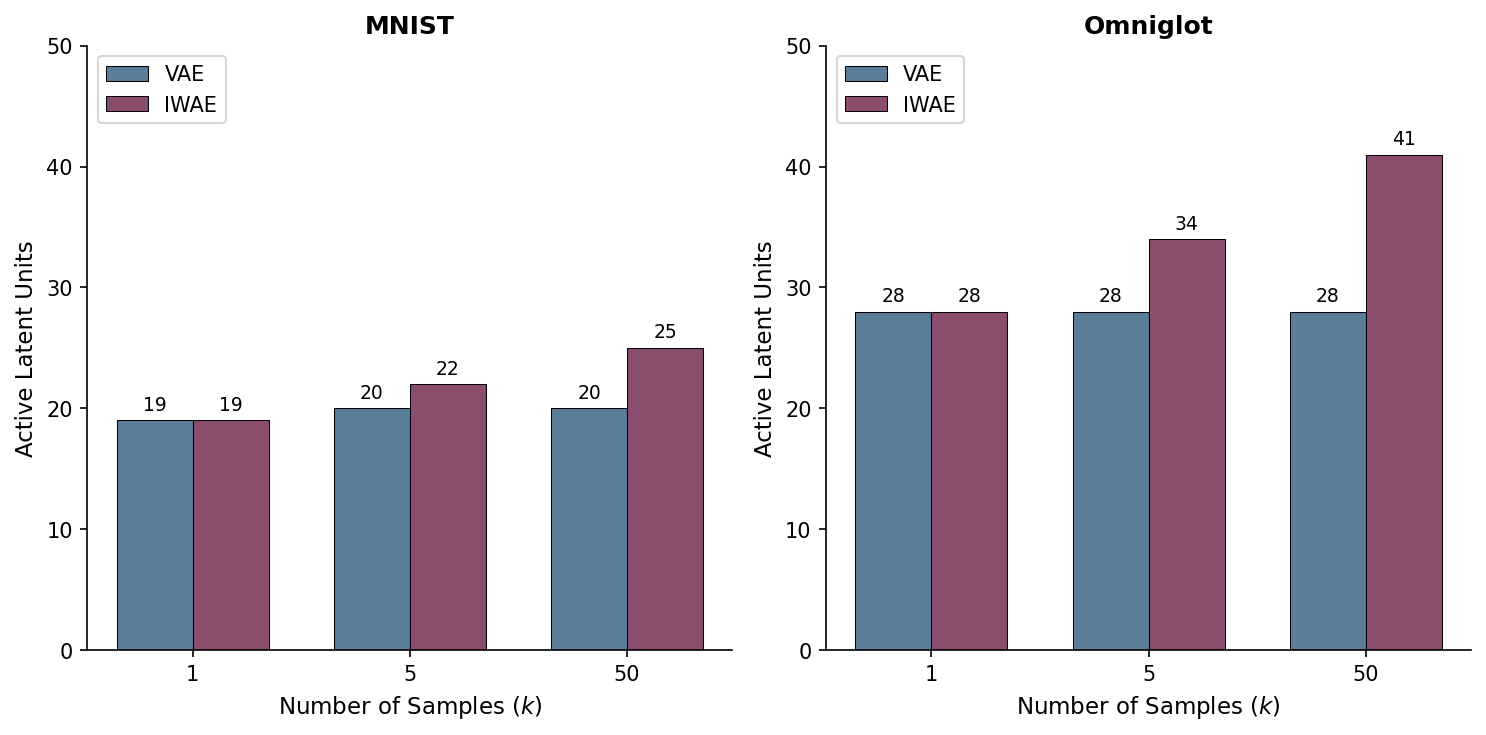

Objective Drives Representation: The authors found that latent dimension inactivation is driven by the objective function. They demonstrated this through an “objective swap” experiment, which provides causal evidence that the objective function itself determines latent utilization:

- VAE → IWAE: A converged VAE model, when fine-tuned with the IWAE objective ($k=50$), gained 3 active units (19 → 22) and improved test NLL from 86.76 to 84.88

- IWAE → VAE: Conversely, a converged IWAE model fine-tuned with the VAE objective lost 2 active units (25 → 23) and worsened test NLL from 84.78 to 86.02

Because the architecture and starting weights are held fixed in each swap and the effect appears in both directions, these results suggest that inactivation is driven by the objective function, not by optimization dynamics or initialization.

Conclusion: IWAEs learn richer latent representations and achieve better generative performance than VAEs with equivalent architectures and training time.

Reproducibility Details

Variance Control: A common concern with importance sampling is high variance. The authors prove that the Mean Absolute Deviation of their estimator is bounded by $2 + 2\delta$, where $\delta$ is the gap between the bound and true log-likelihood. As the bound tightens, variance remains controlled.

Active Unit Threshold: The $10^{-2}$ threshold for classifying units as “active” is justified by a bimodal distribution of the log activity statistic, showing clear separation between active and inactive units.

Fixed Binarization: Results on a fixed binarization of MNIST (Larochelle, 2011) confirm that IWAE outperforms VAE regardless of preprocessing method, though with notably more overfitting compared to dynamic sampling.

Related Notes

- Auto-Encoding Variational Bayes (VAE) - The foundational VAE paper that IWAE builds upon