InChI and Tautomerism: Toward a Comprehensive Treatment

Dhaked et al.'s comprehensive analysis of tautomerism in chemoinformatics, introducing 86 new tautomeric rules and their …

Heller et al. (2013) explain how IUPAC's InChI became the global standard for representing chemical structures, its …

The InChI v1.07 release modernizes chemical identifiers for FAIR data principles, fixes thousands of bugs, and proposes …

Clark et al.'s Mixfile format and MInChI specification provide the first standardized, machine-readable way to represent …

Lynch et al. propose NInChI (Nanomaterials InChI), a standardized notation system for representing complex …

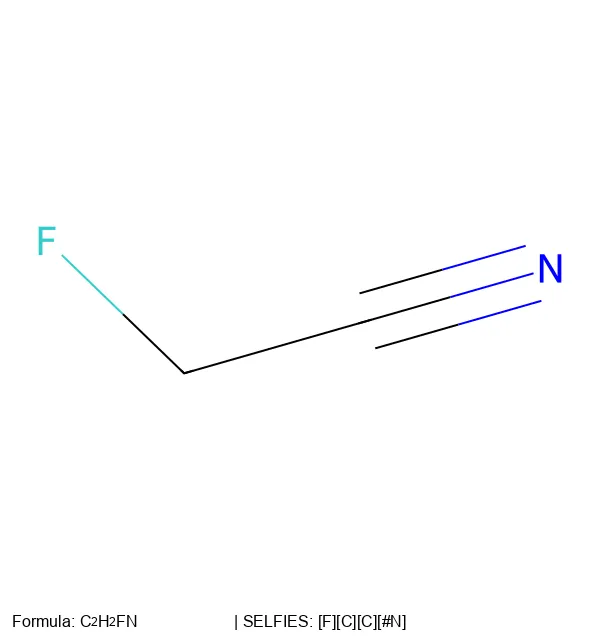

An overview of the major updates to the SELFIES Python library, including improved performance, expanded chemical …

RInChI extends the InChI standard to create unique, machine-readable identifiers for chemical reactions, enabling …

A summary of the foundational 2020 paper that introduced SELFIES, the 100% robust molecular string representation …

A summary of David Weininger's foundational 1988 paper that introduced SMILES notation, the string-based molecular …

SELFIES is a 100% robust string-based representation for chemical molecules, designed for machine learning applications …

SMILES is a specification for describing the structure of chemical molecules using short ASCII strings.

Skinnider's 2024 Nature Machine Intelligence paper demonstrates that the ability to generate invalid SMILES is actually …