Paper Information

Citation: Kim, J. H., & Choi, J. (2025). OCSAug: diffusion-based optical chemical structure data augmentation for improved hand-drawn chemical structure image recognition. The Journal of Supercomputing, 81, 926.

Publication: The Journal of Supercomputing 2025

What kind of paper is this?

This is a Method paper according to the taxonomy. It proposes a novel data augmentation pipeline (OCSAug) that integrates Denoising Diffusion Probabilistic Models (DDPM) and the RePaint algorithm to address the data scarcity problem in hand-drawn optical chemical structure recognition (OCSR). The contribution is validated through systematic benchmarking against existing augmentation techniques (RDKit, Randepict) and ablation studies on mask design.

What is the motivation?

A vast amount of molecular structure data exists in analog formats, such as hand-drawn diagrams in research notes or older literature. While OCSR models perform well on digitally rendered images, they struggle significantly with hand-drawn images due to noise, varying handwriting styles, and distortions. Current datasets for hand-drawn images (e.g., DECIMER) are too small to train robust models effectively, and existing augmentation tools (RDKit, Randepict) fail to generate sufficiently realistic hand-drawn variations.

What is the novelty here?

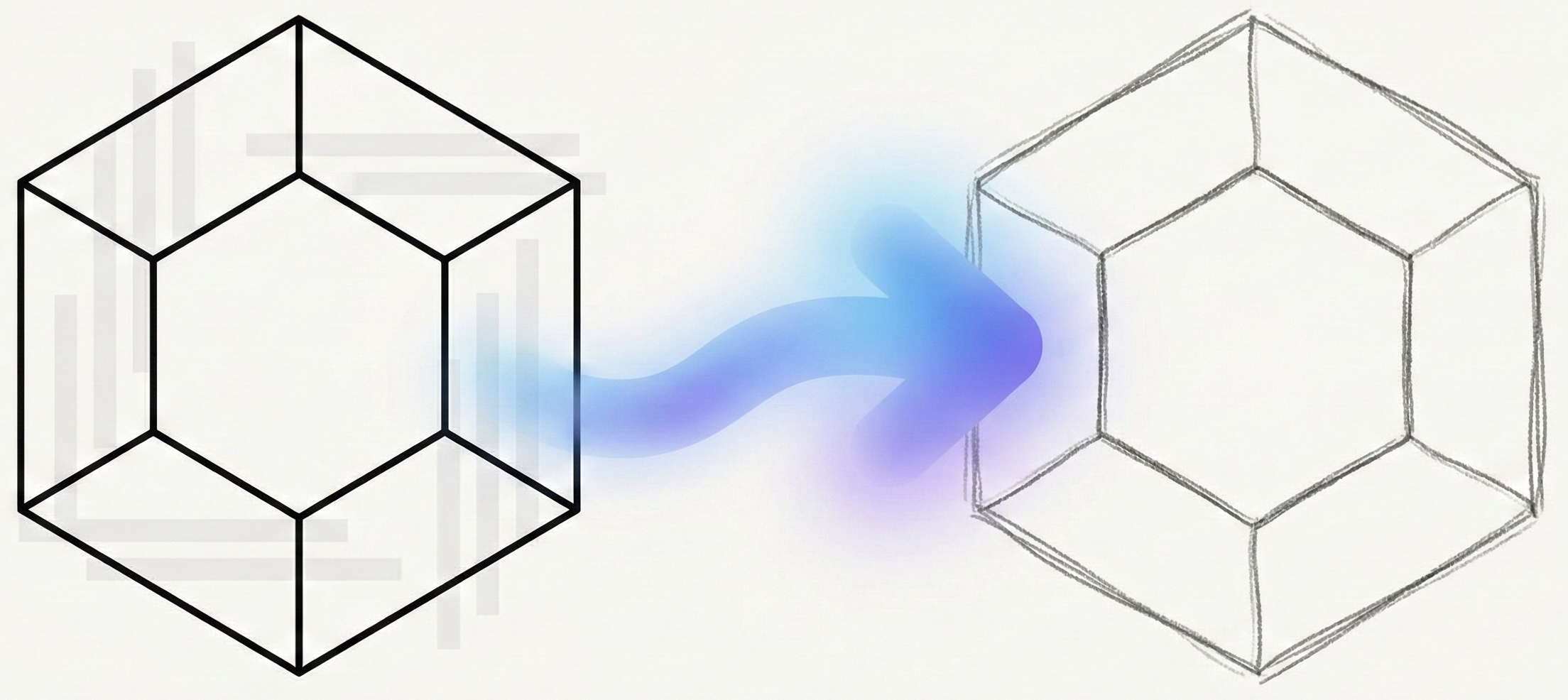

The core novelty is OCSAug, a three-phase pipeline that uses generative AI to synthesize training data:

- DDPM + RePaint: It utilizes a DDPM to learn the distribution of hand-drawn images and the RePaint algorithm for inpainting.

- Structural Masking: It introduces vertical and horizontal stripe pattern masks. These masks selectively obscure parts of atoms or bonds, forcing the diffusion model to reconstruct them with irregular “hand-drawn” styles while preserving the underlying chemical topology.

- Label Transfer: Because the chemical structure is preserved during inpainting, the SMILES label from the original image is directly transferred to the augmented image, bypassing the need for re-annotation.
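The way masking preserves topology can be illustrated with the blending step at the heart of RePaint-style inpainting. The following is a minimal sketch, not the authors' code: the function name `repaint_blend`, its arguments, and the mask convention (1 = keep, 0 = repaint) are assumptions made for illustration. Kept pixels are re-noised from the original image, repainted pixels come from the diffusion model's reverse step, and the two are merged by the mask.

```python
import numpy as np

def repaint_blend(x0, x_generated, keep_mask, alpha_bar_prev, rng):
    """Blend known and generated pixels for one reverse step (illustrative sketch).

    x0             : original image, any array shape
    x_generated    : the model's sample for the previous timestep (hypothetical input)
    keep_mask      : 1.0 where the original is kept, 0.0 where the model repaints
    alpha_bar_prev : cumulative noise-schedule product at the previous timestep
    """
    noise = rng.standard_normal(x0.shape)
    # Known region: forward-diffuse the original image to the previous noise level.
    x_known = np.sqrt(alpha_bar_prev) * x0 + np.sqrt(1.0 - alpha_bar_prev) * noise
    # Merge: original content outside the stripes, model output inside them.
    return keep_mask * x_known + (1.0 - keep_mask) * x_generated
```

At the final step (`alpha_bar_prev = 1.0`) the kept pixels equal the original image exactly, so only the striped regions differ. That is why the original SMILES string can be attached to the augmented image unchanged.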

What experiments were performed?

The authors evaluated OCSAug using the DECIMER dataset, specifically a “drug-likeness” subset filtered by Lipinski’s and Veber’s rules.

- Baselines: The method was compared against RDKit (digital generation) and Randepict (rule-based augmentation).

- Models: Four state-of-the-art OCSR models were fine-tuned: MolScribe, Image2SMILES (I2S), MolNexTR, and MPOCSR.

- Metrics:

  - Tanimoto Similarity: To measure prediction accuracy against ground truth.

  - Fréchet Inception Distance (FID): To measure the distributional similarity between generated and real hand-drawn images.

  - RMSE: To quantify pixel-level structural preservation across different mask thicknesses.
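Two of these metrics are simple enough to sketch directly. The code below is illustrative only: it assumes Tanimoto similarity is computed over sets of "on" fingerprint bits (the fingerprint type is not stated in this summary) and that RMSE is taken over raw pixel values. The function names and the empty-set convention are assumptions.

```python
import numpy as np

def tanimoto(bits_a, bits_b):
    """Tanimoto (Jaccard) similarity between two sets of fingerprint bit indices."""
    if not bits_a and not bits_b:
        return 1.0  # assumed convention: two empty fingerprints count as identical
    return len(bits_a & bits_b) / len(bits_a | bits_b)

def rmse(img_a, img_b):
    """Root-mean-square error between two images of identical shape."""
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    return float(np.sqrt(np.mean((a - b) ** 2)))
```

For example, fingerprints `{1, 2, 3}` and `{2, 3, 4}` share two of four distinct bits, giving a Tanimoto similarity of 0.5. FID is omitted here because it requires features from a pretrained Inception network.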

What outcomes/conclusions?

- Performance Boost: Fine-tuning on OCSAug data raised recognition accuracy (Tanimoto similarity) to 1.918 to 3.820 times that of the non-augmented baselines, significantly outperforming RDKit and Randepict.

- Data Quality: OCSAug achieved the lowest FID score (0.471) compared to Randepict (4.054) and RDKit (10.581), indicating its generated images are much closer to the real hand-drawn distribution.

- Generalization: The method showed improved generalization on a newly collected real-world dataset of 463 images from 6 volunteers.

- Limitations: The generation process is slow (3 weeks for 10k images on a single GPU) and the fixed stripe masks may struggle with highly complex, non-drug-like geometries.

Reproducibility Details

Data

- Source: DECIMER dataset (hand-drawn images).

- Filtering: A “drug-likeness” filter was applied (Lipinski’s rule of 5 + Veber’s rules) along with an atom filter (C, H, O, S, F, Cl, Br, N, P only).

- Final Size: 3,194 samples, split into:

  - Training: 2,604 samples.

  - Validation: 290 samples.

  - Test: 300 samples.

- Resolution: All images resized to $256 \times 256$ pixels.
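The filtering step can be sketched as follows. This is a hedged illustration rather than the authors' script: it assumes the commonly cited thresholds for Lipinski's rule of five and Veber's rules, assumes no violations are tolerated, and works on precomputed descriptors (in practice these would come from a cheminformatics toolkit). The `MolDescriptors` class and all function names are hypothetical.

```python
from dataclasses import dataclass

# Atom whitelist taken from the filtering description above.
ALLOWED_ATOMS = frozenset({"C", "H", "O", "S", "F", "Cl", "Br", "N", "P"})

@dataclass(frozen=True)
class MolDescriptors:
    """Precomputed descriptors for one molecule (hypothetical container)."""
    mol_weight: float      # g/mol
    logp: float            # octanol-water partition coefficient
    h_donors: int
    h_acceptors: int
    rotatable_bonds: int
    tpsa: float            # topological polar surface area, in square angstroms
    elements: frozenset    # element symbols present in the molecule

def passes_lipinski(d):
    # Commonly cited rule-of-five thresholds (assumed; strict, no violations allowed).
    return (d.mol_weight <= 500 and d.logp <= 5
            and d.h_donors <= 5 and d.h_acceptors <= 10)

def passes_veber(d):
    # Commonly cited Veber thresholds (assumed).
    return d.rotatable_bonds <= 10 and d.tpsa <= 140

def is_drug_like(d):
    return passes_lipinski(d) and passes_veber(d) and d.elements <= ALLOWED_ATOMS
```

A molecule containing an element outside the whitelist (silicon, for instance) is rejected even if every numeric rule passes.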

Algorithms

- Framework: DDPM implemented using guided-diffusion.

- RePaint Settings:

  - Total time steps: 250.

  - Jump length: 10.

  - Resampling counts: 10.

- Masking Strategy:

  - Vertical Stripes: Obscure atom symbols to vary handwriting style.

  - Horizontal Stripes: Obscure bonds to vary length/thickness/alignment.

  - Optimal Thickness: A stripe thickness of 4 pixels was found to be optimal for balancing diversity and structural preservation.
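The settings above can be made concrete with a short sketch. It is written under stated assumptions and is not the authors' implementation: the stripe spacing (`period`) is not given in this summary and is a hypothetical parameter, the mask convention (1 = keep, 0 = repaint) is assumed, and "resampling counts" is assumed to mean the number of times each jump interval is traversed, following the jump-based resampling schedule described for RePaint.

```python
import numpy as np

def stripe_keep_mask(size=256, thickness=4, period=16, orientation="vertical"):
    """Return a float mask: 1.0 = keep the original pixel, 0.0 = repaint it.

    `period` (distance between stripe starts) is a hypothetical parameter.
    """
    idx = np.arange(size)
    repaint_1d = (idx % period) < thickness          # True inside a stripe
    keep_1d = (~repaint_1d).astype(np.float32)
    if orientation == "vertical":                    # stripes run top to bottom
        return np.tile(keep_1d[None, :], (size, 1))
    if orientation == "horizontal":                  # stripes run left to right
        return np.tile(keep_1d[:, None], (1, size))
    raise ValueError("orientation must be 'vertical' or 'horizontal'")

def repaint_schedule(total_steps=250, jump_length=10, resamplings=10):
    """Timestep sequence with jump-back resampling; ends with a -1 marker."""
    # Each jump point may send the sampler back up (resamplings - 1) times.
    jumps_left = {t: resamplings - 1
                  for t in range(0, total_steps - jump_length, jump_length)}
    t = total_steps
    schedule = []
    while t >= 1:
        t -= 1                                       # one denoising step
        schedule.append(t)
        if jumps_left.get(t, 0) > 0:
            jumps_left[t] -= 1
            for _ in range(jump_length):             # re-noise back up
                t += 1
                schedule.append(t)
    schedule.append(-1)
    return schedule
```

Under these assumptions the schedule contains 4,570 steps before the terminal marker instead of 250, which helps explain why generation is slow. With `period=16`, a 4-pixel stripe repaints a quarter of the image.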

Models

The OCSR models were pretrained on PubChem (digital images) and then fine-tuned on the OCSAug dataset.

- MolScribe: Swin Transformer encoder, Transformer decoder. Fine-tuned (all layers) for 30 epochs, batch size 16-128, LR 2e-5.

- I2S: Inception V3 encoder (frozen), FC/Decoder fine-tuned. 25 epochs, batch size 64, LR 1e-5.

- MolNexTR: Dual-stream encoder (Swin + CNN). Fine-tuned (all layers) for 30 epochs, batch size 16-64, LR 2e-5.

- MPOCSR: MPVIT backbone. Fine-tuned (all layers) for 25 epochs, batch size 16-32, LR 4e-5.

Evaluation

- Metric: Improvement Ratio (IR) of Tanimoto Similarity (TS), calculated as $IR = TS_{\text{finetuned}} / TS_{\text{non-finetuned}}$.

- Validation: Cross-validation on the split DECIMER dataset.
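As a small worked example of the improvement ratio, the sketch below applies the formula to made-up values. The numbers are hypothetical and are not results from the paper, and the function name is an assumption.

```python
def improvement_ratio(ts_finetuned, ts_non_finetuned):
    """IR = TS_finetuned / TS_non-finetuned, for Tanimoto similarities in (0, 1]."""
    if ts_non_finetuned <= 0:
        raise ValueError("baseline Tanimoto similarity must be positive")
    return ts_finetuned / ts_non_finetuned

# Hypothetical values: a baseline TS of 0.25 rising to 0.75 after fine-tuning.
example_ir = improvement_ratio(0.75, 0.25)
```

An IR above 1 means fine-tuning helped; in this made-up case the fine-tuned model scores three times the baseline similarity.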

Hardware

- GPU: NVIDIA GeForce RTX 4090.

- Training Time: DDPM training took ~6 days.

- Generation Time: Generating 2,600 augmented images took ~70 hours.

Citation

@article{kimOCSAugDiffusionbasedOptical2025,
  title = {OCSAug: Diffusion-Based Optical Chemical Structure Data Augmentation for Improved Hand-Drawn Chemical Structure Image Recognition},
  shorttitle = {OCSAug},
  author = {Kim, Jin Hyuk and Choi, Jonghwan},
  year = 2025,
  month = may,
  journal = {The Journal of Supercomputing},
  volume = {81},
  number = {8},
  pages = {926},
  doi = {10.1007/s11227-025-07406-4}
}