Paper Information

Citation: Krasnov, A., Barnabas, S. J., Boehme, T., Boyer, S. K., & Weber, L. (2024). Comparing software tools for optical chemical structure recognition. Digital Discovery, 3(4), 681-693. https://doi.org/10.1039/D3DD00228D

Publication: Digital Discovery 2024

What kind of paper is this?

This paper is primarily a Resource contribution ($0.7 \Psi_{\text{Resource}}$) with a secondary Method component ($0.3 \Psi_{\text{Method}}$).

It establishes a new, independent benchmark dataset of 2,702 manually selected patent images to evaluate existing Optical Chemical Structure Recognition (OCSR) tools. The authors compare 8 tools on this dataset under identical conditions to determine the current state of the art. The Resource contribution is evidenced by the curated benchmark itself, an explicit evaluation metric (exact connectivity table matching), and the public release of datasets, processing scripts, and evaluation tools on Zenodo.

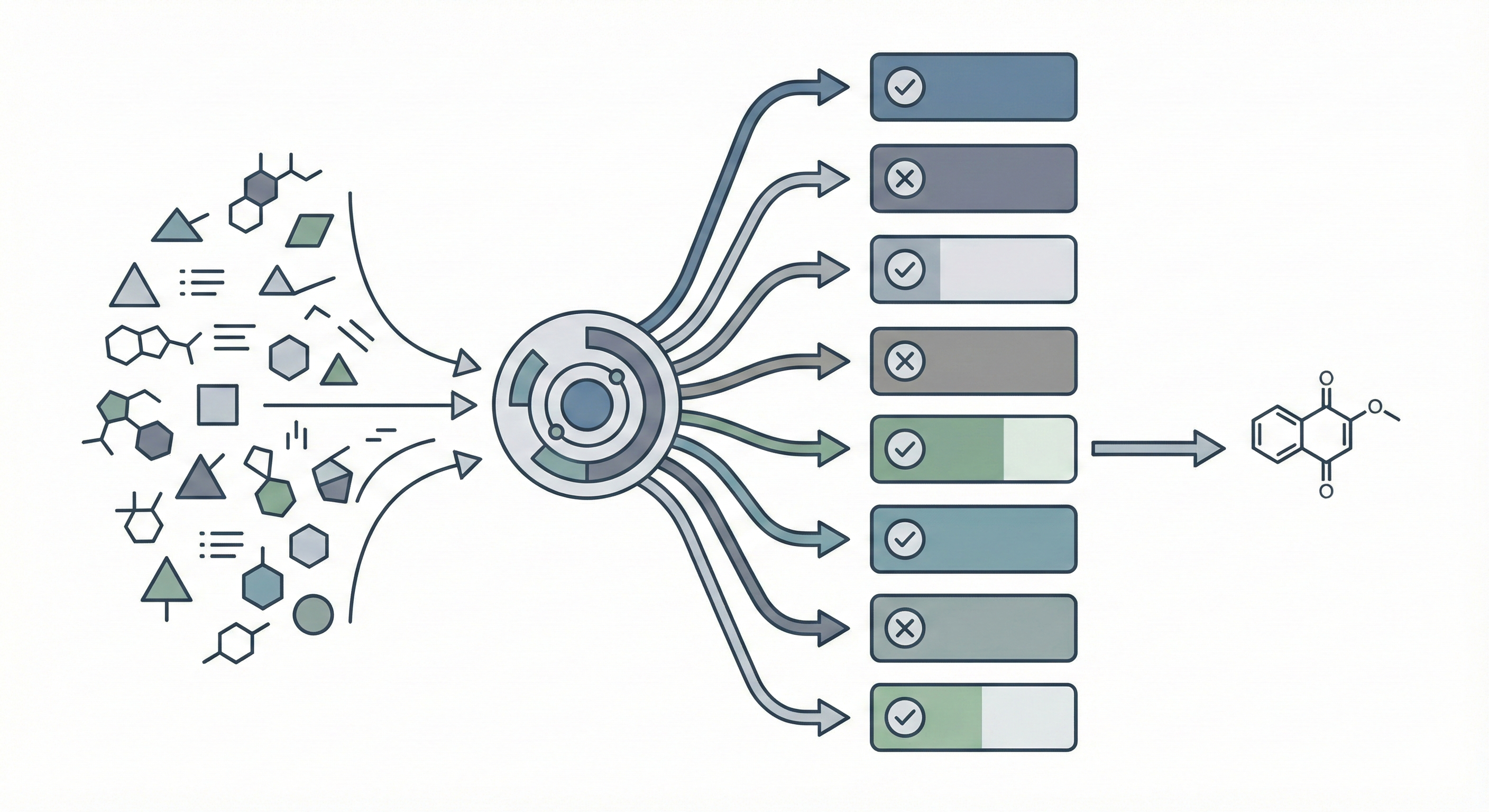

The secondary Method contribution comes through the development of “ChemIC,” a ResNet-50 image classifier designed to categorize images (Single vs. Multiple vs. Reaction) to enable a modular processing pipeline. However, this method serves to support the insights gained from the benchmarking resource.

What is the motivation?

Lack of Standardization: There is no commonly accepted standard image set for measuring OCSR quality; existing tools are often evaluated on synthetic data or limited datasets.

Industrial Relevance: Patents contain diverse and “noisy” image modalities (Markush structures, salts, reactions, hand-drawn styles) that are critical for Freedom to Operate (FTO) and novelty checks in the pharmaceutical industry. These real-world complexities are often missing from existing benchmarks.

Modality Gaps: Different tools excel at different tasks (e.g., single molecules vs. reactions). A monolithic approach often fails on complex patent documents, and there was no systematic understanding of which tools perform best for which image types.

Integration Needs: The authors aimed to identify tools to replace or augment their existing rule-based system (OSRA) within the SciWalker application, requiring a rigorous comparative study.

What is the novelty here?

Independent Benchmark: Creation of a manually curated test set of 2,702 images from real-world patents (WO, EP, US), specifically selected to include “problematic” edge cases like inorganic complexes, peptides, and Markush structures, providing a more realistic evaluation environment than synthetic datasets.

Comprehensive Comparison: Side-by-side evaluation of 8 open-access tools: DECIMER, Reaction DataExtractor, MolScribe, RxnScribe, SwinOCSR, OCMR, MolVec, and OSRA, using identical test conditions and evaluation criteria.

ChemIC Classifier: Implementation of a specialized image classifier (ResNet-50) to distinguish between single molecules, multiple molecules, reactions, and non-chemical images, facilitating a “hybrid” pipeline that routes images to the most appropriate tool.

Strict Evaluation Logic: Utilization of an exact match criterion for connectivity tables (ignoring partial similarity scores like Tanimoto) to reflect rigorous industrial requirements for novelty checking in patent applications.

What experiments were performed?

Tool Selection: Installed and tested 8 tools: DECIMER v2.4.0, Reaction DataExtractor v2.0.0, MolScribe v1.1.1, RxnScribe v1.0, MolVec v0.9.8, OCMR, SwinOCSR, and OSRA v2.1.5.

Dataset Construction:

- Test Set: 2,702 patent images split into three “buckets”: A (Single structure - 1,454 images), B (Multiple structures - 661 images), C (Reactions - 481 images).

- Training Set (for ChemIC): 16,000 images from various sources (Patents, Im2Latex, etc.) split into 12,804 training, 1,604 validation, and 1,604 test images.

Evaluation Protocol:

- Calculated Precision, Recall, and F1 scores based on exact structure matching.

- Manual inspection by four chemists to verify predictions.

- Developed custom tools (`ImageComparator` and `ExcelConstructor`) to facilitate visual comparison and result aggregation.
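The metrics in this protocol can be sketched in a few lines of Python. This is a minimal illustration, not the authors' evaluation script: the `Counts` class and the mapping of outcomes to true positives, false positives, and false negatives are assumptions (in particular, treating "no output for an image" as a false negative is a guess that happens to be consistent with the 100% recall values reported for single structures).

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Counts:
    """Outcome counts for one tool on one bucket (hypothetical structure)."""
    correct: int    # predictions that exactly match the reference (assumed TP)
    incorrect: int  # predictions returned but not matching (assumed FP)
    missing: int    # images with no prediction at all (assumed FN)


def precision_recall_f1(c: Counts) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1, returning 0.0 for empty denominators."""
    predicted = c.correct + c.incorrect
    expected = c.correct + c.missing
    precision = c.correct / predicted if predicted else 0.0
    recall = c.correct / expected if expected else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Illustrative counts only; these are not taken from the paper.
p, r, f1 = precision_recall_f1(Counts(correct=87, incorrect=13, missing=0))
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# precision=0.87 recall=1.00 f1=0.93
```

Under these assumptions, a tool that always returns some structure has perfect recall, so its F1 score is driven entirely by precision.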

Segmentation Test: Applied DECIMER segmentation to multi-structure images to see if splitting them before processing improved results, combining segmentation with MolScribe for final predictions.

What outcomes/conclusions?

Single Molecules: MolScribe achieved the highest performance (Precision: 87%, F1: 93%), followed closely by DECIMER (Precision: 84%, F1: 91%). These transformer-based approaches significantly outperformed rule-based methods on single-structure images.

Reactions: RxnScribe significantly outperformed others (Recall: 99%, F1: 86%), demonstrating the value of specialized architectures for reaction diagrams. General-purpose tools struggled with reaction recognition.

Multiple Structures: All AI-based tools struggled with multi-structure images. OSRA (rule-based) performed best here but still had low precision (58%). Combining DECIMER segmentation with MolScribe improved F1 scores to 90%, suggesting that image preprocessing can significantly enhance performance.

Failures: Current tools fail on polymers, large oligomers, and complex Markush structures. Most tools (except OSRA/MolVec) handle stereochemistry well but fail on dative/coordinate bonds in metal complexes, indicating gaps in training data coverage.

Classifier Utility: The ChemIC model achieved 99.62% accuracy on the test set, validating the feasibility of a modular pipeline where images are routed to the specific tool best suited for that modality. This hybrid approach outperforms any single tool across all image types.
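The routing idea behind such a modular pipeline can be sketched as a small dispatch table. This is an illustrative sketch only: the label names (`"single"`, `"reaction"`, `"non_chemical"`), the `build_router` helper, and the stand-in classifier and handlers are all hypothetical, and the actual ChemIC interface and tool wrappers are not described here.

```python
from typing import Callable, Dict, List

# A handler takes an image path and returns recognized structures as strings.
Handler = Callable[[str], List[str]]


def build_router(
    classify: Callable[[str], str],
    handlers: Dict[str, Handler],
) -> Handler:
    """Return a function that sends each image to the handler for its class."""

    def route(image_path: str) -> List[str]:
        label = classify(image_path)
        handler = handlers.get(label)
        # Images whose class has no handler (e.g. non-chemical) yield nothing.
        return handler(image_path) if handler else []

    return route


# Stand-ins for the classifier and the OCSR tools (hypothetical).
def fake_classifier(path: str) -> str:
    return {"a.png": "single", "b.png": "reaction"}.get(path, "non_chemical")


handlers: Dict[str, Handler] = {
    "single": lambda p: [f"molecule from {p}"],
    "reaction": lambda p: [f"reaction from {p}"],
}

route = build_router(fake_classifier, handlers)
print(route("a.png"))     # ['molecule from a.png']
print(route("logo.png"))  # []
```

Keeping the classifier and the per-class handlers as injected callables means a tool can be swapped for one image type without touching the rest of the pipeline.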

Reproducibility Details

Data

| Purpose | Dataset | Size | Description |
|---|---|---|---|
| Benchmark (Test) | Manual Patent Selection | 2,702 images | Sources: WO, EP, US patents; Bucket A: single structures (1,454); Bucket B: multi-structures (661); Bucket C: reactions (481) |
| ChemIC Training | Aggregated Sources | 16,000 images | Sources: Patents (OntoChem), MolScribe dataset, DECIMER dataset, RxnScribe dataset, Im2Latex-100k; Split: 12,804 train / 1,604 val / 1,604 test |

Algorithms

Scoring Logic:

- Single Molecules: Score = 1 if exact match of connectivity table, 0 otherwise. Stereochemistry (cis/trans, tetrahedral) checked; charge must be correct. Tanimoto similarity explicitly rejected as too lenient.

- Reactions: Considered correct if at least one reactant and one product are correct and capture main features. Stoichiometry and conditions ignored.
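The scoring rules above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' code: structures are assumed to be already reduced to canonical identifier strings (for example canonical SMILES or InChI that encode stereochemistry and charge), the fingerprint bit sets are made up, and the "capture main features" judgment for reactions, which required manual inspection, is not modeled.

```python
from typing import Iterable, Optional, Set


def exact_match_score(predicted: Optional[str], reference: str) -> int:
    """Return 1 only if the canonical identifiers are identical, else 0."""
    return int(predicted is not None and predicted == reference)


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto (Jaccard) similarity of two fingerprint bit sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def reaction_is_correct(
    pred_reactants: Iterable[str],
    pred_products: Iterable[str],
    ref_reactants: Iterable[str],
    ref_products: Iterable[str],
) -> bool:
    """True if at least one reactant and at least one product match exactly."""
    reactant_hit = bool(set(pred_reactants) & set(ref_reactants))
    product_hit = bool(set(pred_products) & set(ref_products))
    return reactant_hit and product_hit


# A one-atom error: the exact-match score is 0 even though the (made-up)
# fingerprints overlap heavily.
fp_ref = {1, 2, 3, 4, 5, 6, 7, 8, 9}
fp_pred = {1, 2, 3, 4, 5, 6, 7, 8, 10}
print(exact_match_score("CCN", "CCO"), tanimoto(fp_pred, fp_ref))  # 0 0.8
```

The example shows why a similarity score is too lenient for novelty checking: a structure with one wrong atom is a different compound, yet it can still score 0.8.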

Image Segmentation: Used DECIMER segmentation (with expand option) to split multi-structure images into single images before passing to MolScribe.
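The segment-then-recognize flow can be sketched generically. In this sketch `segment` and `recognize` are hypothetical placeholders for the DECIMER segmentation step and the MolScribe prediction step; their real function names and signatures are deliberately not reproduced, and the string-based stand-ins in the usage lines exist only to make the example runnable.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

Image = TypeVar("Image")


def recognize_multi(
    image: Image,
    segment: Callable[[Image], Iterable[Image]],
    recognize: Callable[[Image], Optional[str]],
) -> List[str]:
    """Split a multi-structure image into crops and recognize each crop."""
    results: List[str] = []
    for crop in segment(image):
        structure = recognize(crop)
        if structure:  # skip crops for which the recognizer returned nothing
            results.append(structure)
    return results


# Toy stand-ins: the "image" is a string and each "crop" is a substring.
structures = recognize_multi(
    "CCO|c1ccccc1|",
    segment=lambda s: s.split("|"),
    recognize=lambda crop: crop or None,
)
print(structures)  # ['CCO', 'c1ccccc1']
```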

Models

| Tool | Version | Architecture |
|---|---|---|
| DECIMER | v2.4.0 | EfficientNet-V2-M encoder + Transformer decoder |
| MolScribe | v1.1.1 | Swin Transformer encoder + Transformer decoder |
| RxnScribe | v1.0 | Specialized for reaction diagrams |
| Reaction DataExtractor | v2.0.0 | Deep-learning extraction from reaction schemes |
| MolVec | v0.9.8 | Rule-based vectorization |
| OSRA | v2.1.5 | Rule-based recognition |
| ChemIC (New) | - | ResNet-50 CNN in PyTorch for 4-class classification |

Evaluation

Key Results on Single Structures (Bucket A, random sample of 400 images):

| Method | Precision | Recall | F1 Score |
|---|---|---|---|
| MolScribe | 87% | 100% | 93% |
| DECIMER | 84% | 100% | 91% |
| MolVec | 75% | 100% | 85% |
| OSRA | 64% | 100% | 78% |

Key Results on Reactions (Bucket C):

| Method | Precision | Recall | F1 Score |
|---|---|---|---|
| RxnScribe | 77% | 99% | 86% |
| OSRA | 64% | 63% | 64% |

Hardware

ChemIC Training: Trained on a machine with 40 Intel(R) Xeon(R) Gold 6226 CPUs. Training took approximately 6 hours; the run was configured for 100 epochs, with early stopping at epoch 26.

Citation

@article{krasnovComparingSoftwareTools2024,
  title = {Comparing Software Tools for Optical Chemical Structure Recognition},
  author = {Krasnov, Aleksei and Barnabas, Shadrack J. and Boehme, Timo and Boyer, Stephen K. and Weber, Lutz},
  year = {2024},
  journal = {Digital Discovery},
  volume = {3},
  number = {4},
  pages = {681--693},
  publisher = {Royal Society of Chemistry},
  doi = {10.1039/D3DD00228D},
  langid = {english}
}