Paper Information

Citation: Campos, D., & Ji, H. (2021). IMG2SMI: Translating Molecular Structure Images to Simplified Molecular-input Line-entry System (No. arXiv:2109.04202). arXiv. https://doi.org/10.48550/arXiv.2109.04202

Publication: arXiv preprint (2021)

Additional Resources:

What kind of paper is this?

This is both a Method and Resource paper:

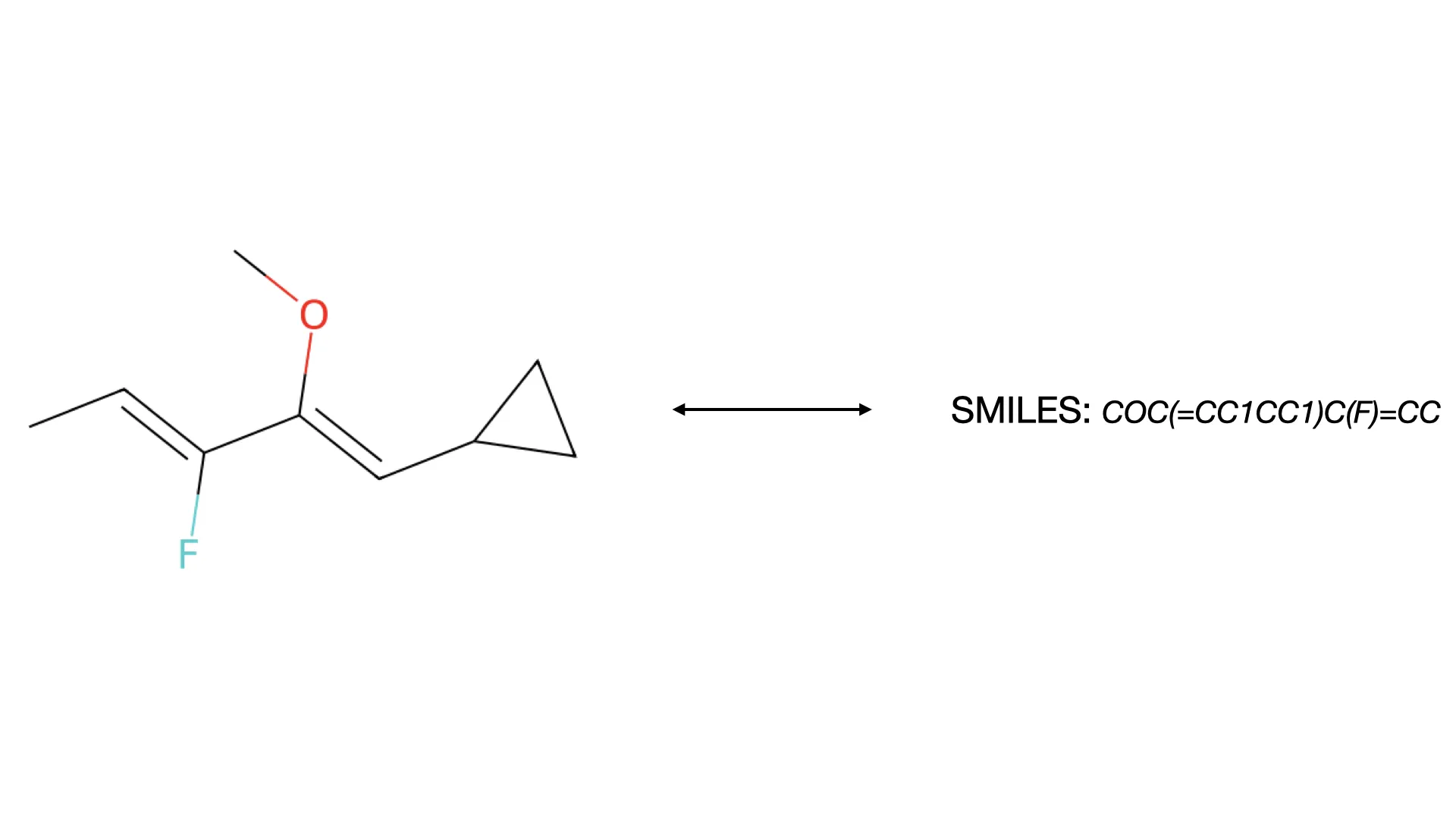

- Method: It adapts standard image captioning architectures (encoder-decoder) to the domain of Optical Chemical Structure Recognition (OCSR), treating molecule recognition as a translation task.

- Resource: It introduces MOLCAP, a massive dataset of 81 million molecules aggregated from public chemical databases, addressing the data scarcity that previously hindered deep learning approaches to OCSR.

What is the motivation?

Chemical literature is “full of recipes written in a language computers cannot understand” because molecules are depicted as 2D images. This creates a fundamental bottleneck:

- The Problem: Chemists must manually redraw molecular structures to search for related compounds or reactions. This is slow, error-prone, and makes large-scale literature mining impossible.

- Existing Tools: Legacy systems like OSRA (Optical Structure Recognition Application) rely on handcrafted rules and often require human correction, making them unfit for unsupervised, high-throughput processing.

- The Goal: An automated system that can translate structure images directly to machine-readable strings (SMILES/SELFIES) without human supervision, enabling large-scale knowledge extraction from decades of chemistry literature and patents.

What is the novelty here?

The core novelty is demonstrating that how you represent the output text is as important as the model architecture itself. Key contributions:

Image Captioning Framework: Applies modern encoder-decoder architectures (ResNet-101 + Transformer) to OCSR, treating it as an image-to-text translation problem.

SELFIES as Target Representation: The “secret sauce” - using SELFIES (Self-Referencing Embedded Strings) as the output format. SELFIES is based on a formal grammar where every possible string corresponds to a valid molecule, eliminating the syntactic invalidity problems (unmatched parentheses, invalid characters) that plague SMILES generation.

MOLCAP Dataset: Created a massive dataset of 81 million unique molecules from PubChem, ChEMBL, GDB13, and other sources. Generated 256x256 pixel images using RDKit for 1 million training samples and 5,000 validation samples.

Task-Specific Evaluation: Demonstrated that traditional NLP metrics (BLEU) are poor indicators of scientific utility. Introduced evaluation based on molecular fingerprints (MACCS, RDK, Morgan) and Tanimoto similarity, which measure functional chemical similarity.

What experiments were performed?

The evaluation focused on comparing IMG2SMI to existing systems and identifying which design choices matter most:

Baseline Comparisons: Benchmarked against OSRA (rule-based system) and DECIMER (first deep learning approach) on the MOLCAP dataset to establish whether modern architectures could surpass traditional methods.

Ablation Studies: Extensive ablations isolating key factors:

- Decoder Architecture: Transformer vs. RNN/LSTM decoders

- Encoder Fine-tuning: Fine-tuned vs. frozen pre-trained ResNet weights

- Output Representation: SELFIES vs. character-level SMILES vs. BPE-tokenized SMILES (the most critical ablation)

| Configuration | MACCS FTS | Valid Captions |

|---|---|---|

| RNN Decoder | ~0.36 | N/A |

| Transformer Decoder | 0.94 | N/A |

| Fixed Encoder Weights | 0.76 | N/A |

| Fine-tuned Encoder | 0.94 | N/A |

| Character-level SMILES | <0.50 | ~2% |

| BPE SMILES (2000 vocab) | 0.85 | ~40% |

| SELFIES | 0.94 | 99.4% |

- Metric Analysis: Systematic comparison of evaluation metrics including BLEU, ROUGE, Levenshtein distance, exact match accuracy, and molecular fingerprint-based similarity measures.

What outcomes/conclusions?

Performance Gains:

| Metric | IMG2SMI | OSRA | DECIMER | Random Baseline |

|---|---|---|---|---|

| MACCS FTS | 0.9475 | 0.3600 | N/A | ~0.20 |

| RDK FTS | 0.9238 | N/A | N/A | N/A |

| Morgan FTS | 0.8848 | N/A | N/A | N/A |

| ROUGE | 0.6240 | 0.0684 | N/A | N/A |

| Exact Match | 7.24% | 0.04% | N/A | 0% |

| Valid Captions | 99.4% | 65.2% | N/A | N/A |

- 163% improvement over OSRA on MACCS Tanimoto similarity

- ~10x improvement on ROUGE scores

- Average Tanimoto similarity exceeds 0.85 (functionally similar molecules even when not exact matches)

Key Findings:

- SELFIES is Critical: Using SELFIES yields 99.4% valid molecules, compared to only ~2% validity for character-level SMILES. This robustness is essential for practical deployment.

- Architecture Matters: Transformer decoder significantly outperforms RNN/LSTM approaches. Fine-tuning the ResNet encoder (vs. frozen weights) yields massive performance gains (e.g., MACCS FTS: 0.76 → 0.94).

- Metric Insights: BLEU is a poor metric for this task. Molecular fingerprint-based Tanimoto similarity is most informative because it measures functional chemical similarity.

Limitations:

- Low Exact Match: Only 7.24% exact matches. The model captures the “gist” (functional groups, overall structure) but misses fine details like exact double bond placement.

- Complexity Bias: Trained on large molecules (average length >40 tokens), so it performs poorly on very simple structures where OSRA still excels.

Conclusion: The work establishes that modern architectures combined with robust molecular representations (SELFIES) can significantly outperform traditional rule-based systems. The system is already useful for literature mining where functional similarity is more important than exact matches, though low exact match accuracy and poor performance on simple molecules indicate clear directions for future work.

Reproducibility Details

Models

Architecture: Image captioning system based on DETR (Detection Transformer) framework.

Visual Encoder:

- Backbone: ResNet-101 pre-trained on ImageNet

- Feature Extraction: 4th layer extraction (convolutions only)

- Output: 2048-dimensional dense feature vector

Caption Decoder:

- Type: Transformer encoder-decoder

- Layers: 3 stacked encoder layers, 3 stacked decoder layers

- Attention Heads: 8

- Hidden Dimensions: 2048 (feed-forward networks)

- Dropout: 0.1

- Layer Normalization: 1e-12

Training Configuration:

- Optimizer: AdamW

- Learning Rate: 5e-5 (selected after sweep from 1e-4 to 1e-6)

- Weight Decay: 1e-4

- Batch Size: 32

- Epochs: 5

- Codebase: Built on open-source DETR implementation

Data

MOLCAP Dataset:

| Property | Value | Notes |

|---|---|---|

| Total Size | 81,230,291 molecules | Aggregated from PubChem, ChEMBL, GDB13 |

| Training Split | 1,000,000 molecules | Randomly selected unique molecules |

| Validation Split | 5,000 molecules | Randomly selected for evaluation |

| Image Resolution | 256x256 pixels | Generated using RDKit |

| Median SELFIES Length | >45 characters | More complex than typical benchmarks |

| Full Dataset Storage | ~16.24 TB | Necessitated use of 1M subset |

| Augmentation | None | No cropping, rotation, or other augmentation |

Preprocessing:

- Images generated using RDKit at 256x256 resolution

- Molecules converted to canonical representations

- SELFIES tokenization for model output

Evaluation

Primary Metrics:

| Metric | IMG2SMI Value | OSRA Baseline | Purpose |

|---|---|---|---|

| MACCS FTS | 0.9475 | 0.3600 | Fingerprint Tanimoto Similarity (functional groups) |

| RDK FTS | 0.9238 | N/A | RDKit fingerprint similarity |

| Morgan FTS | 0.8848 | N/A | Morgan fingerprint similarity (circular) |

| ROUGE | 0.6240 | 0.0684 | Text overlap metric |

| Exact Match | 7.24% | 0.04% | Structural identity (strict) |

| Valid Captions | 99.4% | 65.2% | Syntactic validity (with SELFIES) |

| Levenshtein Distance | Low | High | String edit distance |

Secondary Metrics (shown to be less informative for chemical tasks):

- BLEU, ROUGE (better suited for natural language)

- Levenshtein distance (doesn’t capture chemical similarity)

Hardware

- GPU: Single NVIDIA GeForce RTX 2080 Ti

- Training Time: ~5 hours per epoch, approximately 24 hours total for 5 epochs

- Memory: Sufficient for batch size 32 with ResNet-101 + Transformer architecture

Citation

@article{campos2021img2smi,

title={IMG2SMI: Translating Molecular Structure Images to Simplified Molecular-input Line-entry System},

author={Campos, Daniel and Ji, Heng},

journal={arXiv preprint arXiv:2109.04202},

year={2021},

doi={10.48550/arXiv.2109.04202}

}