Paper Information

Citation: Clevert, D.-A., Le, T., Winter, R., & Montanari, F. (2021). Img2Mol - accurate SMILES recognition from molecular graphical depictions. Chemical Science, 12(42), 14174-14181. https://doi.org/10.1039/D1SC01839F

Publication: Chemical Science (2021)

Additional Resources:

What kind of paper is this?

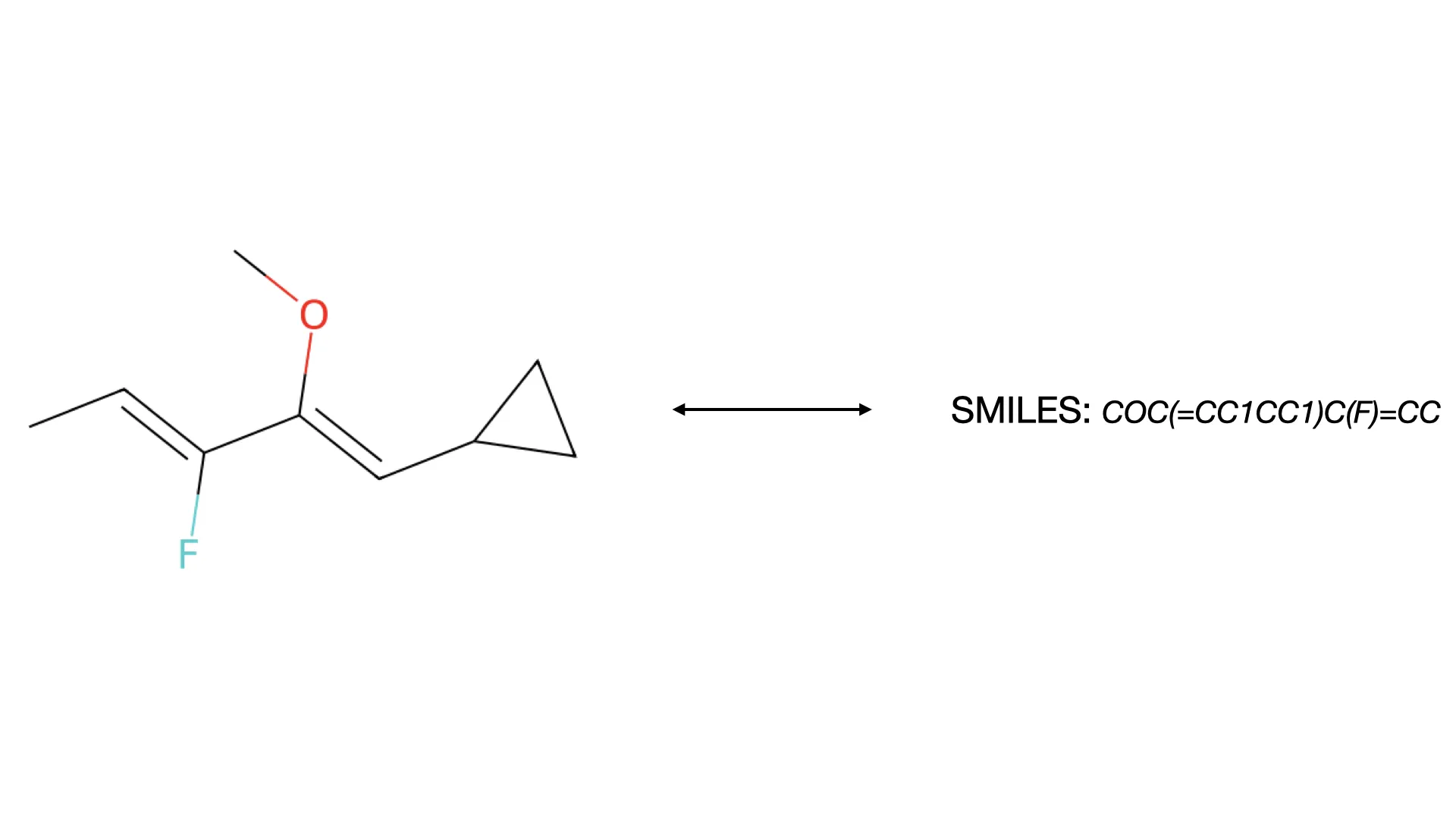

This is a method paper that introduces Img2Mol, a deep learning system for Optical Chemical Structure Recognition (OCSR). The work focuses on building a fast, accurate, and robust system for converting molecular structure depictions into machine-readable SMILES strings.

What is the motivation?

Vast amounts of chemical knowledge exist only as images in scientific literature and patents, making this data inaccessible for computational analysis, database searches, or machine learning pipelines. Manually extracting this information is slow and error-prone, creating a bottleneck for drug discovery and chemical research.

While rule-based OCSR systems like OSRA, MolVec, and Imago exist, they’re brittle - small variations in drawing style or image quality can cause them to fail. The authors argue that a deep learning approach, trained on diverse synthetic data, can generalize better across different depiction styles and handle the messiness of real-world images more reliably.

What is the novelty here?

The novelty lies in a two-stage architecture that separates perception from decoding, combined with aggressive data augmentation to ensure robustness. The key contributions are:

1. Two-Stage Architecture with CDDD Embeddings

Img2Mol uses an intermediate representation to predict SMILES from pixels. A custom CNN encoder maps the input image to a 512-dimensional Continuous and Data-Driven Molecular Descriptor (CDDD) embedding - a pre-trained, learned molecular representation that smoothly captures chemical similarity. A pre-trained decoder then converts this CDDD vector into the final canonical SMILES string.

This two-stage design has several advantages:

- The CDDD space is continuous and chemically meaningful, so nearby embeddings correspond to structurally similar molecules. This makes the regression task easier than learning discrete token sequences directly.

- The decoder is pre-trained and fixed, so the CNN only needs to learn the image → CDDD mapping. This decouples the visual recognition problem from the sequence generation problem.

- CDDD embeddings naturally enforce chemical validity constraints, reducing the risk of generating nonsensical structures.

2. Extensive Data Augmentation for Robustness

The model was trained on 11.1 million unique molecules from ChEMBL and PubChem, but the critical insight is how the training images were generated. To expose the CNN to maximum variation in depiction styles, the authors:

- Used three different cheminformatics libraries (RDKit, OEChem, Indigo) to render images, each with its own drawing conventions

- Applied wide-ranging augmentations: varying bond thickness, font size, rotation, resolution (190-2500 pixels), and other stylistic parameters

- Over-sampled larger molecules to improve performance on complex structures, which are underrepresented in chemical databases

This ensures the network rarely sees the same depiction of a molecule twice, forcing it to learn invariant features.

3. Fast Inference

Because the architecture is a simple CNN followed by a fixed decoder, inference is very fast - especially compared to rule-based systems that rely on iterative graph construction algorithms. This makes Img2Mol practical for large-scale document mining.

What experiments were performed?

The evaluation focused on demonstrating that Img2Mol is more accurate, robust, and generalizable than existing rule-based systems:

Benchmark Comparisons: Img2Mol was tested on several standard OCSR benchmarks - USPTO (patent images), University of Birmingham (UoB), and CLEF datasets - against three open-source baselines: OSRA, MolVec, and Imago. Notably, no deep learning baselines were available at the time for comparison.

Resolution and Molecular Size Analysis: The initial model,

Img2Mol(no aug.), was evaluated across different image resolutions and molecule sizes (measured by number of atoms) to understand failure modes. This revealed that:- Performance degraded for molecules with >35 atoms

- Very high-resolution images lost detail when downscaled to the fixed input size

- Low-resolution images (where rule-based methods failed completely) were handled well

Data Augmentation Ablation: A final model, Img2Mol, was trained with the full augmentation pipeline (wider resolution range, over-sampling of large molecules). Performance was compared to the initial version to quantify the effect of augmentation.

Depiction Library Robustness: The model was tested on images generated by each of the three rendering libraries separately to confirm that training on diverse styles improved generalization.

Generalization Tests: Img2Mol was evaluated on real-world patent images from the STAKER dataset, which were not synthetically generated. This tested whether the model could transfer from synthetic training data to real documents.

Hand-Drawn Molecule Recognition: As an exploratory test, the authors evaluated performance on hand-drawn molecular structures - a task the model was never trained for - to see if the learned features could generalize to completely different visual styles.

Speed Benchmarking: Inference time was measured and compared to rule-based baselines to demonstrate the practical efficiency of the approach.

What were the outcomes and conclusions?

Substantial Performance Gains: Img2Mol outperformed all three rule-based baselines on nearly every benchmark. Accuracy was measured both as exact SMILES match and as Tanimoto similarity (a chemical fingerprint-based metric that measures structural similarity). Even when Img2Mol didn’t predict the exact molecule, it often predicted a chemically similar one.

Robustness Across Conditions: The full Img2Mol model (with aggressive augmentation) showed consistent performance across all image resolutions and molecule sizes. In contrast, rule-based systems were “brittle” - performance dropped sharply with minor perturbations to image quality or style.

Depiction Library Invariance: Img2Mol’s performance was stable across all three rendering libraries (RDKit, OEChem, Indigo), validating the multi-library training strategy. Rule-based methods struggled particularly with RDKit-generated images.

Strong Generalization to Real-World Data: Despite being trained exclusively on synthetic images, Img2Mol performed well on real patent images from the STAKER dataset. This suggests the augmentation strategy successfully captured the diversity of real-world depictions.

Overfitting in Baselines: Rule-based methods performed surprisingly well on older benchmarks (USPTO, UoB, CLEF) but failed on newer datasets (Img2Mol’s test set, STAKER). This suggests they may be implicitly tuned to specific drawing conventions in legacy datasets.

Limited Hand-Drawn Recognition: Img2Mol could recognize simple hand-drawn structures but struggled with complex or large molecules. This is unsurprising given the lack of hand-drawn data in training, but it highlights a potential avenue for future work.

Speed Advantage: Img2Mol was substantially faster than rule-based competitors, especially on high-resolution images. This makes it practical for large-scale literature mining.

The work establishes that deep learning can outperform traditional rule-based OCSR systems when combined with a principled two-stage architecture and comprehensive data augmentation. The CDDD embedding acts as a bridge between visual perception and chemical structure, providing a chemically meaningful intermediate representation that improves both accuracy and robustness. The focus on synthetic data diversity proves to be an effective strategy for generalizing to real-world documents.

Reproducibility Details

Models

Architecture: Custom 8-layer Convolutional Neural Network (CNN) encoder

- Input: $224 \x224$ pixel grayscale images

- Backbone Structure: 8 convolutional layers organized into 3 stacks, followed by 3 fully connected layers

- Stack 1: 3 Conv layers ($7 \x7$ filters, stride 3, padding 4) + Max Pooling

- Stack 2: 2 Conv layers + Max Pooling

- Stack 3: 3 Conv layers + Max Pooling

- Head: 3 fully connected layers

- Output: 512-dimensional CDDD embedding vector

Decoder: Pre-trained CDDD decoder (from Winter et al.) - fixed during training, not updated

Algorithms

Loss Function: Mean Squared Error (MSE) regression minimizing $l(d) = l(\text{cddd}_{\text{true}} - \text{cddd}_{\text{predicted}})$

Optimizer: AdamW with initial learning rate $10^{-4}$

Training Schedule:

- Batch size: 256

- Training duration: 300 epochs

- Plateau scheduler: Multiplies learning rate by 0.7 if validation loss plateaus for 10 epochs

- Early stopping: Triggered if no improvement in validation loss for 50 epochs

Noise Tolerance: The decoder requires the CNN to predict embeddings with noise level $\sigma \le 0.15$ to achieve >90% accuracy

Data

Training Data: 11.1 million unique molecules from ChEMBL and PubChem

Splits: Approximately 50,000 examples each for validation and test sets

Synthetic Image Generation:

- Three cheminformatics libraries: RDKit, OEChem, and Indigo

- Augmentations: Resolution (190-2500 pixels), rotation, bond thickness, font size

- Salt stripping: Keep only the largest fragment

- Over-sampling: Larger molecules (>35 atoms) over-sampled to improve performance

Evaluation

Metrics:

- Exact SMILES match accuracy

- Tanimoto similarity (chemical fingerprint-based structural similarity)

Benchmarks:

- USPTO (patent images)

- University of Birmingham (UoB)

- CLEF dataset

- STAKER (real-world patent images)

- Hand-drawn molecular structures (exploratory)

Baselines: OSRA, MolVec, Imago (rule-based systems)

Hardware

⚠️ Unspecified in paper or supplementary materials. Inference speed reported as ~4 minutes for 5000 images; training hardware (GPU model, count) is undocumented.

Known Limitations

Molecular Size: Performance degrades for molecules with >35 atoms. This is partly a property of the CDDD latent space itself - for larger molecules, the “volume of decodable latent space” shrinks, making the decoder more sensitive to small noise perturbations in the predicted embedding.