Overview

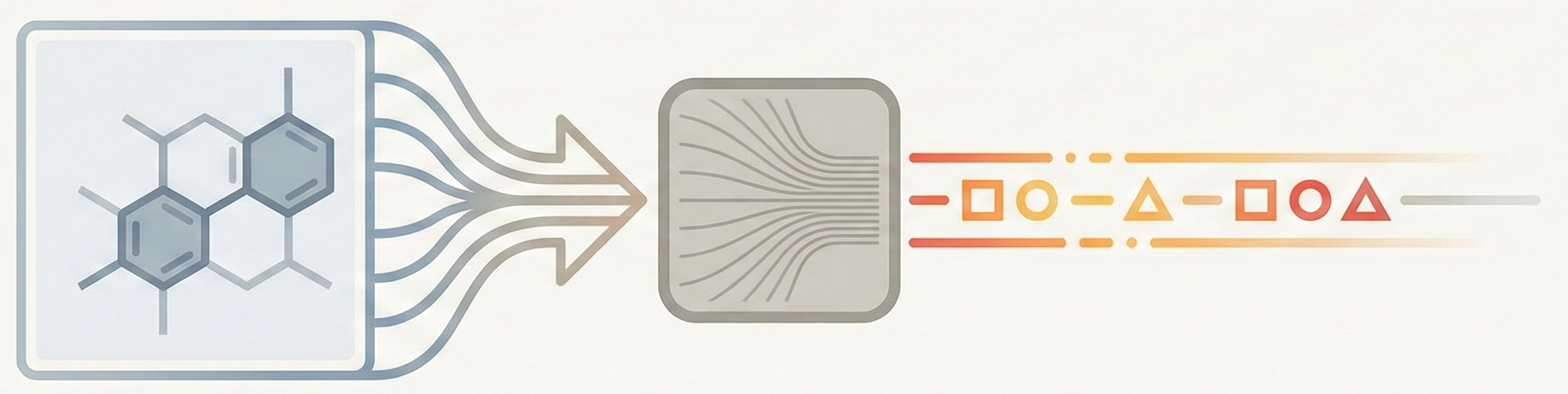

This note provides a comparative analysis of image-to-sequence methods for Optical Chemical Structure Recognition (OCSR). These methods treat molecular structure recognition as an image captioning task, using encoder-decoder architectures to generate sequential molecular representations (SMILES, SELFIES, InChI) directly from pixels.
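The captioning framing boils down to an autoregressive loop: the decoder sees the encoded image plus the tokens emitted so far and proposes the next token. The sketch below shows greedy decoding only. `next_token_scores`, `toy_scorer`, and the `<bos>`/`<eos>` token names are hypothetical stand-ins for a trained model, not any specific method's API.

```python
from typing import Callable, Dict, List, Sequence

BOS, EOS = "<bos>", "<eos>"  # hypothetical special tokens


def greedy_decode(
    next_token_scores: Callable[[Sequence[float], List[str]], Dict[str, float]],
    image_features: Sequence[float],
    max_len: int = 128,
) -> str:
    """Pick the highest-scoring token at each step until EOS or max_len."""
    tokens: List[str] = [BOS]
    for _ in range(max_len):
        scores = next_token_scores(image_features, tokens)
        best = max(scores, key=scores.get)
        if best == EOS:
            break
        tokens.append(best)
    return "".join(tokens[1:])  # drop BOS


def toy_scorer(image_features: Sequence[float], prefix: List[str]) -> Dict[str, float]:
    """Stand-in for a trained decoder: always spells out ethanol ("CCO")."""
    target = ["C", "C", "O", EOS]
    step = len(prefix) - 1  # tokens emitted so far, excluding BOS
    return {tok: (1.0 if tok == target[step] else 0.0) for tok in ("C", "O", EOS)}


print(greedy_decode(toy_scorer, image_features=[0.0]))  # CCO
```

Real systems usually replace the greedy `max` with beam search, but the loop structure is the same.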

For the full taxonomy of OCSR approaches including image-to-graph and rule-based methods, see the OCSR Methods taxonomy.

Architectural Evolution (2019-2025)

The field has undergone rapid architectural evolution, with clear generational shifts in both encoder and decoder design.

Timeline

| Era | Encoder | Decoder | Representative Methods |
|---|---|---|---|
| 2019-2020 | CNN (Inception V3, ResNet) | LSTM/GRU with Attention | Staker et al., DECIMER |
| 2021 | EfficientNet, ViT | Transformer | DECIMER 1.0, Img2Mol, ViT-InChI |
| 2022 | Swin Transformer, ResNet | Transformer | SwinOCSR, Image2SMILES, MICER |
| 2023-2024 | EfficientNetV2, SwinV2 | Transformer | DECIMER.ai, Image2InChI, MMSSC-Net |
| 2025 | EfficientViT, VLMs (Qwen2-VL) | LLM decoders, RL fine-tuning | MolSight, GTR-CoT, OCSU |

Encoder Architectures

| Architecture | Methods Using It | Key Characteristics |
|---|---|---|
| Inception V3 | DECIMER (2020) | Early CNN approach, 299x299 input |
| ResNet-50/101 | IMG2SMI, Image2SMILES, MICER, DGAT | Strong baseline, well-understood |
| EfficientNet-B3 | DECIMER 1.0 | Efficient scaling, compound coefficients |
| EfficientNet-V2-M | DECIMER.ai, DECIMER-Hand-Drawn | Improved training efficiency |
| EfficientViT-L1 | MolSight | Optimized for deployment |
| Swin Transformer | SwinOCSR, MolParser | Hierarchical vision transformer |
| SwinV2 | MMSSC-Net, Image2InChI | Improved training stability |
| Vision Transformer (ViT) | ViT-InChI | Pure attention encoder |
| DenseNet | RFL, Hu et al. RCGD | Dense connections, feature reuse |
| Deep TNT | ICMDT | Transformer-in-Transformer |
| Qwen2-VL | OCSU, GTR-CoT | Vision-language model encoder |

Decoder Architectures

| Architecture | Methods Using It | Output Format |
|---|---|---|
| GRU with Attention | DECIMER, RFL, Hu et al. RCGD | SMILES, RFL, SSML |
| LSTM with Attention | Staker et al., ChemPix, MICER | SMILES |
| Transformer | Most 2021+ methods | SMILES, SELFIES, InChI |
| GPT-2 | MMSSC-Net | SMILES |
| BART | MolParser | E-SMILES |
| Pre-trained CDDD | Img2Mol | Continuous embedding → SMILES |

Output Representation Comparison

The choice of molecular string representation significantly impacts model performance. Representations fall into three categories: core molecular formats for single structures, extended formats for molecular families and variable structures (primarily Markush structures in patents), and specialized representations optimizing for specific recognition challenges.

The Rajan et al. (2022) ablation study compares the core formats directly.

Core Molecular Formats

These represent specific, concrete molecular structures.

| Format | Validity Guarantee | Sequence Length | Key Characteristic | Used By |
|---|---|---|---|---|
| SMILES | No | Shortest (baseline) | Standard, highest accuracy | DECIMER.ai, MolSight, DGAT, most 2023+ |
| DeepSMILES | Partial | ~1.1x SMILES | Reduces non-local dependencies | SwinOCSR |
| SELFIES | Yes (100%) | ~1.5x SMILES | Guaranteed valid molecules | DECIMER 1.0, IMG2SMI |
| InChI | N/A (canonical) | Variable (long) | Unique identifiers, layered syntax | ViT-InChI, ICMDT, Image2InChI |
| FG-SMILES | No | Similar to SMILES | Functional group-aware tokenization | Image2SMILES |

SMILES and Variants

SMILES remains the dominant format because it is compact and achieves the highest accuracy on clean data. Standard SMILES marks ring closures with paired digits and branches with paired parentheses, and the two halves of a pair may sit far apart in the sequence, which creates long-range dependencies that sequence models must learn.

DeepSMILES addresses these non-local syntax dependencies by changing how branches and ring closures are encoded, making sequences easier for neural models to learn. The change does not shorten the output: DeepSMILES sequences are ~1.1x longer than standard SMILES. The format offers partial validity improvements and, with regex-based tokenization, a compact 76-token vocabulary, providing a middle ground between SMILES accuracy and guaranteed validity.
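To make the non-local dependency concrete, the sketch below tokenizes a SMILES string with a regular expression and reports how far apart each ring-closure pair sits, measured in tokens. The pattern is a simplified assumption covering common organic-subset tokens; it is not the tokenizer of any particular OCSR method.

```python
import re
from typing import Dict, List, Tuple

# Simplified token pattern (an assumption): bracket atoms, two-letter halogens,
# two-digit ring labels, single-letter atoms, bond/branch symbols, ring digits.
SMILES_TOKEN = re.compile(
    r"\[[^\]]+\]|Br|Cl|%\d{2}|[BCNOPSFI]|[bcnops]|[()=#\-+\\/:.~*@]|\d"
)


def tokenize(smiles: str) -> List[str]:
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognised characters in {smiles!r}")
    return tokens


def ring_closure_spans(tokens: List[str]) -> List[Tuple[str, int, int]]:
    """Return (label, open_index, close_index) for every ring-closure pair."""
    open_at: Dict[str, int] = {}
    spans = []
    for i, tok in enumerate(tokens):
        if tok.isdigit() or tok.startswith("%"):
            if tok in open_at:
                spans.append((tok, open_at.pop(tok), i))
            else:
                open_at[tok] = i
    return spans


aspirin = tokenize("CC(=O)Oc1ccccc1C(=O)O")
print(len(aspirin))                 # 21
print(ring_closure_spans(aspirin))  # [('1', 8, 14)]
```

Here the decoder must remember for six tokens that ring `1` is still open; in fused polycyclic systems the gap can be much larger.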

SELFIES guarantees 100% valid molecules by design through a context-free grammar, eliminating invalid outputs entirely. This comes at the cost of ~1.5x longer sequences and a typical 2-5% accuracy drop compared to SMILES on exact-match metrics. The validity guarantee makes SELFIES particularly attractive for generative modeling applications.
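For contrast, a SMILES decoder can emit strings that are not even syntactically closed. The sketch below checks only two such failure modes, unbalanced branch parentheses and unpaired ring-closure labels. It is a hedged illustration: it performs no valence or aromaticity checks, so passing it does not imply chemical validity, and a real pipeline would parse with a cheminformatics toolkit instead.

```python
def is_syntactically_closed(smiles: str) -> bool:
    """True if branches are balanced and every ring-closure label is paired."""
    depth = 0
    open_rings = set()
    in_bracket = False  # digits inside [...] are isotopes/charges, not rings
    i = 0
    while i < len(smiles):
        ch = smiles[i]
        if in_bracket:
            in_bracket = ch != "]"
        elif ch == "[":
            in_bracket = True
        elif ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
        elif ch == "%":
            open_rings ^= {smiles[i:i + 3]}  # two-digit label such as %12
            i += 2
        elif ch.isdigit():
            open_rings ^= {ch}  # toggle: first sighting opens, second closes
        i += 1
    return depth == 0 and not open_rings and not in_bracket


print(is_syntactically_closed("c1ccccc1"))  # True
print(is_syntactically_closed("c1ccccc"))   # False: ring 1 never closes
print(is_syntactically_closed("CC(=O"))     # False: branch never closes
```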

InChI uses a layered canonical syntax fundamentally different from SMILES-based formats. While valuable for unique molecular identification, its complex multi-layer structure (formula, connectivity, stereochemistry, isotopes, etc.) and longer sequences make it less suitable for image-to-sequence learning, resulting in lower recognition accuracy.
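The layered syntax is easy to see by splitting an InChI string on `/`. The helper below is a simplified sketch: it assumes each layer after the formula starts with a single lowercase prefix letter and keeps only the last occurrence if a prefix repeats, so it is not a complete InChI parser.

```python
from typing import Dict


def split_inchi_layers(inchi: str) -> Dict[str, str]:
    """Split an InChI string into {layer prefix: layer body}."""
    prefix = "InChI="
    if not inchi.startswith(prefix):
        raise ValueError("not an InChI string")
    parts = inchi[len(prefix):].split("/")
    layers = {"version": parts[0]}
    rest = parts[1:]
    # The formula layer is the only one without a lowercase prefix letter.
    if rest and rest[0] and not rest[0][0].islower():
        layers["formula"] = rest[0]
        rest = rest[1:]
    for part in rest:
        if part:
            layers[part[0]] = part[1:]
    return layers


# Ethanol: formula, connectivity (c) and hydrogen (h) layers.
print(split_inchi_layers("InChI=1S/C2H6O/c1-2-3/h3H,2H2,1H3"))
# L-alanine adds stereo layers (t, m, s).
print(split_inchi_layers(
    "InChI=1S/C3H7NO2/c1-2(4)3(5)6/h2H,4H2,1H3,(H,5,6)/t2-/m0/s1"
))
```

A sequence model has to get every layer right, and the layers are mutually constrained (atom numbering in `c` must agree with `h` and `t`), which is one way to read the lower accuracy reported for InChI outputs.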

Key Findings from Rajan et al. 2022

- SMILES achieves the highest exact-match accuracy on clean synthetic data

- SELFIES guarantees 100% valid molecules, at the cost of a ~2-5% accuracy drop

- InChI is problematic because of its complex layered syntax and longer sequences

- DeepSMILES offers a middle ground, with partial validity improvements through modified syntax

Extended Formats for Variable Structures

Markush structures represent families of molecules, using variable groups (R1, R2, etc.) with textual definitions. They are ubiquitous in patent documents for intellectual property protection. Standard SMILES cannot represent these variable structures.

| Format | Base Format | Key Feature | Used By |
|---|---|---|---|
| E-SMILES | SMILES + XML annotations | Backward-compatible with separator token | MolParser |
| CXSMILES | SMILES + extension block | Substituent tables, compression | MarkushGrapher |

E-SMILES (Extended SMILES) maintains backward compatibility by using a <sep> token to separate core SMILES from XML-like annotations. Annotations encode Markush substituents (<a>index:group</a>), polymer structures (<p>polymer_info</p>), and abstract ring patterns (<r>abstract_ring</r>). The core structure remains parseable by standard RDKit.
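A minimal sketch of how such a string could be split into its core SMILES and annotations, using only the `<sep>` token and the `<a>`, `<p>`, `<r>` tags named above. The example string is illustrative and not taken from the MolParser paper, and `parse_esmiles` is a hypothetical helper name.

```python
import re
from typing import Dict, List, Tuple

# Matches <a>...</a>, <p>...</p> and <r>...</r> annotation tags.
TAG = re.compile(r"<(a|p|r)>(.*?)</\1>")


def parse_esmiles(text: str) -> Tuple[str, Dict[str, List[str]]]:
    """Return (core SMILES, {tag: [annotation bodies]})."""
    core, _, extension = text.partition("<sep>")
    annotations: Dict[str, List[str]] = {"a": [], "p": [], "r": []}
    for tag, body in TAG.findall(extension):
        annotations[tag].append(body)
    return core, annotations


# Illustrative input: a phenyl ring with one variable attachment point.
core, notes = parse_esmiles("*c1ccccc1<sep><a>0:R[1]</a>")
print(core)        # *c1ccccc1
print(notes["a"])  # ['0:R[1]']

# Each <a> body has the form index:group.
index, _, group = notes["a"][0].partition(":")
print(int(index), group)  # 0 R[1]
```

Because everything before `<sep>` is ordinary SMILES, the `core` string can be handed to a standard toolkit while the annotations are processed separately.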

CXSMILES optimizes representation by moving variable groups directly into the main SMILES string as special atoms with explicit atom indexing (e.g., C:1) to link to an extension block containing substituent tables. This handles both frequency variation and position variation in Markush structures.

Specialized Representations

These formats optimize for specific recognition challenges beyond standard single-molecule tasks.

RFL: Ring-Free Language

RFL fundamentally restructures molecular serialization through hierarchical ring decomposition, addressing a core challenge: standard 1D formats (SMILES, SSML) flatten complex 2D molecular graphs, losing explicit spatial relationships.

Mechanism: RFL decomposes molecules into three explicit components:

- Molecular Skeleton (𝒮): Main graph with rings “collapsed”

- Ring Structures (ℛ): Individual ring components stored separately

- Branch Information (ℱ): Connectivity between skeleton and rings

Technical approach:

- Detect all non-nested rings using DFS

- Calculate adjacency (γ) between rings based on shared edges

- Merge isolated rings (γ=0) into SuperAtoms (single node placeholders)

- Merge adjacent rings (γ>0) into SuperBonds (edge placeholders)

- Progressive decoding: predict skeleton first, then conditionally decode rings using stored hidden states
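The adjacency step can be sketched as follows, assuming rings have already been detected and are given as ordered lists of atom indices. The code only computes γ as the number of shared edges and separates isolated rings from adjacent ones; the actual SuperAtom/SuperBond merging and the progressive decoder are not implemented, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

Edge = FrozenSet[int]


def ring_edges(ring: List[int]) -> Set[Edge]:
    """Undirected edges of a ring given as an ordered list of atom indices."""
    n = len(ring)
    return {frozenset((ring[i], ring[(i + 1) % n])) for i in range(n)}


def ring_adjacency(rings: List[List[int]]) -> Dict[Tuple[int, int], int]:
    """gamma[(i, j)] = number of edges shared by ring i and ring j."""
    edges = [ring_edges(r) for r in rings]
    return {
        (i, j): len(edges[i] & edges[j])
        for i, j in combinations(range(len(rings)), 2)
    }


def classify_rings(rings: List[List[int]]) -> Dict[str, List[int]]:
    """Rings with gamma = 0 to every other ring are isolated; the rest are adjacent."""
    fused: Set[int] = set()
    for (i, j), shared in ring_adjacency(rings).items():
        if shared > 0:
            fused.update((i, j))
    isolated = [i for i in range(len(rings)) if i not in fused]
    return {"isolated": isolated, "adjacent": sorted(fused)}


# Naphthalene: two six-membered rings sharing the 4-5 edge.
naphthalene = [[0, 1, 2, 3, 4, 5], [4, 5, 6, 7, 8, 9]]
# Biphenyl: two rings joined by a single non-ring bond (5-6).
biphenyl = [[0, 1, 2, 3, 4, 5], [6, 7, 8, 9, 10, 11]]

print(ring_adjacency(naphthalene))  # {(0, 1): 1}
print(classify_rings(naphthalene))  # {'isolated': [], 'adjacent': [0, 1]}
print(classify_rings(biphenyl))     # {'isolated': [0, 1], 'adjacent': []}
```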

Performance: RFL achieves state-of-the-art exact-match (EM) accuracy on both handwritten (95.38%) and printed (95.58%) structures. It is particularly strong on high-complexity molecules, where standard baselines fail completely: accuracy on the hardest tier rises from 0% to roughly 30%.

Note: RFL does not preserve the original drawing orientation; it focuses on computational efficiency through hierarchical decomposition.

SSML: Structure-Specific Markup Language

SSML is the primary orientation-preserving format in OCSR. Based on Chemfig (a LaTeX chemical drawing package), it provides step-by-step drawing instructions.

Key characteristics:

- Describes how to draw the molecule alongside its graph structure

- Uses “reconnection marks” for cyclic structures

- Preserves branch angles and spatial relationships

- Significantly outperformed SMILES for handwritten recognition: 92.09% vs 81.89% EM (Hu et al. RCGD 2023)

Use case: Particularly valuable for hand-drawn structure recognition where visual alignment between image and reconstruction sequence aids model learning.

Training Data Comparison

Training data scale has grown dramatically, with a shift toward combining synthetic and real-world images.

Data Scale Evolution

| Year | Typical Scale | Maximum Reported | Primary Source |
|---|---|---|---|
| 2019-2020 | 1-15M | 57M (Staker) | Synthetic (RDKit, CDK) |
| 2021-2022 | 5-35M | 35M (DECIMER 1.0) | Synthetic with augmentation |
| 2023-2024 | 100-150M | 450M+ (DECIMER.ai) | Synthetic + real patents |
| 2025 | 1-10M + real | 7.7M (MolParser) | Curated real + synthetic |

Synthetic vs Real Data

| Method | Training Data | Real-World Performance Notes |
|---|---|---|
| DECIMER.ai | 450M+ synthetic (RanDepict) | Strong generalization via domain randomization |
| MolParser | 7.7M with active learning | Explicitly targets “in the wild” images |
| GTR-CoT | Real patent/paper images | Chain-of-thought improves reasoning |
| MolSight | Multi-stage curriculum | RL fine-tuning for stereochemistry |

Data Augmentation Strategies

Common augmentation techniques across methods:

| Technique | Purpose | Used By |
|---|---|---|
| Rotation | Orientation invariance | Nearly all methods |
| Gaussian blur | Image quality variation | DECIMER, MolParser |
| Salt-and-pepper noise | Scan artifact simulation | DECIMER, Image2SMILES |
| Affine transforms | Perspective variation | ChemPix, MolParser |
| Font/style variation | Rendering diversity | RanDepict (DECIMER.ai) |
| Hand-drawn simulation | Sketch-like inputs | ChemPix, ChemReco, DECIMER-Hand-Drawn |
| Background variation | Document context | MolParser, DECIMER.ai |
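As one concrete case from the table, salt-and-pepper noise can be sketched in a few lines. This is a dependency-free illustration on a grayscale image stored as nested lists; the `amount` parameter and function name are assumptions, and the listed methods apply their own implementations and noise levels.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 = black, 255 = white


def salt_and_pepper(image: Image, amount: float, rng: random.Random) -> Image:
    """Return a copy in which roughly `amount` of the pixels are set to 0 or 255."""
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must lie in [0, 1]")
    noisy = [row[:] for row in image]  # leave the input untouched
    for row in noisy:
        for x in range(len(row)):
            if rng.random() < amount:
                row[x] = rng.choice((0, 255))
    return noisy


# A blank 4x8 "page" with a short horizontal bond drawn in black.
page = [[255] * 8 for _ in range(4)]
page[2][2:6] = [0, 0, 0, 0]

rng = random.Random(0)  # fixed seed so the augmentation is reproducible
for row in salt_and_pepper(page, amount=0.1, rng=rng):
    print("".join("#" if px == 0 else "." for px in row))
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible across runs, which helps when comparing training configurations.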

Hardware and Compute Requirements

Hardware requirements span several orders of magnitude, from consumer GPUs to TPU pods.

Training Hardware Comparison

| Method | Hardware | Training Time | Dataset Size |
|---|---|---|---|
| Staker et al. (2019) | 8x GPUs | 26 days | 57M |
| IMG2SMI (2021) | 1x RTX 2080 Ti | 5 epochs | ~10M |
| Image2SMILES (2022) | 4x V100 | 2 weeks | 30M |
| MICER (2022) | 4x V100 | 42 hours | 10M |
| DECIMER 1.0 (2021) | TPU v3-8 | Not reported | 35M |
| DECIMER.ai (2023) | TPU v3-256 | Not reported | 450M+ |
| SwinOCSR (2022) | 4x RTX 3090 | 5 days | 5M |
| MolParser (2025) | 8x A100 | Curriculum learning | 7.7M |
| MolSight (2025) | Not specified | RL fine-tuning (GRPO) | Multi-stage |

Inference Considerations

Few papers report inference speed consistently. Available data:

| Method | Inference Speed | Notes |
|---|---|---|
| DECIMER 1.0 | 4x faster than DECIMER | TensorFlow Lite optimization |
| OSRA (baseline) | ~1 image/sec | CPU-based rule system |
| MolScribe | Real-time capable | Optimized Swin encoder |

Accessibility Tiers

| Tier | Hardware | Representative Methods |
|---|---|---|
| Consumer | 1x RTX 2080/3090 | IMG2SMI, ChemPix |
| Workstation | 4x V100/A100 | Image2SMILES, MICER, SwinOCSR |
| Cloud/HPC | TPU pods, 8+ A100 | DECIMER.ai, MolParser |

Benchmark Performance

Common Evaluation Datasets

| Dataset | Type | Size | Challenge |
|---|---|---|---|
| USPTO | Patent images | ~5K test | Real-world complexity |
| UOB | Scanned images | ~5K test | Scan artifacts |
| Staker | Synthetic | Variable | Baseline synthetic |
| CLEF | Patent images | ~1K test | Markush structures |
| JPO | Japanese patents | ~1K test | Different rendering styles |

Accuracy Comparison (Exact Match %)

Methods are roughly grouped by evaluation era; direct comparison is complicated by different test sets.

| Method | USPTO | UOB | Staker | Notes |
|---|---|---|---|---|
| OSRA (baseline) | ~70% | ~65% | ~80% | Rule-based reference |
| DECIMER 1.0 | ~85% | ~80% | ~90% | First transformer-based |
| SwinOCSR | ~88% | ~82% | ~92% | Swin encoder advantage |
| DECIMER.ai | ~90% | ~85% | ~95% | Scale + augmentation |
| MolParser | ~92% | ~88% | ~96% | Real-world focus |
| MolSight | ~93%+ | ~89%+ | ~97%+ | RL fine-tuning boost |

Note: Numbers are approximate and may vary by specific test split. See individual paper notes for precise figures.
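For reference, exact-match accuracy is the percentage of predictions whose string equals the reference after both are brought into a common form. The sketch below takes the canonicalization step as a caller-supplied function and defaults to the identity; it is an assumption that in practice this slot is filled by a toolkit's canonical SMILES routine, and individual papers may differ in how they normalize (for example, whether stereochemistry is kept).

```python
from typing import Callable, Sequence


def exact_match(
    predictions: Sequence[str],
    references: Sequence[str],
    canonicalize: Callable[[str], str] = lambda s: s,
) -> float:
    """Percentage of predictions equal to their reference after canonicalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        raise ValueError("cannot score an empty test set")
    hits = sum(
        canonicalize(p) == canonicalize(r)
        for p, r in zip(predictions, references)
    )
    return 100.0 * hits / len(references)


preds = ["CCO", "c1ccccc1", "CC(=O)O"]
refs = ["CCO", "C1=CC=CC=C1", "CC(=O)O"]

# Raw string comparison penalizes the Kekulé vs aromatic spelling of benzene.
print(round(exact_match(preds, refs), 2))  # 66.67

# Toy stand-in for a real canonicalizer: maps one known alias by hand.
alias = {"C1=CC=CC=C1": "c1ccccc1"}
print(exact_match(preds, refs, canonicalize=lambda s: alias.get(s, s)))  # 100.0
```

The gap between the two scores shows why numbers from different papers are hard to compare unless the normalization step is the same.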

Stereochemistry Recognition

Stereochemistry remains a persistent challenge across all methods:

| Method | Approach | Stereo Accuracy |
|---|---|---|
| Most methods | Standard SMILES | Lower than non-stereo |
| MolSight | RL (GRPO) specifically for stereo | Improved |
| MolNexTR | Graph-based explicit stereo | Better handling |
| Image2InChI | InChI stereo layers | Mixed results |

Hand-Drawn Recognition

A distinct sub-lineage focuses on hand-drawn/sketched chemical structures.

| Method | Target Domain | Key Innovation |
|---|---|---|
| ChemPix (2021) | Hand-drawn hydrocarbons | First deep learning for sketches |
| Hu et al. RCGD (2023) | Hand-drawn structures | Random conditional guided decoder |
| ChemReco (2024) | Hand-drawn C-H-O structures | EfficientNet + curriculum learning |
| DECIMER-Hand-Drawn (2024) | General hand-drawn | Enhanced DECIMER architecture |

Hand-Drawn vs Printed Trade-offs

- Hand-drawn methods sacrifice some accuracy on clean printed images

- They require specialized training data (synthetic hand-drawn simulation)

- Their training sets are generally smaller because data collection is difficult

- They are better suited to educational and lab notebook applications

Key Innovations by Method

| Method | Primary Innovation |
|---|---|
| Staker et al. | First end-to-end deep learning OCSR |
| DECIMER 1.0 | Transformer decoder + SELFIES |
| Img2Mol | Continuous embedding space (CDDD) |
| Image2SMILES | Functional group-aware SMILES (FG-SMILES) |
| SwinOCSR | Hierarchical vision transformer encoder |
| DECIMER.ai | Massive scale + RanDepict augmentation |
| MolParser | Extended SMILES + active learning |
| MolSight | RL fine-tuning (GRPO) for accuracy |
| GTR-CoT | Chain-of-thought graph traversal |
| OCSU | Multi-task vision-language understanding |
| RFL | Hierarchical ring decomposition with SuperAtoms/SuperBonds |

Open Challenges

- Stereochemistry: Consistent challenge across all methods; RL approaches (MolSight) show promise

- Abbreviations/R-groups: E-SMILES and Markush-specific methods emerging

- Real-world robustness: Gap between synthetic training and patent/paper images

- Inference speed: Rarely reported; important for production deployment

- Memory efficiency: Almost never documented; limits accessibility

- Multi-molecule images: Most methods assume single isolated structure

References

Individual paper notes linked throughout. For the complete method listing, see the OCSR Methods taxonomy.