Paper Information

Citation: Hewahi, N., Nounou, M. N., Nassar, M. S., Abu-Hamad, M. I., & Abu-Hamad, H. I. (2008). Chemical Ring Handwritten Recognition Based on Neural Networks. Ubiquitous Computing and Communication Journal, 3(3).

Publication: Ubiquitous Computing and Communication Journal, 2008

What kind of paper is this?

This is a Method paper ($\Psi_{\text{Method}}$).

It proposes a specific algorithmic architecture (the “Classifier-Recognizer Approach”) to solve a pattern recognition problem. The rhetorical structure centers on defining three variations of a method, performing ablation-like comparisons between them (Whole Image vs. Lower Part), and demonstrating superior performance metrics (~94% accuracy) for the proposed technique.

What is the motivation?

The authors identify a gap in existing OCR and handwriting recognition research, which typically focuses on alphanumeric characters or whole words.

- Missing Capability: Recognition of specific heterocyclic chemical rings (23 types) had not been performed previously.

- Practical Utility: Existing chemical search engines require text-based queries (names); this work enables “backward” search where a user can draw a ring to find its information.

- Educational/Professional Aid: Useful for chemistry departments and mobile applications where chemists can sketch formulas on screens.

What is the novelty here?

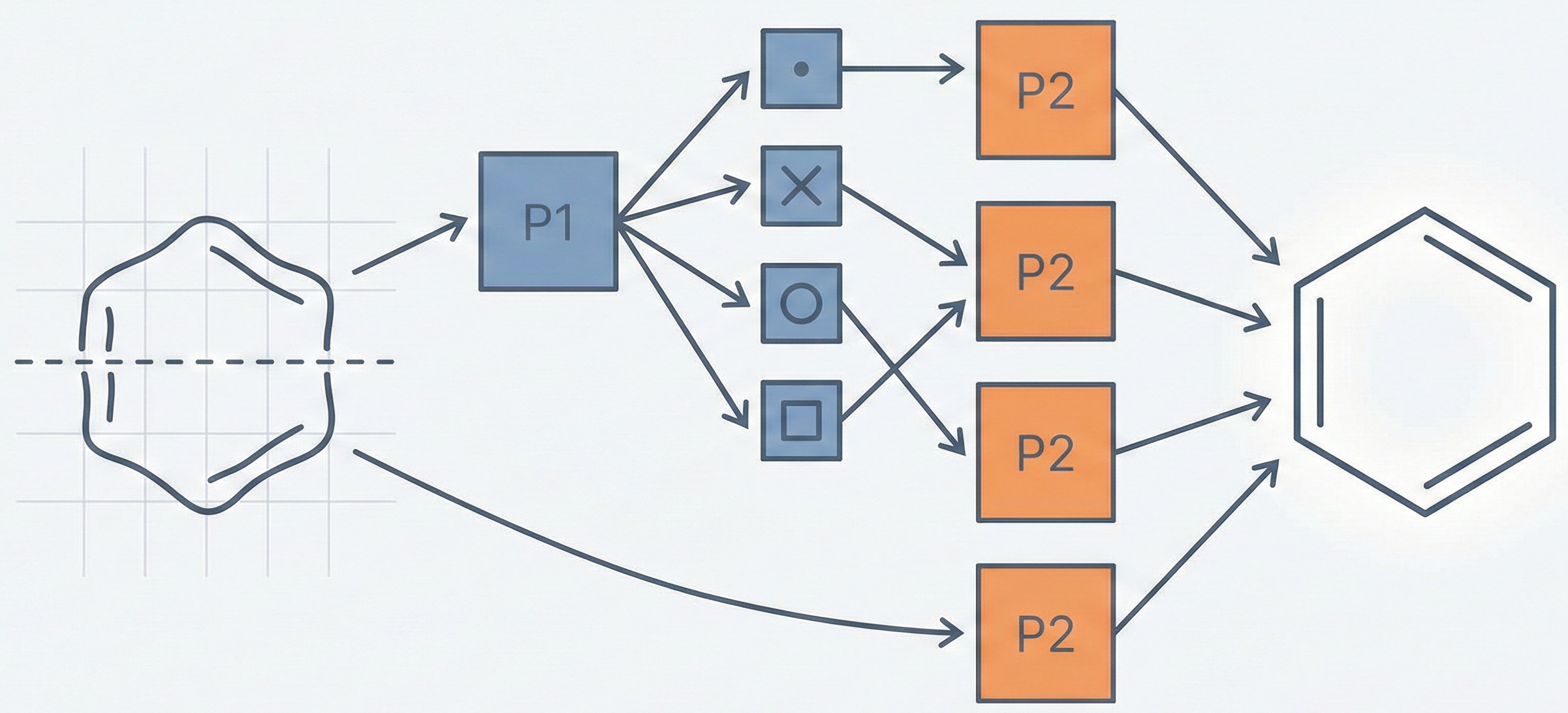

The core novelty is the two-phase “Classifier-Recognizer” architecture designed to handle the visual similarity of heterocyclic rings:

- Phase 1 (Classifier): A neural network classifies the ring into one of four broad categories (S, N, O, Others) based solely on the upper part of the image (40x15 pixels).

- Phase 2 (Recognizer): A class-specific neural network identifies the exact ring.

- Optimization: The most successful variation (“Lower Part Image Recognizer with Half Size Grid”) uses only the lower part of the image and odd rows (half-grid) to reduce input dimensionality and computation time while improving accuracy.
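The two-phase flow above can be sketched as a simple dispatch in Python. This is only an illustration under stated assumptions: the names `recognize`, `stub_classifier`, and `stub_recognizers` are hypothetical, the neural networks are replaced by stub callables, the ring labels are placeholders, and the 15-row upper part follows the 40x15 figure quoted above.

```python
from typing import Callable, Dict, List, Tuple

Grid = List[List[int]]  # rows of 0/1 pixels

CLASSES = ("S", "N", "O", "Others")


def recognize(
    grid: Grid,
    classify: Callable[[Grid], str],
    recognizers: Dict[str, Callable[[Grid], str]],
    upper_rows: int = 15,  # assumption: upper part = first 15 rows
) -> Tuple[str, str]:
    """Phase 1 picks a broad class from the upper part of the image;
    Phase 2 hands the image to that class's own recognizer."""
    upper = grid[:upper_rows]
    ring_class = classify(upper)
    if ring_class not in recognizers:
        raise KeyError(f"no recognizer registered for class {ring_class!r}")
    return ring_class, recognizers[ring_class](grid)


# Stand-ins for the trained networks (hypothetical, for illustration only).
def stub_classifier(upper: Grid) -> str:
    return "S" if any(any(row) for row in upper) else "Others"


stub_recognizers = {
    name: (lambda grid, name=name: f"{name}-ring-placeholder")
    for name in CLASSES
}

blank = [[0] * 40 for _ in range(40)]
marked = [row[:] for row in blank]
marked[3][20] = 1  # a single ink pixel in the upper part

print(recognize(marked, stub_classifier, stub_recognizers))
print(recognize(blank, stub_classifier, stub_recognizers))
```

Keeping one recognizer per class means each second-phase model only has to separate rings that share a class, which is the motivation the summary gives for the split.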

What experiments were performed?

The authors conducted a comparative study of three methodological variations:

- Whole Image Recognizer: Uses the full image.

- Whole Image (Half Size Grid): Uses only odd rows ($20 \times 40$ pixels).

- Lower Part (Half Size Grid): Uses the lower part of the image with odd rows (the proposed method).

Setup:

- Dataset: 23 types of heterocyclic rings.

- Training: 1500 samples (distributed across S, N, O, and Others classes).

- Testing: 1150 samples.

- Metric: Recognition accuracy (Performance %) and Error %.

What were the outcomes and conclusions drawn?

- Superior Method: The “Lower Part Image Recognizer with Half Size Grid” achieved the best performance (~94% overall).

- High Classifier Accuracy: The first phase (classification into S/N/O/Other) is highly robust, with 100% accuracy for class ‘S’ and >97% for others.

- Class ‘Others’ Difficulty: The ‘Others’ class showed lower performance (~90-93%) compared to S/N/O due to the higher complexity and similarity of rings in that category.

- Efficiency: The half-grid approach halved the input size (from 1600 to 800 inputs) and reduced training time without sacrificing accuracy.

Reproducibility Details

Data

The dataset consists of handwritten samples of 23 specific heterocyclic rings.

| Purpose | Dataset | Size | Notes |
|---|---|---|---|
| Training | Heterocyclic Rings | 1500 samples | Split: 300 (S), 400 (N), 400 (O), 400 (Others) |
| Testing | Heterocyclic Rings | 1150 samples | Split: 150 (S), 300 (O), 400 (N), 300 (Others) |

Preprocessing Steps:

- Monochrome Conversion: Convert image to monochrome bitmap.

- Grid Scaling: Convert drawing area (regardless of original size) to a fixed 40x40 grid.

- Bounding: Scale the ring shape itself to fit the 40x40 grid.
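The preprocessing steps above can be sketched in plain Python. This is a sketch under assumptions, not the authors' implementation: the grayscale threshold of 128, the nearest-neighbour resampling, and the helper names (`to_monochrome`, `bounding_box`, `scale_to_grid`) are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Image = List[List[int]]


def to_monochrome(gray: Image, threshold: int = 128) -> Image:
    """Map dark pixels to 1 (ink) and light pixels to 0 (background)."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def bounding_box(mono: Image) -> Tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the ink, inclusive."""
    rows = [r for r, row in enumerate(mono) if any(row)]
    cols = [c for c in range(len(mono[0])) if any(row[c] for row in mono)]
    if not rows:
        raise ValueError("drawing area contains no ink")
    return rows[0], rows[-1], cols[0], cols[-1]


def scale_to_grid(mono: Image, size: int = 40) -> Image:
    """Stretch the ink's bounding box onto a size x size grid
    using nearest-neighbour sampling (an assumed resampling rule)."""
    top, bottom, left, right = bounding_box(mono)
    height = bottom - top + 1
    width = right - left + 1
    return [
        [mono[top + (r * height) // size][left + (c * width) // size]
         for c in range(size)]
        for r in range(size)
    ]


# Example: a hollow rectangle drawn on a 100 x 80 white canvas.
gray = [[255] * 80 for _ in range(100)]
for r in range(10, 30):
    for c in range(20, 60):
        if r in (10, 29) or c in (20, 59):
            gray[r][c] = 0

grid = scale_to_grid(to_monochrome(gray))
print(len(grid), len(grid[0]))
```

Because the shape is scaled from its own bounding box, the result does not depend on where or how large the ring was drawn, which matches the "regardless of original size" requirement.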

Algorithms

The “Lower Part with Half Size” Pipeline:

- Cut Point: A horizontal midline is defined; the algorithm separates the “Upper Part” and “Lower Part”.

- Phase 1 Input: The Upper Part (rows 0-15 approx, scaled) is fed to the Classifier NN to determine the class (S, N, O, or Others).

- Phase 2 Input:

    - For classes S, N, O: The Lower Part of the image is used.

    - For class Others: The Whole Ring is used.

- Dimensionality Reduction: For the recognizer networks, only odd rows are used (effectively a 20x40 input grid) to reduce inputs from 1600 to 800.
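The region selection and half-grid reduction in this pipeline can be sketched as follows. The cut row of 15, the reading of "odd rows" as the 1st, 3rd, 5th, ... rows, and the helper names are assumptions for illustration; the paper may define the cut point and row numbering differently.

```python
from typing import List, Tuple

Grid = List[List[int]]


def split_parts(grid: Grid, cut_row: int = 15) -> Tuple[Grid, Grid]:
    """Split the grid at a horizontal cut into (upper, lower)."""
    return grid[:cut_row], grid[cut_row:]


def odd_rows(rows: Grid) -> Grid:
    """Keep every second row, starting with the first (half-size grid)."""
    return rows[0::2]


def flatten(rows: Grid) -> List[int]:
    """Turn a 2D region into the flat input vector a network expects."""
    return [px for row in rows for px in row]


def recognizer_input(grid: Grid, ring_class: str, cut_row: int = 15) -> List[int]:
    """Select the Phase 2 region for the class, then apply the half grid."""
    _, lower = split_parts(grid, cut_row)
    region = grid if ring_class == "Others" else lower
    return flatten(odd_rows(region))


# Each pixel stores its row index so the selected rows are easy to inspect.
grid = [[r] * 40 for r in range(40)]

upper, lower = split_parts(grid)
classifier_input = flatten(upper)

print(len(classifier_input))                  # size of the 40x15 upper part
print(len(recognizer_input(grid, "Others")))  # whole ring on the half grid
```

With these assumptions the whole-ring half grid has 20 rows of 40 pixels, which reproduces the reduction from 1600 to 800 inputs mentioned above.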

Models

The system uses multiple distinct feed-forward neural networks (backpropagation is implied by the “training” and “epochs” context, though the training algorithm is not explicitly named):

- Structure: 1 Classifier NN + 4 Recognizer NNs (one for each class).

- Hidden Layers: The preliminary experiments used 1600 hidden units. The hidden node count for the final method is not explicitly tabulated; it is likely smaller because of the reduced inputs, and Table 2 mentions “50” and “1000” hidden nodes for different trials.

- Input Nodes:

    - Standard: 1600 (40x40).

    - Optimized: ~800 (20x40 via half-grid).
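To make the network shapes concrete, here is a minimal forward pass for one feed-forward network with 800 inputs. Everything beyond the input size is an assumption: a single hidden layer, sigmoid activations, 50 hidden units (one of the values Table 2 is said to mention), random untrained weights, and an illustrative output count of 6, since the number of rings per class is not given here.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Small random weights, zero biases (untrained, for shape checking only)."""
    weights = [[rng.uniform(-0.05, 0.05) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Propagate an input vector through each fully connected layer."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


rng = random.Random(0)
N_INPUTS, N_HIDDEN, N_OUTPUTS = 800, 50, 6  # hidden/output sizes are assumed
network = [init_layer(N_INPUTS, N_HIDDEN, rng),
           init_layer(N_HIDDEN, N_OUTPUTS, rng)]

pixels = [float(rng.randint(0, 1)) for _ in range(N_INPUTS)]
scores = forward(pixels, network)
predicted_index = scores.index(max(scores))
print(len(scores), predicted_index)
```

Halving the input grid halves the first-layer weight count for a fixed hidden size (here 800 x 50 instead of 1600 x 50), which is consistent with the shorter training time reported.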

Evaluation

Testing Results (Lower Part Image Recognizer with Half Size Grid):

| Class | Samples | Correct | Accuracy | Error |
|---|---|---|---|---|
| S | 150 | 147 | 98.00% | 2.00% |
| O | 300 | 289 | 96.33% | 3.67% |
| N | 400 | 386 | 96.50% | 3.50% |
| Others | 300 | 279 | 93.00% | 7.00% |
| Overall | 1150 | - | ~94.0% | - |
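The per-class accuracy and error figures follow directly from the sample and correct counts, as the short check below shows. The variable names are hypothetical. Note that pooling the four class rows gives 1101 correct out of 1150 (about 95.7%), which is higher than the ~94% overall figure; this summary does not state how the overall figure was computed (it may, for example, account for first-phase classifier errors), so that difference is left as is.

```python
# (samples, correct) per class, copied from the table above
results = {
    "S": (150, 147),
    "O": (300, 289),
    "N": (400, 386),
    "Others": (300, 279),
}


def accuracy(correct: int, total: int) -> float:
    """Recognition accuracy as a percentage."""
    return 100 * correct / total


per_class = {
    name: round(accuracy(correct, total), 2)
    for name, (total, correct) in results.items()
}
per_class_error = {name: round(100 - acc, 2) for name, acc in per_class.items()}

total_samples = sum(total for total, _ in results.values())
total_correct = sum(correct for _, correct in results.values())
pooled = round(accuracy(total_correct, total_samples), 2)

for name in results:
    print(f"{name}: {per_class[name]:.2f}% correct, {per_class_error[name]:.2f}% error")
print(f"pooled over class rows: {total_correct}/{total_samples} = {pooled:.2f}%")
```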

Citation

@article{hewahiCHEMICALRINGHANDWRITTEN2008,

title = {CHEMICAL RING HANDWRITTEN RECOGNITION BASED ON NEURAL NETWORKS},

author = {Hewahi, Nabil and Nounou, Mohamed N and Nassar, Mohamed S and Abu-Hamad, Mohamed I and Abu-Hamad, Husam I},

year = {2008},

journal = {Ubiquitous Computing and Communication Journal},

volume = {3},

number = {3}

}