Paper Information

Citation: Rajan, K., Brinkhaus, H.O., Zielesny, A. et al. (2024). Advancements in hand-drawn chemical structure recognition through an enhanced DECIMER architecture. Journal of Cheminformatics, 16(1), 78. https://doi.org/10.1186/s13321-024-00872-7

Publication: Journal of Cheminformatics 2024

What kind of paper is this?

This is a Method paper. It proposes an enhanced neural network architecture (EfficientNetV2 + Transformer) specifically designed to solve the problem of recognizing hand-drawn chemical structures. The primary contribution is architectural optimization and a data-driven training strategy, validated through ablation studies (comparing encoders) and SOTA benchmarking against existing rule-based and deep learning tools.

What is the motivation?

Chemical information in legacy laboratory notebooks and modern tablet-based inputs often exists as hand-drawn sketches.

- Gap: Existing Optical Chemical Structure Recognition (OCSR) tools—particularly rule-based ones—lack robustness and fail when images have variability in style, line thickness, or noise.

- Need: There is a critical need for automated tools to digitize this “dark data” effectively to preserve it and make it machine-readable/searchable.

What is the novelty here?

The core novelty is the architectural enhancement and synthetic training strategy:

- Decoder-Only Transformer: Moving from a full Transformer (encoder-decoder) to a Decoder-only setup significantly improved performance.

- EfficientNetV2 Integration: Replacing standard CNNs or EfficientNetV1 with EfficientNetV2-M provided better feature extraction and 2x faster training speeds.

- Scale of Synthetic Data: The authors demonstrate that scaling synthetic training data (up to 152 million images generated by RanDepict) directly correlates with improved generalization to real-world hand-drawn images, without ever training on real hand-drawn data.

What experiments were performed?

- Model Selection (Ablation): Tested three architectures (EfficientNetV2-M + Full Transformer, EfficientNetV1-B7 + Decoder-only, EfficientNetV2-M + Decoder-only) on standard benchmarks (JPO, CLEF, USPTO, UOB).

- Data Scaling: Trained the best model on four progressively larger datasets (from 4M to 152M images) to measure performance gains.

- Real-World Benchmarking: Validated the final model on the DECIMER Hand-drawn dataset (5,088 real images drawn by volunteers) and compared it against 9 other tools (OSRA, MolVec, Img2Mol, MolScribe, etc.).

What were the outcomes and conclusions drawn?

- SOTA Performance: The final DECIMER model achieved 99.72% valid predictions and 73.25% exact accuracy on the hand-drawn benchmark, significantly outperforming the next best tool (MolScribe at ~8% accuracy on this specific dataset).

- Robustness: Deep learning methods vastly outperform rule-based methods (which had <3% accuracy) on hand-drawn data.

- Data Saturation: Quadrupling the dataset from 38M to 152M images yielded only marginal gains (~3% accuracy), suggesting current synthetic data strategies may be hitting a plateau.

Reproducibility Details

Data

The model was trained entirely on synthetic data generated using the RanDepict toolkit. No real hand-drawn images were used for training.

| Purpose | Dataset Source | Size (Molecules) | Images Generated | Notes |
|---|---|---|---|---|
| Training (Phase 1) | ChEMBL-32 | ~2.2M | ~4.4M - 13.1M | Used for model selection |
| Training (Phase 2) | PubChem | ~9.5M | ~38M | Scaling experiment |
| Training (Final) | PubChem | ~38M | 152.16M | Final model training |
| Evaluation | DECIMER Hand-Drawn | 5,088 images | N/A | Real-world benchmark |

Preprocessing:

- SMILES strings were limited to fewer than 300 characters.

- Images were resized to $512 \times 512$ pixels.

- Images generated with and without “hand-drawn style” augmentations.
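
The first two preprocessing steps can be sketched in plain Python. This is a minimal illustration, not the authors' pipeline: the function names are hypothetical, and nearest-neighbour interpolation is an assumption, since the text states only the target size.

```python
MAX_SMILES_LEN = 300  # from the text: SMILES must be shorter than 300 characters
TARGET_SIZE = 512     # from the text: images are resized to 512 x 512


def keep_smiles(smiles):
    """Return True if the SMILES string passes the length filter."""
    return len(smiles) < MAX_SMILES_LEN


def resize_nearest(pixels, size=TARGET_SIZE):
    """Nearest-neighbour resize of a 2D pixel grid (list of rows) to size x size.

    Illustrative only: the interpolation method is an assumption.
    """
    src_h, src_w = len(pixels), len(pixels[0])
    return [
        [pixels[y * src_h // size][x * src_w // size] for x in range(size)]
        for y in range(size)
    ]


candidates = ["CCO", "c1ccccc1", "C" * 300]
kept = [s for s in candidates if keep_smiles(s)]  # drops the 300-character string

tiny = [[0, 255], [255, 0]]    # a 2 x 2 stand-in for a grayscale image
resized = resize_nearest(tiny)
print(kept, len(resized), len(resized[0]))
```

In practice an image library would do the resizing; the pure-Python version only makes the target geometry explicit.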

Algorithms

- Tokenization: SMILES split by heavy atoms, brackets, bond symbols, and special characters. Start `<start>` and end `<end>` tokens added; padded with `<pad>`.

- Optimization: Adam optimizer with a custom learning rate schedule (warmup + decay).

- Loss Function: Focal loss.

- Augmentations: RanDepict applied synthetic distortions to mimic handwriting (wobbly lines, variable thickness, etc.).
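
The tokenization and loss items above can be sketched as follows. The regular expression is an assumption made for illustration and is not DECIMER's actual tokenizer: it handles only `Cl` and `Br` as two-letter atoms. The focal loss follows the standard form $-(1 - p)^{\gamma} \log p$; the default `gamma=2.0` is likewise assumed, as the text gives no value.

```python
import math
import re

# Assumed token pattern (not taken from the paper): two-letter halogens first,
# then single letters, brackets, bond/special symbols, and ring-closure digits.
# Simplification: other two-letter elements such as "Si" split into two tokens.
TOKEN_PATTERN = re.compile(r"Cl|Br|[A-Za-z]|[\[\]()]|[=#\-+\\/:.@%]|\d")


def tokenize(smiles):
    """Split a SMILES string and wrap it in <start>/<end> markers."""
    tokens = TOKEN_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return ["<start>"] + tokens + ["<end>"]


def pad(tokens, length):
    """Right-pad with <pad> up to `length`; longer sequences are returned unchanged."""
    return tokens + ["<pad>"] * (length - len(tokens))


def focal_loss(p_true, gamma=2.0):
    """Focal loss for one token: -(1 - p)^gamma * log(p).

    p_true is the predicted probability of the correct token. gamma=2.0 is an
    assumed default; the value used for DECIMER is not given in the text.
    """
    return -((1.0 - p_true) ** gamma) * math.log(p_true)


tokens = pad(tokenize("CC(=O)Cl"), 12)
print(tokens)
print(focal_loss(0.9), focal_loss(0.5))
```

Compared with plain cross-entropy (the `gamma=0` case), the $(1 - p)^{\gamma}$ factor shrinks the loss on tokens the model already predicts confidently, so training concentrates on the harder ones.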

Models

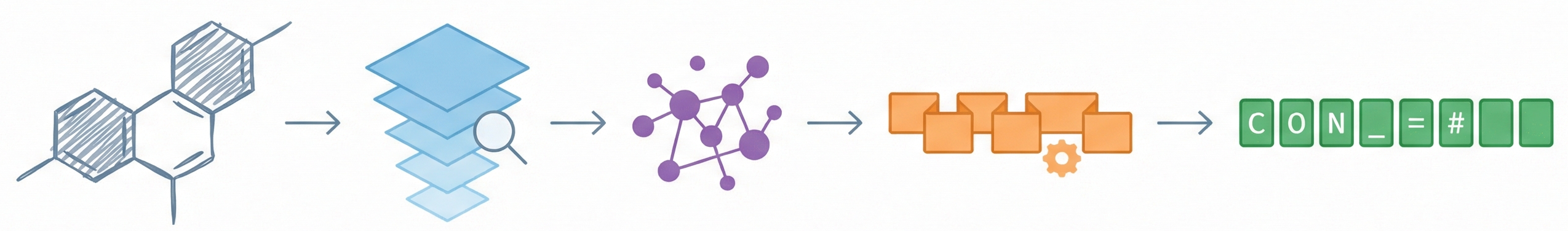

The final architecture (Model 3) pairs a CNN image encoder with a Transformer decoder (there is no Transformer encoder stack):

- Encoder: EfficientNetV2-M (pretrained backbone not specified, likely ImageNet).

  - Input: $512 \times 512 \times 3$ image.

  - Output Features: $16 \times 16 \times 512$ (reshaped to sequence length 256, dimension 512).

  - Note: The final fully connected layer of the CNN is removed.

- Decoder: Transformer (Decoder-only).

  - Layers: 6

  - Attention Heads: 8

  - Embedding Dimension: 512

  - Output: Predicted SMILES string token by token.
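
The shape bookkeeping above can be checked with a few lines of Python. This is only a sketch using a dummy feature map: row-major flattening and an even split of the embedding across attention heads are assumptions, and all names are hypothetical.

```python
IMAGE_SIZE = 512   # input height and width, from the text
GRID_SIZE = 16     # encoder output height and width, from the text
CHANNELS = 512     # encoder output channels and decoder embedding dimension
NUM_HEADS = 8      # attention heads, from the text


def flatten_feature_map(fmap):
    """Flatten an H x W x C nested list into an (H*W) x C sequence (row-major, assumed)."""
    return [cell for row in fmap for cell in row]


# Dummy feature map filled with zeros, standing in for the encoder output.
fmap = [[[0.0] * CHANNELS for _ in range(GRID_SIZE)] for _ in range(GRID_SIZE)]
sequence = flatten_feature_map(fmap)

downsampling = IMAGE_SIZE // GRID_SIZE   # spatial reduction implied by 512 -> 16
head_dim = CHANNELS // NUM_HEADS         # per-head width, assuming an even split

print(len(sequence), len(sequence[0]), downsampling, head_dim)  # 256 512 32 64
```

Each of the 256 sequence positions corresponds to one cell of the 16 x 16 grid, so the decoder attends over image regions rather than over individual pixels.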

Evaluation

Metrics used for evaluation:

- Valid Predictions (%): Percentage of outputs that are syntactically valid SMILES.

- Exact Match Accuracy (%): Percentage of predictions whose canonical SMILES string is identical to that of the ground truth.

- Tanimoto Similarity: Fingerprint similarity (PubChem fingerprints) between ground truth and prediction.
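
The three metrics can be sketched as below, under simplifying assumptions: SMILES strings are taken to be canonicalized already (a cheminformatics toolkit would do this in practice), an invalid prediction is represented as `None`, and fingerprints are given as collections of "on" bit indices. The function names are hypothetical.

```python
def valid_percentage(predicted):
    """Share of predictions that parsed as valid SMILES (None marks invalid)."""
    return 100.0 * sum(p is not None for p in predicted) / len(predicted)


def exact_match_accuracy(predicted, reference):
    """Share of predictions whose canonical SMILES equals the reference string."""
    matches = sum(p == r for p, r in zip(predicted, reference))
    return 100.0 * matches / len(reference)


def tanimoto(bits_a, bits_b):
    """Tanimoto similarity |A & B| / |A | B| over sets of 'on' fingerprint bits."""
    a, b = set(bits_a), set(bits_b)
    union = a | b
    if not union:
        return 0.0  # convention chosen here for two empty fingerprints
    return len(a & b) / len(union)


# Toy data, not results from the paper.
predicted = ["CCO", None, "c1ccccc1", "CCN"]
reference = ["CCO", "CCC", "c1ccccc1", "CCO"]

print(valid_percentage(predicted))                # 75.0
print(exact_match_accuracy(predicted, reference)) # 50.0
print(tanimoto({1, 2, 3}, {2, 3, 4}))             # 0.5
```

Exact match gives no credit for a near miss, whereas Tanimoto similarity gives partial credit for a structurally close prediction, which is why the two are reported together.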

Key Results (Hand-Drawn Dataset):

| Metric | DECIMER (Ours) | MolScribe | Img2Mol | OSRA (Rule-based) |
|---|---|---|---|---|
| Valid Predictions | 99.72% | 95.66% | 98.96% | 54.66% |
| Exact Accuracy | 73.25% | 7.65% | 5.25% | 0.57% |
| Tanimoto | 0.94 | 0.59 | 0.52 | 0.17 |

Hardware

- Compute: Google Cloud TPU v4-128 pod slice.

- Training Time:

  - EfficientNetV2-M model trained ~2x faster than EfficientNetV1-B7.

  - Average training time per epoch: 34 minutes (for Model 3 on 1M dataset subset).

- Epochs: Models trained for 25 epochs.

Citation

@article{rajanAdvancementsHanddrawnChemical2024,
  title = {Advancements in Hand-Drawn Chemical Structure Recognition through an Enhanced {{DECIMER}} Architecture},
  author = {Rajan, Kohulan and Brinkhaus, Henning Otto and Zielesny, Achim and Steinbeck, Christoph},
  year = 2024,
  month = jul,
  journal = {Journal of Cheminformatics},
  volume = {16},
  number = {1},
  pages = {78},
  issn = {1758-2946},
  doi = {10.1186/s13321-024-00872-7}
}