Paper Information

Citation: Weir, H., Thompson, K., Woodward, A., Choi, B., Braun, A., & Martínez, T. J. (2021). ChemPix: Automated Recognition of Hand-Drawn Hydrocarbon Structures Using Deep Learning. Chemical Science, 12(31), 10622-10633. https://doi.org/10.1039/D1SC02957F

Publication: Chemical Science 2021

Additional Resources:

What kind of paper is this?

This is primarily a Method paper, with a secondary contribution as a Resource paper.

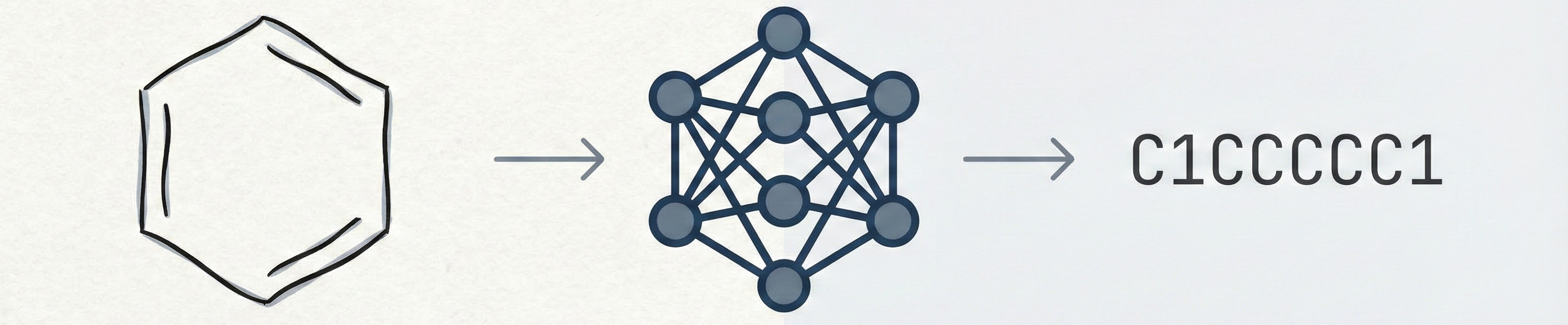

The paper’s core contribution is the ChemPix architecture and training strategy using neural image captioning (CNN-LSTM) to convert hand-drawn chemical structures to SMILES. The extensive ablation studies on synthetic data generation (augmentation, degradation, backgrounds) and ensemble learning strategies confirm the methodological focus. The secondary resource contribution includes releasing a curated dataset of hand-drawn hydrocarbons and code for generating synthetic training data.

What is the motivation?

Inputting molecular structures into computational chemistry software for quantum calculations is often a bottleneck, requiring domain expertise and cumbersome manual entry in drawing software. While optical chemical structure recognition (OCSR) tools exist, they typically struggle with the noise and variability of hand-drawn sketches. There is a practical need for a tool that allows chemists to simply photograph a hand-drawn sketch and immediately convert it into a machine-readable format (SMILES), making computational workflows more accessible.

What is the novelty here?

Image Captioning Paradigm: The authors treat the problem as neural image captioning, using an encoder-decoder (CNN-LSTM) framework to “translate” an image directly to a SMILES string. This avoids the complexity of explicit atom/bond detection and graph assembly.

Synthetic Data Engineering: The paper introduces a rigorous synthetic data generation pipeline that transforms clean RDKit-generated images into “pseudo-hand-drawn” images via randomized backgrounds, degradation, and heavy augmentation. This allows the model to achieve >50% accuracy on real hand-drawn data without ever seeing it during pre-training.

Ensemble Uncertainty Estimation: The method utilizes a “committee” (ensemble) of networks to improve accuracy and estimate confidence based on vote agreement, providing users with reliability indicators for predictions.

What experiments were performed?

Ablation Studies on Data Pipeline: The authors trained models on datasets generated at different stages of the pipeline (Clean RDKit → Augmented → Backgrounds → Degraded) to quantify the value of each transformation in bridging the synthetic-to-real domain gap.

Sample Size Scaling: They analyzed performance scaling by training on synthetic dataset sizes ranging from 50,000 to 500,000 images to understand data requirements.

Real-world Validation: The model was evaluated on a held-out test set of hand-drawn images collected via a custom web app, providing genuine out-of-distribution testing.

Fine-tuning Experiments: Comparisons of “zero-shot” performance (training only on synthetic data) versus fine-tuning with a small fraction of real hand-drawn data to assess the value of limited real-world supervision.

What were the outcomes and conclusions?

Pipeline Efficacy: Augmentation and image degradation were the most critical factors for generalization, improving accuracy from ~8% to nearly 50% on hand-drawn data. Adding backgrounds had a surprisingly negligible effect compared to degradation.

State-of-the-Art Performance: The final ensemble model achieved 76% accuracy (top-1) and 86% accuracy (top-3) on the hand-drawn test set, demonstrating practical viability for real-world use.

Synthetic Generalization: A model trained on 500,000 synthetic images achieved >50% accuracy on real hand-drawn data without any fine-tuning, validating the synthetic data generation strategy as a viable alternative to expensive manual labeling.

Ensemble Benefits: The voting committee approach improved accuracy and provided interpretable uncertainty estimates through vote distributions.

Reproducibility Details

Data

The study relies on two primary data sources: a massive synthetic dataset generated procedurally and a smaller collected dataset of real drawings.

| Purpose | Dataset | Size | Notes |

|---|---|---|---|

| Training | Synthetic (RDKit) | 500,000 images | Generated via RDKit with “heavy” augmentation: rotation ($0-360°$), blur, salt+pepper noise, and background texture addition. |

| Fine-tuning | Hand-Drawn (Real) | ~600 images | Crowdsourced via a web app; used for fine-tuning and validation. |

| Backgrounds | Texture Images | 1,052 images | A pool of unlabeled texture photos (paper, desks, shadows) used to generate synthetic backgrounds. |

Data Generation Parameters:

- Augmentations: Rotation, Resize ($200-300px$), Blur, Dilate, Erode, Aspect Ratio, Affine transform ($\pm 20px$), Contrast, Quantize, Sharpness

- Backgrounds: Randomly translated $\pm 100$ pixels and reflected

Algorithms

Ensemble Voting

A committee of networks casts votes for the predicted SMILES string. The final prediction is the one with the highest vote count. Validity of SMILES is checked using RDKit.

Beam Search

Used in the decoding layer with a beam width of $k=5$ to explore multiple potential SMILES strings.

Optimization:

- Optimizer: Adam

- Learning Rate: $1 \x10^{-4}$

- Batch Size: 20

- Loss Function: Cross-entropy loss (calculated as perplexity for validation)

Models

The architecture is a standard image captioning model (Show, Attend and Tell style) adapted for chemical structures.

Encoder (CNN):

- Input: 256x256 images (implicit from scaling operations)

- Structure: 4 blocks of Conv2D + MaxPool

- Block 1: 64 filters, (3,3) kernel

- Block 2: 128 filters, (3,3) kernel

- Block 3: 256 filters, (3,3) kernel

- Block 4: 512 filters, (3,3) kernel

- Activation: ReLU throughout

Decoder (LSTM):

- Hidden Units: 512

- Embedding Dimension: 80

- Attention: Mechanism with intermediary vector dimension of 512

Evaluation

- Primary Metric: Exact SMILES match accuracy (character-by-character identity between predicted and ground truth SMILES)

- Perplexity: Used for saving model checkpoints (minimizing uncertainty)

- Top-k Accuracy: Reported for $k=1$ (76%) and $k=3$ (86%)

Citation

@article{weir2021chempix,

title={ChemPix: Automated Recognition of Hand-Drawn Hydrocarbon Structures Using Deep Learning},

author={Weir, Hayley and Thompson, Keiran and Woodward, Amelia and Choi, Benjamin and Braun, Augustin and Mart{\'i}nez, Todd J.},

journal={Chemical Science},

volume={12},

number={31},

pages={10622--10633},

year={2021},

publisher={Royal Society of Chemistry},

doi={10.1039/D1SC02957F}

}