Paper Information

Citation: Xiong, J., Liu, X., Li, Z., Xiao, H., Wang, G., Niu, Z., Fei, C., Zhong, F., Wang, G., Zhang, W., Fu, Z., Liu, Z., Chen, K., Jiang, H., & Zheng, M. (2024). αExtractor: A system for automatic extraction of chemical information from biomedical literature. Science China Life Sciences, 67(3), 618-621. https://doi.org/10.1007/s11427-023-2388-x

Publication: Science China Life Sciences (2024)


What kind of paper is this?

This is primarily a Method ($\Psi_{\text{Method}}$) paper with a significant secondary Resource ($\Psi_{\text{Resource}}$) contribution (see the AI and Physical Sciences paper taxonomy for more on these categories).

The dominant methodological contribution is the ResNet-Transformer recognition architecture that achieves state-of-the-art performance through robustness engineering - specifically, training on 20 million synthetic images with aggressive augmentation to handle degraded image conditions. The work answers the core methodological question “How well does this work?” through extensive benchmarking against existing OCSR tools and ablation studies validating architectural choices.

The secondary resource contribution comes from releasing αExtractor as a freely available web service and open-source tool, correcting labeling errors in standard benchmarks (CLEF, UOB, JPO), and providing an end-to-end document processing pipeline for biomedical literature mining.

What is the motivation?

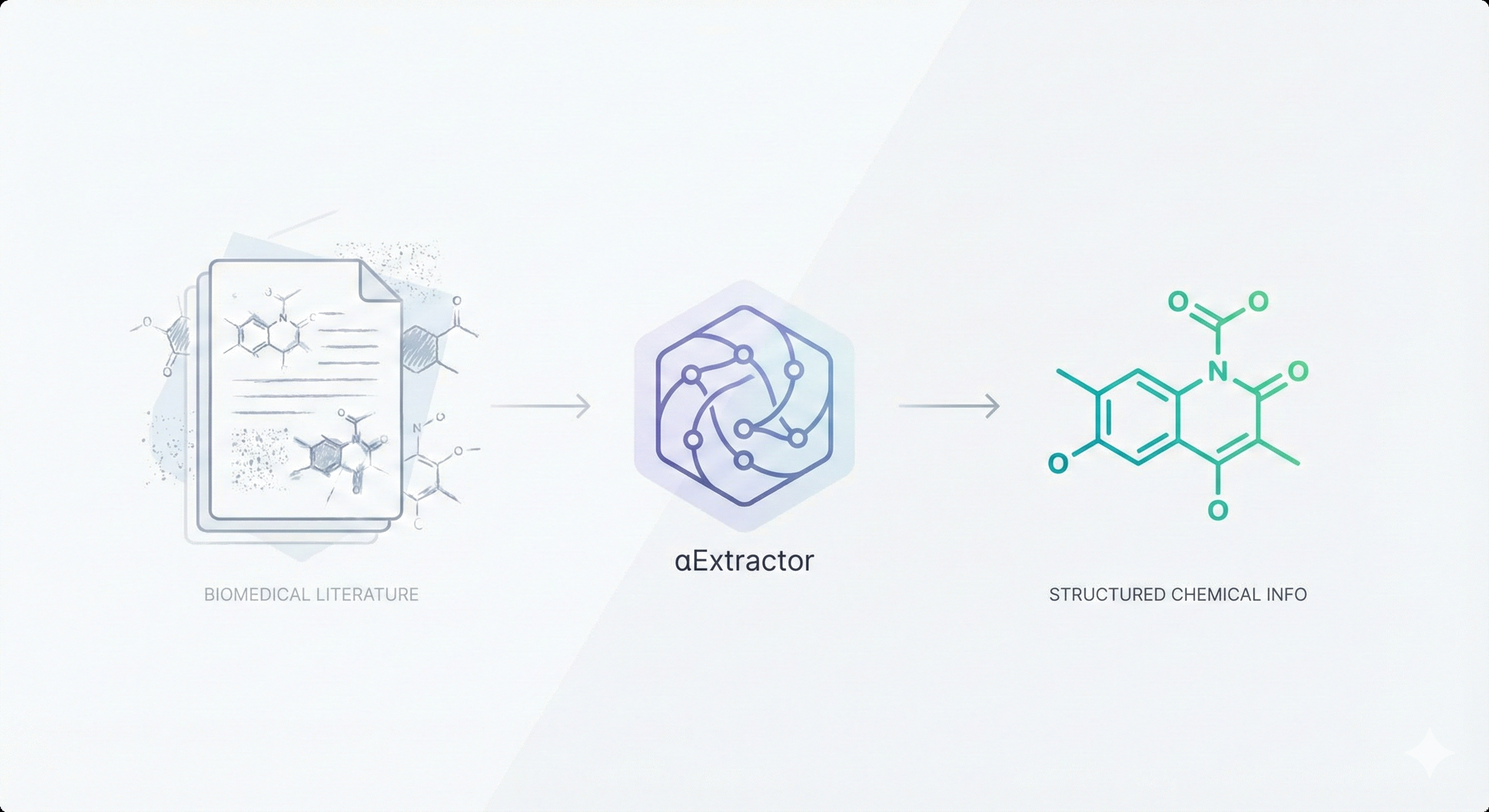

The motivation hits a familiar pain point in chemical informatics, but with a biomedical twist. Vast amounts of chemical knowledge in biomedical literature exist only as images - molecular structures embedded in figures, chemical synthesis schemes, and compound diagrams. This visual knowledge is effectively invisible to computational methods, creating a major bottleneck for drug discovery research, systematic reviews, and large-scale chemical database construction.

While OCSR tools exist, they face two critical problems when applied to biomedical literature:

Real-world image quality: Biomedical papers often contain low-resolution figures, images with complex backgrounds, noise from scanning/digitization, and inconsistent drawing styles across different journals and decades of publications.

End-to-end extraction: Most OCSR systems assume you already have clean, cropped molecular images. But in practice, you need to first find the molecular structures within multi-panel figures, reaction schemes, and dense document layouts before you can recognize them.

The authors argue that a practical literature mining system needs to solve both problems simultaneously - robust recognition under noisy conditions and automated detection of molecular images within complex documents.

What is the novelty here?

The novelty lies in combining a competition-winning recognition architecture with extensive robustness engineering and end-to-end document processing. The key contributions are:

ResNet-Transformer Recognition Model: The core recognition system uses a Residual Neural Network (ResNet) encoder paired with a Transformer decoder in an image-captioning framework. This architecture won first place in a Kaggle molecular translation competition, providing a strong foundation for the recognition task.

Enhanced Molecular Representation: The model produces an augmented representation that includes:

- Standard molecular connectivity information

- Bond type tokens (solid wedge bonds, dashed bonds, etc.) that preserve 3D stereochemical information

- Atom coordinate predictions that allow reconstruction of the exact molecular pose from the original image

This dual prediction of discrete structure and continuous coordinates makes the output more faithful to the source material and enables better quality assessment.

Massive Synthetic Training Dataset: The model was trained on approximately 20 million synthetic molecular images generated from PubChem SMILES with aggressive data augmentation. The augmentation strategy randomized visual styles, image quality, and rendering parameters to create maximum diversity - ensuring the network rarely saw the same molecular depiction twice. This forces the model to learn robust, style-invariant features.

End-to-End Document Processing Pipeline: αExtractor integrates object detection and structure recognition into a complete document mining system:

- An object detection model automatically locates molecular images within PDF documents

- The recognition model converts detected images to structured representations

- A web service interface makes the entire pipeline accessible to researchers without machine learning expertise
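The detect-then-recognize flow described above can be sketched as follows. This is a minimal illustration, not the αExtractor implementation: the `Extraction` record, the `crop` helper, and the `fake_detect` / `fake_recognize` stand-ins are hypothetical names, and images are modeled as plain nested lists so the example runs without any dependencies.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels
Image = List[List[int]]          # grayscale image as rows of pixel values


@dataclass
class Extraction:
    """One recognized structure and where it was found (hypothetical record)."""
    page: int
    box: Box
    smiles: str


def crop(image: Image, box: Box) -> Image:
    """Cut the region described by box out of a row-major image."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


def extract_document(
    pages: List[Image],
    detect: Callable[[Image], List[Box]],
    recognize: Callable[[Image], str],
) -> List[Extraction]:
    """Run detection on every page, then recognition on every detected crop."""
    results = []
    for page_number, page in enumerate(pages):
        for box in detect(page):
            smiles = recognize(crop(page, box))
            results.append(Extraction(page_number, box, smiles))
    return results


# Hypothetical stand-ins for the two models, used only to exercise the flow.
def fake_detect(page: Image) -> List[Box]:
    return [(0, 0, 2, 2)]


def fake_recognize(image: Image) -> str:
    return "CCO"


demo_pages = [[[0, 1, 2], [3, 4, 5], [6, 7, 8]]]
demo_results = extract_document(demo_pages, fake_detect, fake_recognize)
```

Passing the two models in as callables keeps the stages independent, which mirrors how detection and recognition are evaluated separately later in this summary.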

Robustness-First Design: The system was explicitly designed to handle degraded image conditions that break traditional OCSR tools - low resolution, background interference, color variations, and scanning artifacts commonly found in legacy biomedical literature.

What experiments were performed?

The evaluation focused on demonstrating robust performance across diverse image conditions, from pristine benchmarks to challenging real-world scenarios:

Benchmark Dataset Evaluation: αExtractor was tested on four standard OCSR benchmarks:

- CLEF: Chemical structure recognition challenge dataset

- UOB: University of Birmingham patent images

- JPO: Japan Patent Office molecular diagrams

- USPTO: US Patent and Trademark Office structures

Performance was measured using exact SMILES match accuracy.
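As a rough sketch of what exact-match scoring involves, the function below counts predictions whose string equals the reference after a normalization step. The summary does not say how SMILES strings were normalized before comparison, so the `canonicalize` parameter is an assumption: in practice a cheminformatics toolkit would supply it, and the default here only strips whitespace.

```python
from typing import Callable, Sequence


def exact_match_accuracy(
    predicted: Sequence[str],
    reference: Sequence[str],
    canonicalize: Callable[[str], str] = str.strip,
) -> float:
    """Fraction of predictions identical to their reference after normalization."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        return 0.0
    hits = sum(
        canonicalize(p) == canonicalize(r) for p, r in zip(predicted, reference)
    )
    return hits / len(reference)


# Without real canonicalization, two spellings of benzene count as a miss.
score = exact_match_accuracy(["CCO", "c1ccccc1"], ["CCO", "C1=CC=CC=C1"])
```

The benzene example shows why the choice of canonicalizer matters: the metric is all-or-nothing per molecule, so equivalent but differently written SMILES are scored as errors unless both sides are normalized the same way.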

Error Analysis and Dataset Correction: During evaluation, the researchers discovered numerous labeling errors in the original benchmark datasets. They systematically identified and corrected these errors, then re-evaluated all methods on the cleaned datasets to get more accurate performance measurements.

Robustness Stress Testing: The system was evaluated on two challenging datasets specifically designed to test robustness:

- Color background images (200 samples): Molecular structures on complex, colorful backgrounds that simulate real figure conditions

- Low-quality images (200 samples): Degraded images with noise, blur, and artifacts typical of scanned documents

These tests compared αExtractor against open-source alternatives under realistic degradation conditions.

Generalization Testing: In the most challenging experiment, αExtractor was tested on hand-drawn molecular structures - a completely different visual domain not represented in the training data. This tested whether the learned features could generalize beyond digital rendering styles to human-drawn chemistry.

End-to-End Document Extraction: The complete pipeline was evaluated on 50 PDF files containing 2,336 molecular images. This tested both the object detection component (finding molecules in complex documents) and the recognition component (converting them to SMILES) in a realistic literature mining scenario.

Speed Benchmarking: Inference time was measured to demonstrate the practical efficiency needed for large-scale document processing.

What were the outcomes and conclusions drawn?

Substantial Accuracy Gains: On the four benchmark datasets, αExtractor achieved accuracies of 91.83% (CLEF), 98.47% (UOB), 88.67% (JPO), and 93.64% (USPTO), significantly outperforming existing methods. After the dataset labeling errors were corrected, the measured accuracies rose to 95.77% on CLEF, 99.86% on UOB, and 92.44% on JPO.

Exceptional Robustness: While open-source competitors nearly failed on degraded images (achieving only 5.5% accuracy at best), αExtractor maintained over 90% accuracy on both color background and low-quality image datasets. This demonstrates the effectiveness of the massive synthetic training strategy.

Remarkable Generalization: On hand-drawn molecules - a domain completely absent from training data - αExtractor achieved 61.4% accuracy while other tools scored below 3%. This suggests the model learned features tied to chemical structure rather than to a particular rendering style.

Practical End-to-End Performance: In the complete document processing evaluation, αExtractor detected 95.1% of molecular images (2,221 out of 2,336) and correctly recognized 94.5% of detected structures (2,098 correct predictions). This demonstrates the system’s readiness for real-world literature mining applications.
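The two percentages follow directly from the reported counts, and multiplying them out gives the share of all molecular images that end up correctly recognized. The combined figure below is derived here from those counts and is not a number reported in the source.

```python
total_images = 2336  # molecular images across the 50 PDFs
detected = 2221      # images found by the detection model
correct = 2098       # detected images recognized correctly

detection_recall = detected / total_images     # about 95.1%
recognition_accuracy = correct / detected      # about 94.5%
end_to_end_yield = correct / total_images      # about 89.8% (derived, not reported)

print(f"detection recall:     {detection_recall:.1%}")
print(f"recognition accuracy: {recognition_accuracy:.1%}")
print(f"end-to-end yield:     {end_to_end_yield:.1%}")
```

Because recognition accuracy is computed over detected images only, the end-to-end yield is the product of the two stage-level rates, which is why it is lower than either one.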

Dataset Quality Issues: The systematic discovery of labeling errors in standard benchmarks highlights a broader problem in OCSR evaluation. The corrected datasets provide more reliable baselines for future method development.

Spatial Layout Limitation: The researchers note that while αExtractor correctly identifies molecular connectivity, the re-rendered structures may have different spatial layouts than the originals. This could complicate visual verification for complex molecules, though the chemical information remains accurate.

The work establishes αExtractor as a significant advance in practical OCSR for biomedical applications. The combination of robust recognition, end-to-end document processing, and exceptional generalization makes it suitable for large-scale literature mining tasks where previous tools would fail. The focus on real-world robustness over benchmark optimization represents a mature approach to deploying machine learning in scientific workflows.

Reproducibility Details

Models

Image Recognition Model:

- Backbone: ResNet50 producing output of shape $2048 \times 19 \times 19$, projected to 512 channels via a feed-forward layer

- Transformer Architecture: 3 encoder layers and 3 decoder layers with hidden dimension of 512

- Output Format: Generates SMILES tokens plus two auxiliary coordinate sequences (X-axis and Y-axis) that are length-aligned with the SMILES tokens via padding

Object Detection Model:

- Architecture: DETR (Detection Transformer) with ResNet101 backbone

- Transformer Architecture: 6 encoder layers and 6 decoder layers with hidden dimension of 256

- Purpose: Locates molecular images within PDF pages before recognition

Coordinate Prediction:

- Continuous X/Y coordinates are discretized into 200 discrete bins

- Padding tokens are added to the coordinate sequences so that they align one-to-one with the SMILES token sequence, enabling simultaneous structure and pose prediction
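A minimal sketch of the binning and alignment idea is shown below. Several details are assumptions made for illustration: that coordinates are normalized by image width and height, that only atom tokens carry a position, and the `PAD` symbol and function names, none of which are specified in the summary.

```python
from typing import List, Optional, Tuple, Union

NUM_BINS = 200
PAD = "<pad>"  # hypothetical padding symbol for tokens without a position

Position = Optional[Tuple[float, float]]
CoordToken = Union[int, str]


def to_bin(value: float, low: float, high: float, num_bins: int = NUM_BINS) -> int:
    """Map a continuous value in [low, high] to an integer bin index."""
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (value - low) / (high - low)
    return min(num_bins - 1, max(0, int(fraction * num_bins)))


def align_coordinates(
    tokens: List[Tuple[str, Position]], width: float, height: float
) -> Tuple[List[CoordToken], List[CoordToken]]:
    """Build X and Y sequences with exactly one entry per SMILES token."""
    x_seq: List[CoordToken] = []
    y_seq: List[CoordToken] = []
    for _, position in tokens:
        if position is None:
            # Non-atom tokens (bonds, ring closures, brackets) get padding.
            x_seq.append(PAD)
            y_seq.append(PAD)
        else:
            x, y = position
            x_seq.append(to_bin(x, 0.0, width))
            y_seq.append(to_bin(y, 0.0, height))
    return x_seq, y_seq


# Formaldehyde "C=O" drawn in a 100 x 100 pixel image.
example = [("C", (25.0, 50.0)), ("=", None), ("O", (75.0, 50.0))]
x_tokens, y_tokens = align_coordinates(example, width=100.0, height=100.0)
```

Turning coordinates into bin indices makes pose prediction a classification problem, which is consistent with the cross-entropy loss listed for coordinate prediction in the training details.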

Data

Training Data:

- Synthetic Generation: Python script rendering PubChem SMILES into 2D images

- Dataset Size: Approximately 20.3 million synthetic molecular images from PubChem

- Superatom Handling: 50% of molecules had functional groups replaced with superatoms (e.g., “COOH”) or generic labels (R1, X1) to match literature drawing conventions

- Rendering Augmentation: Randomized bond thickness, bond spacing, font size, font color, and padding size

Geometric Augmentation:

- Shear along x-axis: $\pm 15^\circ$

- Rotation: $\pm 15^\circ$

- Piecewise affine scaling

Noise Injection:

- Pepper noise: 0-2%

- Salt noise: 0-40%

- Gaussian noise: scale 0-0.16

Destructive Augmentation:

- JPEG compression: severity levels 2-5

- Random masking
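To make the ranges above concrete, here is a small dependency-free sketch that samples one augmentation setting per image and applies the three noise types to a grayscale image. It assumes uniform sampling within each range, treats the salt and pepper percentages as per-pixel probabilities, and reads the Gaussian scale as a standard deviation on a 0 to 1 intensity range; the summary does not confirm any of these, and the geometric and JPEG steps are only sampled, not applied.

```python
import random
from typing import Dict, List

Image = List[List[float]]  # grayscale, 0.0 = black, 1.0 = white


def sample_augmentation(rng: random.Random) -> Dict[str, float]:
    """Draw one random setting per image from the listed ranges."""
    return {
        "shear_deg": rng.uniform(-15.0, 15.0),
        "rotation_deg": rng.uniform(-15.0, 15.0),
        "pepper_fraction": rng.uniform(0.0, 0.02),
        "salt_fraction": rng.uniform(0.0, 0.40),
        "gaussian_scale": rng.uniform(0.0, 0.16),
        "jpeg_severity": rng.randint(2, 5),
    }


def add_noise(image: Image, params: Dict[str, float], rng: random.Random) -> Image:
    """Apply pepper, salt, or Gaussian noise to each pixel independently."""
    pepper = params["pepper_fraction"]
    salt = params["salt_fraction"]
    noisy = []
    for row in image:
        new_row = []
        for pixel in row:
            draw = rng.random()
            if draw < pepper:
                value = 0.0  # pepper: force pixel to black
            elif draw < pepper + salt:
                value = 1.0  # salt: force pixel to white
            else:
                value = pixel + rng.gauss(0.0, params["gaussian_scale"])
            new_row.append(min(1.0, max(0.0, value)))  # clamp to valid range
        noisy.append(new_row)
    return noisy


rng = random.Random(0)
settings = sample_augmentation(rng)
clean = [[1.0] * 8 for _ in range(8)]
augmented = add_noise(clean, settings, rng)
```

Sampling fresh parameters for every image is what keeps the network from seeing the same depiction twice, as described in the novelty section.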

Evaluation Datasets:

- CLEF: Chemical structure recognition challenge dataset

- UOB: University of Birmingham patent images

- JPO: Japan Patent Office molecular diagrams

- USPTO: US Patent and Trademark Office structures

- Color background images: 200 samples

- Low-quality images: 200 samples

- Hand-drawn structures: Test set for generalization

- End-to-end document extraction: 50 PDFs (567 pages, 2,336 molecular images)

Training

Image Recognition Model:

- Optimizer: Adam with learning rate of 1e-4

- Batch Size: 100

- Epochs: 5

- Loss Function: Cross-entropy loss for both SMILES prediction and coordinate prediction

Object Detection Model:

- Optimizer: Adam with learning rate of 1e-4

- Batch Size: 24

- Training Strategy: Pre-trained on synthetic “Lower Quality” data for 5 epochs, then fine-tuned on annotated real “High Quality” data for 30 epochs

Evaluation

Metrics:

- Recognition: SMILES accuracy (exact match)

- End-to-End Pipeline:

  - Recall: 95.1% for detection

  - Accuracy: 94.5% for recognition

Hardware

Inference Hardware:

- Cloud CPU server (8 CPUs, 64 GB RAM)

- Throughput: Processed 50 PDFs (567 pages) in 40 minutes
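The throughput figure can be converted into per-page and per-document rates with simple arithmetic. These derived values are computed here from the reported totals and are averages only; the source does not break the time down by stage.

```python
pdf_count = 50
page_count = 567
elapsed_seconds = 40 * 60  # 40 minutes on the 8-CPU server

seconds_per_page = elapsed_seconds / page_count        # about 4.23 s
seconds_per_pdf = elapsed_seconds / pdf_count          # 48 s
pages_per_hour = page_count * 3600 / elapsed_seconds   # 850.5 pages

print(f"{seconds_per_page:.2f} s/page, {seconds_per_pdf:.0f} s/PDF, "
      f"{pages_per_hour:.1f} pages/hour")
```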