Paper Information

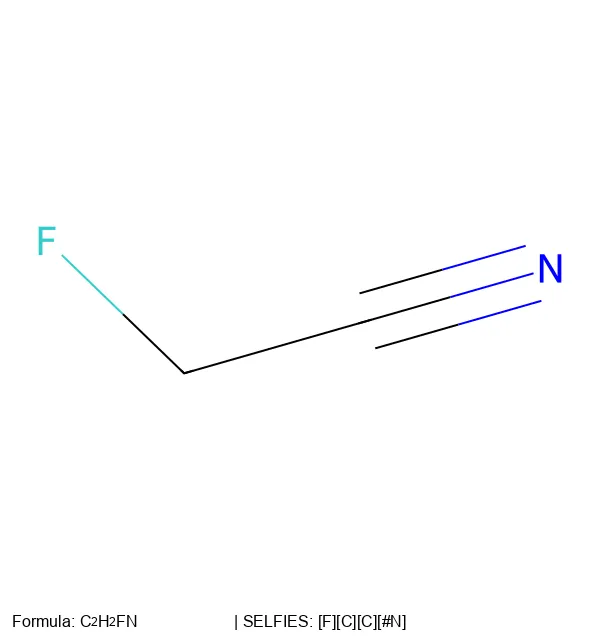

Citation: Krenn, M., Häse, F., Nigam, A., Friederich, P., & Aspuru-Guzik, A. (2020). Self-referencing embedded strings (SELFIES): A 100% robust molecular string representation. Machine Learning: Science and Technology, 1(4), 045024. https://doi.org/10.1088/2632-2153/aba947

Publication: Machine Learning: Science and Technology, 2020


What kind of paper is this?

This is a Method paper that introduces a new molecular string representation designed specifically for machine learning applications.

What is the motivation?

When neural networks generate molecules in SMILES notation, a large fraction of the output strings are invalid, either because of syntax errors or because they describe chemically impossible structures. This is a fundamental bottleneck, not just an inconvenience: a generative model that produces, say, 70% invalid molecules wastes computational effort and severely limits exploration of chemical space.

What is the novelty here?

The authors’ key insight is to use a formal grammar in which each symbol is interpreted according to its chemical context: the “state of the derivation” tracks how many valence bonds are still available. This rules out structures such as a carbon with five single bonds, so no string can decode to an invalid molecule.

This approach guarantees 100% validity: every SELFIES string corresponds to a valid molecule, and every valid molecule can be represented.
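To make the idea of a derivation state concrete, here is a minimal toy sketch. It is not the rule table from the paper: the valence table, the token format, and the function names `parse_token` and `derive` are all assumptions made for illustration, and it handles only linear chains with no branches or rings. The point it shows is that when each requested bond is clamped to the valence still available, every token sequence decodes to some valid chain.

```python
# Toy illustration only: NOT the actual SELFIES derivation rules.
MAX_BONDS = {"C": 4, "N": 3, "O": 2, "F": 1}  # assumed simple valences
BOND_ORDER = {"=": 2, "#": 3}
ORDER_SYMBOL = {1: "", 2: "=", 3: "#"}


def parse_token(token):
    """Split a token such as '[=C]' into (requested bond order, element)."""
    body = token.strip("[]")
    if body[0] in BOND_ORDER:
        return BOND_ORDER[body[0]], body[1:]
    return 1, body


def derive(tokens):
    """Build a linear chain, clamping each bond to the valence still free."""
    out = []
    free = None  # bonds the previous atom can still form; None before atom 1
    for token in tokens:
        order, element = parse_token(token)
        capacity = MAX_BONDS[element]
        if free is None:
            # First atom: there is nothing to bond to, so any prefix is ignored.
            out.append(element)
            free = capacity
            continue
        if free == 0:
            # Previous atom is saturated: stop instead of emitting an
            # impossible bond.
            break
        order = min(order, free, capacity)
        out.append(ORDER_SYMBOL[order] + element)
        free = capacity - order
    return "".join(out)


print(derive(["[C]", "[=O]", "[C]"]))   # the trailing [C] is dropped
print(derive(["[F]", "[#C]", "[#N]"]))  # the first triple bond is downgraded
```

In this sketch the state is a single integer; the grammar described in the paper also has to handle branches and rings, which this toy version leaves out.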

What experiments were performed?

The authors ran three sets of experiments to demonstrate SELFIES’ robustness:

Random Mutation Test

They took the SELFIES and SMILES representations of MDMA and introduced random changes:

- SMILES: After just one random mutation, only 26.6% of strings remained valid

- SELFIES: 100% of mutated strings still represented valid molecules (though different from the original)

This stark difference shows why SELFIES is particularly valuable for evolutionary algorithms and genetic programming approaches to molecular design.
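The shape of such a mutation test can be sketched in a few lines. The version below is a rough stand-in, not the authors' procedure: it mutates one character of a SMILES string and applies a purely syntactic check (balanced parentheses, paired ring-closure digits) instead of a real chemistry toolkit, so it will not reproduce the 26.6% figure. The mutation alphabet, the helper names, and the MDMA SMILES string written here are assumptions made for illustration.

```python
import random

# Assumed small mutation alphabet; the paper's alphabet may differ.
ALPHABET = list("CNOF=#()12")

# One common way to write MDMA as SMILES (supplied here, not from the source).
MDMA = "CNC(C)CC1=CC=C2C(=C1)OCO2"


def syntactically_plausible(smiles):
    """Rough check: balanced parentheses and paired ring-closure digits.

    This ignores valence and many other SMILES rules, so it overestimates
    true validity.
    """
    depth = 0
    open_rings = set()
    for ch in smiles:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
        elif ch.isdigit():
            open_rings ^= {ch}  # first occurrence opens a ring, second closes it
    return depth == 0 and not open_rings


def mutate(smiles, rng):
    """Replace one randomly chosen character with a random alphabet symbol."""
    i = rng.randrange(len(smiles))
    return smiles[:i] + rng.choice(ALPHABET) + smiles[i + 1:]


def surviving_fraction(smiles, trials=1000, seed=0):
    """Fraction of single-character mutants that pass the syntactic check."""
    rng = random.Random(seed)
    ok = sum(syntactically_plausible(mutate(smiles, rng)) for _ in range(trials))
    return ok / trials


print(surviving_fraction(MDMA))
```

Even this lenient check rejects many mutants, because a single changed parenthesis or ring digit leaves the string unbalanced. A representation whose decoder accepts every symbol sequence has no such failure mode.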

Generative Model Performance

The real test came with actual machine learning models. The authors trained Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) on both representations:

VAE Results:

- SMILES-based VAE: Large invalid regions scattered throughout the latent space

- SELFIES-based VAE: Every point in the continuous latent space mapped to a valid molecule

- The SELFIES model encoded over 100 times more diverse molecules

GAN Results:

- Best SMILES GAN: 18.5% diverse, valid molecules

- Best SELFIES GAN: 78.9% diverse, valid molecules

Evaluation Metrics:

- Validity: Percentage of generated strings representing valid molecular structures

- Diversity: Number of unique valid molecules produced

- Reconstruction Accuracy: How well the autoencoder reproduced input molecules (detailed in Table 1)
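As a rough sketch of how the first two metrics can be computed from a batch of generated strings, the helper below takes a validity predicate and an optional canonicalisation function. Both are placeholders you would supply yourself, for example from a cheminformatics toolkit. The function name, the returned keys, and the choice to report the diverse-valid share as a fraction of all samples are assumptions, not the paper's evaluation code.

```python
def validity_and_diversity(samples, is_valid, canonical=lambda s: s):
    """Summarise a batch of generated strings.

    samples   : list of generated strings
    is_valid  : callable returning True for strings that decode to a molecule
    canonical : callable mapping a string to a canonical form, so that two
                spellings of the same molecule count once (identity by default)
    """
    valid = [s for s in samples if is_valid(s)]
    unique = {canonical(s) for s in valid}
    n = len(samples)
    return {
        "validity": len(valid) / n if n else 0.0,
        "unique_valid": len(unique),
        "diverse_valid_fraction": len(unique) / n if n else 0.0,
    }


# Toy usage with a stand-in validity check (unbalanced "(" counts as invalid).
batch = ["CCO", "CCO", "C(", "CN"]
print(validity_and_diversity(batch, is_valid=lambda s: "(" not in s))
```

Without canonicalisation, two different strings for the same molecule are counted as distinct, which inflates the diversity figure.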

Scalability Test

The authors showed that SELFIES works beyond toy molecules by encoding and decoding all 72 million molecules from the PubChem database, demonstrating practical applicability to real chemical databases.

What outcomes/conclusions?

Key Findings:

- SELFIES guarantees 100% validity: every string represents a valid molecule

- SELFIES-based VAEs encode over 100x more diverse molecules than SMILES-based models

- SELFIES-based GANs produce 78.9% diverse valid molecules vs. 18.5% for SMILES GANs

- Successfully validated on all 72 million PubChem molecules

Limitations Acknowledged:

- No direct canonicalization method (initially required SMILES conversion)

- Missing features: aromaticity, isotopes, complex stereochemistry

- Requires community testing and adoption

Impact:

This work demonstrated that designing ML-native molecular representations could unlock new capabilities in drug discovery and materials science. The paper sparked a broader conversation about representation design and showed that fixing the limitations of the format itself was more effective than forcing models to work around them.

Reproducibility Details

Data

The experiments used two distinct datasets:

- QM9 (134k molecules): Primary training dataset for VAE and GAN models

- PubChem (72M molecules): Used only to test representation coverage and scalability; not used for model training

Models

The VAE implementation included:

- Latent space: 241-dimensional with Gaussian distributions

- Input encoding: One-hot encoding of SELFIES/SMILES strings

- Full architectural details (encoder/decoder structures, layer types) provided in Supplementary Information
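The one-hot input encoding can be sketched as follows for SELFIES strings, whose symbols are delimited by square brackets. This is an illustrative sketch, not the authors' preprocessing code: the padding symbol, the vocabulary ordering, and the function names are assumptions, and plain Python lists stand in for the tensors a real model would use.

```python
import re

PAD = "[nop]"  # padding symbol assumed for this sketch


def tokenize(selfies):
    """Split a SELFIES string into its bracketed symbols."""
    return re.findall(r"\[[^\]]*\]", selfies)


def build_vocab(strings):
    """Map each symbol to an index, reserving index 0 for padding."""
    symbols = sorted({tok for s in strings for tok in tokenize(s)} - {PAD})
    return {sym: i for i, sym in enumerate([PAD] + symbols)}


def one_hot(selfies, vocab, max_len):
    """Return a max_len x len(vocab) matrix with one 1 per row."""
    tokens = tokenize(selfies)
    if len(tokens) > max_len:
        raise ValueError("string is longer than max_len symbols")
    tokens = tokens + [PAD] * (max_len - len(tokens))
    width = len(vocab)
    return [[1 if vocab[tok] == j else 0 for j in range(width)] for tok in tokens]


corpus = ["[C][O]", "[C][=O]"]
vocab = build_vocab(corpus)
for row in one_hot("[C][O]", vocab, max_len=3):
    print(row)
```

Padding every string to a common length gives the encoder fixed-size inputs; SMILES strings would need a character-level or regex tokenizer in place of the bracket split used here.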

Algorithms

The authors found GAN performance was highly sensitive to hyperparameter selection:

- Searched 200 different hyperparameter configurations to achieve the reported 78.9% diversity

- Specific optimizers, learning rates, and training duration detailed in Supplementary Information

- Full rule generation algorithm provided in Table 2

Evaluation

All models were evaluated on:

- Validity rate: Percentage of syntactically and chemically valid outputs

- Diversity: Count of unique valid molecules generated

- Reconstruction accuracy: Fidelity of autoencoder reconstruction (VAEs only)

Hardware

- Training performed on SciNet supercomputing infrastructure

- Specific GPU/compute details in Supplementary Information

Replication Resources

Complete technical replication requires:

- The full rule generation algorithm (Table 2 in paper)

- Code and trained models: https://github.com/aspuru-guzik-group/selfies

- Supplementary Information for complete architectural and hyperparameter specifications

Note: The modern SELFIES library has evolved significantly since this foundational paper, addressing many of the implementation challenges identified by the authors.