Paper Information

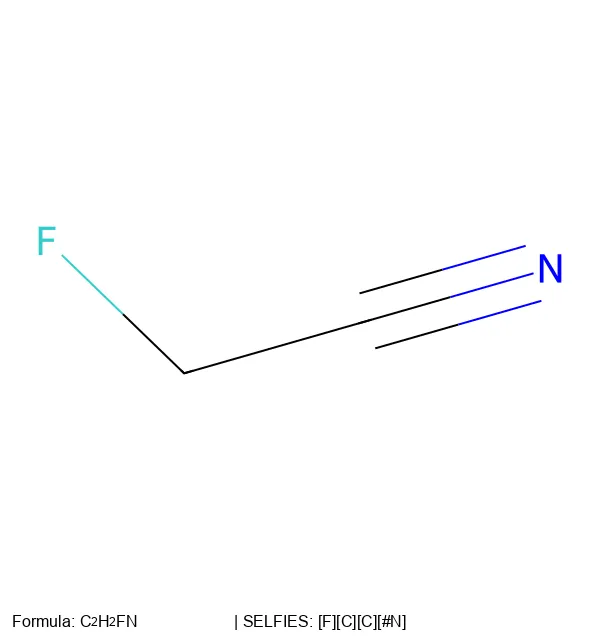

Citation: Krenn, M., Ai, Q., Barthel, S., Carson, N., Frei, A., Frey, N. C., Friederich, P., Gaudin, T., Gayle, A. A., Jablonka, K. M., Lameiro, R. F., Lemm, D., Lo, A., Moosavi, S. M., Nápoles-Duarte, J. M., Nigam, A., Pollice, R., Rajan, K., Schatzschneider, U., … Aspuru-Guzik, A. (2022). SELFIES and the future of molecular string representations. Patterns, 3(10). https://doi.org/10.1016/j.patter.2022.100588

Publication: Patterns 2022

What kind of paper is this?

This is a position paper (perspective) that proposes a research agenda for molecular representations in AI. It reviews the evolution of chemical notation over 250 years and argues for extending SELFIES-style robust representations beyond traditional organic chemistry to polymers, crystals, reactions, and other complex chemical systems.

What is the motivation?

While SMILES has been the standard molecular representation since 1988, its fundamental weakness for machine learning is well-established: randomly generated SMILES strings are often invalid. The motivation is twofold:

- Current problem: Traditional representations (SMILES, InChI, DeepSMILES) are not 100% robust: random mutation or random generation can produce invalid strings, which limits their use in generative AI models.

- Future opportunity: SELFIES solved this for small organic molecules, but many important chemical domains (polymers, crystals, reactions) still lack robust representations, creating a bottleneck for AI-driven discovery in these areas.

What is the novelty here?

The novelty is in the comprehensive research roadmap. The authors propose 16 concrete research projects organized around key themes:

- Domain extension: metaSELFIES (learning graph rules from data), BigSELFIES (polymers/biomolecules), crystal structures via labeled quotient graphs

- Chemical reactions: Robust reaction representations that enforce conservation laws

- Programming perspective: Treating molecular representations as programming languages, potentially achieving Turing-completeness

- Benchmarking: Systematic comparisons across representation formats

- Interpretability: Understanding how humans and machines actually learn from different representations

What experiments were performed?

This perspective paper includes case studies:

Pasithea (Deep Molecular Dreaming): A generative model that uses gradient-based optimization to steer molecules toward a target property (logP). With one-hot encoded SELFIES, the model optimizes logP monotonically, because every intermediate string decodes to a valid molecule. This smooth traversal of chemical space is not available with SMILES, where intermediate strings are often invalid.
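The dreaming idea can be sketched in a few lines. This is a toy illustration, not Pasithea itself: it assumes the optimization acts on a relaxed (softmax) one-hot input while the predictor stays frozen, and the three-token alphabet, the weight table `W`, and the function names are all hypothetical.

```python
import math

TOKENS = ["[C]", "[O]", "[F]"]
# Hypothetical frozen predictor: property = sum over positions of W[position][token].
W = [[0.2, 1.0, -0.5],
     [0.9, 0.1, 0.3],
     [-0.4, 0.0, 0.8]]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Predicted property of the relaxed (softmax) one-hot input."""
    return sum(x * w
               for lrow, wrow in zip(logits, W)
               for x, w in zip(softmax(lrow), wrow))


def dream(steps=200, lr=0.5):
    """Gradient ascent on the input logits; the weights W are never updated."""
    logits = [[0.0] * len(TOKENS) for _ in W]  # start from a uniform input
    history = [predict(logits)]
    for _ in range(steps):
        for lrow, wrow in zip(logits, W):
            x = softmax(lrow)
            mean = sum(xi * wi for xi, wi in zip(x, wrow))
            # d(prediction)/d(logit_k) for a softmax followed by a linear map.
            for k in range(len(lrow)):
                lrow[k] += lr * x[k] * (wrow[k] - mean)
        history.append(predict(logits))
    # Discretize: pick the most probable token at each position.
    tokens = [TOKENS[row.index(max(row))] for row in logits]
    return tokens, history


tokens, history = dream()
print(tokens, round(history[0], 3), round(history[-1], 3))
```

With a robust representation, the discretized token list at any step can be decoded into a molecule, so the optimization never has to discard an intermediate result as invalid.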

STOUT and DECIMER: Tools for IUPAC-name-to-structure and image-to-structure conversion. Both show improved accuracy when using SELFIES as an intermediate representation, even when the final output is SMILES, suggesting SELFIES provides a more learnable internal representation for sequence-to-sequence models.

What outcomes/conclusions?

The paper establishes robust representations as a fundamental bottleneck in computational chemistry and proposes a clear path forward:

Key outcomes:

- Identification of 16 concrete research projects spanning domain extension, benchmarking, and interpretability

- Evidence that SELFIES enables capabilities (like smooth property optimization) that traditional formats do not reliably support

- Framework for thinking about molecular representations as programming languages

Strategic impact: The proposed extensions could unlock new capabilities across drug discovery (efficient exploration beyond small molecules), materials design (systematic crystal structure discovery), synthesis planning (better reaction representations), and fundamental research (new ways to understand chemical behavior).

Future vision: The authors emphasize that robust representations could become a bridge for bidirectional learning between humans and machines, enabling humans to learn new chemical concepts from AI systems.

The Mechanism of Robustness

The key difference between SELFIES and other representations lies in how they handle syntax:

- SMILES/DeepSMILES: Rely on non-local markers (opening/closing parentheses or ring numbers) that must be balanced. A mutation or random generation can easily break this balance, producing invalid strings.

- SELFIES: Uses a formal grammar (automaton) where derivation rules are entirely local. The critical innovation is overloading: a state-modifying symbol like `[Branch1]` doesn’t just start a branch; it changes the interpretation of the next symbol to represent a numerical parameter (the branch length).

This overloading mechanism ensures that any arbitrary sequence of SELFIES tokens can be parsed into a valid molecular graph. The derivation can never fail because every symbol either adds an atom or modifies how subsequent symbols are interpreted.
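A toy decoder makes the local-derivation idea concrete. The sketch below is not the actual SELFIES grammar or the `selfies` library: the alphabet, the valence table, and the rule that turns a token into a branch length are simplifying assumptions made for illustration. It tracks the free valence of the current atom, caps each requested bond order at what is available, and reads the token after `[Branch1]` as a number.

```python
import random

# Toy alphabet: token -> (element, requested bond order to the previous atom).
ATOMS = {
    "[C]": ("C", 1), "[=C]": ("C", 2), "[#C]": ("C", 3),
    "[N]": ("N", 1), "[=N]": ("N", 2), "[#N]": ("N", 3),
    "[O]": ("O", 1), "[=O]": ("O", 2), "[F]": ("F", 1),
}
MAX_VALENCE = {"C": 4, "N": 3, "O": 2, "F": 1}
ALPHABET = list(ATOMS) + ["[Branch1]"]


def decode(tokens):
    """Derive (atoms, bonds) from any sequence of ALPHABET tokens."""
    atoms, bonds = [], []  # atoms: element symbols; bonds: (i, j, order)
    free = []              # free[i] = unused valence of atom i

    def derive(queue, prev):
        # `queue` is consumed left to right; `prev` is the atom we attach to.
        while queue:
            tok = queue.pop(0)
            if tok == "[Branch1]":
                if not queue:
                    break
                # Overloading: the next token is read as a number, not an atom.
                length = ALPHABET.index(queue.pop(0)) % 4 + 1
                branch, queue[:] = queue[:length], queue[length:]
                # Branch only if an atom exists and has more than one free valence.
                if prev is not None and free[prev] > 1:
                    derive(branch, prev)
                continue
            element, order = ATOMS[tok]
            if prev is None:
                order = 0  # the first atom has nothing to bond to
            else:
                order = min(order, free[prev], MAX_VALENCE[element])
                if order == 0:  # previous atom is saturated: end this chain
                    break
            atoms.append(element)
            free.append(MAX_VALENCE[element] - order)
            new = len(atoms) - 1
            if prev is not None:
                free[prev] -= order
                bonds.append((prev, new, order))
            prev = new

    derive(list(tokens), None)
    return atoms, bonds


def is_valid(atoms, bonds):
    """Check that no atom exceeds its maximum valence."""
    used = [0] * len(atoms)
    for i, j, order in bonds:
        used[i] += order
        used[j] += order
    return all(u <= MAX_VALENCE[a] for u, a in zip(used, atoms))


# Fuzz test: random token sequences always decode to a valid graph.
rng = random.Random(0)
for _ in range(1000):
    sample = [rng.choice(ALPHABET) for _ in range(rng.randint(0, 20))]
    assert is_valid(*decode(sample))
```

In this toy, excess tokens are truncated or requested bond orders are lowered instead of raising an error, which is the design choice that makes every string meaningful.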

The 16 Research Projects: Technical Details

This section provides technical details on the proposed research directions:

Extending to New Domains

metaSELFIES (Project 1): The authors propose learning graph construction rules automatically from data. This could enable robust representations for any graph-based system - from quantum optics to biological networks - without needing domain-specific expertise.

Token Optimization (Project 2): SELFIES uses “overloading” where a symbol’s meaning changes based on context. This project would investigate how this affects machine learning performance and whether the approach can be optimized.

Handling Complex Molecular Systems

BigSELFIES (Project 3): Current representations struggle with large, often random structures like polymers and biomolecules. BigSELFIES would combine hierarchical notation with stochastic building blocks to handle these complex systems where traditional small-molecule representations break down.

Crystal Structures (Projects 4-5): Crystals present unique challenges due to their infinite, periodic arrangements. An infinite net cannot be represented directly by a finite string. The proposed approach uses labeled quotient graphs (LQGs), which are finite graphs that uniquely determine a periodic net. However, current SELFIES cannot represent LQGs because it lacks symbols for edge directions and edge labels (vector shifts encoding periodicity). Extending SELFIES to handle these structures could enable AI-driven materials design without relying on predefined crystal structures, opening up systematic exploration of theoretical materials space.
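To make the LQG idea concrete, here is a minimal sketch of a labeled quotient graph for the primitive cubic net. The dictionary layout and the helper names `neighbors` and `unfold` are assumptions chosen for illustration, not a format proposed in the paper. Each directed edge carries an integer shift vector that says which neighboring unit cell the target vertex lives in.

```python
from itertools import product

# LQG of the primitive cubic net: one vertex per unit cell and three
# self-loops. An edge (u, v, shift) means: u in cell c bonds to v in cell c + shift.
PCU = {
    "vertices": ["A"],
    "edges": [
        ("A", "A", (1, 0, 0)),
        ("A", "A", (0, 1, 0)),
        ("A", "A", (0, 0, 1)),
    ],
}


def neighbors(lqg, vertex, cell):
    """Neighbors of `vertex` in unit cell `cell` of the infinite periodic net."""
    result = []
    for u, v, shift in lqg["edges"]:
        if u == vertex:  # follow the edge forward
            result.append((v, tuple(c + s for c, s in zip(cell, shift))))
        if v == vertex:  # follow the edge backward
            result.append((u, tuple(c - s for c, s in zip(cell, shift))))
    return result


def unfold(lqg, size):
    """Edges of the finite patch whose cell indices lie in range(size)^3."""
    cells = set(product(range(size), repeat=3))
    edges = set()
    for cell in cells:
        for u, v, shift in lqg["edges"]:
            target = tuple(c + s for c, s in zip(cell, shift))
            if target in cells:
                edges.add(((u, cell), (v, target)))
    return edges


print(len(neighbors(PCU, "A", (0, 0, 0))))  # coordination number of the net
print(len(unfold(PCU, 2)))                  # edges in a 2 x 2 x 2 patch
```

The finite graph has one vertex and three edges, yet it determines the whole infinite net; the direction and shift label on each edge are exactly the information that current SELFIES has no symbols for.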

Beyond Organic Chemistry (Project 6): Transition metals and main-group compounds feature complex bonding that breaks the simple two-center, two-electron model. The proposed solution is to apply machine learning to large structural databases so that these complex bonding rules are learned automatically.

Chemical Reactions and Programming Concepts

Reaction Representations (Project 7): Moving beyond static molecules to represent chemical transformations. A robust reaction format would enforce conservation laws and could learn reactivity patterns from large reaction datasets, potentially revolutionizing synthesis planning.
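As a point of reference, conservation of atoms can be checked after the fact with a few lines of code. The sketch below is an assumption-laden illustration: it handles only simple formulas without parentheses or charges, and the function names are hypothetical. A robust reaction representation, as proposed, would go further and make unbalanced reactions impossible to write in the first place.

```python
import re
from collections import Counter


def element_counts(formula):
    """Count atoms in a simple formula such as 'C2H6O' (no parentheses or charges)."""
    counts = Counter()
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(number) if number else 1
    return counts


def side_counts(side):
    """Sum atom counts over (coefficient, formula) pairs on one side of a reaction."""
    total = Counter()
    for coefficient, formula in side:
        for element, n in element_counts(formula).items():
            total[element] += coefficient * n
    return total


def is_balanced(reactants, products):
    """True if every element appears equally often on both sides."""
    return side_counts(reactants) == side_counts(products)


# 2 H2 + O2 -> 2 H2O conserves atoms; H2 + O2 -> H2O does not.
print(is_balanced([(2, "H2"), (1, "O2")], [(2, "H2O")]))
print(is_balanced([(1, "H2"), (1, "O2")], [(1, "H2O")]))
```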

Programming Language Perspective (Projects 8-9): An intriguing reframing views molecular representations as programming languages executed by chemical parsers. This opens possibilities for adding loops, logic, and other programming concepts to efficiently describe complex structures. The ambitious goal: a Turing-complete programming language that’s also 100% robust.
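A loop construct is the simplest example of such a programming concept. The sketch below uses a hypothetical `("repeat", n, body)` instruction, which is not part of SELFIES or any existing format, and expands it into a flat token list that an ordinary decoder could then consume.

```python
def expand(program):
    """Expand a nested program into a flat token list.

    A program is a list whose items are either token strings or
    ("repeat", count, subprogram) tuples. The syntax is hypothetical.
    """
    tokens = []
    for item in program:
        if isinstance(item, str):
            tokens.append(item)
        else:
            _, count, body = item
            tokens.extend(expand(body) * count)
    return tokens


# A chain with a repeated two-token unit, written once instead of three times.
program = ["[C]", ("repeat", 3, ["[C]", "[O]"]), "[F]"]
print(expand(program))
```

The compact program grows with the number of distinct building blocks, not with the size of the structure, which is the efficiency argument behind the programming-language view.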

Empirical Comparisons (Projects 10-11): With multiple representation options (strings, matrices, images), we need systematic comparisons. The proposed benchmarks would go beyond simple validity metrics to focus on real-world design objectives in drug discovery, catalysis, and materials science.

Human Readability (Project 12): While SMILES is often called “human-readable,” this claim lacks scientific validation. The proposed study would test how well humans actually understand different molecular representations.

Machine Learning Perspectives (Projects 13-16): These projects explore how machines interpret molecular representations:

- Training networks to translate between formats to find universal representations

- Comparing learning efficiency across different formats

- Investigating latent space smoothness in generative models

- Visualizing what models actually learn about molecular structure