Paper Information

Citation: Kim, N., Kim, S., Kim, M., Park, J., & Ahn, S. (2025). MOFFlow: Flow Matching for Structure Prediction of Metal-Organic Frameworks. International Conference on Learning Representations (ICLR).

Publication: ICLR 2025


What kind of paper is this?

This is a Methodological Paper ($\Psi_{\text{Method}}$).

It introduces MOFFlow, a novel generative architecture and training framework designed specifically for the structure prediction of Metal-Organic Frameworks (MOFs). The paper focuses on the algorithmic innovation of decomposing the problem into rigid-body assembly on a Riemannian manifold, validates this through extensive comparison against SOTA baselines, and performs ablation studies to justify architectural choices. While it leverages the theory of flow matching, its primary contribution is the application-specific architecture and the handling of modular constraints.

What is the motivation?

The primary motivation is to overcome the scalability and accuracy limitations of existing methods for MOF structure prediction.

- Computational Cost of DFT: Conventional approaches rely on ab initio calculations (DFT) combined with random search, which are computationally prohibitive for large, complex systems like MOFs.

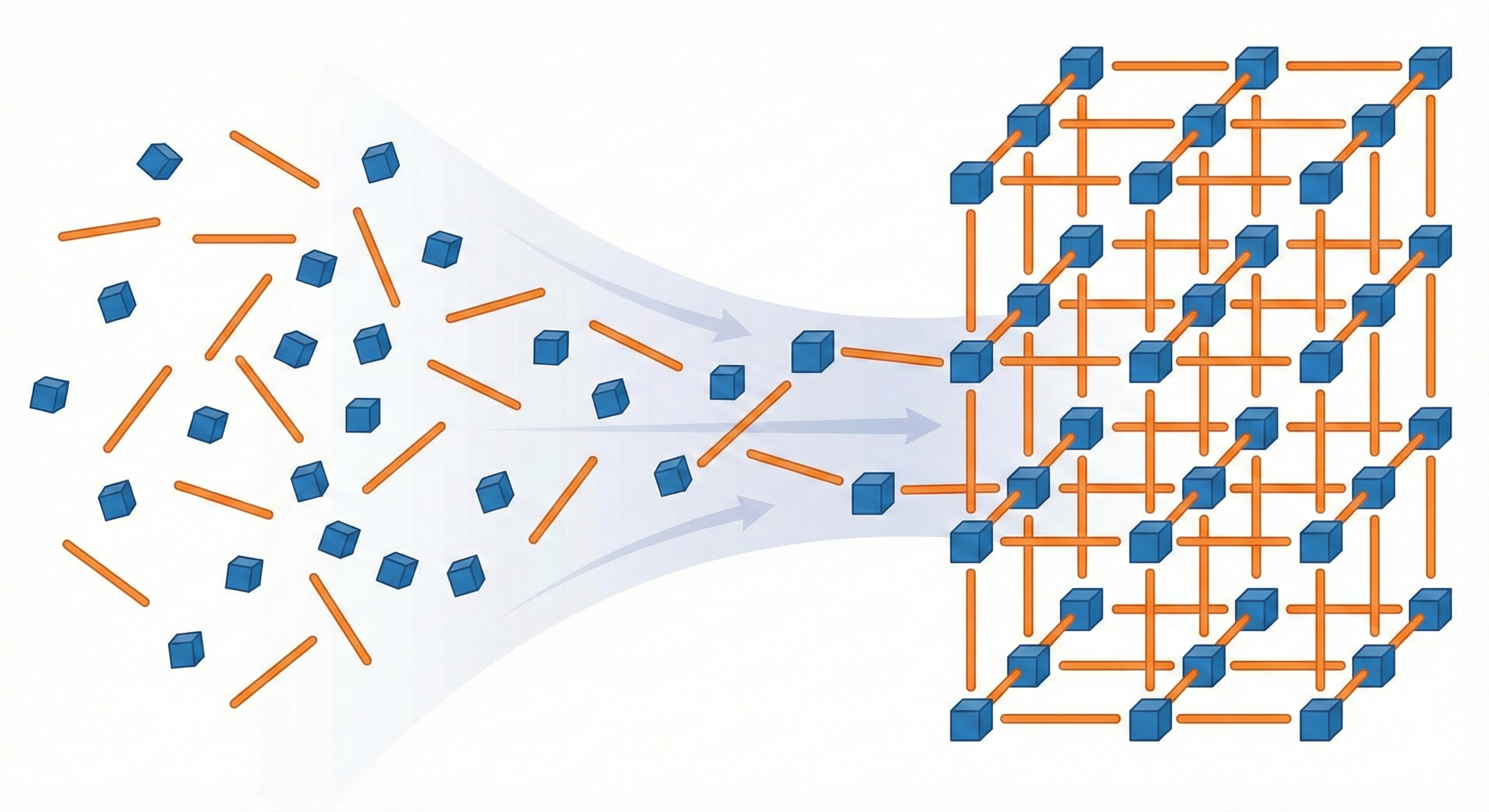

- Failure of General CSP: Existing deep generative models for general Crystal Structure Prediction (CSP) operate on an atom-by-atom basis. They fail to scale to MOFs, which often contain hundreds or thousands of atoms per unit cell, and do not exploit the inherent modular nature (building blocks) of MOFs.

- Tunability: MOFs are highly desirable for applications like carbon capture and drug delivery due to their tunable porosity, making automated design tools highly valuable.

What is the novelty here?

MOFFlow introduces a hierarchical, rigid-body flow matching framework tailored for MOFs.

- Rigid Body Decomposition: Unlike atom-based methods, MOFFlow treats metal nodes and organic linkers as rigid bodies, reducing the search space from $3N$ (atoms) to $6M$ (roto-translation of $M$ blocks).

- Riemannian Flow Matching on $SE(3)$: It is the first end-to-end model to jointly generate block-level rotations ($SO(3)$), translations ($\mathbb{R}^3$), and lattice parameters using Riemannian flow matching.

- MOFAttention: A novel attention module designed to encode the geometric relationships between building blocks, lattice parameters, and rotational constraints.

- Constraint Handling: It incorporates domain knowledge by operating on a mean-free system for translation invariance and using canonicalized coordinates for rotation invariance.
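The size reduction and the translation constraint above can be illustrated with a short sketch. The atom and block counts are hypothetical, the 6 lattice parameters (shared by both representations) are left out of the count, and centering is shown as a plain subtraction of the mean block translation, which is one straightforward way to obtain a mean-free system; MOFFlow's own implementation may differ.

```python
def degrees_of_freedom(num_atoms: int, num_blocks: int) -> tuple[int, int]:
    """Free coordinates for the atom-level vs. rigid-block representations.

    Atom-level: 3 Cartesian coordinates per atom.
    Block-level: 3 rotation + 3 translation parameters per rigid block.
    The 6 lattice parameters are common to both and omitted here.
    """
    return 3 * num_atoms, 6 * num_blocks


def center_translations(translations: list[list[float]]) -> list[list[float]]:
    """Subtract the mean block translation so the system is mean-free."""
    n = len(translations)
    mean = [sum(t[i] for t in translations) / n for i in range(3)]
    return [[t[i] - mean[i] for i in range(3)] for t in translations]


# Hypothetical MOF: 1000 atoms grouped into 50 rigid building blocks.
print(degrees_of_freedom(1000, 50))  # (3000, 300)

centered = center_translations([[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]])
print(centered)  # [[-1.0, 0.0, 1.0], [1.0, 0.0, -1.0]]
```

Because shifting every block by the same vector leaves the crystal unchanged, removing the mean translation takes that redundant direction out of what the model has to learn.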

What experiments were performed?

The authors evaluated MOFFlow on structure prediction accuracy, physical property preservation, and scalability.

- Dataset: The Boyd et al. (2019) dataset consisting of 324,426 hypothetical MOF structures, decomposed into building blocks using the MOFid algorithm. Filtered to structures with $<200$ blocks.

- Baselines:

- Optimization-based: Random Search (RS) and Evolutionary Algorithm (EA) using CrySPY and CHGNet.

- Deep Learning: DiffCSP (state-of-the-art for general crystals).

- Self-Assembly: A heuristic algorithm used in MOFDiff (adapted for comparison).

- Metrics:

- Match Rate (MR): Percentage of generated structures matching ground truth within tolerance.

- RMSE: Root mean square error of atomic positions.

- Structural Properties: Volumetric/Gravimetric Surface Area (VSA/GSA), Pore Limiting Diameter (PLD), Void Fraction, etc., calculated via Zeo++.

- Scalability: Performance vs. number of atoms (up to 1000+) and building blocks.

What were the outcomes and conclusions drawn?

MOFFlow significantly outperformed all baselines in accuracy and efficiency, particularly for large structures.

- Accuracy: MOFFlow achieved a 31.69% match rate (stol=0.5) on unseen test structures, whereas RS, EA, and DiffCSP achieved near 0%.

- Speed: Inference took 1.94 s per structure, compared with 5.37 s for DiffCSP and 1959 s for EA.

- Scalability: MOFFlow maintained high match rates even for structures with more than 1000 atoms, whereas DiffCSP failed completely on structures with more than 200 atoms.

- Property Preservation: The distributions of physical properties (e.g., surface area, void fraction) for MOFFlow-generated structures closely matched the ground truth, unlike DiffCSP which often collapsed to non-porous structures.

- Ablation: Learning the assembly (MOFFlow) outperformed heuristic self-assembly, confirming the value of the learned vector fields.

Reproducibility Details

Data

- Source: MOF dataset by Boyd et al. (2019).

- Preprocessing: Structures were decomposed using the metal-oxo decomposition algorithm from MOFid.

- Filtering: Structures with fewer than 200 building blocks were used.

- Splits: Train/Validation/Test ratio of 8:1:1.

- Representations:

- Atom-level: Tuple $(X, a, l)$ (coordinates, types, lattice).

- Block-level: Tuple $(\mathcal{B}, q, \tau, l)$ (blocks, rotations, translations, lattice).
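To make the relation between the two tuples concrete, here is a minimal sketch that assembles atom-level coordinates from block-level parameters. It assumes that each block stores its atoms in local (canonical) Cartesian coordinates, that rotations are unit quaternions $(w, x, y, z)$, and that each atom is placed at $R(q_m)\,x^{\text{local}} + \tau_m$. The `Block` class and the `assemble` function are hypothetical names, and the lattice $l$ is simply passed through.

```python
import math
from dataclasses import dataclass


@dataclass
class Block:
    """Hypothetical rigid building block: atom types plus local coordinates."""
    types: list[str]
    local_coords: list[tuple[float, float, float]]


def rotate(q, v):
    """Apply the rotation of unit quaternion q = (w, x, y, z) to vector v."""
    w, x, y, z = q
    vx, vy, vz = v
    return (
        (1 - 2 * (y * y + z * z)) * vx + 2 * (x * y - w * z) * vy + 2 * (x * z + w * y) * vz,
        2 * (x * y + w * z) * vx + (1 - 2 * (x * x + z * z)) * vy + 2 * (y * z - w * x) * vz,
        2 * (x * z - w * y) * vx + 2 * (y * z + w * x) * vy + (1 - 2 * (x * x + y * y)) * vz,
    )


def assemble(blocks, rotations, translations, lattice):
    """Map the block-level tuple (B, q, tau, l) to the atom-level tuple (X, a, l)."""
    coords, types = [], []
    for block, q, tau in zip(blocks, rotations, translations):
        for atom_type, x_local in zip(block.types, block.local_coords):
            r = rotate(q, x_local)
            coords.append(tuple(r[i] + tau[i] for i in range(3)))
            types.append(atom_type)
    return coords, types, lattice


# Example: a two-atom block rotated 90 degrees about z and shifted to (5, 5, 5).
c = math.sqrt(0.5)
linker = Block(types=["C", "C"], local_coords=[(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)])
X, a, lat = assemble([linker], [(c, 0.0, 0.0, c)], [(5.0, 5.0, 5.0)],
                     lattice=(10.0, 10.0, 10.0, 90.0, 90.0, 90.0))
# X is approximately [(5, 6, 5), (5, 4, 5)]; a == ["C", "C"]
```

Only the $6M$ block parameters (plus the lattice) change during generation; the per-block local coordinates are fixed inputs.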

Algorithms

- Framework: Riemannian Flow Matching.

- Objective: Conditional Flow Matching (CFM) loss regressing to clean data $q_1, \tau_1, l_1$.

- Equation: $\mathcal{L}(\theta) = \mathbb{E}_{t, \mathcal{S}^{(1)}} \left[\frac{1}{(1-t)^2} \left(\lambda_1 \left\|\log_{q_t}(\hat{q}_1) - \log_{q_t}(q_1)\right\|^2 + \dots\right)\right]$.

- Priors:

- Rotations ($q$): Uniform on $SO(3)$.

- Translations ($\tau$): Standard normal on $\mathbb{R}^3$.

- Lattice ($l$): Log-normal for lengths, Uniform(60°, 120°) for angles (Niggli reduced).

- Inference: ODE solver with 50 integration steps.

- Local Coordinates: Defined using PCA axes, corrected for symmetry to ensure consistency.
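The priors and the 50-step inference loop can be sketched as follows. This is a dependency-free illustration under several assumptions: rotations are unit quaternions; the path runs along the $SO(3)$ geodesic for rotations and a straight line for translations and lattice parameters; the network predicts clean data $\hat{q}_1, \hat{\tau}_1, \hat{l}_1$ and the velocity is formed as $\log_{q_t}(\hat{q}_1)/(1-t)$ (or $(\hat{x}_1 - x_t)/(1-t)$); and a forward Euler solver is used. The log-normal parameters `mu` and `sigma` are placeholders, and `oracle` is a hypothetical stand-in for the trained network that always returns fixed targets.

```python
import math
import random


def q_mul(a, b):
    """Hamilton product of quaternions (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_conj(q):
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_log(q):
    """Rotation vector (axis * angle) of a unit quaternion."""
    w, x, y, z = q
    if w < 0:  # q and -q encode the same rotation; take the shorter path
        w, x, y, z = -w, -x, -y, -z
    s = math.sqrt(x * x + y * y + z * z)
    if s < 1e-12:
        return (0.0, 0.0, 0.0)
    angle = 2.0 * math.atan2(s, w)
    return (x / s * angle, y / s * angle, z / s * angle)


def q_exp(v):
    """Unit quaternion for rotation vector v."""
    angle = math.sqrt(sum(c * c for c in v))
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    k = math.sin(angle / 2) / angle
    return (math.cos(angle / 2), v[0] * k, v[1] * k, v[2] * k)


def sample_prior(num_blocks, rng, mu=2.5, sigma=0.5):
    """Uniform SO(3), standard-normal translations, log-normal lengths, U(60, 120) angles."""
    rots = []
    for _ in range(num_blocks):
        g = [rng.gauss(0.0, 1.0) for _ in range(4)]  # normalized 4D Gaussian: uniform on SO(3)
        n = math.sqrt(sum(c * c for c in g))
        rots.append(tuple(c / n for c in g))
    trans = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(num_blocks)]
    lattice = ([math.exp(rng.gauss(mu, sigma)) for _ in range(3)]
               + [rng.uniform(60.0, 120.0) for _ in range(3)])
    return rots, trans, lattice


def euler_sample(state, predict_clean, num_steps=50):
    """Integrate t = 0 -> 1 with forward Euler, moving toward the predicted clean data."""
    rots, trans, lattice = state
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        rots1, trans1, lattice1 = predict_clean(rots, trans, lattice, t)
        step = dt / (1.0 - t)  # velocity = (x1_hat - x_t) / (1 - t)
        rots = [q_mul(q, q_exp(tuple(step * c for c in q_log(q_mul(q_conj(q), q1)))))
                for q, q1 in zip(rots, rots1)]
        trans = [[x + step * (x1 - x) for x, x1 in zip(p, p1)] for p, p1 in zip(trans, trans1)]
        lattice = [x + step * (x1 - x) for x, x1 in zip(lattice, lattice1)]
    return rots, trans, lattice


# Hypothetical oracle that always predicts the same clean structure.
target = ([q_exp((0.0, 0.0, math.pi / 2))], [[1.0, 2.0, 3.0]], [12.0, 12.0, 12.0, 90.0, 90.0, 90.0])


def oracle(rots, trans, lattice, t):
    return target


rots, trans, lattice = euler_sample(sample_prior(1, random.Random(0)), oracle)
```

Writing the velocity as $(\hat{x}_1 - x_t)/(1-t)$ is what yields the $1/(1-t)^2$ weight in the loss: the squared velocity error equals $\|\hat{x}_1 - x_1\|^2/(1-t)^2$, and for rotations it equals $\|\log_{q_t}(\hat{q}_1) - \log_{q_t}(q_1)\|^2/(1-t)^2$.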

Models

- Architecture: Hierarchical encoder-decoder.

- Atom-level Update Layers: 4-layer EGNN-like structure to encode building block features $h_m$ from atomic graphs (cutoff 5Å).

- Block-level Update Layers: Iteratively updates $q, \tau, l$ using the MOFAttention module.

- MOFAttention: Modified Invariant Point Attention (IPA) that incorporates lattice parameters as offsets to the attention matrix.

- Hyperparameters:

- Node dimension: 256 (block-level), 64 (atom-level).

- Attention heads: 6.

- Loss coefficients: $\lambda_1=1.0$ (rot), $\lambda_2=2.0$ (trans), $\lambda_3=0.1$ (lattice).
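The lattice term in MOFAttention enters as an additive offset on the attention logits. The sketch below shows only that mechanism in plain scaled dot-product attention. Full IPA additionally uses pair features and 3D point terms expressed in each block's local frame, which are omitted here, and the `bias` matrix is a hypothetical placeholder rather than MOFFlow's actual lattice encoding.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention(queries, keys, values, bias):
    """Scaled dot-product attention with an additive bias[i][j] on each logit.

    queries, keys: one d-dimensional vector per building block.
    values: one vector per building block.
    bias: (num_blocks x num_blocks) offsets added before the softmax.
    """
    d = len(queries[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        logits = [sum(qa * ka for qa, ka in zip(q, k)) / math.sqrt(d) + bias[i][j]
                  for j, k in enumerate(keys)]
        w = softmax(logits)
        weights.append(w)
        outputs.append([sum(w[j] * values[j][c] for j in range(len(values)))
                        for c in range(len(values[0]))])
    return outputs, weights


# Two blocks with identical queries/keys: with zero bias the attention is uniform;
# a large negative offset suppresses attention from block 0 to block 1.
qk = [[1.0, 0.0], [1.0, 0.0]]
vals = [[1.0, 0.0], [0.0, 1.0]]
_, uniform = biased_attention(qk, qk, vals, [[0.0, 0.0], [0.0, 0.0]])
out, masked = biased_attention(qk, qk, vals, [[0.0, -1e9], [0.0, 0.0]])
```

An additive offset lets global information (here, the lattice) reshape which blocks attend to each other without changing the query/key projections themselves.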

Evaluation

- Metrics:

- Match Rate: Using `StructureMatcher` from pymatgen with tolerances `stol=0.5` (or `1.0`), `ltol=0.3`, `angle_tol=10.0`.

- RMSE: Normalized by the average free length per atom.

- Tools: Zeo++ for structural property calculations (Surface Area, Pore Diameter, etc.).

| Metric | MOFFlow | DiffCSP | RS |
|---|---|---|---|
| Match Rate (stol=0.5) | 31.69% | 0.09% | 0.00% |
| RMSE (normalized) | 0.2820 | 0.3961 | - |
| Time per sample (s) | 1.94 | 5.37 | 332 |
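The two headline metrics can be computed from per-sample matcher outputs. In pymatgen, `StructureMatcher(ltol=0.3, stol=0.5, angle_tol=10.0).get_rms_dist(pred, ref)` returns `None` when no match is found, and otherwise a pair whose first element is the RMS displacement already normalized by the free length $(V/N)^{1/3}$. The sketch below assumes those values have been collected into a list and, following a convention common in CSP benchmarks such as DiffCSP, averages RMSE over matched samples only; whether MOFFlow aggregates in exactly this way is an assumption, and the example values are made up.

```python
import math


def free_length(volume, num_atoms):
    """Average free length per atom, (V / N) ** (1/3), used to normalize RMS displacement."""
    return (volume / num_atoms) ** (1.0 / 3.0)


def summarize(normalized_rms):
    """Match rate (%) and mean RMSE over matched samples.

    normalized_rms: one entry per test structure, a float if the prediction
    matched the ground truth within tolerance, or None otherwise.
    """
    matched = [r for r in normalized_rms if r is not None]
    match_rate = 100.0 * len(matched) / len(normalized_rms)
    mean_rmse = sum(matched) / len(matched) if matched else math.nan
    return match_rate, mean_rmse


# Made-up results for four test structures, two of which matched.
mr, rmse = summarize([0.2, None, 0.4, None])
# mr == 50.0, rmse is approximately 0.3

# A raw 1.0 Å RMS displacement in a 1000 Å^3 cell with 8 atoms normalizes to about 0.2.
print(1.0 / free_length(1000.0, 8))
```

Because RMSE is only defined for matched samples, it should be read together with the match rate: a method that matches very few structures can still report a low RMSE on those few.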

Hardware

- Training Hardware: 8 $\times$ NVIDIA RTX 3090 (24GB VRAM).

- Training Time:

- TimestepBatch version: ~5 days 15 hours.

- Batch version: ~1 day 17 hours.

- Batch Size: 160 (capped at $1.6 \times 10^6$ atoms squared for memory).
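One way to read the memory cap is as a bound on the summed squared atom counts in a batch, since pairwise atom-level operations scale roughly with $N^2$. Under that interpretation, which is an assumption and not confirmed by the source, a greedy batcher might look like the sketch below; `make_batches`, `max_size`, and `max_sq` are hypothetical names whose defaults mirror the quoted 160 samples and $1.6 \times 10^6$.

```python
def make_batches(num_atoms, max_size=160, max_sq=1_600_000):
    """Greedily group structure indices so each batch has at most max_size
    structures and a summed squared atom count of at most max_sq.

    A single structure whose own N^2 exceeds max_sq is placed in a batch by itself.
    """
    batches, current, current_sq = [], [], 0
    for idx, n in enumerate(num_atoms):
        cost = n * n
        if current and (len(current) >= max_size or current_sq + cost > max_sq):
            batches.append(current)
            current, current_sq = [], 0
        current.append(idx)
        current_sq += cost
    if current:
        batches.append(current)
    return batches


# Two 1000-atom structures cannot share a batch (2e6 > 1.6e6),
# but a 1000-atom and a 500-atom structure can (1.25e6).
print(make_batches([1000, 1000, 500]))  # [[0], [1, 2]]
```

Sorting structures by size before batching would reduce the number of partially filled batches, at the cost of less random batch composition.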

Citation

@inproceedings{kimMOFFlowFlowMatching2025,
  title={MOFFlow: Flow Matching for Structure Prediction of Metal-Organic Frameworks},
  author={Kim, Nayoung and Kim, Seongsu and Kim, Minsu and Park, Jinkyoo and Ahn, Sungsoo},
  booktitle={The Thirteenth International Conference on Learning Representations},
  year={2025},
  url={https://openreview.net/forum?id=dNT3abOsLo}
}