Paper Information

Citation: Luo, E., Wei, X., Huang, L., Li, Y., Yang, H., Xia, Z., Wang, Z., Liu, C., Shao, B., & Zhang, J. (2025). Efficient and Scalable Density Functional Theory Hamiltonian Prediction through Adaptive Sparsity. Proceedings of the 42nd International Conference on Machine Learning (ICML).

Publication: ICML 2025

What kind of paper is this?

This is a methodological paper introducing a novel architecture and training curriculum to solve efficiency bottlenecks in Geometric Deep Learning. It directly tackles the primary computational bottleneck in modern SE(3)-equivariant graph neural networks (the tensor product operation) and proposes a generalizable solution through adaptive network sparsification.

What is the motivation?

SE(3)-equivariant networks are accurate but unscalable for DFT Hamiltonian prediction due to two key bottlenecks:

- Atom Scaling: The number of Tensor Product (TP) operations grows quadratically with the number of atoms ($N^2$).

- Basis Set Scaling: Computational complexity grows with the sixth power of the angular momentum order ($L^6$). Larger basis sets (e.g., def2-TZVP) require higher orders ($L=6$), making them prohibitively slow.

Existing SE(3)-equivariant models cannot handle large molecules (40-100 atoms) with high-quality basis sets, limiting their practical applicability in computational chemistry.
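
To make the basis-set scaling concrete, the sketch below counts the $(l_1, l_2, l_3)$ triplets allowed by the triangle rule for a given maximum order and sums a rough per-path cost. The function names and the cost proxy $(2l_1+1)(2l_2+1)(2l_3+1)$ are illustrative assumptions, not taken from the paper; the point is only that the number of paths grows roughly as $L^3$ while each path also gets more expensive.

```python
def allowed_triplets(l_max):
    """Enumerate (l1, l2, l3) with every order <= l_max that satisfy the
    triangle rule |l1 - l2| <= l3 <= l1 + l2."""
    return [
        (l1, l2, l3)
        for l1 in range(l_max + 1)
        for l2 in range(l_max + 1)
        for l3 in range(abs(l1 - l2), min(l1 + l2, l_max) + 1)
    ]


def rough_tp_cost(l_max):
    """Assumed cost proxy: each path contracts a coupling block of size
    (2*l1 + 1) * (2*l2 + 1) * (2*l3 + 1)."""
    return sum(
        (2 * l1 + 1) * (2 * l2 + 1) * (2 * l3 + 1)
        for l1, l2, l3 in allowed_triplets(l_max)
    )


if __name__ == "__main__":
    # Maximum orders quoted for def2-SVP (4) and def2-TZVP (6).
    for l_max in (4, 6):
        print(l_max, len(allowed_triplets(l_max)), rough_tp_cost(l_max))
```

Comparing the two printed rows gives a feel for why moving from $L=4$ to $L=6$ is so costly for a dense tensor product.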

What is the novelty here?

SPHNet introduces Adaptive Sparsity to prune redundant computations at two levels:

- Sparse Pair Gate: Learns which atom pairs to include in message passing, adapting the interaction graph based on importance.

- Sparse TP Gate: Filters which spherical harmonic triplets $(l_1, l_2, l_3)$ are computed in tensor product operations, pruning higher-order combinations that contribute less to accuracy.

- Three-Phase Sparsity Scheduler: A training curriculum (Random → Adaptive → Fixed) that enables stable convergence to high-performing sparse subnetworks.

Key insight: Counter-intuitively, the model preserves more long-range interactions (16-25 Å) than short-range ones. This acts as a form of “hard sample mining”: short-range pairs are numerous and easy to learn, whereas the rarer long-range interactions are critical for accuracy.

What experiments were performed?

Datasets & Experimental Setup

| Dataset | Molecule Size | Basis Set | $L_{max}$ | Functional |
|---|---|---|---|---|
| MD17 | Small (ethanol, etc.) | def2-SVP | 4 | PBE |
| QH9 | $\leq$ 20 atoms (Stable/Dynamic splits) | def2-SVP | 4 | B3LYP |
| PubChemQH | 40-100 atoms | def2-TZVP | 6 | B3LYP |

Data Availability:

- MD17 & QH9: Publicly available

- PubChemQH: Not yet public (undergoing internal review at Microsoft)

Algorithms

Loss Function:

The model learns the residual $\Delta H$:

$$\Delta H = H_{ref} - H_{init}$$

where $H_{init}$ is a cheap initial guess computed via PySCF.

$$\mathcal{L} = \mathrm{MAE}(H_{ref}, H_{pred}) + \mathrm{MSE}(H_{ref}, H_{pred})$$
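
As a minimal sketch of the residual target and the combined loss above, the following plain-Python function rebuilds the prediction as $H_{pred} = H_{init} + \Delta H_{pred}$ and sums the element-wise MAE and MSE. The function name, the nested-list matrix layout, and the equal weighting of the two terms are assumptions made for illustration; a real implementation would operate on batched tensors.

```python
def combined_loss(h_ref, h_init, delta_pred):
    """MAE + MSE between the reference Hamiltonian and the prediction
    H_pred = H_init + delta_pred, averaged over all matrix elements."""
    abs_sum = 0.0
    sq_sum = 0.0
    count = 0
    for ref_row, init_row, delta_row in zip(h_ref, h_init, delta_pred):
        for ref, init, delta in zip(ref_row, init_row, delta_row):
            err = ref - (init + delta)  # element-wise error of H_pred
            abs_sum += abs(err)
            sq_sum += err * err
            count += 1
    return abs_sum / count + sq_sum / count


if __name__ == "__main__":
    h_ref = [[1.0, 0.5], [0.5, 2.0]]
    h_init = [[0.5, 0.5], [0.5, 1.5]]       # stand-in for the cheap initial guess
    delta_pred = [[0.5, 0.0], [0.0, 0.0]]   # hypothetical network output
    print(combined_loss(h_ref, h_init, delta_pred))  # MAE 0.125 + MSE 0.0625
```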

Hyperparameters:

| Parameter | PubChemQH | QH9 | MD17 |
|---|---|---|---|
| Batch Size | 8 | 32 | 10 |
| Training Steps | 200k-300k | 200k-300k | 200k-300k |
| Warmup Steps | 1k | 1k | 1k |
| Sparsity Rate | 0.7 | 0.4 | - |

Hardware

- Training: 4x NVIDIA A100 (80GB)

- Benchmarking: Single NVIDIA RTX A6000 (46GB)

Notes

- Naming conflict: “SPHNet” is already the name of a 2019 3D point cloud model. The authors acknowledged this but did not commit to changing the name.

Evaluation Metrics

- Hamiltonian MAE ($H$): Element-wise error in Hartrees ($E_h$)

- Orbital Energy MAE ($\epsilon$): Error in HOMO/LUMO energies

- Coefficient Similarity ($\psi$): Cosine similarity of wavefunctions
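
The three metrics can be sketched in a few lines of plain Python. The function names are hypothetical, and taking the absolute value of the cosine (so that an orbital and its sign-flipped copy count as identical) is an assumption about how the sign ambiguity of wavefunction coefficients is handled; obtaining orbital energies and coefficients from $H$ requires a separate eigenvalue solve that is not shown here.

```python
import math


def hamiltonian_mae(h_ref, h_pred):
    """Mean absolute element-wise error between two matrices."""
    errors = [
        abs(r - p)
        for ref_row, pred_row in zip(h_ref, h_pred)
        for r, p in zip(ref_row, pred_row)
    ]
    return sum(errors) / len(errors)


def orbital_energy_mae(eps_ref, eps_pred):
    """Mean absolute error over a list of orbital energies."""
    return sum(abs(r - p) for r, p in zip(eps_ref, eps_pred)) / len(eps_ref)


def coefficient_similarity(c_ref, c_pred):
    """Absolute cosine similarity between two coefficient vectors."""
    dot = sum(r * p for r, p in zip(c_ref, c_pred))
    norm = math.sqrt(sum(r * r for r in c_ref)) * math.sqrt(sum(p * p for p in c_pred))
    return abs(dot) / norm


if __name__ == "__main__":
    print(hamiltonian_mae([[1.0, 2.0]], [[1.5, 2.0]]))
    print(coefficient_similarity([3.0, 4.0], [-3.0, -4.0]))
```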

Ablation Studies

- Both sparse gates contribute significantly to the speedup

- The three-phase scheduler is critical for stable convergence to a high-performing sparse subnetwork

- A sparsity rate of 70% can be applied with minimal accuracy loss on large systems, demonstrating significant computational redundancy in dense equivariant models

Transferability to Other Models

To show that the speedup is not architecture-specific, the authors applied the Sparse Pair Gate and Sparse TP Gate to the QHNet baseline. This raised the relative speed of QHNet from 1.0x (dense baseline) to 3.30x, indicating that the gates are portable modules applicable to other SE(3)-equivariant architectures.

What outcomes/conclusions?

Performance Summary

PubChemQH (Large Scale):

- 7.1x speedup vs QHNet

- ~25% of the QHNet memory usage (5.62 GB vs 22.5 GB)

- Lower Hamiltonian MAE ($97.31$ vs $123.74$)

QH9 (Medium Scale):

- ~3.7x speedup

Scaling Limit: SPHNet can train on systems with ~3000 atomic orbitals on a single A6000, whereas the baseline runs out of memory (OOM) at ~1800.

Key Findings

- Adaptive sparsity scales with system complexity: The method is most effective for large systems (PubChemQH) where redundancy is high. For small molecules (e.g., Water/H2O with only 3 atoms), every interaction is critical, so pruning hurts accuracy and yields negligible speedup.

- Generalizable components: The sparsification techniques are portable modules, demonstrated by successful integration into QHNet with significant speedups.

- Practical impact: SPHNet enables Hamiltonian prediction for larger and more complex molecular systems than previously feasible with dense equivariant models.

Reproducibility Details

Model Architecture

The model predicts the Hamiltonian matrix $H$ from atomic numbers $Z$ and coordinates $r$.

Inputs: Atomic numbers ($Z$) and 3D coordinates.

Backbone Structure:

- Vectorial Node Interaction (x4): Uses long-short range message passing. Extracts vectorial representations ($l=1$) without high-order TPs to save cost.

- Spherical Node Interaction (x2): Projects features to high-order spherical harmonics (up to $L_{max}$). This is where the Sparse Pair Gate is applied to filter node pairs.

- Pair Construction Block: Splits into Diagonal (self-interaction) and Non-Diagonal (cross-interaction) blocks. This is where the Sparse TP Gate prunes cross-order combinations $(l_1, l_2, l_3)$.

- Expansion Block: Reconstructs the full Hamiltonian matrix from the sparse irreducible representations, exploiting symmetry ($H_{ji} = H_{ij}^T$) to halve computations.
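
The symmetry trick in the Expansion Block can be illustrated with a small sketch: only the blocks with $i \leq j$ are supplied, and the remaining blocks are filled in by transposition. The function names, the dictionary-of-blocks layout, and the `orbitals_per_atom` argument are assumptions for illustration and do not reflect the authors' data structures.

```python
def transpose(block):
    """Transpose a block stored as a list of rows."""
    return [list(col) for col in zip(*block)]


def expand_symmetric(blocks, orbitals_per_atom):
    """Assemble the full matrix from blocks[(i, j)] given only for i <= j,
    filling the lower triangle with H_ji = H_ij^T."""
    offsets = [0]
    for n in orbitals_per_atom:
        offsets.append(offsets[-1] + n)
    size = offsets[-1]
    full = [[0.0] * size for _ in range(size)]
    n_atoms = len(orbitals_per_atom)
    for i in range(n_atoms):
        for j in range(n_atoms):
            block = blocks[(i, j)] if i <= j else transpose(blocks[(j, i)])
            for a, row in enumerate(block):
                for b, value in enumerate(row):
                    full[offsets[i] + a][offsets[j] + b] = value
    return full


if __name__ == "__main__":
    # Two atoms with 1 and 2 orbitals; only upper-triangle blocks are given.
    blocks = {
        (0, 0): [[1.0]],
        (0, 1): [[2.0, 3.0]],
        (1, 1): [[4.0, 5.0], [5.0, 6.0]],
    }
    for row in expand_symmetric(blocks, [1, 2]):
        print(row)
```

Because the off-diagonal blocks below the diagonal are never predicted, roughly half of the non-diagonal pair computations can be skipped.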

Key Algorithmic Components

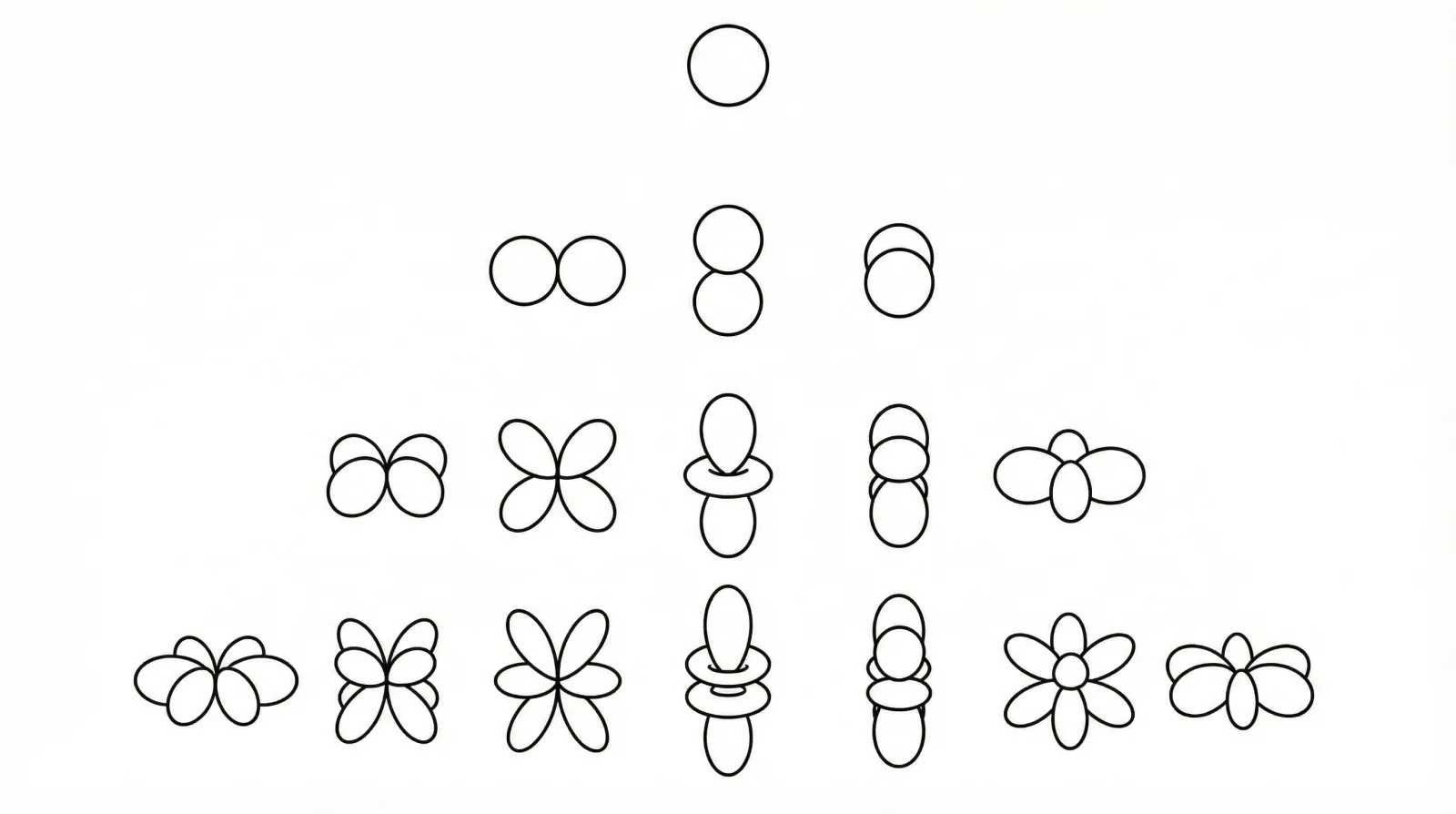

Sparse Pair Gate: Adapts the interaction graph. It learns a weight $W_p^{ij}$ for every pair. Pairs are kept only if selected by the scheduler ($U_p^{TSS}$). Complexity: $\mathcal{O}(N^2 \times d^2)$.
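
A minimal sketch of how such a gate might be applied during message aggregation is shown below. Scalar messages, the dictionary layout, the function name, and scaling each kept message by its learned weight are all simplifying assumptions; the paper's gate acts on feature tensors, and the set of selected pairs is taken here as a given input from the scheduler.

```python
def apply_pair_gate(messages, pair_weights, selected_pairs, n_atoms):
    """Aggregate weighted pair messages, visiting only the selected pairs.

    messages[(i, j)]     : hypothetical scalar message from atom j to atom i
    pair_weights[(i, j)] : learned gate weight for that pair
    selected_pairs       : pairs chosen by the scheduler
    """
    out = [0.0] * n_atoms
    for i, j in sorted(selected_pairs):
        # Pruned pairs are never touched, which is where the saving comes from.
        out[i] += pair_weights[(i, j)] * messages[(i, j)]
    return out


if __name__ == "__main__":
    messages = {(0, 1): 2.0, (1, 0): 4.0, (0, 2): 10.0}
    pair_weights = {(0, 1): 0.5, (1, 0): 1.0, (0, 2): 0.1}
    selected = {(0, 1), (1, 0)}  # pair (0, 2) is pruned
    print(apply_pair_gate(messages, pair_weights, selected, n_atoms=3))
```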

Sparse TP Gate: Filters triplets $(l_1, l_2, l_3)$ inside the TP operation. Higher-order combinations are more likely to be pruned. Complexity: $\mathcal{O}(L^3)$.

Three-Phase Sparsity Scheduler: Training curriculum designed to optimize the sparse gates effectively:

- Phase 1 (Random): Keeps each element at random with probability $1-k$, where $k$ is the sparsity rate, so that all weights receive unbiased updates. Complexity: $\mathcal{O}(|U|)$.

- Phase 2 (Adaptive): Keeps the top $(1-k)$ fraction of elements ranked by learned weight magnitude. Complexity: $\mathcal{O}(|U|\log|U|)$.

- Phase 3 (Fixed): Freezes the connectivity mask for maximum inference speed. No overhead.

Weight Initialization: Learnable sparsity weights ($W$) initialized as all-ones vector.
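
The three phases can be sketched as a single selection function over a list of learned weights. This is an illustrative sketch rather than the authors' implementation: the function name, the string phase labels, the `fixed_mask` argument, and the rule for when training switches phases (not shown) are all assumptions.

```python
import random


def select_indices(weights, sparsity_rate, phase, rng=None, fixed_mask=None):
    """Return the sorted indices kept by the scheduler.

    phase is one of "random", "adaptive", or "fixed".
    """
    n = len(weights)
    keep_rate = 1.0 - sparsity_rate
    if phase == "random":
        rng = rng or random.Random()
        # Keep each element independently with probability 1 - k: O(|U|).
        return [i for i in range(n) if rng.random() < keep_rate]
    if phase == "adaptive":
        # Keep the top (1 - k) fraction by weight magnitude: O(|U| log |U|).
        n_keep = max(1, int(keep_rate * n + 1e-9))
        ranked = sorted(range(n), key=lambda i: abs(weights[i]), reverse=True)
        return sorted(ranked[:n_keep])
    if phase == "fixed":
        # Reuse the mask frozen after the adaptive phase: no selection cost.
        if fixed_mask is None:
            raise ValueError("fixed phase needs a frozen mask")
        return list(fixed_mask)
    raise ValueError(f"unknown phase: {phase}")


if __name__ == "__main__":
    weights = [0.1, 0.9, 0.5, 0.3, 0.7, 0.2, 0.8, 0.4, 0.6, 1.0]
    frozen = select_indices(weights, 0.7, "adaptive")
    print(frozen)
    print(select_indices(weights, 0.7, "fixed", fixed_mask=frozen))
```

With all-ones initialization every element ties in the adaptive ranking, which is one way to see why a random phase is useful first: it lets the weights differentiate before they are used for selection.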

Data

The experiments evaluated SPHNet on three datasets with different molecular sizes and basis set complexities. All datasets use DFT calculations as ground truth, with MD17 using the PBE functional and QH9/PubChemQH using B3LYP.
