What kind of paper is this?

Method (Primary) / Resource (Secondary)

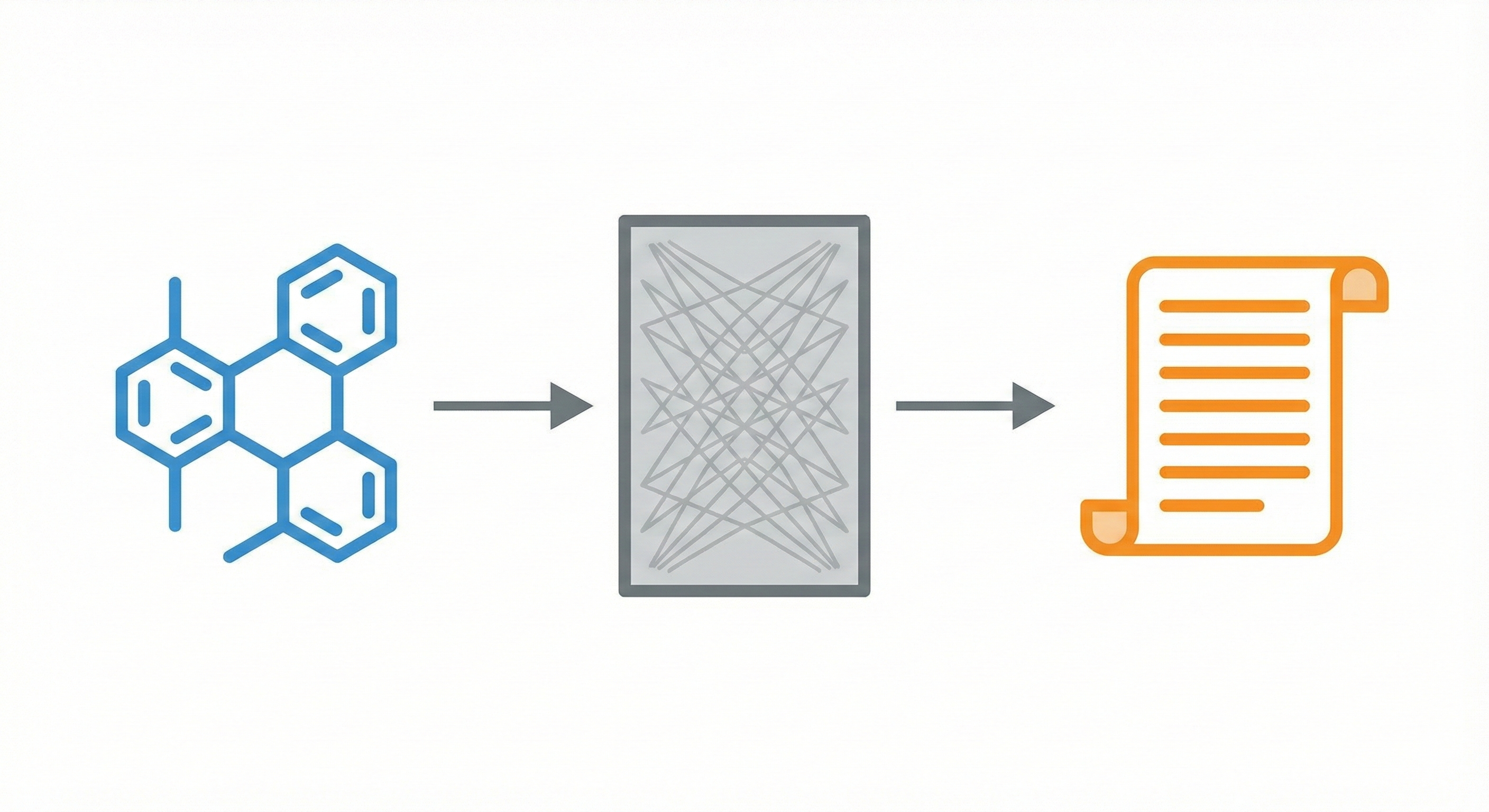

This paper makes a methodological contribution: it develops and validates a Transformer-based neural machine translation model (STOUT V2) for bidirectional translation between SMILES strings and IUPAC names (SMILES $\leftrightarrow$ IUPAC). It systematically compares the new architecture against the previous RNN-based baseline (STOUT V1) and performs ablation studies on tokenization strategies.

It is also a significant resource contribution. The authors generated a training dataset of nearly 1 billion SMILES-IUPAC pairs, with names produced by the commercial OpenEye Lexichem software, and released the resulting models and code as open-source tools to broaden access to high-quality chemical naming.

What is the motivation?

Assigning systematic IUPAC names to chemical structures requires following complex nomenclature rules, which makes consistent manual naming difficult. Deterministic, rule-based tools such as OpenEye Lexichem and ChemAxon are reliable but commercial. Existing open-source tools such as OPSIN address the reverse task, parsing names into structures.

The previous version of STOUT (V1), based on RNNs with GRUs, reached an average BLEU score of about 0.90 but left room for improvement, particularly in handling stereochemistry. This work combines the stronger sequence-modelling capabilities of Transformers with a much larger dataset to build a highly accurate, open-source IUPAC naming tool.

What is the novelty here?

The core novelty lies in the architectural shift and the unprecedented scale of training data:

- Architecture Shift: Moving from an RNN-based Seq2Seq model to a Transformer-based architecture (4 layers, 8 heads), which captures intricate chemical patterns better than GRUs.

- Billion-Scale Training: Training on a dataset of nearly 1 billion molecules (combining PubChem and ZINC15), significantly larger than the 60 million used for STOUT V1.

- Tokenization Strategy: Determining that character-wise tokenization for IUPAC names is superior to word-wise tokenization in terms of both accuracy and training efficiency (15% faster).

What experiments were performed?

The authors conducted three primary experiments to validate bidirectional translation (SMILES $\rightarrow$ IUPAC and IUPAC $\rightarrow$ SMILES):

- Experiment 1 (Optimization): Assessed the impact of dataset size (1M vs 10M vs 50M) and tokenization strategy on SMILES-to-IUPAC performance.

- Experiment 2 (Scaling): Trained models on 110 million PubChem molecules for both forward and reverse translation tasks to test performance on longer sequences.

- Experiment 3 (Generalization): Trained on the full ~1 billion-molecule dataset (PubChem + ZINC15) for both translation directions.

- External Validation: Benchmarked against an external dataset from ChEBI (1,485 molecules) and ChEMBL34 to test generalization to unseen data.

Evaluation Metrics:

- Textual Accuracy: BLEU scores (1-4) and Exact String Match.

- Chemical Validity: Retranslation of generated names back to SMILES using OPSIN, followed by Tanimoto similarity checks (PubChem fingerprints) against the original input.
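The textual metrics above can be sketched in a few lines. This is a minimal illustration, assuming sentence-level BLEU with uniform n-gram weights, no smoothing, and character tokens for IUPAC names; the exact BLEU implementation and scoring tokenization used by the authors are not specified here.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all n-grams of length n in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, candidate, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform weights over 1..max_n-grams."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by how often it occurs in the reference.
        matched = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or matched == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU = 0
        log_precision_sum += math.log(matched / total) / max_n
    # Brevity penalty: only candidates shorter than the reference are penalized.
    c, r = len(candidate), len(reference)
    brevity = 1.0 if c > r else math.exp(1 - r / c)
    return brevity * math.exp(log_precision_sum)


def exact_match(reference, candidate):
    """Binary 1/0 exact string match."""
    return int(reference == candidate)
```

For example, `sentence_bleu(list("2-chloropropane"), list("1-chloropropane"))` scores below 1.0 even though only one locant differs, while `exact_match` returns 0 for the same pair; this is why the retranslation check is needed to judge chemical correctness.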

What outcomes/conclusions?

- Superior Performance: STOUT V2 achieved an average BLEU score of 0.99 (vs 0.94 for V1). While exact string matches varied by experiment (83-89%), the model notably achieved a perfect BLEU score (1.0) on 97.49% of a specific test set where STOUT V1 only reached 66.65%.

- Structural Validity (“Near Misses”): When a generated name did not match the ground-truth string, parsing it back with OPSIN often still yielded a structure close to the input. For these “incorrect” names, the average Tanimoto similarity to the input was 0.68, suggesting the model captures structural information rather than merely memorizing strings.

- Tokenization: Character-level splitting for IUPAC names outperformed word-level splitting and was more computationally efficient.

- Data Imbalance & Generalization: The model’s drop in performance for sequences >600 characters highlights a systemic issue in open chemical databases: long, highly complex SMILES strings are significantly underrepresented. Even billion-scale training datasets are still bound by the chemical diversity of their source material.

- Limitations:

  - Preferred Names (PINs): The model mimics Lexichem’s naming conventions, so it generates valid IUPAC names that are not necessarily the Preferred IUPAC Names (PINs).

  - Sequence Length: Performance degrades for very long SMILES (>600 characters) because such molecules are scarce in the training data.

  - Quality Dependence: The model’s output can only be as good as the commercial software (Lexichem) used to generate the ground-truth names.

Reproducibility Details

Data

The training data was derived from PubChem and ZINC15. Ground truth IUPAC names were generated using OpenEye Lexichem TK 2.8.1 to ensure consistency.

| Purpose | Dataset | Size | Notes |
|---|---|---|---|
| Training (Exp 1) | PubChem Subset | 1M, 10M, 50M | Selected via MaxMin algorithm for diversity |
| Training (Exp 2) | PubChem | 110M | Filtered for SMILES length < 600 |
| Training (Exp 3) | PubChem + ZINC15 | ~1 Billion | 999,637,326 molecules total |
| Evaluation | ChEBI | 1,485 | External validation set, non-overlapping with training |
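The Exp 1 subsets were selected with the MaxMin algorithm. A minimal pure-Python sketch of greedy MaxMin picking follows, assuming fingerprints are represented as sets of on-bit indices and diversity is measured as Tanimoto distance; the fingerprint type the authors used for selection is not stated here, and at PubChem scale an optimized implementation (for example RDKit's `MaxMinPicker`) would be used instead of this quadratic loop.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0  # two empty sets: treated as identical


def maxmin_pick(fps, n_pick, seed_index=0):
    """Greedy MaxMin diversity selection using Tanimoto distance (1 - similarity).

    Starting from seed_index, repeatedly pick the unpicked item whose distance
    to its nearest already-picked item is largest.
    """
    n_pick = min(n_pick, len(fps))
    picked = [seed_index]
    picked_set = {seed_index}
    # nearest[i] = distance from item i to its closest picked item so far
    nearest = [1.0 - tanimoto(fp, fps[seed_index]) for fp in fps]
    while len(picked) < n_pick:
        best = max((i for i in range(len(fps)) if i not in picked_set),
                   key=lambda i: nearest[i])
        picked.append(best)
        picked_set.add(best)
        # Update every item's nearest-picked distance with the new pick.
        for i, fp in enumerate(fps):
            nearest[i] = min(nearest[i], 1.0 - tanimoto(fp, fps[best]))
    return picked
```

With `fps = [{1, 2, 3}, {1, 2, 4}, {7, 8, 9}, {1, 2, 3, 4}]`, picking two items returns `[0, 2]`: the fingerprint sharing no bits with the seed is chosen first.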

Preprocessing:

- SMILES: Canonicalized, isomeric, and kekulized using RDKit (v2023.03.1).

- Formatting: Converted to TFRecord format in 100 MB chunks for TPU efficiency.
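The length filter from the data table and the 100 MB chunking step can be sketched without TensorFlow. This is an illustrative sketch only: the helper names are hypothetical, "100 MB" is taken as 100,000,000 bytes, and record size is approximated by the UTF-8 length of the SMILES and name, whereas real TFRecord files add per-record framing and serialization overhead.

```python
def filter_by_length(pairs, max_smiles_len=600):
    """Keep (smiles, iupac_name) pairs whose SMILES is shorter than max_smiles_len."""
    return [(smiles, name) for smiles, name in pairs if len(smiles) < max_smiles_len]


def shard_by_size(pairs, max_bytes=100_000_000):
    """Group records into consecutive shards whose approximate size stays under max_bytes.

    Size is estimated from UTF-8 byte lengths (an assumption). A single record
    larger than max_bytes still ends up alone in its own shard.
    """
    shards, current, current_bytes = [], [], 0
    for smiles, name in pairs:
        size = len(smiles.encode("utf-8")) + len(name.encode("utf-8"))
        if current and current_bytes + size > max_bytes:
            shards.append(current)  # close the full shard and start a new one
            current, current_bytes = [], 0
        current.append((smiles, name))
        current_bytes += size
    if current:
        shards.append(current)
    return shards
```

Each resulting shard would then be serialized to its own TFRecord file; keeping shards of similar size helps spread input reading evenly across TPU hosts.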

Algorithms

- SMILES Tokenization: Regex-based splitting. Atoms (e.g., “Cl”, “Au”), bonds, brackets, and digits are separate tokens.

- IUPAC Tokenization: Character-wise split was selected as the optimal strategy (treating every character as a token).

- Optimization: Adam optimizer with a custom learning rate scheduler based on model dimensions.

- Loss Function: Sparse Categorical Cross-Entropy, masking padding tokens.
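The tokenization and loss choices above can be illustrated with a small sketch. The regular expressions, special-token names (`<pad>`, `<start>`, `<end>`), and helper functions are hypothetical and written to match the description (brackets as separate tokens, multi-letter element symbols kept whole, one token per IUPAC character); STOUT's actual regex and vocabulary may differ. The loss is plain sparse categorical cross-entropy averaged over non-padding positions.

```python
import math
import re

# Hypothetical patterns: bracket atoms are split into "[", their parts, and "]".
BRACKET_ATOM = re.compile(r"(\[[^\]]+\])")
OUTSIDE = re.compile(r"Cl|Br|[BCNOPSFI]|[bcnops]|%\d{2}|\d|[=#$:/\\().~*+-]")
INSIDE = re.compile(r"se|as|te|[A-Z][a-z]?|[a-z]|@@|@|\d+|[+-]")


def tokenize_smiles(smiles):
    tokens = []
    for part in BRACKET_ATOM.split(smiles):
        if not part:
            continue
        if part.startswith("["):
            tokens += ["[", *INSIDE.findall(part[1:-1]), "]"]
        else:
            tokens += OUTSIDE.findall(part)
    if "".join(tokens) != smiles:  # findall skips unknown characters; fail loudly
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


def build_char_vocab(names, specials=("<pad>", "<start>", "<end>")):
    """Character-wise IUPAC vocabulary; <pad> gets id 0."""
    chars = sorted({ch for name in names for ch in name})
    return {tok: i for i, tok in enumerate([*specials, *chars])}


def encode_name(name, vocab, max_len):
    ids = [vocab["<start>"], *(vocab[ch] for ch in name), vocab["<end>"]]
    if len(ids) > max_len:
        raise ValueError("name longer than max_len")
    return ids + [vocab["<pad>"]] * (max_len - len(ids))


def masked_sparse_ce(logits_seq, targets, pad_id=0):
    """Mean sparse categorical cross-entropy over non-padding target positions."""
    total, count = 0.0, 0
    for logits, target in zip(logits_seq, targets):
        if target == pad_id:
            continue  # padding positions contribute nothing to the loss
        m = max(logits)  # log-sum-exp with max subtraction for numerical stability
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_z - logits[target]
        count += 1
    return total / max(count, 1)
```

For example, `tokenize_smiles("C[C@@H](N)O")` yields `["C", "[", "C", "@@", "H", "]", "(", "N", ")", "O"]`. In a Keras training loop, the same masking would typically be applied to the per-token output of `tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True, reduction="none")`.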

Models

The model follows the standard Transformer architecture from “Attention is All You Need” (Vaswani et al.).

- Architecture: 4 Transformer layers (encoder/decoder stack).

- Attention: Multi-head attention with 8 heads.

- Dimensions: Embedding size ($d_{model}$) = 512; Feed-forward dimension ($d_{ff}$) = 2048.

- Regularization: Dropout rate of 0.1.

- Context Window: Max input length (SMILES) = 600; Max output length (IUPAC) = 700-1000.
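The hyperparameters above, together with the "custom learning rate scheduler based on model dimensions" from the Algorithms list, can be collected into a small sketch. It assumes the scheduler is the warmup schedule from "Attention is All You Need" and borrows that paper's `warmup_steps = 4000`; the config field names are hypothetical and the warmup value STOUT V2 actually uses is not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoutV2Config:
    # Values from the Models section; field names are hypothetical.
    num_layers: int = 4
    num_heads: int = 8
    d_model: int = 512
    d_ff: int = 2048
    dropout: float = 0.1
    max_smiles_len: int = 600

    @property
    def head_dim(self) -> int:
        # Each attention head works in a d_model / num_heads subspace.
        return self.d_model // self.num_heads


def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """Warmup schedule from Vaswani et al. (2017):
    lr = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5).
    warmup_steps=4000 is that paper's value, assumed here.
    """
    step = max(step, 1)  # avoid division by zero at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
```

The rate grows linearly for the first `warmup_steps` steps, peaks at `(d_model * warmup_steps) ** -0.5` (about 7e-4 for these values), and then decays with the inverse square root of the step. With 8 heads and `d_model = 512`, each head attends in a 64-dimensional subspace.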

Evaluation

Evaluation focused on both string similarity and chemical structural integrity.

| Metric | Scope | Method |
|---|---|---|
| BLEU Score | N-gram overlap | Compared predicted IUPAC string to the ground-truth name. |
| Exact Match | Accuracy | Binary 1/0 check for identical strings. |
| Tanimoto | Structural Similarity | Predicted Name $\rightarrow$ OPSIN $\rightarrow$ SMILES $\rightarrow$ Fingerprint comparison to input. |
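The Tanimoto row of the table can be turned into a per-dataset summary like the "near misses" figure reported above. This sketch assumes OPSIN parsing and fingerprint generation (PubChem fingerprints in the paper) have already been done upstream, with each fingerprint represented as a set of on-bit indices; scoring unparseable names as similarity 0.0 is an assumption of the sketch, not necessarily the authors' convention, and the function name is hypothetical.

```python
from statistics import mean


def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def summarize_round_trip(records):
    """Summarize predictions given as (predicted_name, reference_name, input_fp, roundtrip_fp).

    roundtrip_fp is the fingerprint of the structure parsed back from the
    predicted name, or None if the name could not be parsed.
    """
    exact = [pred == ref for pred, ref, _, _ in records]
    # Near misses: Tanimoto over predictions whose string differs from the reference.
    mismatched_sims = [
        tanimoto(fp_in, fp_rt) if fp_rt is not None else 0.0
        for pred, ref, fp_in, fp_rt in records
        if pred != ref
    ]
    return {
        "exact_match_rate": sum(exact) / len(records),
        "mean_tanimoto_mismatched": mean(mismatched_sims) if mismatched_sims else None,
    }
```

Separating the exact-match rate from the mean similarity of mismatched names distinguishes a prediction that is merely a different valid name from one that describes a different molecule.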

Hardware

Training was conducted entirely on Google Cloud Platform (GCP) TPUs.

- STOUT V1: Trained on a TPU v3-8.

- STOUT V2: Trained on TPU v4-128 pod slices (128 TensorCores, i.e. 64 chips).

- Large Scale (Exp 3): Trained on a TPU v4-256 pod slice (256 TensorCores, i.e. 128 chips).

- Training Time: About 15 hours and 2 minutes per epoch on the ~1 billion-molecule dataset.

- Framework: TensorFlow 2.15.0-pjrt with Keras.
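A back-of-the-envelope reading of the training-time figure, assuming one epoch is a single pass over all 999,637,326 pairs of the Exp 3 dataset (an assumption: the figure may refer to one translation direction and may exclude a held-out test split):

$$
\frac{999{,}637{,}326\ \text{pairs}}{15\ \text{h}\ 2\ \text{min}} = \frac{999{,}637{,}326\ \text{pairs}}{54{,}120\ \text{s}} \approx 1.85 \times 10^{4}\ \text{pairs/s}
$$

That is roughly 18,500 SMILES-IUPAC pairs processed per second on the TPU v4-256 slice.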

Paper Information

Citation: Rajan, K., Zielesny, A., & Steinbeck, C. (2024). STOUT V2.0: SMILES to IUPAC name conversion using transformer models. Journal of Cheminformatics, 16(1), 146. https://doi.org/10.1186/s13321-024-00941-x

Publication: Journal of Cheminformatics 2024

@article{rajanSTOUTV20SMILES2024,
  title = {{{STOUT V2}}.0: {{SMILES}} to {{IUPAC}} Name Conversion Using Transformer Models},
  shorttitle = {{{STOUT V2}}.0},
  author = {Rajan, Kohulan and Zielesny, Achim and Steinbeck, Christoph},
  year = 2024,
  month = dec,
  journal = {Journal of Cheminformatics},
  volume = {16},
  number = {1},
  pages = {146},
  issn = {1758-2946},
  doi = {10.1186/s13321-024-00941-x}
}

Additional Resources:

- Web Application (Includes Ketcher drawing, bulk submission, and DECIMER integration)

- DECIMER Project

- STOUT V1 Note

- Zenodo Archive (Code Snapshot)