Paper Information

Citation: Handsel, J., Matthews, B., Knight, N. J., & Coles, S. J. (2021). Translating the InChI: Adapting Neural Machine Translation to Predict IUPAC Names from a Chemical Identifier. Journal of Cheminformatics, 13(1), 79. https://doi.org/10.1186/s13321-021-00535-x

Publication: Journal of Cheminformatics 2021

What kind of paper is this?

This is primarily a Method paper. It adapts a specific architecture (Transformer) to a specific task (InChI-to-IUPAC translation) and evaluates its performance against both machine learning and commercial baselines. It also has a secondary Resource contribution, as the trained model and scripts are released as open-source software.

What is the motivation?

Generating correct IUPAC names is difficult because the rules defined by the International Union of Pure and Applied Chemistry are comprehensive but complex. Several commercial packages generate names from structures, but they are closed-source, their methods are undisclosed, and they often disagree with one another. Open identifiers such as InChI and SMILES are not human-readable. There is a need for an open, automated way to generate informative IUPAC names from a standard identifier such as InChI, which is ubiquitous in online chemical databases.

What is the novelty here?

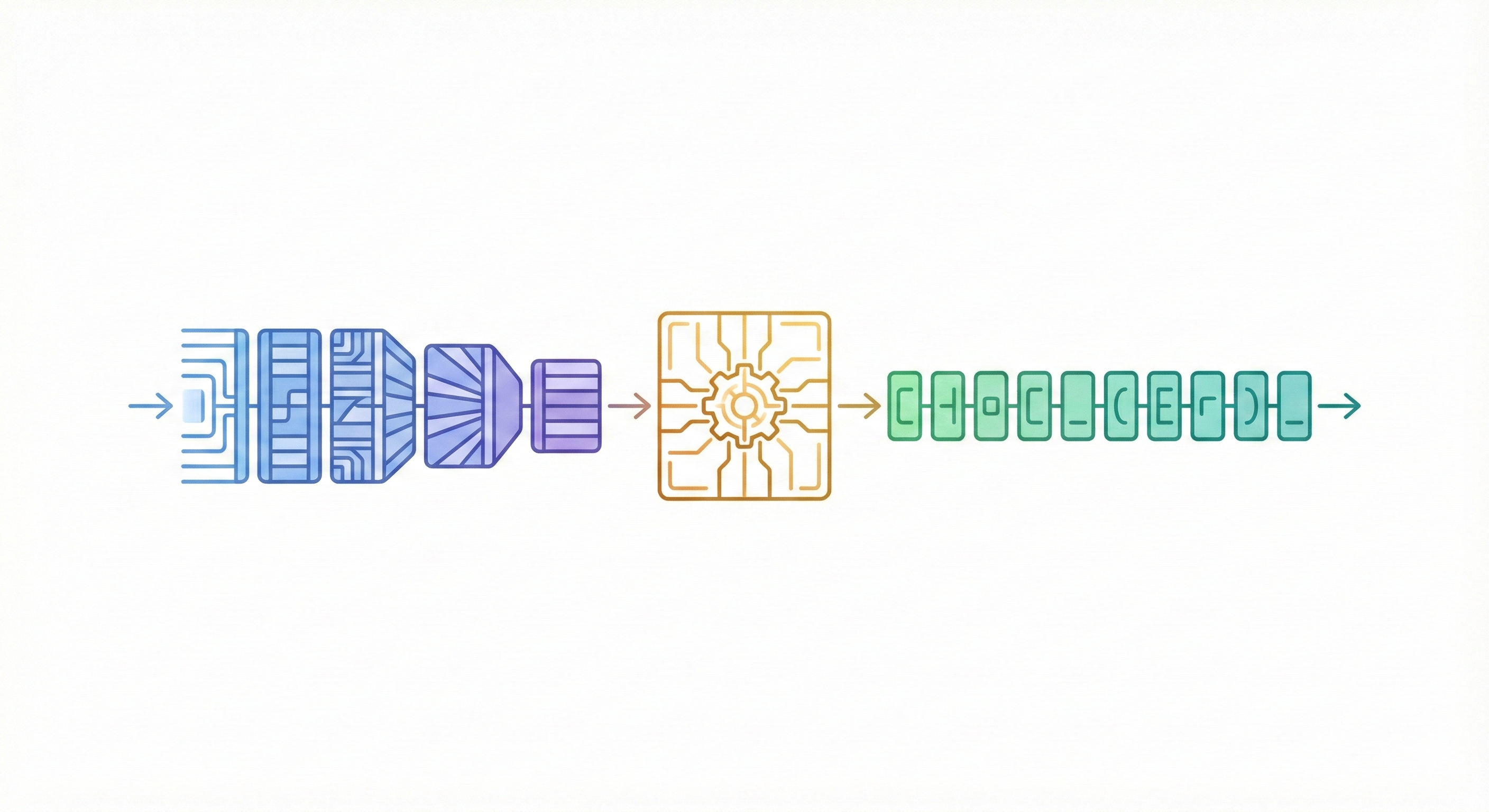

The key novelty is treating chemical nomenclature translation as a character-level sequence-to-sequence problem using a Transformer architecture, specifically using InChI as the source language.

- Unlike standard neural machine translation (NMT), which usually tokenizes text into words or sub-words, this model processes the InChI and predicts the IUPAC name character by character.

- It demonstrates that character-level tokenization outperforms sub-word tokenization (byte-pair encoding or unigram models) for this chemical task.

- It leverages InChI’s standardization to avoid the canonicalization issues inherent in SMILES-based approaches.
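A minimal Python sketch makes the character-level scheme concrete. Every character, including spaces, becomes one token, so no sub-word merges are learned. The special tokens (`<pad>`, `<s>`, `</s>`, `<unk>`) and the helper names are hypothetical. The paper's actual preprocessing and its 66/70-character vocabularies may differ.

```python
def char_tokenize(text: str) -> list[str]:
    # Character-level tokenization: each character, including spaces, is one token.
    return list(text)


def build_vocab(corpus: list[str]) -> dict[str, int]:
    # Hypothetical special tokens, followed by every character seen in the corpus.
    specials = ["<pad>", "<s>", "</s>", "<unk>"]
    chars = sorted({c for text in corpus for c in text})
    return {tok: i for i, tok in enumerate(specials + chars)}


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    # Wrap the sequence in start/end markers; unseen characters map to <unk>.
    unk = vocab["<unk>"]
    body = [vocab.get(c, unk) for c in char_tokenize(text)]
    return [vocab["<s>"]] + body + [vocab["</s>"]]


inchi = "InChI=1S/C2H6O/c1-2-3/h3H,2H2,1H3"  # ethanol
vocab = build_vocab([inchi])
print(char_tokenize("acetic acid"))  # the space between the words is its own token
print(encode(inchi, vocab))
```

A sub-word tokenizer would instead learn merged units such as `ethyl`. Here the Transformer has to compose such fragments from single characters itself.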

What experiments were performed?

- Training: The model was trained on 10 million InChI/IUPAC pairs sampled from PubChem.

- Ablation Studies: The authors experimentally validated architecture choices, finding that LSTM models and sub-word tokenization (BPE) performed worse than the Transformer with character tokenization. They also optimized dropout rates.

- Performance Benchmarking: The model was evaluated on a held-out test set of 200,000 samples.

- Commercial Comparison: The authors compared their model against four major commercial packages (ACD/I-Labs, ChemAxon, Mestrelab, and OpenEye's Lexichem, which generates the names in PubChem) on a 100-molecule test set.

- Error Analysis: They analyzed performance across different chemical classes (organics, charged species, macrocycles, inorganics) and visualized attention coefficients to interpret model focus.

What outcomes/conclusions?

- High Accuracy on Organics: The model achieved 91% whole-name accuracy on the test set, performing particularly well on organic compounds.

- Comparable to Commercial Tools: The edit distance between the model’s predictions and commercial packages (15-23%) was similar to the variation found between the commercial packages themselves (16-21%).

- Limitations on Inorganics: The model performed poorly on inorganic (14% accuracy) and organometallic compounds (20% accuracy). This is attributed to limitations in the standard InChI format (which disconnects metal atoms) and low training data coverage.

- Character-Level Superiority: Character-level tokenization was found to be essential; byte-pair encoding reduced accuracy significantly.

Reproducibility Details

Data

The dataset was derived from PubChem.

| Purpose | Dataset | Size | Notes |
|---|---|---|---|
| Raw | PubChem | 100M pairs | Filtered for length (InChI < 200 chars, IUPAC < 150 chars). |
| Training | Subsampled | 10M pairs | Random sample from the filtered set. |
| Validation | Held-out | 10,000 samples | Limited to InChI length > 50 chars. |
| Test | Held-out | 200,000 samples | Limited to InChI length > 50 chars. |
| Tokenization | Vocab | InChI: 66 chars; IUPAC: 70 chars | Character-level tokenization; spaces treated as tokens. |
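The filtering and splitting in the table can be sketched in Python. The length thresholds come from the table. Everything else is an assumption rather than the authors' pipeline:

- the order of operations: filter, shuffle, take the training sample, then draw validation and test sets from the remaining pairs whose InChI is longer than 50 characters;
- the function names;
- the seed.

```python
import random

MAX_INCHI_LEN = 200      # keep InChI strings shorter than this
MAX_IUPAC_LEN = 150      # keep IUPAC names shorter than this
MIN_EVAL_INCHI_LEN = 50  # held-out InChIs must be longer than this


def length_filter(pairs):
    """Keep (InChI, IUPAC name) pairs within the length limits."""
    return [
        (inchi, name)
        for inchi, name in pairs
        if len(inchi) < MAX_INCHI_LEN and len(name) < MAX_IUPAC_LEN
    ]


def split(pairs, n_train, n_valid, n_test, seed=0):
    """Random training sample; validation/test drawn from the remaining pairs."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    train, rest = shuffled[:n_train], shuffled[n_train:]
    # Only pairs with longer InChIs are eligible for the held-out sets.
    eligible = [p for p in rest if len(p[0]) > MIN_EVAL_INCHI_LEN]
    valid = eligible[:n_valid]
    test = eligible[n_valid:n_valid + n_test]
    return train, valid, test
```

At the paper's scale the call would be `split(filtered, 10_000_000, 10_000, 200_000)`.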

Algorithms

- Architecture Type: Transformer Encoder-Decoder.

- Optimization: Adam optimizer ($\beta_1=0.9, \beta_2=0.998$).

- Learning Rate: Linear warmup over 8000 steps to a peak of 0.0005, followed by decay proportional to the inverse square root of the step number.

- Regularization:

  - Dropout: 0.1 (applied to dense and attentional layers).

  - Label Smoothing: Magnitude 0.1.

- Training Strategy: Teacher forcing used for both training and validation.

- Inference: Beam search with width 10 and length penalty 1.0.
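The warmup-then-decay schedule fits in a small function. This sketch assumes the decay is anchored so the rate is continuous at the end of warmup, i.e. $\eta(t) = \eta_{\max}\min(t/w, \sqrt{w/t})$ with $w = 8000$ and $\eta_{\max} = 0.0005$. The exact formula in the authors' training toolkit is not given here, and the function name is hypothetical.

```python
import math


def learning_rate(step: int, peak_lr: float = 5e-4, warmup_steps: int = 8000) -> float:
    """Linear warmup to peak_lr, then inverse-square-root decay (assumed form)."""
    if step <= 0:
        return 0.0
    if step <= warmup_steps:
        # Linear ramp: reaches peak_lr exactly at the last warmup step.
        return peak_lr * step / warmup_steps
    # Decay proportional to 1/sqrt(step), scaled to be continuous at warmup_steps.
    return peak_lr * math.sqrt(warmup_steps / step)


for s in (1000, 8000, 32000, 128000):
    print(s, learning_rate(s))
```

Under this form, quadrupling the step count after warmup halves the learning rate.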

Models

- Structure: 6 layers in encoder, 6 layers in decoder.

- Attention: 8 heads per attention sub-layer.

- Dimensions:

  - Feed-forward hidden state size: 2048.

  - Embedding vector length: 512.

- Initialization: Glorot’s method.

- Position: Positional encodings added to the character embedding vectors.
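The source only says that a positional encoding is added to the token vectors. The plain-Python sketch below assumes the sinusoidal encoding of the original Transformer (Vaswani et al., 2017), using the embedding length of 512 listed above. A learned positional embedding would also fit the description, and the function names are hypothetical.

```python
import math


def sinusoidal_positional_encoding(max_len: int, d_model: int = 512) -> list[list[float]]:
    """PE[pos][2k] = sin(pos / 10000^(2k/d)), PE[pos][2k+1] = cos(same angle)."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i = 2k, the even dimension index
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


def add_positions(embeddings: list[list[float]], pe: list[list[float]]) -> list[list[float]]:
    """Add the encoding for position t to the embedding of the t-th character."""
    return [[e + p for e, p in zip(vec, pe[t])] for t, vec in enumerate(embeddings)]


pe = sinusoidal_positional_encoding(max_len=200)  # InChIs are filtered to < 200 chars
```

Each position gets a distinct, deterministic pattern. This lets an attention-only model tell apart otherwise identical characters, such as the repeated `H` in an InChI hydrogen layer.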

Evaluation

Metrics reported include Whole-Name Accuracy (percentage of exact matches) and Normalized Edit Distance (Damerau-Levenshtein, scale 0-1).

| Metric | Value | Baseline | Notes |
|---|---|---|---|
| Accuracy (All) | 91% | N/A | Test set of 200k samples. |
| Accuracy (Rajan) | 72% | N/A | Comparative ML model. |
| Edit Dist (Organic) | $0.02 \pm 0.03$ | N/A | Very high similarity for organics. |
| Edit Dist (Inorganic) | $0.32 \pm 0.20$ | N/A | Poor performance on inorganics. |
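Both metrics can be sketched in Python. This sketch makes two assumptions the source does not specify:

- It uses the restricted Damerau-Levenshtein variant (optimal string alignment). This variant counts adjacent transpositions as one edit but never edits a substring twice.
- It divides the distance by the length of the longer string to put it on the 0 to 1 scale.

The function names are hypothetical.

```python
def osa_distance(a: str, b: str) -> int:
    """Restricted Damerau-Levenshtein (optimal string alignment) distance."""
    m, n = len(a), len(b)
    d = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m + 1):
        d[i][0] = i  # delete all of a[:i]
    for j in range(n + 1):
        d[0][j] = j  # insert all of b[:j]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # adjacent transposition
    return d[m][n]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance scaled to [0, 1] by the longer string (assumed normalization)."""
    longest = max(len(pred), len(ref))
    return 0.0 if longest == 0 else osa_distance(pred, ref) / longest


def whole_name_accuracy(preds: list[str], refs: list[str]) -> float:
    """Fraction of predicted names that match the reference exactly."""
    if not refs or len(preds) != len(refs):
        raise ValueError("need equal-length, non-empty prediction and reference lists")
    return sum(p == r for p, r in zip(preds, refs)) / len(refs)


print(normalized_edit_distance("propan-1-ol", "propan-2-ol"))  # one substitution in 11 chars
```

The locant example shows why both metrics are reported. A one-character slip scores near zero on edit distance yet counts as a complete miss for whole-name accuracy, even though it names a different compound.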

Hardware

- GPU: Tesla K80.

- Training Time: 7 days.

- Throughput: ~6000 tokens/sec (InChI) and ~3800 tokens/sec (IUPAC).

- Batch Size: 4096 tokens (approx. 30 compounds).
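A greedy batching sketch in Python illustrates the token-based batch size. It assumes two things: a batch is measured as the number of sequences times the longest sequence in it (i.e. padding counts), and examples are packed in order. How the authors' toolkit counted tokens is not stated, and the function name is hypothetical. Under these assumptions, about 30 compounds per 4096 tokens means padded sequences of roughly 4096 / 30 ≈ 137 tokens.

```python
def token_batches(lengths: list[int], max_tokens: int = 4096) -> list[list[int]]:
    """Greedily group example indices so each padded batch fits in max_tokens.

    A batch costs len(batch) * (longest sequence in the batch), padding included.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    current_max = 0
    for idx, length in enumerate(lengths):
        if length > max_tokens:
            raise ValueError(f"example {idx} alone exceeds {max_tokens} tokens")
        new_max = max(current_max, length)
        if current and new_max * (len(current) + 1) > max_tokens:
            # Adding this example would overflow the budget: close the batch.
            batches.append(current)
            current, new_max = [], length
        current.append(idx)
        current_max = new_max
    if current:
        batches.append(current)
    return batches


# 60 sequences of 136 tokens: 30 * 136 = 4080 fits, 31 * 136 = 4216 does not.
print([len(b) for b in token_batches([136] * 60)])  # [30, 30]
```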

Citation

@article{handselTranslatingInChIAdapting2021a,
  title = {Translating the {{InChI}}: Adapting Neural Machine Translation to Predict {{IUPAC}} Names from a Chemical Identifier},
  shorttitle = {Translating the {{InChI}}},
  author = {Handsel, Jennifer and Matthews, Brian and Knight, Nicola J. and Coles, Simon J.},
  year = 2021,
  month = oct,
  journal = {Journal of Cheminformatics},
  volume = {13},
  number = {1},
  pages = {79},
  issn = {1758-2946},
  doi = {10.1186/s13321-021-00535-x},
  urldate = {2025-12-20},
  abstract = {We present a sequence-to-sequence machine learning model for predicting the IUPAC name of a chemical from its standard International Chemical Identifier (InChI). The model uses two stacks of transformers in an encoder-decoder architecture, a setup similar to the neural networks used in state-of-the-art machine translation. Unlike neural machine translation, which usually tokenizes input and output into words or sub-words, our model processes the InChI and predicts the IUPAC name character by character. The model was trained on a dataset of 10 million InChI/IUPAC name pairs freely downloaded from the National Library of Medicine's online PubChem service. Training took seven days on a Tesla K80 GPU, and the model achieved a test set accuracy of 91\%. The model performed particularly well on organics, with the exception of macrocycles, and was comparable to commercial IUPAC name generation software. The predictions were less accurate for inorganic and organometallic compounds. This can be explained by inherent limitations of standard InChI for representing inorganics, as well as low coverage in the training data.},
  langid = {english},
  keywords = {Attention,GPU,InChI,IUPAC,seq2seq,Transformer}
}