What kind of paper is this?

This is primarily a Method paper with significant Resource contributions.

- Methodological Basis: The paper proposes a novel training pipeline (“mix-sourced distillation”) and a domain-specific reinforcement learning stage (built on the DAPO algorithm) to give chemical LLMs reasoning capabilities. It validates these methods with ablation studies and comparisons against state-of-the-art (SOTA) models.

- Resource Contribution: It introduces ChemFG, a 101 billion-token corpus enriched with “atomized” knowledge about functional groups and reaction centers.

What is the motivation?

Current chemical LLMs struggle with reasoning for two main reasons:

- Shallow Domain Understanding: Models often learn molecule-level properties directly, bypassing the “atomized” knowledge (e.g., functional groups) that fundamentally determines chemical behavior.

- Limited Reasoning Capability: Chemical logic differs significantly from that of general domains such as math and code. Standard distillation from general-purpose reasoning models (like DeepSeek-R1) often fails because even powerful teacher models lack specific chemical insight.

What is the novelty here?

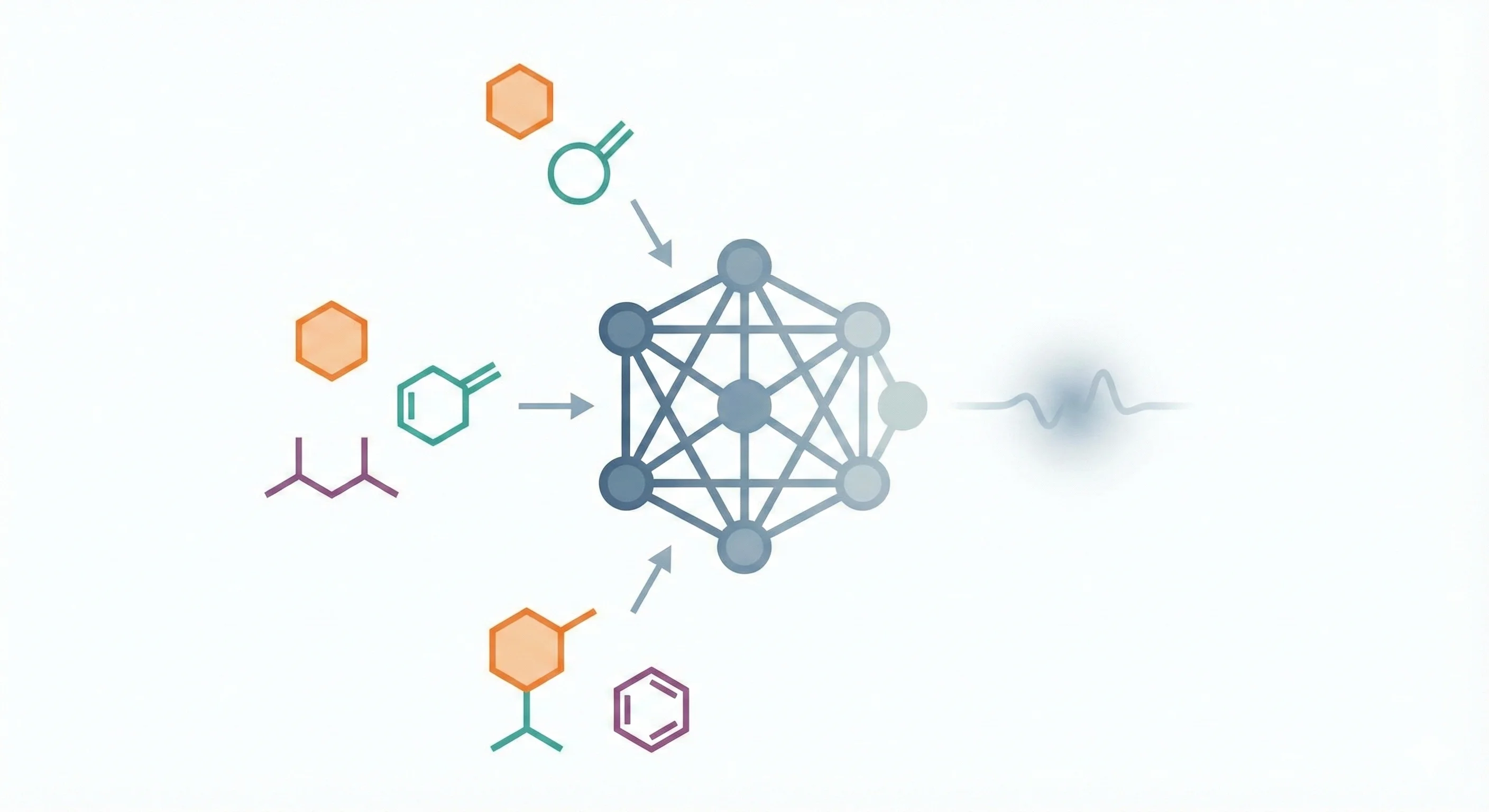

The authors introduce three key technical innovations to address the “reasoning gap”:

Atomized Knowledge Enhancement (ChemFG): A toolkit was developed to identify functional groups, and how they change during reactions, using SMARTS patterns and atom mapping. It was used to build a 101B-token pre-training corpus in which this structural knowledge is made explicit.

Mix-Sourced Distillation: The teacher models (DeepSeek-R1, o3-mini) are given “pseudo-reasoning” built from ground-truth answers and functional group information. This guides the teachers to generate high-quality chemical rationales, which the student model then learns from.

Chemical Reinforcement Learning: The model then undergoes domain-specific RL with the DAPO algorithm, using format and accuracy rewards across a wide range of chemical tasks.
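DAPO (Decoupled Clip and Dynamic sAmpling Policy Optimization) is a GRPO-style policy-gradient method. As a rough illustration of its core pieces, the plain-Python sketch below shows group-normalized advantages, the dynamic-sampling filter that drops prompts whose rollouts all receive the same reward, and a token-level clipped loss with separate lower and upper clip bounds. It is not the authors' training code; the function names are hypothetical, and the clip values 0.2/0.28 are illustrative defaults, since this summary does not report the ones ChemDFM-R used.

```python
import math
from typing import List, Sequence


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Normalize each rollout's reward by the mean and (population) std of its group."""
    n = len(rewards)
    mean = sum(rewards) / n
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / n)
    return [(r - mean) / (std + eps) for r in rewards]


def keep_group(rewards: Sequence[float]) -> bool:
    """Dynamic sampling: keep a prompt only if its rollout rewards are not all equal
    (an all-equal group has zero advantage and contributes no gradient)."""
    return max(rewards) > min(rewards)


def dapo_loss(
    ratios: Sequence[Sequence[float]],
    advantages: Sequence[float],
    eps_low: float = 0.2,   # illustrative value, not taken from the paper
    eps_high: float = 0.28,  # illustrative value, not taken from the paper
) -> float:
    """Token-level clipped surrogate with a decoupled (asymmetric) clip range.

    ratios[i][t] is pi_new / pi_old for token t of rollout i; advantages[i] is
    shared by every token of rollout i. Dividing by the total token count of the
    whole group, rather than averaging per sequence, is the token-level averaging.
    """
    total_tokens = sum(len(seq) for seq in ratios)
    objective = 0.0
    for seq, adv in zip(ratios, advantages):
        for ratio in seq:
            clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
            objective += min(ratio * adv, clipped * adv)
    return -objective / total_tokens  # negate because optimizers minimize
```

Raising only the upper bound (`eps_high > eps_low`) lets low-probability tokens with positive advantage grow faster, which is the motivation DAPO gives for decoupling the two bounds.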

What experiments were performed?

The model was evaluated on two comprehensive chemical benchmarks: SciKnowEval (19 tasks) and ChemEval (36 tasks).

- Baselines: Compared against general models (Qwen2.5-14B-Instruct, GPT-4o, DeepSeek-R1) and domain-specific models (ChemLLM, MolInst, ChemDFM).

- Ablation: Performance was tracked across training stages: Base → ChemDFM-I (Instruction Tuned) → ChemDFM-R (Reasoning/RL) to isolate improvements.

- Qualitative Analysis: Expert manual inspection of generated rationales to verify chemical logic.

What outcomes/conclusions?

- Performance: ChemDFM-R achieves SOTA performance on molecule-centric and reaction-centric tasks, outperforming significantly larger models like DeepSeek-R1 and GPT-4o on specific benchmarks.

- Reasoning Quality: The model generates interpretable rationales that help users verify answers (e.g., identifying reaction mechanisms correctly).

- Limitations: The model still struggles with tasks involving numerical prediction and calculation (e.g., Yield Extraction), where it sometimes underperforms the base model.

Reproducibility Details

Data

The training data is constructed in three phases:

1. Domain Pre-training (ChemFG):

- Size: 101 billion tokens

- Composition:

- 12M literature documents (79B tokens)

- 30M molecules from PubChem/PubChemQC

- 7M reactions from USPTO-FULL

- Augmentation: SMILES augmentation (10x) using R-SMILES

- Atomized Features: Annotated with a custom “Functional Group Identification Toolkit” that identifies 241 functional group types and tracks changes in reaction centers

2. Instruction Tuning:

- Sources: Molecule-centric (PubChem, MoleculeNet), Reaction-centric (USPTO), and Knowledge-centric (Exams, Literature QA) tasks

- Mixing: Mixed with general instruction data in a 1:2 ratio

3. Distillation Dataset:

- Sources:

- ~70% ChemDFM-R instruction data

- ~22% constructed pseudo-reasoning (functional group descriptions)

- ~8% teacher rationales (from DeepSeek-R1/o3-mini)

- Mixing: Mixed with general data (including AM-Deepseek-R1-Distill-1.4M) in a 1:2 ratio
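The source proportions and mixing ratio above can be made concrete with a small sampling sketch. It assumes that “1:2” means one chemistry example for every two general examples and that sources are drawn with replacement; neither detail is stated in the summary, and the function names are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple


def sample_by_proportion(
    pools: Dict[str, Sequence[str]],
    proportions: Dict[str, float],
    total: int,
    seed: int = 0,
) -> List[str]:
    """Draw round(total * share) examples from each pool, with replacement.
    (Rounding means the result can differ slightly from `total` for some shares.)"""
    rng = random.Random(seed)
    mixed: List[str] = []
    for name, share in proportions.items():
        mixed.extend(rng.choices(list(pools[name]), k=round(total * share)))
    return mixed


def mix_with_general(
    chem: Sequence[str],
    general: Sequence[str],
    ratio: Tuple[int, int] = (1, 2),  # assumed: chem : general
    seed: int = 0,
) -> List[str]:
    """Add general examples so that chem : general == ratio[0] : ratio[1], then shuffle."""
    rng = random.Random(seed)
    n_general = len(chem) * ratio[1] // ratio[0]
    mixed = list(chem) + rng.choices(list(general), k=n_general)
    rng.shuffle(mixed)
    return mixed


# ~70% instruction data, ~22% pseudo-reasoning, ~8% teacher rationales
proportions = {"instruction": 0.70, "pseudo_reasoning": 0.22, "teacher": 0.08}
```

With `total=100`, this yields 70/22/8 chemistry examples, and mixing at 1:2 then adds 200 general examples for a 300-example training set.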

Algorithms

Functional Group Identification:

- Extends the thermo library’s SMARTS list

- For reactions, identifies “reacting functional groups” by finding reactants containing atoms involved in bond changes (reaction centers) that do not appear in the product
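The reaction-center step can be sketched in plain Python. In the real toolkit, functional groups come from SMARTS matching on atom-mapped reactions. Here, both the bond tables and the group assignments are hand-written toy inputs, hydrogens are omitted, and the function names are hypothetical. An atom belongs to the reaction center if any of its bonds is formed, broken, or changes order; a reactant group counts as “reacting” if it touches the center and does not survive unchanged in the product.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Bond = FrozenSet[int]               # pair of atom-map numbers
Group = Tuple[str, FrozenSet[int]]  # (functional group name, atom maps it covers)


def reaction_center(reactant_bonds: Dict[Bond, int], product_bonds: Dict[Bond, int]) -> Set[int]:
    """Atom maps of atoms whose bonds are formed, broken, or change order."""
    center: Set[int] = set()
    for bond in set(reactant_bonds) | set(product_bonds):
        if reactant_bonds.get(bond, 0) != product_bonds.get(bond, 0):
            center |= bond
    return center


def reacting_groups(reactant_groups: List[Group], product_groups: List[Group], center: Set[int]) -> List[Group]:
    """Reactant groups that touch the reaction center and are absent from the product."""
    surviving = set(product_groups)
    return [g for g in reactant_groups if g[1] & center and g not in surviving]


# Fischer esterification, heavy atoms only: CH3-C(=O)-OH + HO-CH3 -> CH3-C(=O)-O-CH3 + H2O
# maps: 1 CH3, 2 carbonyl C, 3 carbonyl O, 4 acid OH oxygen, 5 alcohol O, 6 alcohol CH3
reactant_bonds = {frozenset({1, 2}): 1, frozenset({2, 3}): 2, frozenset({2, 4}): 1, frozenset({5, 6}): 1}
product_bonds = {frozenset({1, 2}): 1, frozenset({2, 3}): 2, frozenset({2, 5}): 1, frozenset({5, 6}): 1}
center = reaction_center(reactant_bonds, product_bonds)
groups = reacting_groups(
    [("carboxylic acid", frozenset({2, 3, 4})), ("hydroxyl", frozenset({5}))],
    [("ester", frozenset({2, 3, 5}))],
    center,
)
print(sorted(center))                # [2, 4, 5]
print([name for name, _ in groups])  # ['carboxylic acid', 'hydroxyl']
```

The C–OH bond of the acid breaks and a C–O bond to the alcohol oxygen forms, so both the carboxylic acid and the hydroxyl group are flagged as reacting, while the methyl carbons stay outside the center.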

Mix-Sourced Distillation:

- Teacher models (DeepSeek-R1, o3-mini) are prompted with Question + Ground Truth + Functional Group Info to generate high-quality “Thoughts”

- These rationales are distilled into the student model
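To illustrate how a teacher might be conditioned on the question, the ground truth, and functional group information, here is a prompt-assembly sketch. The wording and layout of the prompt are assumptions made for illustration; the paper's actual template is not reproduced in this summary, and `build_teacher_prompt` is a hypothetical name.

```python
from typing import List


def build_teacher_prompt(question: str, answer: str, functional_groups: List[str]) -> str:
    """Assemble a hypothetical 'pseudo-reasoning' prompt for a teacher model."""
    # Fall back to an explicit placeholder when no groups were identified.
    fg_lines = "\n".join(f"- {fg}" for fg in functional_groups) or "- (none identified)"
    return (
        "You are given a chemistry question together with its correct answer and "
        "structural hints. Explain step by step how to reach the answer.\n\n"
        f"Question:\n{question}\n\n"
        f"Functional group information:\n{fg_lines}\n\n"
        f"Ground-truth answer:\n{answer}\n\n"
        "Write your reasoning, then restate the final answer."
    )


prompt = build_teacher_prompt(
    "Which product forms when ethanol is oxidized with excess K2Cr2O7?",
    "CC(=O)O",
    ["hydroxyl (primary alcohol)"],
)
```

Because the answer is supplied up front, the teacher only has to explain the answer rather than discover it. This is what lets a general-purpose reasoner produce usable chemical rationales even when it would not solve the task on its own.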

Reinforcement Learning:

- Algorithm: DAPO (Decoupled Clip and Dynamic sAmpling Policy Optimization)

- Hyperparameters: learning rate 5e-7, rollout batch size 512, training batch size 128

- Rewards: Format rewards (structure adherence) + Accuracy rewards (canonicalized SMILES matching)
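A rule-based reward of the kind described (format plus canonicalized-SMILES accuracy) might look like the sketch below. The `<think>…</think>` output template, the equal weighting of the two terms, and the function names are all assumptions. The canonicalizer is passed in so that the sketch runs without chemistry libraries.

```python
import re
from typing import Callable, Optional

# Assumed output template: reasoning inside <think>...</think>, final answer after it.
_PATTERN = re.compile(r"^<think>.+?</think>\s*(?P<answer>\S.*)$", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed template, else 0.0."""
    return 1.0 if _PATTERN.match(completion.strip()) else 0.0


def extract_answer(completion: str) -> Optional[str]:
    m = _PATTERN.match(completion.strip())
    return m.group("answer").strip() if m else None


def accuracy_reward(
    completion: str, reference: str, canonicalize: Callable[[str], Optional[str]]
) -> float:
    """1.0 if the predicted and reference SMILES canonicalize to the same string."""
    pred = extract_answer(completion)
    if pred is None:
        return 0.0
    pred_c = canonicalize(pred)
    return 1.0 if pred_c is not None and pred_c == canonicalize(reference) else 0.0


def total_reward(
    completion: str, reference: str, canonicalize: Callable[[str], Optional[str]]
) -> float:
    # Equal weighting of the two terms is an assumption.
    return format_reward(completion) + accuracy_reward(completion, reference, canonicalize)


# Toy canonicalizer for illustration: maps equivalent SMILES to one form, None if unknown.
toy_canon = {"OCC": "CCO", "CCO": "CCO"}.get
```

With RDKit installed, a real canonicalizer could return `Chem.MolToSmiles(mol)` for `mol = Chem.MolFromSmiles(s)`, or `None` when parsing fails. Comparing canonical strings means that `OCC` and `CCO` both count as correct answers for ethanol.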

Models

- Base Model: Qwen2.5-14B

- ChemDFM-I: Result of instruction tuning the domain-pretrained model for 2 epochs

- ChemDFM-R: Result of applying mix-sourced distillation (1 epoch) followed by RL on ChemDFM-I

Evaluation

Benchmarks:

- SciKnowEval: 19 tasks (text-centric, molecule-centric, reaction-centric)

- ChemEval: 36 tasks, categorized similarly

Key Metrics: Accuracy, F1 Score, BLEU score

| Benchmark (overall score) | ChemDFM-R | Baseline (Qwen2.5-14B) | Notes |
|---|---|---|---|
| ChemEval (all tasks) | 0.78 | 0.57 | Significant boost in molecule/reaction tasks |
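Reading the table's two scores as the model's overall ChemEval score (0.78) and the Qwen2.5-14B baseline's (0.57), the gain works out to:

$$
\Delta_{\text{abs}} = 0.78 - 0.57 = 0.21, \qquad
\Delta_{\text{rel}} = \frac{0.21}{0.57} \approx 0.368 \;(\approx 36.8\%)
$$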

Paper Information

Citation: Zhao, Z., Chen, B., Wan, Z., Chen, L., Lin, X., Chen, X., & Yu, K. (2025). ChemDFM-R: An Chemical Reasoner LLM Enhanced with Atomized Chemical Knowledge. arXiv preprint arXiv:2507.21990. https://doi.org/10.48550/arXiv.2507.21990

Publication: arXiv 2025

@misc{zhao2025chemdfmr,
title={ChemDFM-R: An Chemical Reasoner LLM Enhanced with Atomized Chemical Knowledge},
author={Zihan Zhao and Bo Chen and Ziping Wan and Lu Chen and Xuanze Lin and Xin Chen and Kai Yu},
year={2025},
eprint={2507.21990},
archivePrefix={arXiv},
primaryClass={cs.CE},
url={https://arxiv.org/abs/2507.21990}
}