What kind of paper is this?

This is primarily a Method paper ($\Psi_{\text{Method}}$), with a significant Resource component ($\Psi_{\text{Resource}}$).

It is a methodological investigation because it systematically evaluates a specific architecture (Transformers/RoBERTa) against established baselines (GNNs) to determine “how well does this work?” in the chemical domain. It ablates dataset size, tokenization, and input representation.

It is also a resource paper as it introduces “PubChem-77M,” a curated dataset of 77 million SMILES strings designed to facilitate large-scale self-supervised pretraining for the community.

What is the motivation?

The primary motivation is data scarcity in molecular property prediction. While Graph Neural Networks (GNNs) are effective, supervised learning is limited by the high cost of generating labeled data via laboratory testing.

By contrast, unlabeled data is abundant (millions of SMILES strings). Inspired by the success of Transformers in NLP, where self-supervised pretraining on large corpora yields strong transfer learning, the authors aim to use these large unlabeled chemical datasets to learn robust molecular representations. Transformers also benefit from a mature software ecosystem (HuggingFace) that may offer efficiency advantages over GNNs.

What is the novelty here?

While previous works have applied Transformers to SMILES, this paper presents one of the first systematic evaluations of how pretraining data scale, tokenization, and input representation affect performance in this domain. Specifically:

- Scaling Analysis: It explicitly tests how pretraining dataset size (100K to 10M) impacts downstream performance.

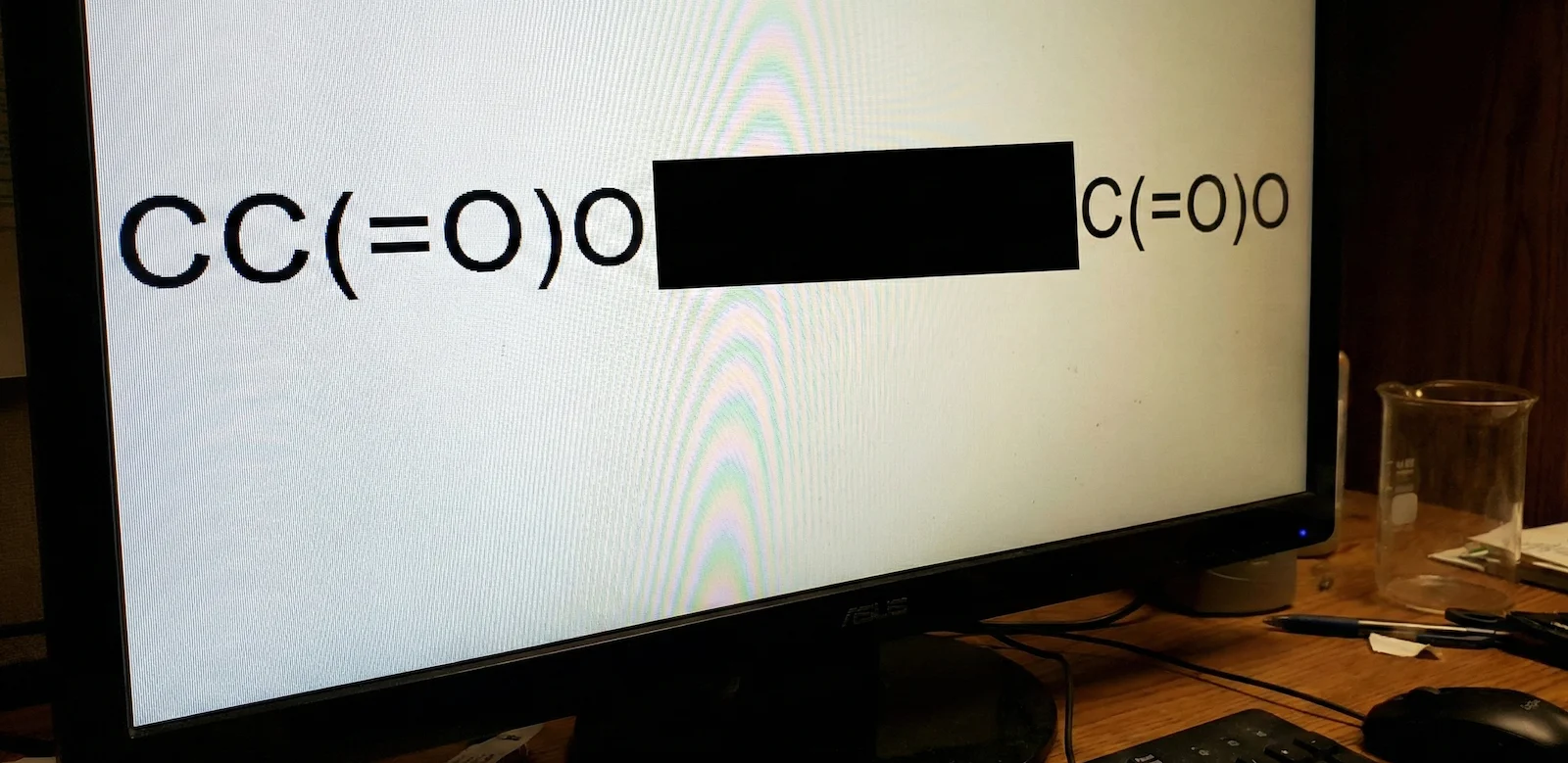

- Tokenizer Comparison: It compares standard NLP Byte-Pair Encoding (BPE) against a chemically-aware “SmilesTokenizer”.

- Representation Comparison: It evaluates whether the robust SELFIES string representation offers advantages over standard SMILES in a Transformer context.

What experiments were performed?

The authors trained ChemBERTa (based on RoBERTa) using Masked Language Modeling (MLM) on subsets of the PubChem dataset.

- Pretraining: Models were pretrained on dataset sizes of 100K, 250K, 1M, and 10M compounds.

- Baselines: Performance was compared against D-MPNN (Graph Neural Network), Random Forest (RF), and SVM using 2048-bit Morgan Fingerprints.

- Downstream Tasks: Finetuning was performed on MoleculeNet classification tasks: BBBP (blood-brain barrier), ClinTox (clinical toxicity), HIV, and Tox21 (p53 stress-response).

- Ablations:

  - Tokenization: BPE vs. SmilesTokenizer on the 1M dataset.

  - Input: SMILES vs. SELFIES strings on the Tox21 task.

What outcomes/conclusions?

- Scaling Laws: Performance scales consistently with pretraining data size. Increasing data from 100K to 10M improved ROC-AUC by +0.110 on average.

- Competitive performance: ChemBERTa approaches the D-MPNN baseline. For example, on Tox21, ChemBERTa-10M achieved a ROC-AUC of 0.728 compared to D-MPNN’s 0.752.

- Tokenization: The custom SmilesTokenizer narrowly outperformed BPE ($\Delta \text{PRC-AUC} = +0.015$), which hints that chemically meaningful tokenization may help, although the margin is small.

- SELFIES vs. SMILES: Surprisingly, using SELFIES provided no significant difference in performance compared to SMILES.

- Interpretability: Attention heads in the model were found to track chemically relevant features, such as specific functional groups and aromatic rings, mimicking the behavior of graph convolutions.

Reproducibility Details

Data

The authors curated a 77-million-compound dataset for pretraining and used standard MoleculeNet benchmarks for evaluation.

- Pretraining Data: PubChem-77M.

  - Source: 77 million unique SMILES from PubChem.

  - Preprocessing: Canonicalized and globally shuffled.

  - Subsets used: 100K, 250K, 1M, and 10M compounds.

- Evaluation Data: MoleculeNet.

  - Tasks (number of compounds): BBBP (2,039), ClinTox (1,478), HIV (41,127), Tox21 (7,831).

  - Splitting: 80/10/10 train/valid/test split using a scaffold splitter, which keeps molecules that share a scaffold in the same split so that evaluation is on structurally distinct compounds.
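
The scaffold split described above can be illustrated with a small standard-library sketch. This is not the splitter the authors used: the greedy largest-group-first assignment is one common strategy, and `scaffold_of` and `TOY_SCAFFOLDS` are hypothetical stand-ins for a real scaffold computation (in practice a cheminformatics toolkit such as RDKit would supply the scaffold for each molecule).

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple


def scaffold_split(
    smiles: Sequence[str],
    scaffold_of: Callable[[str], str],
    frac_train: float = 0.8,
    frac_valid: float = 0.1,
) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, valid, test) index lists; each scaffold group stays in one split."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for idx, smi in enumerate(smiles):
        groups[scaffold_of(smi)].append(idx)

    # Largest scaffold families first, so they fill the training set.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))

    n = len(smiles)
    train_cap = frac_train * n
    valid_cap = (frac_train + frac_valid) * n
    train: List[int] = []
    valid: List[int] = []
    test: List[int] = []
    for group in ordered:
        if len(train) + len(group) <= train_cap:
            train.extend(group)
        elif len(train) + len(valid) + len(group) <= valid_cap:
            valid.extend(group)
        else:
            test.extend(group)
    return train, valid, test


# Hypothetical toy data: hand-written molecule -> scaffold lookup (assumption).
TOY_SCAFFOLDS = {
    "c1ccccc1C": "c1ccccc1", "c1ccccc1O": "c1ccccc1", "c1ccccc1N": "c1ccccc1",
    "c1ccccc1Cl": "c1ccccc1", "c1ccccc1F": "c1ccccc1", "c1ccccc1Br": "c1ccccc1",
    "C1CCCCC1C": "C1CCCCC1", "C1CCCCC1O": "C1CCCCC1",
    "c1ccncc1C": "c1ccncc1",
    "C1CCOC1C": "C1CCOC1",
}
toy_smiles = list(TOY_SCAFFOLDS)
train_idx, valid_idx, test_idx = scaffold_split(toy_smiles, TOY_SCAFFOLDS.__getitem__)
print(len(train_idx), len(valid_idx), len(test_idx))
```

Because whole scaffold families are assigned together, no scaffold in the test set also appears in training, which is what makes this split harder than a random one.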

Algorithms

The core training methodology mirrors standard BERT/RoBERTa procedures adapted for chemical strings.

- Objective: Masked Language Modeling (MLM) with 15% token masking.

- Tokenization:

  - BPE: Byte-Pair Encoding (vocab size 52K).

  - SmilesTokenizer: Regex-based custom tokenizer available in DeepChem.

- Sequence Length: Maximum sequence length of 512 tokens.

- Finetuning: Appended a linear classification layer; backpropagated through the base model for up to 25 epochs with early stopping on ROC-AUC.
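
To make the tokenization and masking steps concrete, here is a minimal standard-library sketch. It rests on several assumptions: the regex is a widely used atom-level SMILES pattern and may differ in detail from DeepChem's SmilesTokenizer, the `<mask>` string and the function names are illustrative, and the masking is simplified to always substitute the mask token (BERT-style training also leaves some selected tokens unchanged or swaps in random ones).

```python
import random
import re
from typing import List, Optional, Tuple

# Atom-level SMILES regex (assumption: not necessarily DeepChem's exact pattern).
SMILES_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>>?|\*|\$|%[0-9]{2}|[0-9])"
)
MASK = "<mask>"  # illustrative mask token


def tokenize(smiles: str) -> List[str]:
    """Split a SMILES string into chemically meaningful tokens."""
    tokens = SMILES_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognized characters in SMILES: {smiles!r}")
    return tokens


def mask_tokens(
    tokens: List[str], mask_prob: float = 0.15, seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Mask about mask_prob of the tokens; labels hold the originals at masked positions."""
    if not tokens:
        return [], []
    rng = random.Random(seed)
    n_mask = max(1, round(mask_prob * len(tokens)))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    inputs = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    labels = [tok if i in positions else None for i, tok in enumerate(tokens)]
    return inputs, labels


aspirin = tokenize("CC(=O)Oc1ccccc1C(=O)O")
masked, targets = mask_tokens(aspirin)
print(masked)
print(targets)
```

The model is trained to predict the entries of `targets` that are not `None` from the surrounding unmasked tokens. Note that multi-character atoms such as `Cl` or bracketed atoms such as `[C@@H]` stay intact as single tokens, which is the main difference from a byte-level BPE vocabulary.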

Models

- Architecture: RoBERTa (via HuggingFace).

  - Layers: 6

  - Attention Heads: 12 per layer (6 × 12 = 72 distinct attention mechanisms in total).

- Baselines (via Chemprop library):

  - D-MPNN: Directed Message Passing Neural Network with default hyperparameters.

  - RF/SVM: Scikit-learn Random Forest and SVM using 2048-bit Morgan fingerprints (RDKit).
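
The head count follows directly from the encoder shape. The snippet below is only a bookkeeping sketch: `EncoderShape` is a hypothetical container, and its field names are borrowed from the HuggingFace RoBERTa configuration for familiarity. It enumerates every (layer, head) pair, which is the unit one would inspect when visualizing attention.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Tuple


@dataclass(frozen=True)
class EncoderShape:
    """Hypothetical container for the two shape parameters quoted above."""
    num_hidden_layers: int = 6
    num_attention_heads: int = 12

    def head_ids(self) -> List[Tuple[int, int]]:
        """All (layer, head) index pairs in the encoder."""
        return list(product(range(self.num_hidden_layers),
                            range(self.num_attention_heads)))


shape = EncoderShape()
print(len(shape.head_ids()))  # 6 layers x 12 heads = 72
```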

Evaluation

Performance is measured using two complementary metrics to account for the class imbalance common in toxicity datasets.

| Metric | Details |
|---|---|
| ROC-AUC | Area Under Receiver Operating Characteristic Curve |
| PRC-AUC | Area Under Precision-Recall Curve (vital for imbalanced data) |
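
A small worked example shows why both metrics are reported. The sketch below uses only the standard library and makes two assumptions: ROC-AUC is computed as the fraction of correctly ordered (positive, negative) pairs, and PRC-AUC is approximated by average precision, which may not match the exact estimator used in the paper. The labels and scores are made-up toy values.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Mean of the precision values at the rank of each positive example."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits = 0
    total = 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    if hits == 0:
        raise ValueError("need at least one positive label")
    return total / hits


# Toy imbalanced task: 2 positives among 10 examples (made-up values).
y_true = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
print(roc_auc(y_true, y_score), average_precision(y_true, y_score))
```

Here a single negative ranked above one positive barely moves ROC-AUC (15/16) but lowers average precision to 5/6, because precision is computed only over the top of the ranking. With few positives, that sensitivity is what makes the precision-recall view informative.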

Hardware

- Compute: Single NVIDIA V100 GPU.

- Training Time: Approximately 48 hours for the 10M compound subset.

- Carbon Footprint: Estimated 17.1 kg $\text{CO}_2\text{eq}$ (offset by Google Cloud).

Paper Information

Citation: Chithrananda, S., Grand, G., & Ramsundar, B. (2020). ChemBERTa: Large-Scale Self-Supervised Pretraining for Molecular Property Prediction. arXiv preprint arXiv:2010.09885. https://doi.org/10.48550/arXiv.2010.09885

Publication: arXiv 2020 (Preprint)

BibTeX

    @misc{chithranandaChemBERTaLargeScaleSelfSupervised2020,
      title = {{{ChemBERTa}}: {{Large-Scale Self-Supervised Pretraining}} for {{Molecular Property Prediction}}},
      shorttitle = {{{ChemBERTa}}},
      author = {Chithrananda, Seyone and Grand, Gabriel and Ramsundar, Bharath},
      year = 2020,
      month = oct,
      number = {arXiv:2010.09885},
      eprint = {2010.09885},
      primaryclass = {cs},
      publisher = {arXiv},
      doi = {10.48550/arXiv.2010.09885},
      urldate = {2025-12-24},
      archiveprefix = {arXiv}
    }