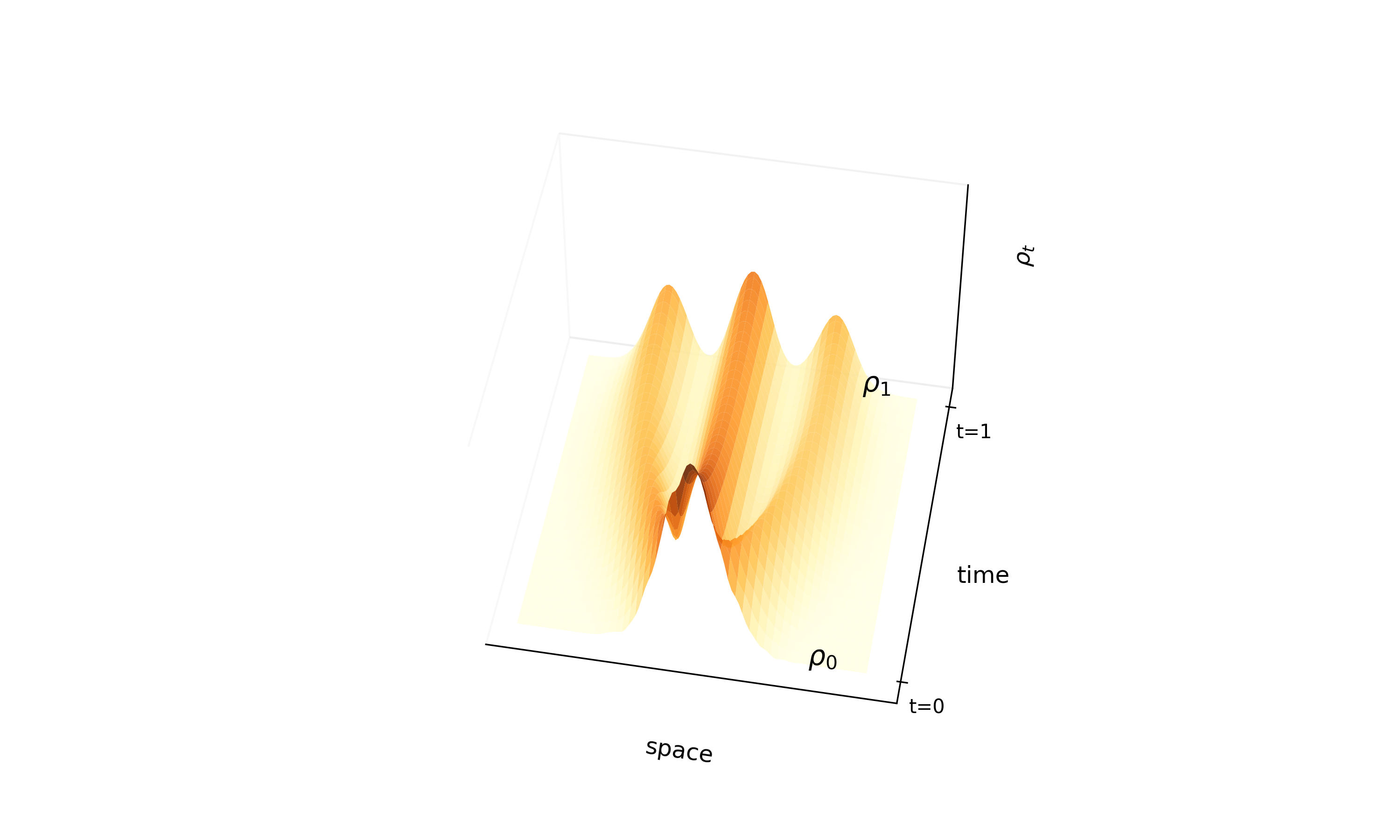

Building Normalizing Flows with Stochastic Interpolants

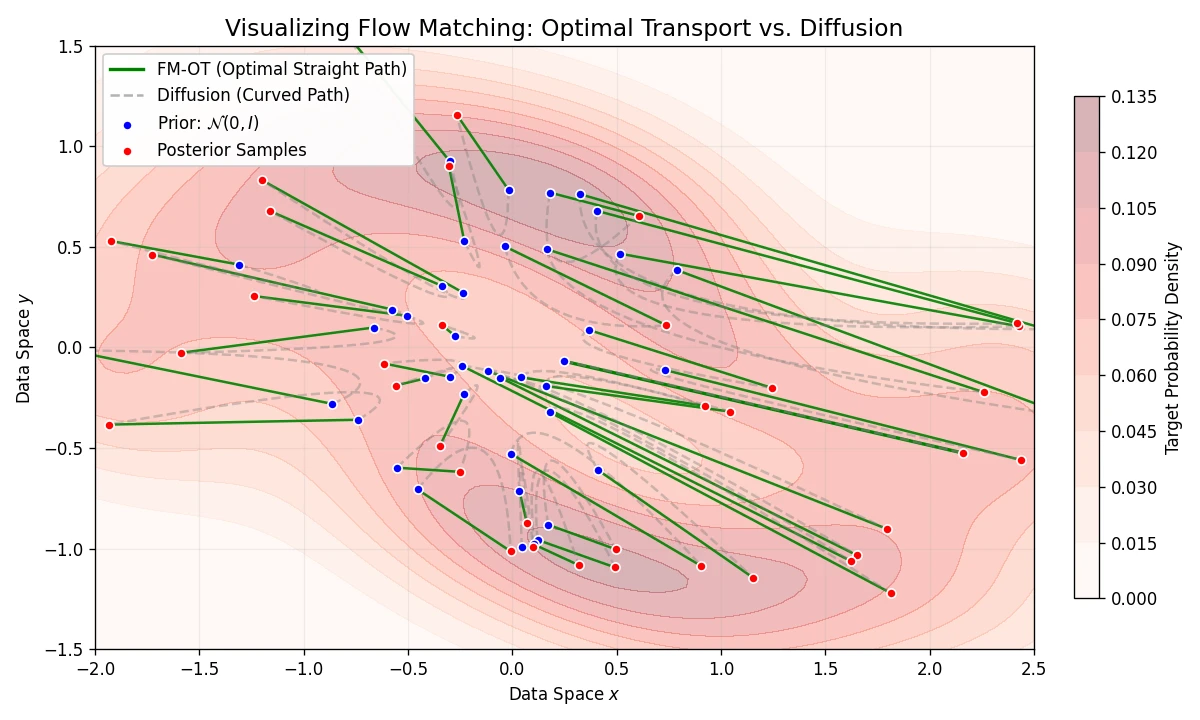

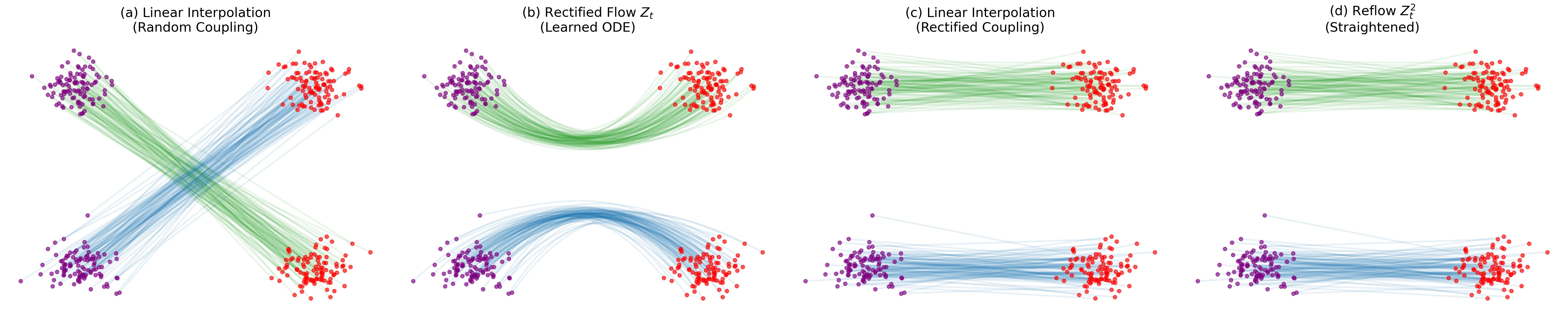

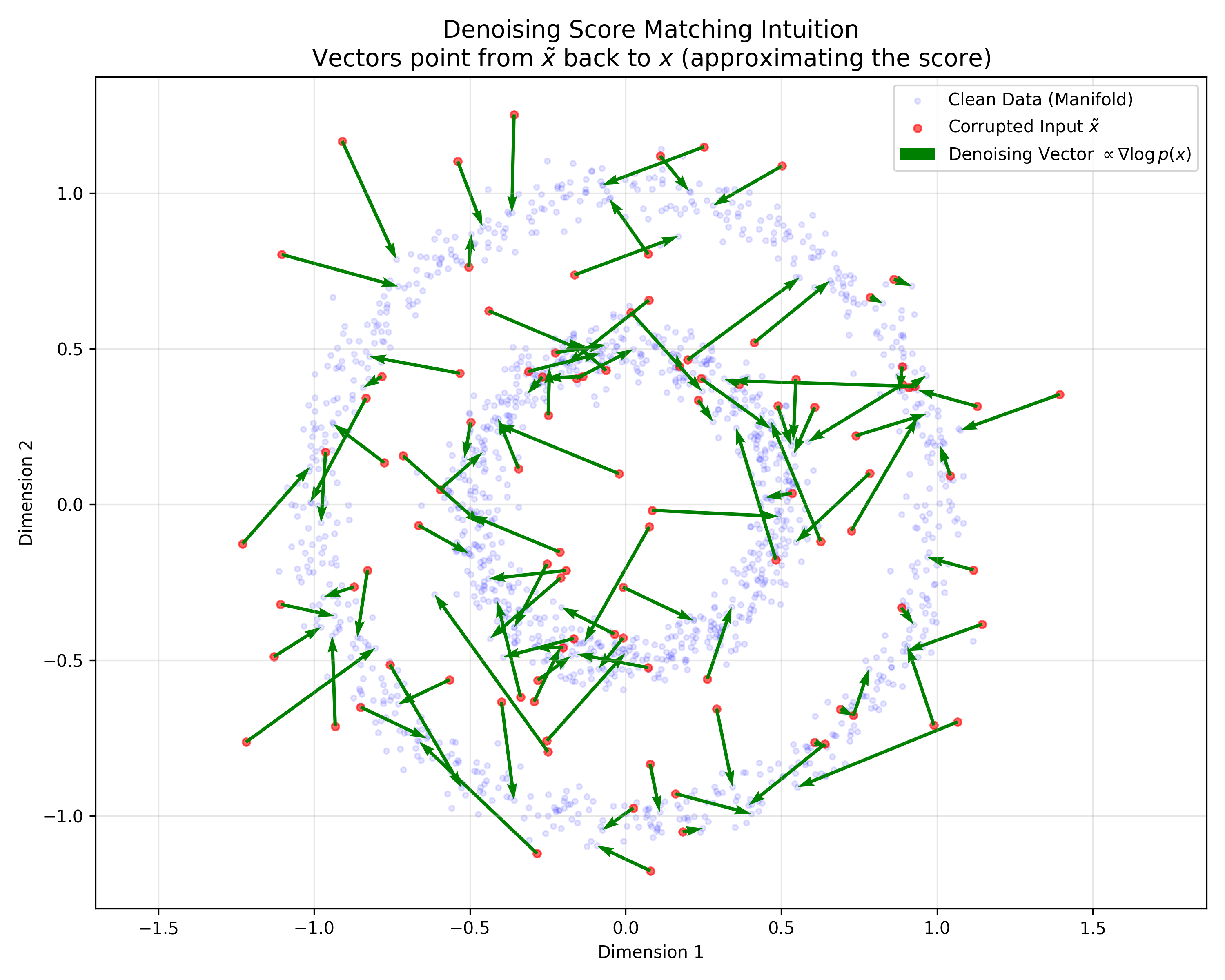

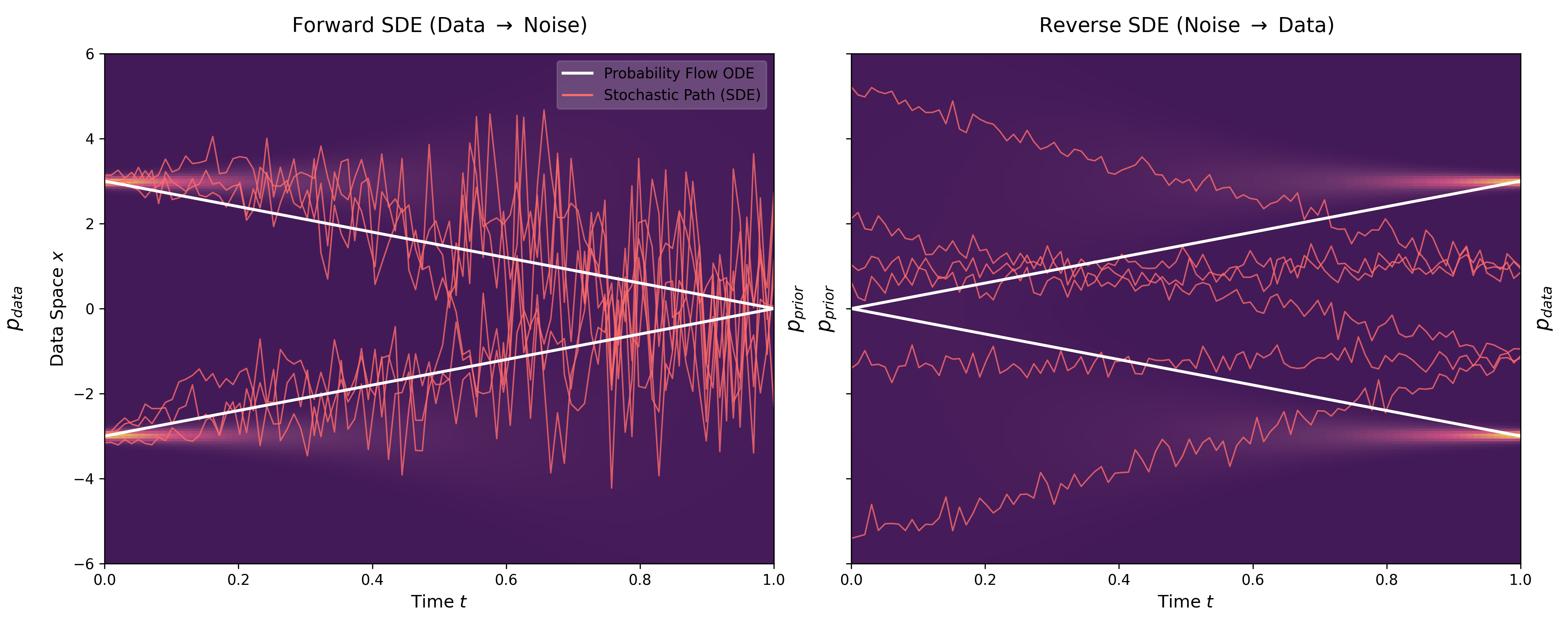

Proposes ‘InterFlow’, a method for learning continuous normalizing flows between two arbitrary densities using stochastic interpolants. Training avoids backpropagating through an ODE solver: the velocity field is instead fit by minimizing a simple quadratic objective, which makes ODE-based generation scalable.
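To make the quadratic objective concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes a linear interpolant x_t = (1 - t) x0 + t x1 (one possible choice of interpolant) and estimates the loss E[|v(x_t, t)|^2 - 2 ∂_t x_t · v(x_t, t)] by Monte Carlo. The function names `linear_interpolant` and `quadratic_velocity_loss` are hypothetical, and the toy data pairs each x1 with x0 by a fixed shift purely so the minimizing velocity is known in closed form.

```python
import numpy as np


def linear_interpolant(x0, x1, t):
    """Assumed interpolant x_t = (1 - t) * x0 + t * x1 and its time derivative."""
    t = t[:, None]                      # broadcast time over the feature axis
    x_t = (1.0 - t) * x0 + t * x1
    dx_t = x1 - x0                      # d/dt of the linear interpolant
    return x_t, dx_t


def quadratic_velocity_loss(velocity, x0, x1, t):
    """Monte Carlo estimate of E[|v|^2 - 2 * dx_t . v] at the interpolated points.

    No ODE is solved here: the loss only needs samples (x0, x1, t) and one
    evaluation of the candidate velocity field per sample.
    """
    x_t, dx_t = linear_interpolant(x0, x1, t)
    v = velocity(x_t, t)
    per_sample = np.sum(v * v, axis=1) - 2.0 * np.sum(dx_t * v, axis=1)
    return float(np.mean(per_sample))


# Toy check (illustrative data only): x1 is x0 translated by a fixed shift,
# so the velocity that transports x0 to x1 is the constant field `shift`.
rng = np.random.default_rng(0)
x0 = rng.normal(size=(1024, 2))
shift = np.array([3.0, -1.0])
x1 = x0 + shift
t = rng.uniform(size=1024)


def constant_velocity(x_t, t):
    # Candidate velocity field: the same vector everywhere.
    return np.broadcast_to(shift, x_t.shape)


def zero_velocity(x_t, t):
    # Baseline candidate: no motion at all.
    return np.zeros_like(x_t)


loss_true = quadratic_velocity_loss(constant_velocity, x0, x1, t)
loss_zero = quadratic_velocity_loss(zero_velocity, x0, x1, t)
print(loss_true, loss_zero)
```

In this toy setting the constant field attains a loss of -|shift|^2, lower than the zero field's loss of 0, which matches the idea that minimizing the objective recovers the transporting velocity. In practice `velocity` would be a neural network trained by stochastic gradient descent on this loss, and samples would then be generated by integrating the learned ODE.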