Understanding Count Vectorization

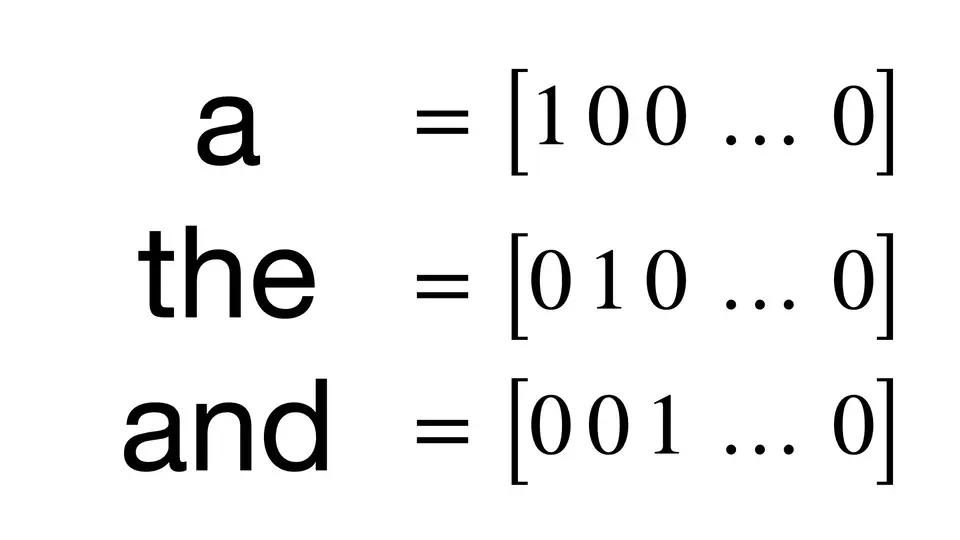

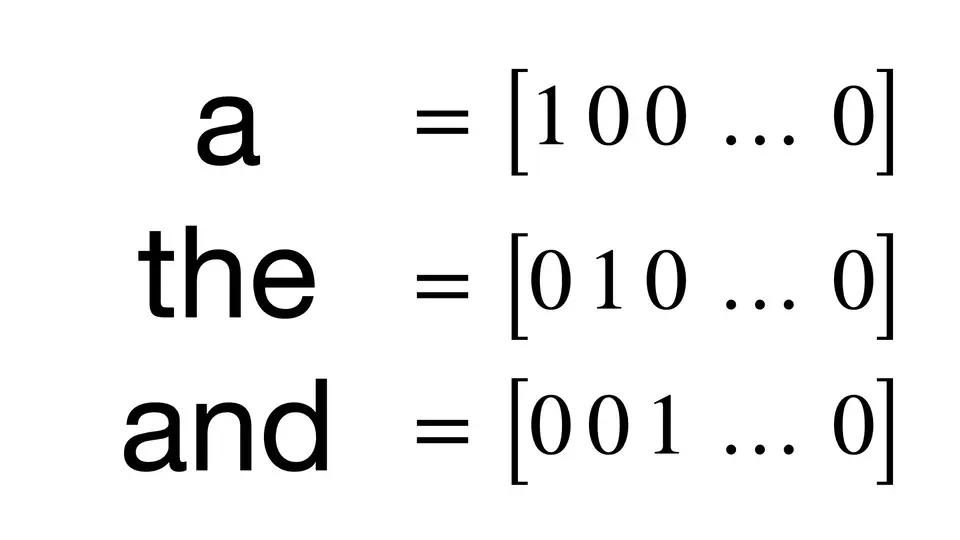

Count vectorization is a fundamental technique for converting text into numerical form that machine learning algorithms can process. This method creates sparse vector representations where each dimension corresponds to a unique word in the vocabulary, enabling us to quantify text content systematically.

This approach serves as the foundation for many NLP tasks, though it has limitations we’ll explore. For more advanced text representation methods, see my Introduction to Word Embeddings.

How Count Vectorization Works

Count vectorization transforms text through a systematic process:

- Build vocabulary: Create a dictionary of all unique words across the corpus

- Define dimensions: Each vector has length equal to vocabulary size

- Count occurrences: For each document, count how many times each vocabulary word appears

- Generate sparse vectors: Most elements are zero (words not in that document)
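The four steps above can be sketched in plain Python before reaching for a library. This is a minimal illustration, not how scikit-learn implements it: the helper names (`tokenize`, `build_vocabulary`, `count_vector`) and the toy corpus are assumptions made for this example, and the tokenizer simply lowercases text and splits on runs of word characters.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumption for this sketch: lowercase, then keep runs of word characters.
    return re.findall(r"\w+", text.lower())


def build_vocabulary(corpus):
    # Step 1: collect every unique token and give it a column index
    # (alphabetical order, so the mapping is deterministic).
    tokens = {tok for doc in corpus for tok in tokenize(doc)}
    return {tok: idx for idx, tok in enumerate(sorted(tokens))}


def count_vector(doc, vocabulary):
    # Steps 2-4: one slot per vocabulary word, filled with that word's count.
    vector = [0] * len(vocabulary)
    for tok, n in Counter(tokenize(doc)).items():
        if tok in vocabulary:  # words outside the vocabulary are ignored
            vector[vocabulary[tok]] = n
    return vector


corpus = ["the cat sat on the mat", "the dog chased the cat"]
vocabulary = build_vocabulary(corpus)
vectors = [count_vector(doc, vocabulary) for doc in corpus]

print(vocabulary)
for vec in vectors:
    print(vec)
```

Each document becomes a list as long as the vocabulary, with zeros for every word it does not contain.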

Key characteristics:

- High-dimensional: Vector length equals vocabulary size (often thousands of words)

- Sparse representation: Most dimensions contain zeros

- Frequency preservation: Maintains exact word counts

- Feature independence: Treats each word as an isolated feature
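The dimensionality and sparsity described above can be checked directly on the matrix that `CountVectorizer` returns. The sketch below uses a three-sentence toy corpus (an assumption for illustration) and measures what fraction of the matrix is actually non-zero.

```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stock prices fell sharply today",
]

# fit_transform returns a SciPy sparse matrix: only non-zero counts are stored.
X = CountVectorizer().fit_transform(corpus)

n_docs, n_features = X.shape
density = X.nnz / (n_docs * n_features)

print(f"Matrix shape: {X.shape}")
print(f"Stored non-zero entries: {X.nnz}")
print(f"Density: {density:.2f}")
```

Even on three short sentences, well under half of the cells are non-zero. On a real corpus with tens of thousands of vocabulary words the density is far lower, which is why the sparse format matters.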

This approach captures word frequencies accurately but misses semantic relationships between words. We’ll implement this using scikit-learn’s CountVectorizer.

Basic Implementation

Let’s start with a simple example to understand how count vectorization works in practice:

from sklearn.feature_extraction.text import CountVectorizer

# Initialize the vectorizer

vectorizer = CountVectorizer()

# Sample text for demonstration

sample_text = ["One of the most basic ways we can numerically represent words "

"is through the one-hot encoding method (also sometimes called "

"count vectorizing)."]

# Fit the vectorizer to our text data

vectorizer.fit(sample_text)

# Examine the vocabulary and word indices

print('Vocabulary:')

print(vectorizer.vocabulary_)

# Transform text to vectors

vector = vectorizer.transform(sample_text)

print('Full vector:')

print(vector.toarray())

# Transform individual words

print('Single word vector:')

print(vectorizer.transform(['hot']).toarray())

# Transform multiple words simultaneously

print('Multiple words:')

print(vectorizer.transform(['hot', 'one']).toarray())

# Alternative: fit and transform in one step

print('Fit and transform together:')

new_text = ['Today is the day that I do the thing today, today']

new_vectorizer = CountVectorizer()

print(new_vectorizer.fit_transform(new_text).toarray())

Output:

Vocabulary:

{'one': 12, 'of': 11, 'the': 15, 'most': 9, 'basic': 1, 'ways': 18, 'we': 19,

'can': 3, 'numerically': 10, 'represent': 13, 'words': 20, 'is': 7,

'through': 16, 'hot': 6, 'encoding': 5, 'method': 8, 'also': 0,

'sometimes': 14, 'called': 2, 'count': 4, 'vectorizing': 17}

Full vector:

[[1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 2 1 1 1 1 1]]

Single word vector:

[[0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0]]

Multiple words:

[[0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0]]

Fit and transform together:

[[1 1 1 1 2 1 3]]

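Notice how repeated words (like “the” and “today”) receive higher counts, and how indices are assigned in alphabetical order of the vocabulary. Two defaults are also visible here: text is lowercased and split on punctuation (so “One” and the “one” in “one-hot” share an index), and single-character tokens such as “I” are dropped, which is why the last vector has seven entries rather than eight.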
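To see exactly which tokens the vectorizer counts, you can call its analyzer directly. The sketch below relies on `build_analyzer()`, which returns the preprocessing-plus-tokenizing function that `CountVectorizer` applies with its current settings; the variable names are only illustrative.

```python
from sklearn.feature_extraction.text import CountVectorizer

# The analyzer is the callable CountVectorizer uses to turn raw text into tokens.
analyzer = CountVectorizer().build_analyzer()

tokens_sentence = analyzer("Today is the day that I do the thing today, today")
tokens_hyphen = analyzer("one-hot encoding")

print(tokens_sentence)
print(tokens_hyphen)
```

With the default settings, the text is lowercased, punctuation acts as a separator, and tokens shorter than two characters are discarded. If that behavior does not suit your data, the `lowercase` and `token_pattern` parameters can be changed.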

Working with Real Data: 20 Newsgroups Dataset

Now let’s see count vectorization in action with a real dataset. The 20 Newsgroups dataset contains posts from 20 different discussion groups, making it ideal for demonstrating text classification:

from sklearn.datasets import fetch_20newsgroups

from sklearn.feature_extraction.text import CountVectorizer

import numpy as np

# Initialize vectorizer

vectorizer = CountVectorizer()

# Load the dataset (fetch_20newsgroups returns the training split by default)

newsgroups_data = fetch_20newsgroups()

# Examine a sample document

print('Raw sample document:')

print(newsgroups_data.data[0])

print()

# Fit vectorizer to all documents

vectorizer.fit(newsgroups_data.data)

# Check vocabulary size

print(f'Vocabulary size: {len(vectorizer.vocabulary_)}')

# Transform first document

v0 = vectorizer.transform([newsgroups_data.data[0]]).toarray()[0]

print(f'Vector length: {len(v0)}')

print(f'Non-zero elements: {np.count_nonzero(v0)}')

print()

# Convert back to words (inverse_transform expects a 2D array of shape (n_samples, n_features))

print('Words in document:')

print(vectorizer.inverse_transform(v0.reshape(1, -1)))

Preprocessing for Better Results

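Real-world text data contains noise that can hurt model performance. The 20 Newsgroups dataset includes headers, footers, and quoted replies. A model can latch onto this metadata (sender names, email domains, signatures) instead of the content of the post, so we remove it: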

# Load cleaned dataset

newsgroups_clean = fetch_20newsgroups(remove=('headers', 'footers', 'quotes'))

print('Cleaned sample document:')

print(newsgroups_clean.data[0])

# Refit vectorizer on cleaned data

vectorizer_clean = CountVectorizer()

vectorizer_clean.fit(newsgroups_clean.data)

print(f'Original vocabulary size: {len(vectorizer.vocabulary_)}')

print(f'Cleaned vocabulary size: {len(vectorizer_clean.vocabulary_)}')

Removing metadata reduces vocabulary size and improves the signal-to-noise ratio for content analysis.
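Beyond stripping metadata, `CountVectorizer` itself has parameters that prune the vocabulary. The sketch below shows two of them on a toy corpus (the documents are made up for illustration): `stop_words="english"` removes common function words using scikit-learn's built-in list, and `min_df=2` keeps only words that appear in at least two documents.

```python
from sklearn.feature_extraction.text import CountVectorizer

docs = [
    "The engine in this car is very loud",
    "This car has a new engine",
    "The team won the hockey game",
    "Hockey is a fast game",
]

# Default settings: every token of two or more characters is kept.
baseline = CountVectorizer().fit(docs)

# Pruned: drop English stop words and words seen in fewer than two documents.
pruned = CountVectorizer(stop_words="english", min_df=2).fit(docs)

print(f"Baseline vocabulary size: {len(baseline.vocabulary_)}")
print(f"Pruned vocabulary size: {len(pruned.vocabulary_)}")
print(f"Pruned vocabulary: {sorted(pruned.vocabulary_)}")
```

Whether this kind of pruning helps depends on the task. Treat the thresholds as values to tune rather than fixed recommendations.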

Building a Text Classifier

Let’s put count vectorization to work in a complete machine learning pipeline. We’ll build a classifier to categorize newsgroup posts:

from sklearn.datasets import fetch_20newsgroups

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn import metrics

# Load train and test splits

newsgroups_train = fetch_20newsgroups(subset='train',

remove=('headers', 'footers', 'quotes'))

newsgroups_test = fetch_20newsgroups(subset='test',

remove=('headers', 'footers', 'quotes'))

# Initialize and fit vectorizer on training data

vectorizer = CountVectorizer()

X_train = vectorizer.fit_transform(newsgroups_train.data)

# Build and train classifier

classifier = MultinomialNB(alpha=0.01)

classifier.fit(X_train, newsgroups_train.target)

# Transform test data and make predictions

X_test = vectorizer.transform(newsgroups_test.data)

y_pred = classifier.predict(X_test)

# Evaluate performance

accuracy = metrics.accuracy_score(newsgroups_test.target, y_pred)

f1 = metrics.f1_score(newsgroups_test.target, y_pred, average='macro')

print(f'Accuracy: {accuracy:.3f}')

print(f'F1 Score: {f1:.3f}')

Output:

Accuracy: 0.646

F1 Score: 0.620

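With 20 classes, random guessing would score around 5% accuracy, so roughly 65% from raw word counts and a Naive Bayes model shows that count vectorization provides a solid baseline for text classification. More sophisticated representations typically perform better.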
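The vectorizer and classifier can also be chained into a single scikit-learn pipeline, so the vocabulary is always fitted on training text only and raw strings can be passed straight to `predict`. The sketch below uses a tiny hand-written training set (an assumption for illustration, not the newsgroups data) with the same `MultinomialNB(alpha=0.01)` setting as above.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Toy training data, made up for this example
train_docs = [
    "the goalie made a great save",
    "the team scored in overtime",
    "the puck hit the post",
    "the engine would not start",
    "change the oil and the brakes",
    "the car needs new tires",
]
train_labels = ["hockey", "hockey", "hockey", "autos", "autos", "autos"]

# fit() learns the vocabulary and the classifier in one call
model = make_pipeline(CountVectorizer(), MultinomialNB(alpha=0.01))
model.fit(train_docs, train_labels)

# predict() vectorizes new text with the training vocabulary, then classifies
predictions = model.predict([
    "the goalie stopped the puck",
    "the engine needs new oil",
])
print(list(predictions))
```

Words that never appeared in the training text (such as "stopped" here) are simply ignored at prediction time.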

Strengths and Limitations

Count vectorization offers clear benefits but also has important constraints to consider:

Strengths:

- Interpretability: Direct mapping between features and vocabulary words

- Simplicity: Easy to understand and implement

- Exact frequencies: Preserves precise word count information

- Computational efficiency: Fast transformation for moderate vocabularies

Limitations:

- High dimensionality: Creates very wide, sparse vectors

- No semantic understanding: Treats all words as independent features

- Context blindness: Can’t distinguish word meanings based on context

- Common word dominance: Frequent words may overwhelm important but rare terms

Understanding these trade-offs helps you decide when count vectorization is appropriate for your specific use case.
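Two of these limitations are easy to demonstrate. In the sketch below (toy sentences chosen for illustration), two sentences with opposite meanings produce identical count vectors because word order is discarded, and two phrases with nearly the same meaning share no features at all.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer

# Word order is lost: both sentences contain the same words the same number of times.
order_docs = ["dog bites man", "man bites dog"]
X_order = CountVectorizer().fit_transform(order_docs).toarray()
same_vector = np.array_equal(X_order[0], X_order[1])

# Synonyms get unrelated columns, so the two vectors have nothing in common.
synonym_docs = ["good movie", "great film"]
X_syn = CountVectorizer().fit_transform(synonym_docs).toarray()
overlap = int(X_syn[0] @ X_syn[1])

print(f"Identical vectors despite different meaning: {same_vector}")
print(f"Dot product of near-synonymous phrases: {overlap}")
```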

Next Steps in Text Representation

Count vectorization provides an excellent starting point for text analysis, but several techniques can improve upon its limitations:

- TF-IDF weighting: Reduces the impact of common words while highlighting distinctive terms

- N-gram features: Captures sequences of words to preserve some context

- Dimensionality reduction: Compresses high-dimensional vectors using truncated SVD (which works directly on sparse matrices) or PCA

- Word embeddings: Dense representations that capture semantic relationships between words
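The first three techniques are available in scikit-learn and can be tried with small changes to the code in this article. The sketch below is a rough illustration on toy documents (made up for this example): `ngram_range=(1, 2)` adds word pairs as features, `TfidfVectorizer` applies TF-IDF weighting, and `TruncatedSVD` compresses the result to two dimensions. The parameter values are arbitrary choices, not recommendations.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# N-grams: adding bigrams lets the vectors reflect some word order.
order_docs = ["dog bites man", "man bites dog"]
X_bigram = CountVectorizer(ngram_range=(1, 2)).fit_transform(order_docs).toarray()
distinguishable = not np.array_equal(X_bigram[0], X_bigram[1])

# TF-IDF: same vocabulary as count vectorization, but words that occur in
# many documents are down-weighted.
docs = [
    "the engine in this car is very loud",
    "this car has a new engine",
    "the team won the hockey game",
    "hockey is a fast game",
]
X_tfidf = TfidfVectorizer().fit_transform(docs)

# Truncated SVD: project the sparse matrix onto a few dense components.
svd = TruncatedSVD(n_components=2, random_state=0)
X_reduced = svd.fit_transform(X_tfidf)

print(f"Bigram vectors differ: {distinguishable}")
print(f"TF-IDF shape: {X_tfidf.shape}, reduced shape: {X_reduced.shape}")
```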

Each approach builds on the foundation that count vectorization provides. Despite its simplicity, count vectorization remains valuable for baseline models, exploratory analysis, and applications where interpretability is crucial.

The key is understanding when each tool is appropriate. Count vectorization excels when you need transparent, interpretable features and have clean, well-structured text data.